Serverless APIs scale on demand, which makes throttling essential rather than optional. Throttling, the controlled limitation of resource consumption, keeps an API stable, prevents abuse, and ensures fair access for all users. It governs the flow of requests, protecting both the underlying infrastructure and the user experience.

Serverless architectures, with their inherent elasticity, introduce unique challenges and opportunities. Throttling in these environments involves implementing various strategies to regulate the number of requests processed within a specific timeframe, the concurrency of executions, and the overall resource utilization. This proactive approach not only protects against potential denial-of-service (DoS) attacks but also enables efficient resource allocation, optimizing costs and performance.

Understanding Serverless API Throttling

Serverless APIs offer built-in scalability and cost-effectiveness, but the architecture introduces challenges of its own, particularly in resource management and service availability. Throttling is a critical mechanism in this environment: it guards against overuse and ensures equitable access to resources, and it directly affects the performance, reliability, and cost-efficiency of serverless applications.

Fundamental Concept of Throttling in Serverless APIs

Throttling, in the context of serverless APIs, is a mechanism that controls the rate at which requests are processed. It limits the number of requests an API can handle within a specific timeframe. This control prevents any single user or application from monopolizing the available resources, thereby maintaining the overall stability and performance of the API for all users. The core function is to ensure fairness and prevent denial-of-service scenarios.

Scenarios for Throttling Implementation in Serverless Environments

Throttling is implemented across various scenarios within serverless environments to manage resource consumption and maintain service levels.

- Rate Limiting per User/API Key: This is a common approach where each user or application is assigned a specific rate limit. For instance, an API might allow a user to make a maximum of 100 requests per minute. This prevents individual users from overwhelming the system.

- Global Rate Limiting: A global rate limit restricts the total number of requests the entire API can handle within a given period. This is particularly useful to protect against sudden spikes in traffic or distributed denial-of-service (DDoS) attacks. For example, an API might be configured to handle a maximum of 10,000 requests per second.

- Resource-Based Throttling: This type of throttling focuses on specific resources, such as database connections or compute units. If a particular function requires significant resources, throttling can be applied to limit the number of concurrent executions of that function. For example, if a database can handle a maximum of 50 concurrent connections, the API might throttle requests that exceed this limit to prevent database overload.

- Tiered Throttling: APIs often offer different service tiers, each with its own rate limits. Higher-paying tiers may have higher rate limits, allowing users to access more resources and functionality. This model enables monetization and caters to different usage patterns. For example, a free tier might have a limit of 1,000 requests per day, while a premium tier offers 100,000 requests per day.
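As a rough illustration of tiered limits, the sketch below maps a tier name to its quotas and checks a caller's usage against them. The daily quotas mirror the examples above; the per-minute values, the tier names, and the helper names `limits_for` and `is_allowed` are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TierLimits:
    requests_per_minute: int
    requests_per_day: int


# Hypothetical tiers: daily quotas follow the examples in the text,
# per-minute limits are illustrative assumptions.
TIERS = {
    "free": TierLimits(requests_per_minute=100, requests_per_day=1_000),
    "premium": TierLimits(requests_per_minute=1_000, requests_per_day=100_000),
}


def limits_for(tier: str) -> TierLimits:
    """Return the limits for a tier, falling back to the most restrictive one."""
    return TIERS.get(tier, TIERS["free"])


def is_allowed(tier: str, used_this_minute: int, used_today: int) -> bool:
    """A request is allowed only while both quotas still have headroom."""
    limits = limits_for(tier)
    return (
        used_this_minute < limits.requests_per_minute
        and used_today < limits.requests_per_day
    )
```

Unknown tiers fall back to the free tier here so that a misconfigured client never receives more than the lowest quota; a real service might prefer to reject such requests outright.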

Reasons for Implementing Throttling in Serverless APIs

Throttling is an essential component of serverless API architecture due to several critical reasons.

- Resource Protection: Serverless functions are typically billed based on resource consumption (e.g., compute time, memory usage). Throttling helps to prevent excessive resource usage, which can lead to unexpected costs and potential service degradation. For example, without throttling, a poorly designed or malicious client could trigger a function repeatedly, driving up costs and potentially exhausting available resources.

- Preventing Denial-of-Service (DoS) Attacks: Throttling acts as a defense mechanism against DoS attacks. By limiting the rate of incoming requests, it prevents attackers from overwhelming the API and making it unavailable to legitimate users. This helps to maintain the availability and reliability of the service.

- Ensuring Fairness and Service Level Agreements (SLAs): Throttling ensures that all users have fair access to the API resources. It prevents a small number of users from consuming a disproportionate share of the resources, which could negatively impact the performance for others. It also helps in meeting the service level agreements.

- Cost Optimization: By controlling resource consumption, throttling helps to optimize costs. It prevents the API from scaling unnecessarily in response to sudden traffic spikes, which could lead to higher bills. Careful rate limiting and monitoring enable more predictable and manageable spending.

- Database Protection: Throttling protects backend databases from being overwhelmed by excessive requests. Many serverless APIs interact with databases, and throttling helps to prevent database connection exhaustion and performance degradation.

Types of Throttling Mechanisms

Serverless APIs, by their nature, are designed to scale automatically. However, this inherent scalability necessitates robust throttling mechanisms to protect resources and maintain service stability. Different throttling techniques are employed to manage API usage, prevent abuse, and ensure fair access. These techniques can be broadly categorized based on the parameters they control and the methods they utilize.

Rate Limiting

Rate limiting restricts the number of requests a client can make within a specific timeframe. This is a fundamental throttling mechanism used to prevent any single client from overwhelming the API and exhausting resources. Rate limiting can be implemented using various strategies:

- Fixed Window Rate Limiting: This is a simple approach where requests are counted within a fixed time window (e.g., 60 seconds). If the request limit is exceeded within that window, subsequent requests are rejected until the window resets. This method is easy to implement but is vulnerable to bursts around window boundaries: a client can spend its full quota at the end of one window and again at the start of the next, briefly doubling the effective rate.

- Sliding Window Rate Limiting: This method offers improved fairness compared to fixed window. It considers the requests made over a sliding time window. For example, it might calculate a rate based on the last hour, constantly updating the window as time progresses. This mitigates the burst problem by spreading the requests more evenly over time.

- Token Bucket Rate Limiting: This approach uses a “bucket” of tokens that are replenished at a constant rate. Each request consumes a token. If the bucket is empty, the request is throttled. This method allows for a certain degree of burst tolerance, as clients can “spend” tokens accumulated earlier.

- Leaky Bucket Rate Limiting: Similar to the token bucket, the leaky bucket has a fixed capacity. Incoming requests enter the bucket at whatever rate they arrive, and the bucket “leaks” (processes) them at a constant rate, smoothing out request flow. If the bucket is full, incoming requests are discarded or delayed.
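To make the token bucket strategy concrete, here is a minimal single-process sketch. The class name `TokenBucket` and its parameters are illustrative assumptions; a serverless deployment would normally keep this state in a shared store rather than in memory, since function instances do not share memory.

```python
import time


class TokenBucket:
    """In-memory token bucket: refills at `rate` tokens/second up to `capacity`."""

    def __init__(self, rate: float, capacity: float, clock=time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self._clock = clock          # injectable so the limiter can be tested
        self._tokens = capacity      # start full, which permits an initial burst
        self._last = clock()

    def allow(self, cost: float = 1.0) -> bool:
        """Consume `cost` tokens if available; return False to throttle."""
        now = self._clock()
        elapsed = now - self._last
        self._last = now
        # Replenish tokens for the elapsed time, never exceeding capacity.
        self._tokens = min(self.capacity, self._tokens + elapsed * self.rate)
        if self._tokens >= cost:
            self._tokens -= cost
            return True
        return False
```

In this sketch `capacity` bounds the largest burst a client can send at once, while `rate` sets the sustained throughput.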

Rate limiting typically involves tracking requests based on various criteria, such as:

- Client IP Address: Restricting requests based on the originating IP address. This is a common method but can be circumvented using proxies.

- API Keys: Limiting requests based on the API key used to access the API. This allows for more granular control and can be tied to user accounts or subscription levels.

- User Accounts: Throttling based on authenticated user accounts, providing personalized rate limits.
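Whichever criterion is chosen, the limiter keeps separate state per key. The following sketch combines that idea with a sliding window (one log of timestamps per key). The class name `SlidingWindowLimiter` is hypothetical, and the key can be an IP address, API key, or user ID.

```python
import time
from collections import defaultdict, deque


class SlidingWindowLimiter:
    """Allow at most `limit` requests per key within the last `window_seconds`."""

    def __init__(self, limit: int, window_seconds: float, clock=time.monotonic):
        self.limit = limit
        self.window_seconds = window_seconds
        self._clock = clock
        self._hits = defaultdict(deque)  # key -> timestamps of accepted requests

    def allow(self, key: str) -> bool:
        now = self._clock()
        hits = self._hits[key]
        cutoff = now - self.window_seconds
        # Drop timestamps that have slid out of the window.
        while hits and hits[0] <= cutoff:
            hits.popleft()
        if len(hits) >= self.limit:
            return False
        hits.append(now)
        return True
```

Storing every timestamp is precise but costs memory proportional to the limit; counter-based approximations trade some accuracy for a smaller footprint.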

Concurrency Limits

Concurrency limits restrict the number of concurrent requests that can be processed simultaneously. This is crucial for preventing resource exhaustion, particularly in serverless environments where functions have limited compute resources. Concurrency limits are often applied at the function level, API level, or account level. Concurrency limits help to:

- Protect Downstream Services: Preventing the API from overwhelming dependent services like databases or other APIs.

- Resource Allocation: Ensuring fair allocation of resources across different API consumers.

- Performance Optimization: Avoiding performance degradation caused by excessive concurrent requests.
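Within a single process, a concurrency limit can be sketched with a semaphore that rejects work once all slots are taken. The names `ConcurrencyLimiter` and `TooManyConcurrentRequests` are illustrative; managed platforms enforce the equivalent limit at the infrastructure level.

```python
import threading
from contextlib import contextmanager


class TooManyConcurrentRequests(Exception):
    """Raised when no execution slot is free."""


class ConcurrencyLimiter:
    def __init__(self, max_concurrent: int):
        self._slots = threading.BoundedSemaphore(max_concurrent)

    @contextmanager
    def slot(self):
        # Non-blocking acquire: reject immediately instead of queuing.
        if not self._slots.acquire(blocking=False):
            raise TooManyConcurrentRequests("concurrency limit reached")
        try:
            yield
        finally:
            self._slots.release()
```

A handler would wrap its work in `with limiter.slot():` and translate the exception into a throttling response. Passing a timeout to `acquire` instead would queue callers briefly rather than rejecting them.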

Differences Between Rate Limiting and Concurrency Limits

While both rate limiting and concurrency limits are throttling mechanisms, they address different aspects of API usage:

- Scope: Rate limiting focuses on the *number* of requests over time, while concurrency limits focus on the *number* of requests *being processed simultaneously*.

- Goal: Rate limiting aims to prevent overuse and abuse by controlling request frequency. Concurrency limits aim to prevent resource exhaustion and ensure system stability by controlling the number of active requests.

- Impact: Exceeding a rate limit results in requests being rejected or delayed. Exceeding a concurrency limit results in requests being queued, rejected, or potentially causing service degradation.

For example, consider a serverless API with a rate limit of 100 requests per minute and a concurrency limit of 10. A client could make 100 requests within a minute, but at most 10 of them can be in flight at any moment. If the client has 10 requests in progress and sends an eleventh, that request is throttled (delayed or rejected) even though the rate limit has not been reached.

Advantages and Disadvantages of Throttling Approaches

Different throttling approaches have their own strengths and weaknesses, and the optimal choice depends on the specific requirements of the API.

| Throttling Approach | Advantages | Disadvantages |
|---|---|---|
| Rate Limiting (Fixed Window) | Simple to implement. | Vulnerable to burst traffic around window boundaries. Can lead to unfairness. |
| Rate Limiting (Sliding Window) | More fair than fixed window. Mitigates burst problems. | More complex to implement than fixed window. Requires tracking requests over a longer time period. |
| Rate Limiting (Token Bucket) | Allows for burst tolerance. Relatively easy to implement. | Can still admit large bursts if the bucket capacity or refill rate is set too high. |
| Rate Limiting (Leaky Bucket) | Smooths out request flow. Prevents bursts. | More complex to implement than token bucket. Requires careful tuning of the leak rate. |
| Concurrency Limits | Protects resources from exhaustion. Ensures system stability. | Can be complex to configure optimally. May require careful monitoring and tuning. |

The choice of throttling mechanism should be based on the API’s expected traffic patterns, the sensitivity of its resources, and the desired level of user experience. Often, a combination of rate limiting and concurrency limits is used to provide comprehensive protection and ensure optimal performance. For instance, a serverless API might employ rate limiting to control the overall request volume from each user and concurrency limits to manage the number of concurrent requests that can be processed by the underlying function. This combined approach allows for both fairness and resource protection.

Impact of Throttling on API Performance

Throttling, while crucial for maintaining API stability and resource management, directly influences API performance and, consequently, the user experience. Understanding this impact is vital for configuring throttling mechanisms effectively and achieving the desired balance between resource protection and service responsiveness. The primary effects of throttling are observed in response times, the prevention of malicious attacks, and the overall scalability of the API.

API Response Time Effects

Throttling introduces latency to API requests. When a request exceeds the configured rate limits, it is either delayed (queued) or rejected. This directly impacts the time it takes for a client to receive a response.

- Queuing Delays: When throttling utilizes a queuing mechanism, requests that exceed the rate limit are placed in a queue and processed sequentially. This can lead to increased response times, especially during periods of high traffic. The delay is directly proportional to the queue depth and the service time for each request. For instance, if a service allows 10 requests per second and a burst of 20 requests arrives simultaneously, the extra 10 requests will be queued. If each request takes 0.1 seconds to process and the queue drains one request at a time, the last queued request will experience a delay of about 1 second (10 × 0.1 s).

- Rejection Delays: If requests are rejected, the client receives an error response, such as an HTTP 429 Too Many Requests error. The client then typically needs to retry the request after a delay, adding to the overall latency. This is often the most immediate impact on user experience. The retry mechanism and the delay between retries influence the perceived performance. For example, if a client receives a 429 error and waits 60 seconds before retrying, the user will experience a 60-second delay.

- Impact on User Experience: Increased response times, whether due to queuing or retries, degrade the user experience. Slow APIs lead to frustration and can cause users to abandon the application. Metrics such as Time to First Byte (TTFB) and Time to Interactive (TTI) are directly affected by throttling configurations. A well-designed throttling strategy aims to minimize these delays while still protecting the API.
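The queuing arithmetic above can be checked with a few lines of code. This is a simplified model that assumes the queue drains strictly one request at a time with a constant service time; the function name `queue_delays` is illustrative.

```python
def queue_delays(queued: int, service_time: float) -> list[float]:
    """Time until each queued request completes, draining one at a time."""
    # The k-th request in the queue finishes after k service times.
    return [round((k + 1) * service_time, 6) for k in range(queued)]


# 10 requests queued behind the rate limit, 0.1 s of processing each.
delays = queue_delays(queued=10, service_time=0.1)
print(delays[0], delays[-1])
```

Under these assumptions the first queued request completes after 0.1 seconds and the last after 1 second, so the worst-case delay grows linearly with queue depth.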

Denial-of-Service (DoS) Attack Prevention

Throttling is a critical defense mechanism against Denial-of-Service (DoS) and Distributed Denial-of-Service (DDoS) attacks. By limiting the number of requests from a single source (IP address, user, etc.) within a specific timeframe, throttling prevents attackers from overwhelming the API with malicious traffic.

- Rate Limiting: Rate limiting, a common form of throttling, restricts the number of requests a client can make within a defined period (e.g., requests per second, minute, or hour). This effectively mitigates the impact of DoS attacks by preventing attackers from flooding the API with requests faster than it can handle. For example, if an API limits requests to 100 per minute and an attacker attempts to send 1000 requests in the same minute, 900 requests will be rejected or delayed.

- Request Validation: Throttling can be coupled with request validation to identify and filter malicious requests. This involves checking the request headers, body, and other parameters to detect suspicious patterns or activities. Validating requests can reduce the load on the API by preventing processing of illegitimate requests.

- Resource Protection: Throttling protects the underlying resources of the API, such as the database, servers, and network bandwidth. By limiting the number of requests, throttling prevents these resources from being exhausted, thus ensuring the availability and stability of the API.

Relationship Between Throttling Configurations and API Scalability

The configuration of throttling mechanisms directly influences the scalability of a serverless API. Proper configuration ensures the API can handle increasing traffic loads without performance degradation or service outages.

- Fine-Grained Control: Throttling configurations should be fine-grained and adaptable to various traffic patterns. Different API endpoints may require different throttling limits based on their resource consumption and importance. For instance, a read-only endpoint might tolerate higher request rates than a write-intensive endpoint.

- Elasticity and Auto-Scaling: Serverless APIs benefit from auto-scaling capabilities. Throttling configurations should be designed to work seamlessly with auto-scaling, allowing the API to automatically scale resources based on traffic demands. When traffic increases, the platform can provision more instances, increasing overall capacity; throttling limits may then need to be raised so they do not cap traffic below what the scaled-out system can handle.

- Monitoring and Optimization: Continuous monitoring of API performance and traffic patterns is essential. Monitoring metrics like request rates, error rates, and response times provides valuable insights for optimizing throttling configurations. The ideal configuration should be continuously adjusted to maintain optimal performance and resource utilization. A common example of monitoring is the use of tools like CloudWatch or similar services to visualize API request metrics and identify bottlenecks.

Configuring Throttling in Serverless Platforms

Configuring throttling is a critical aspect of managing serverless APIs. Effective throttling prevents resource exhaustion, maintains service availability, and ensures a consistent user experience. This section provides guidance on configuring throttling settings across popular serverless platforms and outlines best practices for setting appropriate limits.

Platform-Specific Throttling Configuration

Different serverless platforms offer varying mechanisms and configurations for implementing throttling. Understanding the specifics of each platform is essential for optimal API performance and resource management.

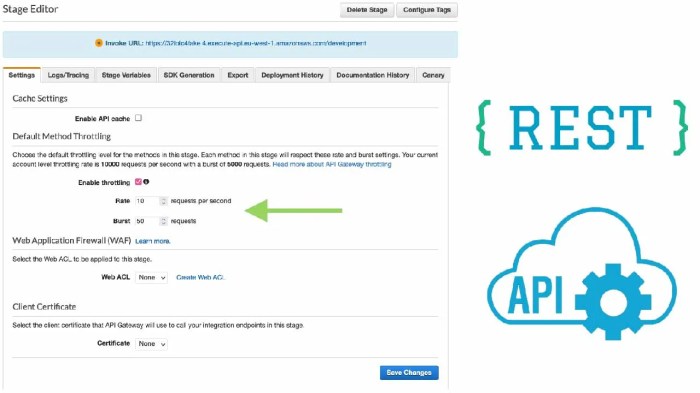

- AWS Lambda: AWS Lambda utilizes concurrency limits and API Gateway throttling settings. Concurrency limits define the maximum number of function instances that can execute concurrently. API Gateway provides a separate set of throttling configurations.

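- Azure Functions: Azure Functions controls load primarily through its hosting plan and host settings, which govern how many function instances can run and how many requests each instance handles concurrently. Request rate limits are typically enforced in front of the functions, for example with Azure API Management.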

- Google Cloud Functions: Google Cloud Functions integrates throttling with Google Cloud’s API Gateway or Cloud Endpoints. These services allow configuring request rate limits and concurrent request limits.

Best Practices for Setting Throttling Limits

Setting appropriate throttling limits requires careful consideration of API usage patterns, anticipated traffic, and service level objectives (SLOs). Incorrectly configured limits can lead to either performance bottlenecks or underutilization of resources.

- Analyze API Usage: Before configuring throttling, analyze historical API usage data. Identify peak traffic times, average request rates, and the distribution of request sizes. This data informs the initial throttling limits.

- Monitor and Adjust: Continuously monitor API performance metrics, such as latency, error rates, and resource utilization. Adjust throttling limits dynamically based on observed behavior.

- Implement Circuit Breakers: Implement circuit breakers to protect the API from cascading failures. When an API experiences excessive errors, a circuit breaker can temporarily reject requests, preventing further load and allowing the system to recover.

- Consider Burst Limits: Configure burst limits to handle sudden spikes in traffic. Burst limits allow a short period of increased throughput beyond the regular rate limit.

- Differentiate Throttling by API Operation: If possible, differentiate throttling limits based on API operations. For example, read operations might have higher limits than write operations.

- Use a Rate Limiting Algorithm: Implement a rate limiting algorithm, such as the token bucket or leaky bucket algorithm, to regulate the rate at which requests are processed.
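The circuit breaker practice mentioned above can be sketched as follows. This is a minimal, single-threaded illustration; the class name, the `failure_threshold` and `reset_timeout` parameters, and their defaults are assumptions rather than values from any particular library.

```python
import time


class CircuitOpenError(Exception):
    """Raised when the breaker is open and the call is rejected."""


class CircuitBreaker:
    def __init__(self, failure_threshold: int = 5, reset_timeout: float = 30.0,
                 clock=time.monotonic):
        self.failure_threshold = failure_threshold
        self.reset_timeout = reset_timeout
        self._clock = clock
        self._failures = 0
        self._opened_at = None  # None means the circuit is closed

    def call(self, func, *args, **kwargs):
        if self._opened_at is not None:
            if self._clock() - self._opened_at < self.reset_timeout:
                raise CircuitOpenError("circuit open; request rejected")
            # Timeout elapsed: fall through and allow one trial call (half-open).
        try:
            result = func(*args, **kwargs)
        except Exception:
            self._failures += 1
            # A failed trial call, or too many failures, (re)opens the circuit.
            if self._opened_at is not None or self._failures >= self.failure_threshold:
                self._opened_at = self._clock()
            raise
        # Success closes the circuit and clears the failure count.
        self._failures = 0
        self._opened_at = None
        return result
```

While the circuit is open, callers fail fast instead of adding load to a struggling dependency, which gives the system time to recover.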

Example Platform Configuration

The following table provides an example of how throttling settings might be configured across different serverless platforms. This is a simplified illustration; actual configuration options may vary.

| Platform | Setting | Description | Example Value |
|---|---|---|---|
| AWS Lambda | Concurrency Limit | Maximum number of concurrent function executions. | 1000 |
| AWS API Gateway | Rate Limit (per API Key) | Maximum number of requests per second. | 100 |
| Azure Functions | App Service Plan Instance Count | Number of function instances available. | 5 |
| Azure Functions | Request Limit (per function) | Maximum number of requests allowed within a time window. | 1000 per minute |
| Google Cloud Functions | Concurrent Request Limit | Maximum number of concurrent function executions. | 500 |
| Google Cloud API Gateway | Rate Limit (per API Key) | Maximum number of requests per minute. | 600 |
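For the AWS rows, settings like these might be applied programmatically with boto3. The sketch below is an assumption-laden illustration: the function name, plan name, reserved concurrency of 100, and burst limit of 200 are hypothetical values, and a usage plan only takes effect once it is associated with an API stage and an API key.

```python
def lambda_concurrency_params(function_name: str, limit: int) -> dict:
    """Arguments for the Lambda client's put_function_concurrency call."""
    return {"FunctionName": function_name, "ReservedConcurrentExecutions": limit}


def usage_plan_params(name: str, rate_limit: float, burst_limit: int) -> dict:
    """Arguments for the API Gateway client's create_usage_plan call."""
    return {
        "name": name,
        "throttle": {"rateLimit": float(rate_limit), "burstLimit": int(burst_limit)},
    }


def apply_aws_throttling(function_name: str, plan_name: str) -> None:
    """Apply both settings; requires boto3 and valid AWS credentials."""
    import boto3  # imported lazily so the helpers above work without it

    boto3.client("lambda").put_function_concurrency(
        **lambda_concurrency_params(function_name, 100)  # illustrative value
    )
    boto3.client("apigateway").create_usage_plan(
        **usage_plan_params(plan_name, rate_limit=100, burst_limit=200)
    )
```

Keeping the parameter builders separate from the API calls makes the intended limits easy to review and test without touching a live account.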

Monitoring and Measuring Throttling Effects

Effective throttling in serverless APIs necessitates diligent monitoring and measurement to ensure optimal performance and resource utilization. This involves tracking throttling events, analyzing their impact on API latency and throughput, and making data-driven decisions to refine throttling configurations. A robust monitoring strategy is critical for proactively identifying and addressing potential bottlenecks, preventing service degradation, and maintaining a positive user experience.

Methods for Monitoring Throttling Events and Their Impact

Monitoring throttling events and their subsequent effects involves a multi-faceted approach, combining real-time data collection with historical analysis. This comprehensive strategy provides a complete understanding of throttling behavior.

- API Gateway Metrics: API gateways, the entry point for serverless APIs, provide built-in metrics that are invaluable for monitoring throttling. These metrics typically include:

  - Throttled Requests: The number of requests that have been rejected or delayed due to throttling limits. This is a primary indicator of throttling activity.

  - 429 Responses: The HTTP status code (429 – Too Many Requests) returned to clients when throttling limits are exceeded. Monitoring the frequency of 429 responses helps quantify the impact on user experience.

  - API Latency: The time taken to process a request. Throttling can increase latency, so monitoring this metric is crucial.

  - Throughput: The number of requests processed per unit of time. Throttling directly affects throughput, so monitoring this is essential.

- Custom Metrics and Logging: Implement custom metrics and detailed logging within the API code.

  - Custom Metrics: These can track more specific aspects of throttling, such as the rate at which specific API endpoints are being throttled or the number of requests per user or application.

  - Detailed Logging: Include timestamps, request identifiers, user identifiers, and the reason for throttling in the logs. This enables detailed analysis and correlation with other performance metrics.

- Distributed Tracing: Use distributed tracing tools to track requests as they traverse the API, including any throttling-related delays. This allows for pinpointing the exact location and cause of performance issues.

- Synthetic Monitoring: Regularly send synthetic requests to the API to simulate user traffic and monitor performance under various load conditions. This proactive approach helps identify potential throttling problems before they affect real users.

- Alerting: Set up alerts to notify administrators when throttling thresholds are reached or when performance degrades significantly. Alerts can be based on metrics like the number of throttled requests, the percentage of 429 responses, or increased latency.

Key Metrics to Track for Evaluating Throttling Effectiveness

Evaluating throttling effectiveness involves tracking a set of key metrics that provide insights into the API’s performance, resource utilization, and user experience. Analyzing these metrics enables informed decisions about throttling configuration.

- Throttled Request Rate: This metric represents the percentage of requests that are being throttled. A high throttled request rate indicates that the throttling limits are too restrictive, potentially impacting user experience. A low rate suggests that the throttling limits might be too permissive, potentially leading to resource exhaustion.

- 429 Response Rate: The rate at which the API returns 429 (Too Many Requests) status codes. A high 429 response rate directly indicates that users are experiencing throttling, leading to potential frustration and a poor user experience.

- Average API Latency: Throttling can increase latency, so monitoring the average response time of API requests is critical. An increase in latency, particularly during periods of high traffic, may signal that throttling is affecting performance.

- Throughput (Requests Per Second): The rate at which the API processes requests. Throttling directly affects throughput, so monitoring this metric is crucial to understanding the impact on API capacity.

- Error Rate: Monitoring the rate of other API errors, such as 500 (Internal Server Error) responses, is crucial because excessive throttling can sometimes lead to other issues like cascading failures.

- Resource Utilization: Track resource usage metrics such as CPU utilization, memory consumption, and database connection usage. This provides insights into whether throttling is effectively preventing resource exhaustion.

- User Impact Metrics: Measure the impact of throttling on user behavior, such as the number of retries, abandonment rates, and session durations.
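Several of these metrics are simple ratios over a reporting window. The sketch below computes two of them and flags threshold breaches. The 5% and 1% thresholds and all names are illustrative assumptions that would need tuning against real traffic.

```python
from dataclasses import dataclass


@dataclass
class WindowCounts:
    total: int          # all requests received in the window
    throttled: int      # requests answered with 429
    server_errors: int  # requests answered with 5xx


def throttled_rate(c: WindowCounts) -> float:
    return c.throttled / c.total if c.total else 0.0


def error_rate(c: WindowCounts) -> float:
    return c.server_errors / c.total if c.total else 0.0


def alerts(c: WindowCounts, max_throttled_rate: float = 0.05,
           max_error_rate: float = 0.01) -> list[str]:
    """Return the names of metrics that exceed their thresholds."""
    fired = []
    if throttled_rate(c) > max_throttled_rate:
        fired.append("throttled_rate")
    if error_rate(c) > max_error_rate:
        fired.append("error_rate")
    return fired
```

For instance, 100 throttled requests out of 1,000 gives a throttled rate of 10%, which would trip the hypothetical 5% threshold while a 0.5% error rate would not.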

Designing a Monitoring Dashboard for Throttling-Related Data

A well-designed monitoring dashboard provides a centralized view of throttling-related data, enabling real-time analysis and proactive management. The dashboard should present key metrics in an easily understandable format, facilitating quick identification of issues and informed decision-making.

- Dashboard Components: A typical throttling monitoring dashboard should include the following components:

  - Real-time Graphs: Visualizations of key metrics such as throttled request rate, 429 response rate, API latency, and throughput, displayed as time-series graphs.

  - Summary Statistics: Key performance indicators (KPIs) such as the total number of throttled requests, the average API latency, and the total number of requests processed.

  - Alerting Indicators: Clear visual indicators of any active alerts, such as color-coded status indicators or textual warnings.

  - Filtering and Grouping: The ability to filter and group data by API endpoint, user, application, or other relevant dimensions for granular analysis.

  - Log Integration: A link to access API logs, providing detailed information about individual requests and throttling events.

- Data Visualization: Effective data visualization techniques are crucial for conveying information clearly and concisely.

  - Line Graphs: Use line graphs to visualize time-series data, such as API latency and throughput, allowing for the identification of trends and patterns over time.

  - Bar Charts: Use bar charts to compare metrics across different dimensions, such as the number of throttled requests per API endpoint.

  - Pie Charts: Use pie charts to represent the distribution of requests, such as the percentage of requests that are throttled versus those that are not.

  - Color-Coding: Use color-coding to highlight critical thresholds and alert conditions, such as red for high error rates or yellow for increasing latency.

- Example Dashboard Elements:

  - Throttled Requests Over Time: A line graph showing the number of throttled requests per minute, with a horizontal line indicating the throttling threshold.

  - 429 Response Rate: A gauge or meter showing the percentage of requests that are receiving 429 responses, with color-coded ranges indicating different severity levels.

  - API Latency: A line graph showing the average API latency over time, with annotations indicating any performance degradation events.

  - Throughput: A line graph displaying the number of requests per second, indicating any throttling impact on API capacity.

  - Top Throttled Endpoints: A bar chart showing the API endpoints with the highest number of throttled requests.

- Tools and Technologies: Various tools and technologies can be used to build and maintain monitoring dashboards.

  - Cloud Provider Dashboards: Utilize the built-in monitoring dashboards provided by cloud providers like AWS CloudWatch, Google Cloud Monitoring, or Azure Monitor. These platforms often offer pre-built dashboards and metrics specifically for API monitoring.

  - Third-Party Monitoring Tools: Consider using third-party monitoring tools like Datadog, New Relic, or Grafana. These tools offer advanced features such as custom dashboards, alerting, and integration with various data sources.

Handling Throttling Errors and Responses

Understanding how serverless APIs respond to throttling and how to handle these responses effectively is critical for building resilient and user-friendly applications. When a serverless API experiences throttling, it needs to communicate this back to the client in a clear and standardized manner. This allows the client to react appropriately, preventing the application from appearing unresponsive or malfunctioning. The following sections detail the typical responses, error codes, and client-side strategies employed when dealing with throttling.

API Response to Throttled Requests

Serverless APIs, when encountering throttling limits, respond to requests in a specific manner to inform the client about the issue. These responses aim to communicate the throttling event and provide guidance for the client on how to proceed. The response usually includes HTTP status codes and potentially additional information within the response body.

HTTP Status Codes for Throttling

The use of appropriate HTTP status codes is fundamental for indicating throttling. These codes provide standardized communication about the nature of the error. Several HTTP status codes are commonly used to signal throttling, each conveying a specific meaning to the client application.

- 429 Too Many Requests: This is the primary and most common status code used to indicate throttling. It explicitly states that the user has sent too many requests in a given amount of time. The server should also include information in the response headers (described below) to specify the throttling parameters.

- 503 Service Unavailable: Although not exclusively for throttling, this status code can also be used. It signifies that the server is temporarily unable to handle the request, often due to overload or maintenance, which can include throttling limitations. The client should expect the issue to be temporary.

Throttling Response Headers

In addition to the HTTP status code, the server often includes specific headers in the response to provide more detailed information about the throttling limits and the client’s current status. These headers are essential for clients to understand the throttling parameters and adjust their behavior accordingly.

- Rate Limit Headers: These headers are used to communicate the rate limits and the client’s current usage. Examples include:

  - X-RateLimit-Limit: Specifies the number of requests allowed in the current time window.

  - X-RateLimit-Remaining: Indicates the number of requests remaining in the current time window.

  - X-RateLimit-Reset: Provides the time (in seconds or a timestamp) until the rate limit resets, allowing the client to make more requests.

- Retry-After Header: This header is often included with a 429 or 503 status code. It tells the client how long to wait before retrying, expressed either as a number of seconds or as an HTTP date. Clients should honor this value in their retry logic and fall back to exponential backoff when it is absent, so they do not repeatedly send requests that will be throttled.
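On the server side, a throttled response carrying these headers could be assembled as in the sketch below. The return shape (`statusCode`, `headers`, `body`) follows the style of an AWS Lambda proxy integration response; the function name and JSON body fields are illustrative assumptions, and the `X-RateLimit-*` headers are a widely used convention rather than a formal standard.

```python
import json
import math


def throttled_response(limit: int, reset_epoch: float, now_epoch: float) -> dict:
    """Build a 429 response telling the client when it may retry."""
    # Round up so the client never retries before the window has reset.
    retry_after = max(0, math.ceil(reset_epoch - now_epoch))
    return {
        "statusCode": 429,
        "headers": {
            "Content-Type": "application/json",
            "Retry-After": str(retry_after),
            "X-RateLimit-Limit": str(limit),
            "X-RateLimit-Remaining": "0",
            "X-RateLimit-Reset": str(int(reset_epoch)),
        },
        "body": json.dumps(
            {"error": "Too Many Requests", "retryAfterSeconds": retry_after}
        ),
    }
```

Here `X-RateLimit-Reset` is sent as a Unix timestamp and `Retry-After` as a number of seconds, so clients can use whichever form they support.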

Client-Side Strategies for Handling Throttling Errors

Clients must implement robust strategies to handle throttling errors gracefully. These strategies aim to prevent the application from becoming unresponsive and to provide a better user experience.

- Exponential Backoff: This is a critical strategy. When a 429 or 503 status code is received, the client should wait for a period before retrying the request. The wait time should increase exponentially with each subsequent retry (e.g., 1 second, 2 seconds, 4 seconds, 8 seconds, etc.). This approach reduces the load on the server and allows the client to recover from throttling without overwhelming the API.

- Respecting Retry-After Header: If the server provides a Retry-After header, the client should use the value specified in this header to determine the delay before retrying. This ensures that the client waits the appropriate amount of time and avoids unnecessary retries.

- Limiting Request Frequency: Clients should proactively limit the frequency of their requests, especially when making a large number of requests or operating in a high-load environment. This can be achieved by implementing request queues, throttling mechanisms, or using client-side rate limiting.

- User Notification: In certain scenarios, such as when a user is interacting with a client application, it can be helpful to notify the user when their requests are being throttled. This can be done through user-friendly messages, such as “Too many requests. Please try again later.” This informs the user of the issue and sets expectations.

- Caching: Implement caching strategies where appropriate to reduce the frequency of requests. This can significantly lower the likelihood of hitting throttling limits, especially for frequently accessed data.

- Error Logging and Monitoring: Implement robust logging and monitoring to track throttling events. This allows for identifying patterns, understanding the frequency of throttling, and making adjustments to the client application or API usage. This includes logging the HTTP status codes, the values of the rate limit headers, and any error messages received.
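The first two strategies, exponential backoff and respecting Retry-After, can be combined in one retry loop. This is a sketch under stated assumptions: `send` is a hypothetical callable that performs the request and returns a `(status, retry_after_seconds, body)` tuple, and the optional jitter (randomizing the delay so many clients do not retry in lockstep) is a common addition that the text above does not describe.

```python
import random
import time

THROTTLE_STATUSES = (429, 503)


def backoff_delay(attempt, base=1.0, cap=30.0, retry_after=None, jitter=False):
    """Delay before retry number `attempt` (0-based): 1s, 2s, 4s, 8s, ... up to `cap`."""
    if retry_after is not None:
        # A server-provided Retry-After value takes precedence.
        return max(0.0, retry_after)
    delay = min(cap, base * (2 ** attempt))
    if jitter:
        # Optional: pick a random delay in [0, delay] to spread out retries.
        delay = random.uniform(0.0, delay)
    return delay


def call_with_retries(send, max_attempts=5, sleep=time.sleep):
    """Call `send` until it is not throttled or attempts run out."""
    for attempt in range(max_attempts):
        status, retry_after, body = send()
        if status not in THROTTLE_STATUSES:
            return status, body
        if attempt < max_attempts - 1:
            sleep(backoff_delay(attempt, retry_after=retry_after))
    # Still throttled after the final attempt: surface the last response.
    return status, body
```

Passing `sleep` as a parameter keeps the loop easy to test without real waiting; a caller that gets a throttling status back after the final attempt can then show the user-facing message described above.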

Strategies for Avoiding Throttling

Minimizing the likelihood of encountering throttling limits is crucial for maintaining consistent API performance and ensuring a positive user experience. This proactive approach involves a combination of architectural considerations, code optimization, and strategic resource management. By implementing these strategies, developers can significantly reduce the impact of throttling and maintain the responsiveness of their serverless APIs.

Techniques to Minimize Throttling

Several techniques can be employed to proactively minimize the chances of hitting throttling limits. These strategies often involve careful planning and execution during the design and development phases of the API. Implementing these measures can lead to a more robust and scalable API, capable of handling increased traffic without performance degradation.

- Rate Limiting Implementation: Implementing custom rate limiting within the API can help control the number of requests from a single user or source within a specific time frame. This prevents any single user or service from overwhelming the API. For example, a system might limit a user to 100 requests per minute, allowing for fair usage while protecting against abuse.

- Request Batching: Consolidating multiple small requests into a single, larger request can significantly reduce the number of API calls. This is especially effective when dealing with data retrieval. Instead of making individual calls for each piece of information, batch requests to fetch multiple data points simultaneously. For example, retrieving a list of user profiles can be optimized by batching the retrieval requests instead of retrieving each profile individually.

- Optimize Payload Sizes: Minimize the size of data transmitted in requests and responses. Smaller payloads reduce the bandwidth requirements and processing time, leading to more efficient API calls. This involves selecting appropriate data formats (e.g., JSON, Protocol Buffers), compressing data when possible, and only including necessary fields in responses.

- Implement Exponential Backoff and Retry Mechanisms: When encountering throttling errors, implement an exponential backoff strategy with retries. This involves waiting for progressively longer periods before retrying a failed request. This prevents overwhelming the API during periods of high load and gives the server time to recover. For example, after receiving a 429 (Too Many Requests) error, the client could wait 1 second, then 2 seconds, then 4 seconds, and so on, before retrying the request.

- Monitor API Usage and Adjust Limits: Continuously monitor API usage patterns and adjust throttling limits as needed. This involves tracking request rates, error rates, and resource utilization to identify potential bottlenecks and optimize resource allocation. Regularly reviewing API usage metrics allows for proactive adjustments to throttling configurations, ensuring optimal performance under varying load conditions.
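To illustrate the request batching technique from the list above, here is a minimal sketch. It assumes the API offers some endpoint that accepts several IDs at once; `fetch_batch` is a hypothetical callable standing in for that endpoint, and the batch size of 50 is an arbitrary illustrative value.

```python
def batched(items, batch_size):
    """Yield consecutive slices of `items` with at most `batch_size` elements."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    for start in range(0, len(items), batch_size):
        yield items[start:start + batch_size]


def fetch_profiles(user_ids, fetch_batch, batch_size=50):
    """Fetch profiles with one API call per batch instead of one per user.

    `fetch_batch` takes a list of IDs and returns a dict of id -> profile.
    """
    profiles = {}
    for batch in batched(list(user_ids), batch_size):
        profiles.update(fetch_batch(batch))
    return profiles
```

With 120 user IDs and a batch size of 50, this issues 3 calls rather than 120, which directly lowers the request count that a rate limit is measured against.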

Role of Caching in Reducing Load

Caching plays a vital role in reducing the load on serverless APIs and minimizing the impact of throttling. By storing frequently accessed data, caching mechanisms reduce the number of requests that need to be processed by the API, thereby conserving resources and improving response times. Caching effectively mitigates the effects of throttling by reducing the overall workload on the API infrastructure.

- Caching Strategies: There are several caching strategies that can be implemented to reduce the load on serverless APIs. Choosing the right strategy depends on the specific use case and the nature of the data being cached.

- Client-side Caching: Client-side caching involves storing data on the client device (e.g., web browser). This is suitable for frequently accessed, relatively static data, such as website content or user profile information.

- Server-side Caching: Server-side caching stores data on the server, closer to the API. This can be implemented using a caching service (e.g., Redis, Memcached) or by caching responses within the API itself.

- CDN Caching: Using a Content Delivery Network (CDN) caches API responses at edge locations globally. This reduces latency and improves performance for users worldwide, especially for static assets.

- Cache Invalidation: Implement strategies for cache invalidation to ensure data consistency. This involves automatically removing or updating cached data when the underlying data changes. This can be achieved through time-based expiration policies, event-driven invalidation (e.g., using webhooks), or manual invalidation triggered by data updates.

- Cache Key Design: Design effective cache keys to ensure that data is stored and retrieved correctly. Cache keys should be unique and reflect the data being cached. For example, a cache key for a user profile might include the user ID and a version identifier.
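Time-based expiration, manual invalidation, and the cache key design mentioned above can be sketched together in a small in-memory cache. This is an illustrative sketch only: the class `TTLCache`, the helper `profile_cache_key`, and the key layout are assumptions, and a production system would more likely use a shared caching service such as Redis or Memcached.

```python
import time


class TTLCache:
    """In-memory cache whose entries expire after `ttl_seconds`."""

    def __init__(self, ttl_seconds, clock=time.monotonic):
        self._ttl = ttl_seconds
        self._clock = clock
        self._entries = {}  # key -> (expires_at, value)

    def get_or_load(self, key, loader):
        """Return the cached value, calling `loader()` only on a miss or expiry."""
        now = self._clock()
        entry = self._entries.get(key)
        if entry is not None and entry[0] > now:
            return entry[1]
        value = loader()
        self._entries[key] = (now + self._ttl, value)
        return value

    def invalidate(self, key):
        """Drop an entry explicitly, e.g. after the underlying data changes."""
        self._entries.pop(key, None)


def profile_cache_key(user_id, version):
    """Build a key from the user ID and a version identifier."""
    return f"user-profile:{user_id}:v{version}"
```

Every call that is served from the cache is one request the API never has to count against a throttling limit.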

Optimizing API Calls

Optimizing API calls is a critical step in preventing throttling and ensuring efficient resource utilization. The following strategies can be employed to enhance the performance and resilience of API interactions. These optimizations can significantly reduce the likelihood of hitting throttling limits and improve the overall user experience.

- Efficient Query Design: Design queries that retrieve only the necessary data. Avoid retrieving more data than required. Use pagination to limit the number of results returned in a single request. This reduces the amount of data transferred and processed.

- Asynchronous Operations: Utilize asynchronous operations for long-running tasks. This prevents blocking the main thread and improves responsiveness. For example, instead of waiting for a task to complete, offload it to a background process and return a response immediately.

- Minimize External Dependencies: Reduce the number of external dependencies and calls to other services. Each external call adds latency and increases the risk of throttling. Optimize the interaction with external services to minimize the number of requests.

- Connection Pooling: Implement connection pooling to reuse database connections. This reduces the overhead of establishing new connections for each request. Connection pooling can significantly improve performance, especially in high-traffic environments.

- Code Optimization: Write efficient and optimized code. Minimize the use of computationally expensive operations. Profile the code to identify and address performance bottlenecks.
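The pagination advice under Efficient Query Design can be sketched as a generator that requests one bounded page at a time. The cursor-based scheme and the `fetch_page` callable are assumptions made for illustration; real APIs differ in how they expose the next page (cursor, offset, or link headers).

```python
def iter_pages(fetch_page, page_size=100):
    """Yield items page by page until the API reports no further cursor.

    `fetch_page(cursor, page_size)` is a hypothetical callable that returns
    `(items, next_cursor)`, with `next_cursor` set to None on the last page.
    """
    cursor = None
    while True:
        items, cursor = fetch_page(cursor, page_size)
        yield from items
        if cursor is None:
            break
```

Because the generator is lazy, a consumer that stops early never triggers the remaining page requests.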

Advanced Throttling Considerations

The landscape of serverless API throttling extends beyond basic rate limiting. Sophisticated techniques are required to manage unpredictable workloads, maintain optimal performance, and provide a consistent user experience. These advanced considerations involve nuanced approaches to traffic management, often leveraging dynamic adaptation and granular control. Understanding these advanced concepts is crucial for building resilient and scalable serverless APIs.

Burst Limits and Dynamic Throttling

Burst limits and dynamic throttling are integral components of advanced throttling strategies, addressing scenarios beyond simple rate limits. Burst limits provide a short-term allowance for spikes in traffic, while dynamic throttling adapts to real-time system conditions.

- Burst Limits: Burst limits permit a brief period of higher-than-normal traffic, accommodating sudden surges. They are often defined in conjunction with rate limits. For example, an API might have a rate limit of 10 requests per second, with a burst limit of 20 requests. This allows for an initial burst of 20 requests, after which the rate limit of 10 requests per second applies. This is especially useful during initial API usage or promotional periods, where it softens the impact of sudden increases in API usage.

- Dynamic Throttling: Dynamic throttling adjusts throttling parameters in response to real-time conditions. This can involve monitoring server resource utilization (CPU, memory), database load, and API response times. When resource utilization reaches a predefined threshold, the throttling parameters are adjusted to reduce load. For example, if CPU usage exceeds 80%, the API gateway might temporarily reduce the rate limit. Dynamic throttling leverages feedback loops to optimize resource allocation and maintain performance. This adaptation helps prevent cascading failures and ensures API availability.
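The CPU-based example can be sketched as a small feedback function. The linear scaling rule, the function name `adjusted_rate_limit`, and the minimum limit are assumptions chosen for illustration; the text only specifies that the limit is reduced once utilization passes a threshold such as 80%.

```python
def adjusted_rate_limit(base_limit, cpu_utilization, threshold=0.80, min_limit=1.0):
    """Return the rate limit to apply for the current CPU utilization (0.0 to 1.0).

    Below the threshold the base limit is unchanged. Above it, the limit is
    scaled down linearly, reaching `min_limit` at 100% utilization.
    """
    if cpu_utilization <= threshold:
        return base_limit
    # Fraction of the way from the threshold to full utilization.
    overload = min(1.0, (cpu_utilization - threshold) / (1.0 - threshold))
    return max(min_limit, base_limit * (1.0 - overload))
```

For a base limit of 10 requests per second, 50% CPU leaves the limit at 10, 90% CPU roughly halves it, and 100% CPU drops it to the minimum of 1.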

Comparison of Throttling Algorithms

Different throttling algorithms offer varying trade-offs in terms of fairness, responsiveness, and resource utilization. The choice of algorithm depends on the specific requirements of the API and the expected traffic patterns.

- Fixed Window Throttling: Fixed window throttling divides time into fixed intervals (e.g., seconds, minutes). Requests are counted within each interval, and any exceeding the defined limit are throttled. This is a simple and easy-to-implement algorithm. However, it is vulnerable to bursts around window boundaries, potentially leading to temporary overload. For example, if the rate limit is 10 requests per minute, a client could send 10 requests in the last second of one minute and 10 more in the first second of the next, so 20 requests arrive within about two seconds without any being throttled.

- Sliding Window Throttling: Sliding window throttling improves upon fixed window throttling by evaluating the limit over a rolling time window that ends at the current request. It is commonly implemented either by keeping a log of recent request timestamps or by weighting the counts of the current and previous fixed windows. Because the window moves with each request, this algorithm avoids the boundary bursts of fixed windows and limits the rate more accurately, at the cost of extra state per client.

- Token Bucket Throttling: Token bucket throttling is a more sophisticated algorithm. Tokens are added to a bucket at a constant rate, up to a fixed capacity. Each request consumes a token. If the bucket is empty, requests are throttled. This algorithm allows burst traffic up to the capacity of the bucket while the refill rate bounds the long-run average, which is useful for applications that require a certain degree of burst tolerance.

- Leaky Bucket Throttling: Leaky bucket throttling is similar to token bucket, but instead of consuming tokens, incoming requests are placed in a queue (the bucket) of fixed size. Requests “leak” from the bucket at a constant rate as they are processed. If the bucket is full, new requests are dropped. This algorithm provides a consistent rate of request processing.

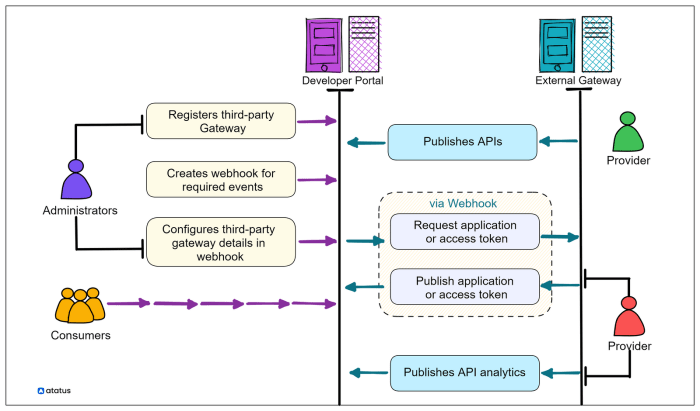
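Two of the algorithms compared above can be sketched in a few lines each. The class names, parameters, and the injectable `clock` are assumptions made for illustration; the sliding window shown here is the timestamp-log variant, and neither class is thread-safe or shared across serverless instances as written.

```python
import time
from collections import deque


class TokenBucket:
    """Allow bursts up to `capacity`; refill at `rate` tokens per second."""

    def __init__(self, rate, capacity, clock=time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self._clock = clock
        self._tokens = float(capacity)  # start full so an initial burst is allowed
        self._last = clock()

    def allow(self, cost=1.0):
        now = self._clock()
        # Add the tokens earned since the last call, capped at capacity.
        self._tokens = min(self.capacity, self._tokens + (now - self._last) * self.rate)
        self._last = now
        if self._tokens >= cost:
            self._tokens -= cost
            return True
        return False


class SlidingWindowLog:
    """Allow at most `limit` requests in any rolling `window_seconds` span."""

    def __init__(self, limit, window_seconds, clock=time.monotonic):
        self.limit = limit
        self.window = window_seconds
        self._clock = clock
        self._stamps = deque()  # timestamps of accepted requests, oldest first

    def allow(self):
        now = self._clock()
        # Discard timestamps that have fallen out of the rolling window.
        while self._stamps and self._stamps[0] <= now - self.window:
            self._stamps.popleft()
        if len(self._stamps) < self.limit:
            self._stamps.append(now)
            return True
        return False
```

A `TokenBucket(rate=10, capacity=20)` reproduces the earlier burst example: 20 requests are accepted immediately, after which requests are admitted at about 10 per second. The sliding window log, by contrast, rejects a request sent just after a minute boundary if the limit was already used up late in the previous minute.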

Role of API Gateways in Implementing Throttling Policies

API gateways play a central role in implementing and managing sophisticated throttling policies. They act as the primary entry point for API traffic, providing the necessary infrastructure to enforce throttling rules.

- Centralized Policy Enforcement: API gateways centralize the enforcement of throttling policies, allowing consistent application across all API endpoints. This simplifies management and ensures uniformity. The API gateway can apply rate limits, burst limits, and other throttling mechanisms based on various factors, such as API keys, user roles, or source IP addresses.

- Real-time Monitoring and Analytics: API gateways provide real-time monitoring and analytics capabilities, enabling administrators to track API usage, identify bottlenecks, and fine-tune throttling policies. This data is crucial for understanding traffic patterns and optimizing API performance. The monitoring data can be used to dynamically adjust throttling parameters based on observed API behavior.

- Integration with Authentication and Authorization: API gateways integrate with authentication and authorization mechanisms, allowing throttling policies to be applied based on user identity and access rights. This enables granular control over API usage and enhances security. API gateways can also enforce quotas based on subscription tiers or other business rules.

- Support for Different Throttling Algorithms: API gateways typically support a range of throttling algorithms, allowing administrators to select the most appropriate algorithm for their specific needs. They also provide configuration options for fine-tuning the parameters of these algorithms, such as rate limits, burst sizes, and window durations.

Real-World Throttling Scenarios

Throttling is a critical component in the design of robust and scalable serverless APIs. Its application extends beyond mere rate limiting, playing a vital role in maintaining service availability, protecting against malicious attacks, and ensuring fair resource allocation. Understanding real-world scenarios where throttling is essential demonstrates its practical significance and underscores its necessity for successful API operation.

Protecting Against Unexpected Traffic Spikes

Unexpected surges in API traffic can originate from various sources, including promotional events, viral content, or denial-of-service (DoS) attacks. Throttling acts as a protective measure, preventing these spikes from overwhelming the API’s resources and causing service disruptions. It achieves this by controlling the rate at which requests are processed, thereby preventing the API from becoming overloaded.

- E-commerce during Flash Sales: E-commerce platforms frequently experience massive traffic influxes during flash sales or holiday promotions. Without throttling, the sudden surge in requests for product listings, order processing, and payment gateway interactions could lead to server overload, causing slow response times, transaction failures, and ultimately, a poor customer experience. Throttling ensures that the API can handle the increased load gracefully, prioritizing critical operations and preventing complete service outages.

- Social Media Viral Content: When a piece of content goes viral on social media, the associated API endpoints (e.g., those for sharing, commenting, or viewing content) can be inundated with requests. Throttling mechanisms limit the number of requests per user or per time unit, preventing the API from being overwhelmed by the sudden surge in traffic. This safeguards the API’s stability and ensures that the platform remains accessible to all users.

- DoS and DDoS Attack Mitigation: Distributed denial-of-service (DDoS) attacks aim to disrupt service by flooding a server with traffic. Throttling can mitigate the impact of these attacks by limiting the rate at which requests are processed. While throttling alone might not completely stop a sophisticated DDoS attack, it can significantly reduce its effectiveness by preventing the server from being completely overwhelmed and maintaining some level of service availability.

Case Study: Throttling Incident and Resolution

The following case study illustrates the practical application and importance of throttling in a real-world scenario.

Scenario: A financial services company experienced a significant performance degradation in its API during a peak trading hour. Users reported slow response times and transaction failures, impacting their ability to execute trades.

Root Cause: An unusually large number of concurrent requests were observed, exceeding the API’s capacity. Analysis revealed a combination of factors, including a scheduled marketing campaign that drove increased user activity and a spike in automated trading bots. The lack of effective throttling allowed the excessive traffic to consume available resources, leading to server overload.

Resolution: The engineering team implemented a multi-layered throttling strategy. Initially, a basic rate limit was established at the API gateway to restrict the number of requests per minute per user. Subsequently, more sophisticated throttling rules were introduced, considering factors such as the type of request, the user’s account level, and the time of day. Monitoring dashboards were enhanced to provide real-time visibility into API traffic patterns and the effectiveness of the throttling mechanisms. Furthermore, automated scaling was configured to dynamically adjust server resources based on traffic volume.

Outcome: The implemented throttling measures successfully mitigated the performance issues. Response times improved significantly, and transaction failures were reduced to a negligible level. The API became more resilient to traffic spikes, and the financial services company was able to maintain a stable and reliable service during peak trading hours. The incident highlighted the critical role of throttling in ensuring API stability and the importance of proactive monitoring and management.

Concluding Remarks

In conclusion, throttling in serverless APIs is a multifaceted discipline that requires a comprehensive understanding of its mechanisms, impacts, and implementation strategies. From rate limiting and concurrency controls to sophisticated monitoring and error handling, mastering throttling is critical for building resilient, scalable, and cost-effective serverless applications. By carefully configuring throttling policies, monitoring their effects, and adapting to changing usage patterns, developers can ensure optimal API performance and a positive user experience, making throttling an indispensable element of serverless architecture.

Common Queries

What happens when a serverless API is throttled?

When a serverless API is throttled, requests exceeding the defined limits are typically rejected. The API might return an HTTP status code like 429 (Too Many Requests) to indicate that the client has exceeded its rate limit or a 503 (Service Unavailable) to indicate the server is at capacity. The client should then implement strategies to handle these errors, such as retrying the request after a delay.

How does throttling differ from rate limiting?

Rate limiting is a specific type of throttling. It restricts the number of requests a client can make within a given time period. Throttling is a broader term encompassing various techniques to control resource consumption, including rate limiting, concurrency limits, and overall resource usage.

What are the key metrics to monitor related to throttling?

Key metrics to monitor include the number of throttled requests, the percentage of requests being throttled, API response times, error rates (specifically 429 and 503 status codes), and resource utilization (e.g., CPU, memory, database connections). These metrics provide insight into the effectiveness of throttling policies and potential performance bottlenecks.
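As a rough illustration of the first two metrics, the number and percentage of throttled requests can be derived from a list of observed HTTP status codes. The function name `throttle_summary` and the choice to count both 429 and 503 as throttled are assumptions for this sketch; a 503 can also have causes unrelated to throttling.

```python
from collections import Counter


def throttle_summary(status_codes):
    """Summarize how many of the observed responses were throttled."""
    counts = Counter(status_codes)
    total = sum(counts.values())
    throttled = counts[429] + counts[503]
    return {
        "total": total,
        "throttled": throttled,
        # Guard against division by zero when no requests were observed.
        "throttled_pct": 100.0 * throttled / total if total else 0.0,
    }
```

The same summary can be applied to the status codes collected during a load test to check that throttling starts at the expected request rate.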

How can I test my throttling configurations?

You can test throttling configurations by simulating high traffic loads using tools like load testing software (e.g., JMeter, Gatling) or by creating scripts that send a large number of requests to your API. Monitor the API’s behavior and the metrics mentioned above to ensure that throttling is working as expected and that the API is responding appropriately under load.