Application migration, the process of transferring software applications from one environment to another, presents a complex interplay of factors that directly influence application latency. This critical performance metric, the time it takes for a user request to be processed and a response to be delivered, can be significantly impacted by the choices made during migration. From network configurations to database transfers, each stage of the migration process holds potential pitfalls that can degrade user experience and hinder operational efficiency.

Understanding these impacts is crucial for organizations seeking to optimize application performance and minimize downtime during and after migration initiatives.

This analysis delves into the multifaceted relationship between migration and application latency. We will explore the various components that contribute to latency, examining the pre-migration assessment, network and database implications, code optimization strategies, data transfer techniques, and post-migration performance tuning. The objective is to provide a comprehensive understanding of the challenges and opportunities associated with mitigating latency issues during application migrations, allowing for informed decision-making and the successful implementation of migration strategies.

Introduction: Migration and Application Latency

Migration, in the context of software applications, refers to the process of transferring an application, its data, and its associated infrastructure from one environment to another. This can encompass a variety of scenarios, including moving from on-premises servers to the cloud, upgrading to a new version of a database, or shifting workloads between different cloud providers. The objective is typically to improve performance, reduce costs, enhance scalability, or address security concerns.

Application latency is the delay users experience when interacting with an application. It can manifest as slow page loads or delayed responses to user actions, and it directly affects the user experience and, consequently, the application’s usability and business value.

Defining Application Latency

Application latency is a crucial performance metric, and understanding its contributing factors is essential for optimizing application performance. Several elements influence the time it takes for an application to respond to a user’s request.

- Network Latency: This refers to the delay introduced by the network infrastructure connecting the user to the application server. Factors include the physical distance between the user and the server, the number of network hops a request must traverse, and network congestion. High network latency can significantly increase the time it takes for data to travel between the user and the application.

For example, a user accessing an application from a location geographically distant from the server will likely experience higher network latency than a user located closer to the server.

- Server-Side Processing Time: This encompasses the time the server spends processing the user’s request. This includes the execution of application code, database queries, and any other operations performed on the server. The complexity of the application logic, the efficiency of the code, and the server’s hardware resources all contribute to server-side processing time. For instance, an application with inefficient database queries will experience longer server-side processing times.

- Database Latency: This focuses on the time it takes for the database to retrieve and process data requested by the application. Database performance is often a critical bottleneck, especially for data-intensive applications. Database latency is influenced by factors such as the database server’s hardware, the database schema design, and the efficiency of the queries.

- Client-Side Rendering Time: This refers to the time it takes for the user’s device to render the application’s interface after receiving the data from the server. This includes the time spent downloading resources like images and scripts, as well as the time spent by the browser or application to process and display the content. Complex user interfaces and large media files can increase client-side rendering time.

Migration Processes Across Application Types

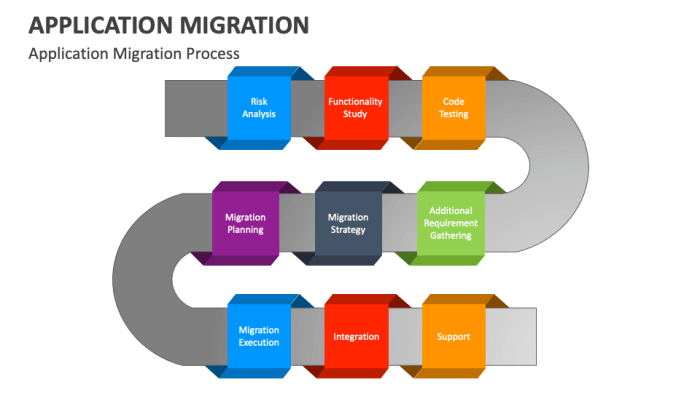

The specific steps and considerations involved in migrating an application vary significantly depending on the application’s type and architecture. Different application categories necessitate distinct migration strategies.

- Web Applications: Migrating web applications often involves moving the application code, associated data (e.g., databases, files), and infrastructure components (e.g., web servers, load balancers). Common migration strategies include:

- Lift and Shift: This approach involves moving the application and its infrastructure to the new environment with minimal changes. This is a straightforward approach but may not fully leverage the benefits of the new environment.

- Re-platforming: This involves making some changes to the application to take advantage of the new environment’s features. For example, moving to a managed database service.

- Re-architecting: This involves fundamentally redesigning the application to optimize it for the new environment. This is the most complex approach but can offer the greatest performance and scalability benefits.

Web applications often rely on databases for data storage. Therefore, database migration is a crucial aspect of web application migration. This can involve migrating the database to a new platform or upgrading the database version.

- Databases: Database migration is a specialized area with its own set of challenges. The process often involves:

- Data Extraction: Extracting the data from the source database.

- Data Transformation: Transforming the data to match the schema of the target database, which might include changes in data types, structures, and constraints.

- Data Loading: Loading the transformed data into the target database.

Database migration tools and techniques vary depending on the database type (e.g., MySQL, PostgreSQL, Oracle, MongoDB) and the target environment. The choice of migration strategy depends on factors like the size of the database, the downtime tolerance, and the desired level of data consistency.

- Other Application Types: Applications such as desktop applications, mobile applications, and microservices-based applications each have their specific migration considerations. Desktop applications may require re-packaging and re-testing for the new environment. Mobile applications may need to be updated to support new operating system versions or platform-specific features. Microservices architectures can present unique challenges due to the distributed nature of the system, requiring careful planning and orchestration of service migrations.

Pre-Migration Impact Assessment

A thorough pre-migration impact assessment is crucial for minimizing the risk of increased application latency during and after a migration. This assessment involves a detailed evaluation of the existing application environment, identifying potential bottlenecks, and establishing baseline performance metrics. This proactive approach allows for informed decision-making, resource allocation, and the implementation of mitigation strategies to ensure a smooth transition and maintain or improve application performance.

Critical Metrics for Evaluation

Identifying the key performance indicators (KPIs) is the cornerstone of a successful pre-migration assessment. These metrics provide a comprehensive understanding of the application’s current behavior and serve as benchmarks for comparison after the migration. Monitoring these metrics throughout the migration process allows for identifying performance degradation and implementing corrective measures.

- Application Response Time: Measures the time taken for the application to respond to user requests. This metric directly impacts user experience. A slow response time can lead to user frustration and decreased productivity.

- Transaction Throughput: Indicates the number of transactions processed by the application per unit of time. A decrease in throughput can signify performance degradation, impacting the application’s ability to handle user load.

- Error Rates: Tracks the frequency of errors encountered by users. High error rates suggest underlying issues, such as database problems, code errors, or infrastructure limitations, that can contribute to latency.

- Resource Utilization (CPU, Memory, Disk I/O, Network): Assesses the consumption of system resources. High resource utilization can lead to bottlenecks and increased latency. Monitoring resource utilization helps identify resource-intensive operations.

- Database Query Performance: Analyzes the execution time of database queries. Slow database queries are a common cause of application latency. Optimizing database queries is often a key step in improving application performance.

- Network Latency: Measures the delay in data transmission between the application and its users or other systems. High network latency can significantly impact application performance, especially for applications that rely on frequent network communication.

- Dependency Latency: Evaluates the latency of external services and dependencies the application relies on. Slow or unreliable dependencies can significantly impact application performance.

Assessing Current Latency Performance

A structured methodology is essential for accurately assessing the current latency performance of an application. This process involves several key steps, including the selection of appropriate monitoring tools, establishing a baseline, and analyzing performance data to identify potential bottlenecks.

- Selecting Monitoring Tools: Choose appropriate monitoring tools based on the application’s architecture and requirements. Common tools include:

- Application Performance Monitoring (APM) tools (e.g., New Relic, Dynatrace, AppDynamics) provide comprehensive visibility into application performance, including response times, error rates, and resource utilization.

- Infrastructure monitoring tools (e.g., Prometheus, Nagios, Zabbix) monitor the underlying infrastructure, such as servers, networks, and databases.

- Network analysis tools (e.g., Wireshark, tcpdump) capture and analyze network traffic to identify latency issues.

- Establishing a Baseline: Collect performance data over a representative period (e.g., several days or weeks) to establish a baseline. This baseline provides a point of reference for comparison after the migration. The data should include metrics such as average response time, transaction throughput, and error rates.

- Analyzing Performance Data: Analyze the collected data to identify performance bottlenecks. Look for patterns and trends that indicate areas of concern. For example, a consistently high CPU utilization on a particular server might indicate a need for optimization or additional resources.

A common formula for calculating average response time is:

Average Response Time = (Sum of all Response Times) / (Number of Requests)

- Simulating User Load: Conduct load testing to simulate realistic user traffic and assess the application’s performance under stress. Load testing can reveal potential performance bottlenecks that may not be apparent during normal operation. Tools like JMeter or LoadRunner can be used for load testing.

- Profiling Application Code: Use profiling tools to identify performance-critical code sections. This can help pinpoint inefficient code that contributes to latency. Profiling tools analyze code execution to identify slow-running functions and bottlenecks.
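The average response time formula above can be computed directly from collected samples. The following is a minimal sketch, not a prescribed method: the function name `summarize_response_times` and the sample values are hypothetical, and the median and 95th percentile are included only because a plain average can hide outliers.

```python
import math
import statistics

def summarize_response_times(samples_ms):
    """Return mean, median, and nearest-rank p95 of response times in ms."""
    if not samples_ms:
        raise ValueError("need at least one sample")
    ordered = sorted(samples_ms)
    # Nearest-rank percentile: the smallest sample with at least 95% of
    # all samples at or below it.
    rank = math.ceil(0.95 * len(ordered))
    return {
        "mean": sum(ordered) / len(ordered),  # the formula from the text
        "median": statistics.median(ordered),
        "p95": ordered[rank - 1],
    }

# Hypothetical samples: nine typical requests and one slow outlier.
samples = [120, 135, 110, 140, 125, 130, 115, 128, 122, 900]
summary = summarize_response_times(samples)
print(summary)
```

In this made-up data set the single 900 ms request pulls the mean well above the median, which is why a baseline usually records more than one statistic.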

Pre-Migration Checklist for Mitigation

A well-defined checklist provides a structured approach to mitigating potential latency issues before initiating the migration. This checklist should be comprehensive, covering various aspects of the application environment, from code optimization to infrastructure readiness.

- Code Optimization: Review and optimize the application code for performance. Identify and address inefficient code sections, such as slow database queries or inefficient algorithms. This may involve code refactoring or rewriting specific components.

- Database Optimization: Optimize the database schema, queries, and indexes. Ensure that the database is properly configured and tuned for optimal performance. This might include adding indexes to frequently queried columns or optimizing complex queries.

- Infrastructure Assessment: Assess the existing infrastructure to ensure it can handle the application’s workload after the migration. This involves evaluating the capacity of servers, networks, and storage systems. Consider upgrading infrastructure components if necessary.

- Network Assessment: Evaluate the network connectivity between the application and its users or other systems. Ensure sufficient bandwidth and low latency. This might involve optimizing network configurations or upgrading network hardware.

- Dependency Assessment: Assess the performance of external dependencies, such as databases, APIs, and third-party services. Identify and address any potential bottlenecks or performance issues with these dependencies.

- Data Migration Strategy: Develop a detailed data migration strategy. Consider the volume of data, the migration method (e.g., online, offline), and the potential impact on application performance. The migration strategy should minimize downtime and ensure data integrity.

- Testing and Validation: Conduct thorough testing to validate the application’s performance after the migration. This includes functional testing, performance testing, and stress testing. The testing should simulate real-world user traffic and identify any performance issues.

- Rollback Plan: Develop a rollback plan in case the migration fails or results in unacceptable performance degradation. The rollback plan should include steps to revert to the previous environment and minimize downtime.

- Monitoring and Alerting: Implement robust monitoring and alerting to detect and respond to performance issues after the migration. This involves setting up monitoring tools to track key performance metrics and configuring alerts to notify administrators of any anomalies.
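As one way to connect the baseline to post-migration alerting, the sketch below compares current metrics against baseline values and reports those that have degraded beyond a tolerance. The function `find_regressions`, the metric names, the 10% tolerance, and all numbers are illustrative assumptions; a real deployment would typically express such rules in its monitoring tool.

```python
def find_regressions(baseline, current, tolerance=0.10):
    """Return metrics whose current value exceeds baseline by more than tolerance.

    Both arguments map metric name -> value, where lower is better
    (for example latency in ms or an error rate).
    """
    regressions = {}
    for name, base_value in baseline.items():
        if name not in current:
            continue  # metric not collected after migration; nothing to compare
        limit = base_value * (1 + tolerance)
        if current[name] > limit:
            regressions[name] = (base_value, current[name])
    return regressions

# Hypothetical numbers for illustration only.
baseline = {"p95_latency_ms": 180.0, "error_rate": 0.010, "db_query_ms": 12.0}
after_migration = {"p95_latency_ms": 240.0, "error_rate": 0.009, "db_query_ms": 12.5}

for metric, (before, after) in find_regressions(baseline, after_migration).items():
    print(f"ALERT {metric}: {before} -> {after}")
```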

Network Impact During Migration

The network infrastructure plays a critical role in application performance during migration. As data and application components traverse the network, bandwidth limitations and latency significantly impact the overall user experience. Understanding and mitigating these network-related challenges is crucial for a smooth and successful migration process. This section delves into the specific network considerations that arise during migration, exploring the impact of bandwidth and latency, comparing different migration strategies, and outlining optimization techniques.

Network Bandwidth and Latency Effects

Network bandwidth and latency are fundamental factors determining application performance during migration. Insufficient bandwidth can lead to bottlenecks, while high latency introduces delays; both reduce the responsiveness and efficiency of the application.

Bandwidth, measured in bits per second (bps), represents the maximum data transfer rate across a network connection. During migration, applications often experience increased data transfer demands. These include:

- Data Transfer: Migrating large datasets requires substantial bandwidth to ensure timely completion. Insufficient bandwidth can significantly prolong the migration process, leading to downtime and operational disruptions. For instance, migrating a 1 TB database across a 100 Mbps network takes roughly 22 hours even at full line rate (8 × 10^12 bits ÷ 10^8 bits per second ≈ 80,000 seconds), and can exceed 24 hours once protocol overhead and competing traffic are taken into account.

- Application Component Synchronization: As application components are moved or reconfigured, there’s often a need for synchronization between the source and destination environments. This may involve database replication, file transfers, and API calls, all of which consume bandwidth.

- User Access During Migration: If the application remains accessible during migration, user traffic adds to the network load. This can exacerbate bandwidth constraints and impact the user experience.
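The transfer-time arithmetic behind the 1 TB over 100 Mbps example can be sketched as follows. The function `transfer_time_hours` and the `efficiency` parameter (the assumed fraction of nominal bandwidth actually achieved) are illustrative, and the 80% figure is an assumption, not a measurement.

```python
def transfer_time_hours(data_bytes, link_bits_per_second, efficiency=1.0):
    """Estimate the hours needed to move data_bytes over a link.

    efficiency is the assumed fraction of nominal bandwidth achieved
    after protocol overhead and competing traffic.
    """
    if not 0 < efficiency <= 1:
        raise ValueError("efficiency must be in (0, 1]")
    seconds = (data_bytes * 8) / (link_bits_per_second * efficiency)
    return seconds / 3600

ONE_TB = 10**12              # decimal terabyte, in bytes
LINK_100_MBPS = 100 * 10**6  # bits per second

ideal = transfer_time_hours(ONE_TB, LINK_100_MBPS)
realistic = transfer_time_hours(ONE_TB, LINK_100_MBPS, efficiency=0.8)
print(f"ideal: {ideal:.1f} h, at 80% efficiency: {realistic:.1f} h")
```

Under these assumptions the ideal case is about 22 hours and the 80% case about 28 hours, which is useful when deciding whether an online or offline transfer fits the available maintenance window.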

Latency, measured in milliseconds (ms), refers to the delay in data transmission across the network. High latency can significantly impact application performance, especially for interactive applications. Several factors contribute to network latency, including:

- Distance: The physical distance between the source and destination locations directly affects latency. Data must travel a longer distance, resulting in increased propagation delay.

- Network Congestion: Heavy network traffic can lead to congestion, increasing latency as data packets compete for network resources.

- Network Infrastructure: The quality and configuration of network devices, such as routers and switches, also influence latency. Older or poorly configured devices can introduce delays.
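The effect of distance can be estimated with a simple lower bound. This sketch assumes signals travel through optical fiber at roughly 200,000 km/s (about two-thirds of the speed of light in a vacuum); the distances are illustrative, and real paths are longer than straight-line distance and add queuing and processing delay on top.

```python
SPEED_IN_FIBER_KM_PER_S = 200_000  # approximate; about two-thirds of c

def min_round_trip_ms(distance_km):
    """Lower bound on round-trip time from propagation delay alone."""
    one_way_seconds = distance_km / SPEED_IN_FIBER_KM_PER_S
    return 2 * one_way_seconds * 1000

# Illustrative distances, not measurements of any particular route.
for label, km in [("same metro area", 50),
                  ("cross-country", 4_000),
                  ("intercontinental", 10_000)]:
    print(f"{label:>17}: at least {min_round_trip_ms(km):.1f} ms RTT")
```

No amount of tuning removes this floor, which is why the choice of destination region matters for latency-sensitive applications.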

High latency can manifest as:

- Slow Response Times: Users may experience delays in application responses, such as clicking a button or loading a webpage.

- Poor User Experience: Overall application performance degrades, leading to frustration and reduced user satisfaction.

- Transaction Failures: In some cases, high latency can cause transaction timeouts and failures, particularly in applications with strict timing requirements.

Network Latency Implications of Migration Strategies

Different migration strategies have varying implications for network latency. The choice of strategy significantly influences the network demands and the potential impact on application performance. Understanding these differences is crucial for selecting the most appropriate migration approach.

Lift-and-Shift: This strategy involves migrating applications and their infrastructure to a new environment with minimal changes. This often involves replicating the existing network configuration, including IP addresses and network topology.

- Network Impact: Lift-and-shift migrations often result in minimal changes to the network architecture. However, if the source and destination environments are geographically distant, latency can become a significant issue due to increased propagation delay. Bandwidth requirements also remain substantial when the dataset is large.

- Latency Considerations: Applications that are sensitive to latency may experience performance degradation, especially if the migration involves moving the application to a different geographic region.

- Example: A company migrating its on-premises application to a cloud provider’s infrastructure, without significant architectural changes, is an example of lift-and-shift. The network performance will depend on the distance between the company’s data center and the cloud provider’s region.

Re-platforming: This strategy involves making modifications to the application to take advantage of the new environment. This may include changing the operating system, database, or application server.

- Network Impact: Re-platforming can potentially introduce new network dependencies. If the application relies on specific network services or protocols that are not supported in the new environment, it might lead to network performance issues.

- Latency Considerations: Re-platforming may change the application’s network communication patterns. For example, replacing a database on the same host or local network with a cloud-based database adds a network round trip to every query, which typically increases latency unless the application tier moves with it.

- Example: A company re-platforms its application from an older, self-hosted database to a managed cloud database. Query latency then depends on the network distance between the application servers and the new database location.

Refactoring: Refactoring involves redesigning and rewriting parts of the application to improve its performance, scalability, and maintainability.

- Network Impact: Refactoring often results in significant changes to the application’s architecture and network communication patterns. This can potentially improve or worsen network performance, depending on the design choices.

- Latency Considerations: Refactoring can optimize network communication, for example, by reducing the number of network requests or using more efficient protocols. Conversely, introducing new services or dependencies can increase latency.

- Example: A company refactoring a monolithic application into a microservices architecture. This might lead to increased network traffic between services, impacting latency.

Re-architecting: This involves completely redesigning the application’s architecture to leverage the capabilities of the new environment.

- Network Impact: Re-architecting typically leads to the most significant changes to the network architecture. This might include using different network services, protocols, or communication patterns.

- Latency Considerations: Re-architecting can introduce new latency challenges, but it also offers the opportunity to optimize network performance. For example, adopting a content delivery network (CDN) can significantly reduce latency for users accessing the application from different geographic locations.

- Example: A company re-architecting its application to use a serverless architecture and a CDN for content delivery. This strategy is designed to minimize latency.

Strategies for Optimizing Network Performance During Migration

Optimizing network performance is essential for ensuring a smooth and efficient migration process. Several strategies can be employed to mitigate the impact of network bandwidth and latency.

Bandwidth Optimization:

- Network Segmentation: Segmenting the network can isolate traffic related to the migration, preventing it from competing with other network traffic.

- Data Compression: Compressing data before transmission can reduce the amount of data transferred, saving bandwidth.

- Incremental Data Transfer: Instead of transferring all data in a single pass, copy a bulk baseline first and then transfer only the changes made since. This keeps the final synchronization small and shortens the cutover window.

- WAN Optimization: Utilize WAN optimization technologies, such as caching and traffic shaping, to improve bandwidth utilization and reduce latency over wide area networks.

- Bandwidth Throttling: Control the bandwidth used by the migration process to avoid impacting other critical applications.
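Of the bandwidth techniques above, data compression is the easiest to illustrate. The sketch below streams chunks through Python's standard `zlib` module; the helper `compress_chunks` and the synthetic rows are hypothetical, and actual compression ratios depend heavily on the data (already-compressed media, for example, gains little).

```python
import zlib

def compress_chunks(chunks, level=6):
    """Compress an iterable of byte chunks as one zlib stream."""
    compressor = zlib.compressobj(level)
    for chunk in chunks:
        piece = compressor.compress(chunk)
        if piece:  # the compressor may buffer input and emit nothing yet
            yield piece
    yield compressor.flush()  # emit whatever is still buffered

# Hypothetical export: repetitive CSV-like rows, which compress well.
rows = [f"{i},customer-{i % 50},ACTIVE,2024-01-01\n".encode() for i in range(10_000)]
raw_size = sum(len(r) for r in rows)
compressed = b"".join(compress_chunks(rows))

# The round trip must be lossless before the data is trusted.
assert zlib.decompress(compressed) == b"".join(rows)
print(f"raw: {raw_size} bytes, compressed: {len(compressed)} bytes")
```

Compression trades CPU time for bandwidth, so it helps most when the link, not the source server, is the bottleneck.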

Latency Reduction:

- Geographic Proximity: Migrate applications to a destination environment that is geographically closer to the users.

- Network Optimization Tools: Use network performance monitoring tools to identify and resolve latency bottlenecks.

- Network Path Optimization: Optimize the network path between the source and destination environments.

- Caching: Implement caching mechanisms to reduce the need for repeated data retrieval.

- Load Balancing: Distribute traffic across multiple servers to improve response times.
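Caching, one of the latency-reduction techniques listed above, can be sketched with a small time-to-live cache. The class `TTLCache`, the `slow_lookup` stand-in, and the 30-second expiry are assumptions for illustration; production systems would more likely use an established cache such as a shared in-memory store.

```python
import time

class TTLCache:
    """Tiny in-process cache whose entries expire after ttl_seconds."""

    def __init__(self, ttl_seconds, clock=time.monotonic):
        self._ttl = ttl_seconds
        self._clock = clock      # injectable so expiry can be tested
        self._entries = {}       # key -> (expires_at, value)

    def get_or_load(self, key, loader):
        now = self._clock()
        entry = self._entries.get(key)
        if entry is not None and entry[0] > now:
            return entry[1]      # cache hit: no remote call
        value = loader(key)      # cache miss: pay the remote latency once
        self._entries[key] = (now + self._ttl, value)
        return value

calls = []

def slow_lookup(key):
    """Stand-in for a remote query; records each call instead of sleeping."""
    calls.append(key)
    return f"value-for-{key}"

cache = TTLCache(ttl_seconds=30)
first = cache.get_or_load("product:42", slow_lookup)
second = cache.get_or_load("product:42", slow_lookup)
print(first == second, len(calls))
```

The second lookup is served from memory, so only one call reaches the slow backend. The expiry time bounds how stale a cached value can become, which matters while source and destination are still synchronizing.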

Content Delivery Networks (CDNs):

- Functionality: CDNs are geographically distributed networks of servers that cache content closer to users. They significantly reduce latency by serving content from the closest available server.

- Benefits: CDNs are particularly beneficial for applications with a global user base, as they can drastically reduce the time it takes for content to load, improving user experience.

- Implementation: During migration, CDNs can be used to cache static content, such as images, videos, and JavaScript files, improving the performance of the application. For example, if a global e-commerce site is migrating to a new infrastructure, using a CDN will ensure that product images and other static content load quickly for users around the world.

Database Migration and its Latency Implications

Database migration, a critical component of application modernization and infrastructure upgrades, presents significant latency challenges. The process involves transferring data from one database system to another, which can impact application performance if not carefully managed. This impact is particularly noticeable during the cutover phase when the new database becomes the primary data source for the application. Understanding and mitigating these latency implications are crucial for maintaining application availability and responsiveness.

Specific Latency Challenges Associated with Database Migration

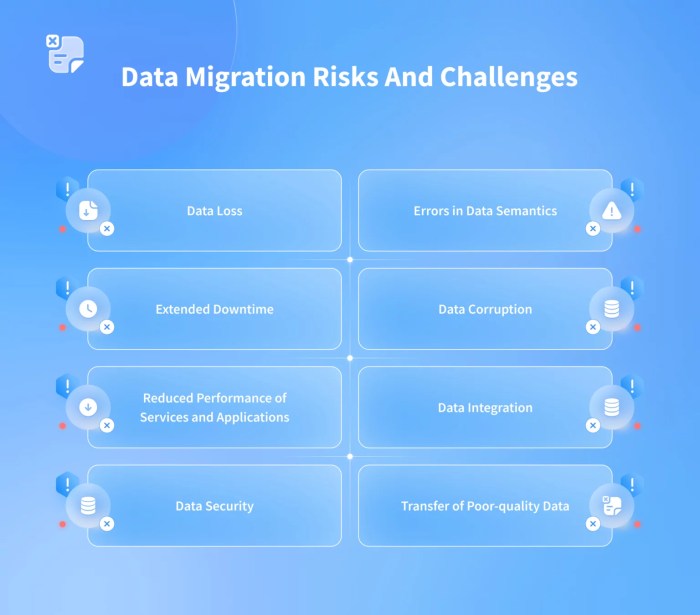

Several factors contribute to latency during database migration. These include data transfer volume, network bandwidth limitations, database schema differences, and the chosen migration strategy.

- Data Transfer Volume: The sheer volume of data being migrated is a primary driver of latency. Larger datasets require more time to transfer, especially when network bandwidth is a constraint. For example, migrating a terabyte-sized database over a congested network can take hours or even days, leading to prolonged downtime and impacting application performance.

- Network Bandwidth: The network infrastructure plays a vital role. Limited bandwidth between the source and destination databases can significantly slow down the data transfer process. This is particularly true for migrations across geographically dispersed data centers or cloud regions.

- Database Schema Differences: Mismatches in database schemas between the source and destination systems can introduce latency. These mismatches necessitate data transformation and conversion processes, adding to the overall migration time. For instance, migrating from an older version of a database with a different data type or storage structure to a newer version often requires complex data mapping and transformation, increasing latency.

- Database Downtime: Downtime is the period during which the application is unavailable or experiences degraded performance. Minimizing downtime is crucial. The cutover process, when the application switches from the old database to the new one, is a critical point for latency. A poorly executed cutover can lead to significant delays and impact user experience.

- Data Consistency and Validation: Ensuring data consistency and validating the migrated data can add to the migration time. This involves comparing data between the source and destination databases to identify and resolve any discrepancies. The complexity of these validation checks and the volume of data to be verified can influence the overall latency.
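The validation step described above can be sketched as a row count plus checksum comparison. This example uses two in-memory SQLite databases as stand-ins for the source and destination; the helper names and the `users` table are hypothetical. When the two systems use different engines or drivers, values would first need to be normalized to a common representation, which this sketch does not attempt.

```python
import hashlib
import sqlite3

def table_fingerprint(conn, table, key_column):
    """Return (row_count, sha256 hex digest) over rows ordered by key_column.

    table and key_column are interpolated into SQL, so they must come
    from trusted configuration, never from user input.
    """
    digest = hashlib.sha256()
    count = 0
    for row in conn.execute(f"SELECT * FROM {table} ORDER BY {key_column}"):
        digest.update(repr(row).encode("utf-8"))
        count += 1
    return count, digest.hexdigest()

def make_db(rows):
    """Build a throwaway in-memory database holding the given rows."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, email TEXT)")
    conn.executemany("INSERT INTO users VALUES (?, ?)", rows)
    return conn

source = make_db([(1, "a@example.com"), (2, "b@example.com")])
target_ok = make_db([(2, "b@example.com"), (1, "a@example.com")])   # same data, other insert order
target_bad = make_db([(1, "a@example.com"), (2, "B@example.com")])  # one altered value

src = table_fingerprint(source, "users", "id")
print("matching copy:", src == table_fingerprint(target_ok, "users", "id"))
print("altered copy: ", src == table_fingerprint(target_bad, "users", "id"))
```

Hashing every row scans the full table on both sides, so for very large tables the same idea is often applied per key range to localize mismatches and spread the load.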

Procedures for Minimizing Database Downtime During Migration, Considering Latency

Several strategies can be employed to minimize downtime during database migration, with careful consideration of latency impacts. These strategies include choosing the right migration approach, pre-migration activities, and optimized cutover procedures.

- Choosing the Right Migration Approach: The migration approach significantly impacts downtime. Different approaches, such as offline migration, online migration (e.g., using change data capture – CDC), and hybrid approaches, have varying implications for latency and downtime.

- Offline Migration: Involves a complete shutdown of the application during the migration. While it offers simplicity, it results in extended downtime.

- Online Migration: Allows the application to remain online during migration, using techniques like CDC to replicate changes from the source to the destination database. This approach minimizes downtime but requires more complex implementation.

- Hybrid Approach: Combines offline and online methods, potentially offering a balance between downtime and complexity.

- Pre-Migration Activities: Preparation is key.

- Data Profiling and Assessment: Analyzing the source database to understand data volume, schema complexity, and data quality issues.

- Schema Conversion and Mapping: Designing a schema that is compatible with the destination database, including data type mapping and transformation rules.

- Data Cleansing and Transformation: Cleaning and transforming the data to ensure consistency and compatibility with the new database.

- Optimized Cutover Procedures: A well-defined cutover plan is essential.

- Phased Cutover: Migrating a subset of data or a portion of the application first, allowing for testing and validation before migrating the entire system.

- Application-Level Switchover: Implementing mechanisms to switch the application to the new database with minimal disruption, such as using connection pooling and load balancing.

- Rollback Plan: Developing a detailed rollback plan in case of issues during the cutover, allowing for a quick return to the source database.

- Utilizing Tools and Technologies: Leveraging tools and technologies that support high-speed data transfer, automated schema conversion, and efficient data synchronization.
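One possible way to implement a phased, application-level switchover is to route a fixed percentage of users to the new database and raise that percentage over time. The sketch below is an assumption-laden illustration: the function `routes_to_new_database` is hypothetical, and the approach is only safe if both databases are kept in sync (for example through CDC) or if it is applied to read traffic.

```python
import hashlib

def routes_to_new_database(user_id, rollout_percent):
    """Deterministically decide whether a user is served by the new database."""
    if not 0 <= rollout_percent <= 100:
        raise ValueError("rollout_percent must be between 0 and 100")
    digest = hashlib.sha256(str(user_id).encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:4], "big") % 100  # stable bucket in 0..99
    return bucket < rollout_percent

users = range(10_000)
for percent in (0, 10, 50, 100):
    moved = sum(routes_to_new_database(u, percent) for u in users)
    print(f"{percent:>3}% rollout -> {moved} of {len(users)} users on the new database")
```

Because the bucket is derived from a hash of the user ID, a given user stays on the same side as the percentage grows, and setting the percentage back to zero acts as a fast rollback.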

Latency Characteristics of Different Database Migration Tools

The choice of migration tool significantly impacts latency. Different tools employ varying methodologies, leading to different performance characteristics. The following table provides a comparison of some common database migration tools, focusing on latency-related aspects. Note that the actual performance can vary depending on specific configurations, data characteristics, and network conditions.

| Migration Tool | Migration Method | Latency Characteristics | Key Considerations for Latency Mitigation |
|---|---|---|---|
| AWS Database Migration Service (DMS) | Online (CDC) and Offline | Generally offers low latency, especially for online migrations using CDC. Latency depends on network bandwidth and data change rate. | Optimize network configuration, select appropriate replication instance size, monitor replication tasks for performance bottlenecks. |
| Azure Database Migration Service (DMS) | Online (CDC) and Offline | Similar to AWS DMS, provides low-latency online migration capabilities. Network latency and data volume are key factors. | Ensure sufficient network bandwidth, choose appropriate service tier, and monitor migration tasks for performance issues. |
| Google Cloud Database Migration Service (DMS) | Online (CDC) and Offline | Supports online migrations with minimal downtime. Latency is influenced by network performance and data change rates. | Optimize network connectivity between source and destination databases, select appropriate instance sizes, and monitor the migration process. |
| Third-Party Migration Tools (e.g., Ispirer, Informatica) | Varies (Online, Offline, Hybrid) | Latency varies significantly based on the specific tool, migration method, and features. Some tools offer features like parallel data loading to reduce latency. | Evaluate tool performance through thorough testing, consider the impact of data transformation processes, and optimize the tool’s configuration for the specific database environments. |

Code and Application Logic Optimization

Inefficient code and poorly designed application logic are significant contributors to latency, especially during and after migration. These issues can manifest as slow response times, increased resource consumption, and overall degraded user experience. Addressing these inefficiencies is crucial for ensuring a smooth migration process and maintaining application performance post-migration.

Inefficient Code’s Impact on Latency

Inefficient code introduces bottlenecks that directly translate into increased latency. These bottlenecks can stem from various sources, including poorly optimized algorithms, excessive database queries, and inefficient data structures.

- Algorithm Complexity: Algorithms with high time complexity (e.g., O(n^2) or worse) can drastically increase processing time, especially with large datasets. Consider an application migrating a user authentication system. If the user authentication code uses an inefficient algorithm to compare user credentials against a database, it can introduce significant latency during login attempts.

- Database Interaction: Frequent or poorly optimized database queries are a major source of latency. For example, applications making numerous round trips to the database for simple data retrieval or using queries that lack proper indexing can experience substantial performance degradation. A migrated e-commerce platform, for instance, might experience increased latency if product catalog queries are not optimized, leading to slow page load times.

- Resource Consumption: Inefficient code often consumes excessive CPU, memory, and I/O resources. This can lead to contention and delays, especially in resource-constrained environments. An example is a migrated application with memory leaks. Over time, the application’s memory usage grows, leading to slower performance and potential crashes.

- Network Operations: Unnecessary network calls or inefficient data transfer protocols can add to latency. Imagine a migrated mobile application that makes multiple small requests to a server instead of a single, consolidated request. This can lead to increased latency due to the overhead of establishing and tearing down network connections repeatedly.
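The round-trip problem described under Database Interaction can be sketched with Python's built-in `sqlite3` module. This is a minimal illustration, not a benchmark: the `products` schema and the helper names are hypothetical, and an in-memory database has no network hop, so each `execute` call merely stands in for one round trip to a remote database.

```python
import sqlite3


def build_catalog(n_products=50):
    """Create an in-memory catalog table (hypothetical schema, for illustration only)."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE products (id INTEGER PRIMARY KEY, name TEXT, price REAL)")
    conn.executemany(
        "INSERT INTO products (id, name, price) VALUES (?, ?, ?)",
        [(i, f"product-{i}", 10.0 + i) for i in range(1, n_products + 1)],
    )
    conn.commit()
    return conn


def fetch_one_by_one(conn, ids):
    """N+1 pattern: one query, and therefore one round trip, per product id."""
    rows = []
    for pid in ids:
        row = conn.execute(
            "SELECT id, name, price FROM products WHERE id = ?", (pid,)
        ).fetchone()
        if row is not None:
            rows.append(row)
    return rows


def fetch_batched(conn, ids):
    """Batched pattern: a single query with an IN clause for the whole page."""
    ids = list(ids)
    if not ids:
        return []
    placeholders = ",".join("?" for _ in ids)
    sql = f"SELECT id, name, price FROM products WHERE id IN ({placeholders}) ORDER BY id"
    return conn.execute(sql, ids).fetchall()
```

Both functions return the same rows for a sorted list of ids. Against a remote database, the batched version pays the network round-trip time once rather than once per id, which matters more after a migration that increases the distance between application and database.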

Profiling Application Code for Performance Bottlenecks

Profiling application code is essential for identifying performance bottlenecks related to latency. This involves systematically analyzing the application’s behavior to pinpoint areas where optimization efforts will yield the greatest impact.

- Instrumentation: Instrument the application code with logging statements and timers to measure the execution time of critical functions and operations. This provides a baseline understanding of performance. For in-process timing, consider a monotonic high-resolution clock such as Python’s `time.perf_counter()` or Java’s `System.nanoTime()`; Python’s `timeit` module is better suited to benchmarking small snippets in isolation.

- Profiling Tools: Employ profiling tools specific to the application’s programming language and platform. For instance, Java applications can be profiled using tools like JProfiler or YourKit, while Python applications can utilize tools like cProfile or py-spy. These tools provide detailed insights into CPU usage, memory allocation, and function call stacks.

- Load Testing: Conduct load testing to simulate realistic user traffic and identify performance degradation under stress. Tools like Apache JMeter or Gatling can simulate thousands of concurrent users, allowing you to assess how the application behaves under load and pinpoint bottlenecks that emerge under heavy traffic.

- Monitoring and Alerting: Implement continuous monitoring of key performance indicators (KPIs) such as response times, error rates, and resource utilization. Set up alerts to proactively detect performance degradation and trigger investigations. Tools like Prometheus and Grafana are effective for monitoring and visualization.
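The instrumentation and profiling steps above might look like the following in Python. This is a sketch under stated assumptions: `timed`, `build_report`, and `profile_call` are hypothetical names introduced for illustration, while `time.perf_counter`, `cProfile.Profile.runcall`, and `pstats.Stats` are standard-library calls.

```python
import cProfile
import functools
import io
import pstats
import time


def timed(func):
    """Record the wall-clock duration of each call in wrapper.durations (seconds)."""
    durations = []

    @functools.wraps(func)
    def wrapper(*args, **kwargs):
        start = time.perf_counter()
        try:
            return func(*args, **kwargs)
        finally:
            # Recorded even if the call raises, so failures are timed too.
            durations.append(time.perf_counter() - start)

    wrapper.durations = durations
    return wrapper


@timed
def build_report(n):
    """Hypothetical stand-in for a critical application function."""
    return sum(i * i for i in range(n))


def profile_call(func, *args, top=5):
    """Run func under cProfile; return (result, text summary of the top entries)."""
    profiler = cProfile.Profile()
    result = profiler.runcall(func, *args)
    out = io.StringIO()
    pstats.Stats(profiler, stream=out).sort_stats("cumulative").print_stats(top)
    return result, out.getvalue()
```

The decorator gives cheap, always-on timings suitable for logging, whereas `profile_call` is heavier and better reserved for targeted investigation of a function that the timings have already flagged.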

Code Optimization Techniques to Reduce Latency

Once performance bottlenecks are identified, several code optimization techniques can be applied to reduce latency in migrated applications.

- Algorithm Optimization: Review and optimize algorithms for time complexity, replacing inefficient algorithms with more efficient alternatives. For example, finding a specific item in a large unsorted list requires a linear search (O(n)). If the list is kept sorted, a binary search (O(log n)) reduces the search time dramatically; for repeated membership checks, a hash-based structure offers average O(1) lookups.

- Database Query Optimization: Optimize database queries by ensuring proper indexing, rewriting inefficient queries, and minimizing database round trips. Using indexes on frequently queried columns speeds up data retrieval.

- Caching: Implement caching mechanisms to store frequently accessed data in memory, reducing the need to repeatedly fetch data from the database or other slow sources. For example, caching the results of frequently executed database queries can significantly reduce the load on the database and improve response times.

- Code Refactoring: Refactor code to improve its structure, readability, and efficiency. This may involve breaking down large functions into smaller, more manageable units, removing redundant code, and improving the overall design.

- Asynchronous Operations: Use asynchronous operations to prevent blocking the main thread. For example, offload time-consuming tasks like sending emails or processing large files to background threads or queues. This keeps the application responsive while the tasks are being performed.

- Code Profiling and Optimization Cycle: The optimization process is iterative. Profile the application, identify bottlenecks, apply optimization techniques, and then re-profile to measure the impact of the changes. This cycle ensures that the optimization efforts are effective.
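Two of the techniques above, algorithm optimization and caching, can be sketched with the standard library. The function names and the `CALLS` counter are hypothetical; the counter simply stands in for a slow database round trip so that the effect of the cache is observable.

```python
import bisect
import functools


def contains_linear(items, target):
    """O(n) membership test: scans the list element by element."""
    for item in items:
        if item == target:
            return True
    return False


def contains_sorted(sorted_items, target):
    """O(log n) membership test; only valid if sorted_items is sorted ascending."""
    i = bisect.bisect_left(sorted_items, target)
    return i < len(sorted_items) and sorted_items[i] == target


CALLS = {"count": 0}  # counts how often the "slow" lookup actually runs


@functools.lru_cache(maxsize=1024)
def load_product(product_id):
    """Hypothetical slow lookup; returns an immutable tuple so cached values cannot be mutated."""
    CALLS["count"] += 1
    return (product_id, f"product-{product_id}")
```

Note that `functools.lru_cache` has no expiry, so data that changes in the source needs explicit invalidation (for example `load_product.cache_clear()`) or a cache with a time-to-live.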

Data Transfer and Synchronization

Data transfer and synchronization are critical components of application migration, directly impacting the perceived latency experienced by users. The speed at which data is moved and kept consistent across environments dictates the downtime, the performance of the new application during the cutover phase, and the overall user experience. Efficient data handling minimizes disruption and ensures data integrity, making it a key consideration in any migration strategy.

Impact of Data Transfer Speeds

The speed of data transfer during migration significantly affects application latency. A slow data transfer rate can lead to prolonged downtime, as the application cannot function fully until all data is available in the new environment. This downtime directly translates to increased latency for users, as they are unable to access the application’s features or data. Furthermore, slow transfer speeds can cause performance bottlenecks in the new environment if the application attempts to access data before it’s fully migrated, leading to sluggish response times and a poor user experience.

Factors influencing data transfer speeds include network bandwidth, the volume of data, the distance between the old and new environments, and the efficiency of the transfer protocols used.
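As a rough planning aid, the relationship between data volume and bandwidth can be turned into a back-of-the-envelope estimate. The sketch below assumes a single sustained transfer; the `efficiency` factor (protocol overhead, contention) is an assumed value that should be replaced with a measured one.

```python
def estimate_transfer_seconds(data_bytes, bandwidth_bits_per_s, efficiency=0.8):
    """Estimate transfer time as volume / effective bandwidth.

    efficiency is an assumed fraction of nominal bandwidth that is actually
    usable; 0.8 is a placeholder, not a measured figure.
    """
    if bandwidth_bits_per_s <= 0:
        raise ValueError("bandwidth must be positive")
    if not 0 < efficiency <= 1:
        raise ValueError("efficiency must be in (0, 1]")
    return data_bytes * 8 / (bandwidth_bits_per_s * efficiency)


# 1 TB (10**12 bytes) over a 1 Gbit/s link at the assumed 80% efficiency.
seconds = estimate_transfer_seconds(10**12, 10**9)
hours = seconds / 3600
```

Under these assumptions the transfer takes 10,000 seconds, or a little under three hours, which indicates whether a full copy fits inside an acceptable downtime window or whether an incremental approach is needed.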

Techniques for Data Synchronization

Data synchronization techniques are essential for minimizing latency during application migration. These methods ensure that data is consistent between the old and new application environments, allowing for a smooth transition and reducing the risk of data loss or corruption. Several strategies are employed, each with its own trade-offs regarding complexity, cost, and impact on application performance. Choosing the right synchronization method depends on the specific requirements of the application, the acceptable downtime, and the characteristics of the data.

Pros and Cons of Data Synchronization Methods

Various data synchronization methods exist, each with its advantages and disadvantages. Understanding these trade-offs is crucial for selecting the most appropriate technique for a given migration scenario.

- Full Data Replication: This method involves copying the entire dataset from the source environment to the target environment.

  - Pros: Simplest to implement, ensuring complete data consistency. Minimizes the risk of data loss.

  - Cons: Time-consuming, especially for large datasets. Requires significant network bandwidth. Can lead to prolonged downtime.

- Incremental Data Replication: Only changes made to the data (inserts, updates, deletes) are replicated.

  - Pros: Faster than full replication, as only changes are transferred. Reduces downtime. Less network bandwidth is required.

  - Cons: More complex to implement, requiring change data capture (CDC) mechanisms. Potential for data inconsistencies if CDC is not implemented correctly.

- Log-Based Replication: Changes are captured from the database transaction logs and applied to the target environment.

  - Pros: Near real-time data synchronization. Minimal downtime. Highly efficient for large datasets.

  - Cons: Requires specific database features (e.g., transaction logs). More complex to set up and manage. May require specialized tools.

- Two-Way Synchronization: Changes made in either the source or target environment are synchronized bidirectionally.

  - Pros: Allows for ongoing operation of both environments during migration. Enables gradual cutover.

  - Cons: Highly complex to implement. Requires conflict resolution mechanisms to handle conflicting changes. Increased risk of data inconsistencies if not carefully managed.

- Snapshot Replication: A point-in-time copy of the data is created and transferred.

  - Pros: Relatively simple to implement. Useful for reporting or analytical purposes.

  - Cons: Not suitable for applications requiring real-time data. Can lead to data inconsistencies if changes occur during the snapshot process.
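The core idea behind incremental replication can be sketched in a few lines: apply an ordered stream of change events to the target and remember the position of the last one applied. The `Change` record and `apply_changes` function are hypothetical and use an in-memory dictionary as the target; real CDC tooling additionally handles schema changes, transactions, and failure recovery.

```python
from dataclasses import dataclass
from typing import Any, Dict, Iterable, Optional


@dataclass(frozen=True)
class Change:
    seq: int                      # monotonically increasing position in the change log
    op: str                       # "insert", "update", or "delete"
    key: str
    value: Optional[Any] = None


def apply_changes(target: Dict[str, Any], changes: Iterable[Change], last_applied: int = 0) -> int:
    """Apply changes newer than last_applied in log order; return the new watermark."""
    for change in sorted(changes, key=lambda c: c.seq):
        if change.seq <= last_applied:
            continue  # already applied, so redelivered events are harmless
        if change.op in ("insert", "update"):
            target[change.key] = change.value
        elif change.op == "delete":
            target.pop(change.key, None)
        else:
            raise ValueError(f"unknown operation: {change.op}")
        last_applied = change.seq
    return last_applied
```

Tracking a watermark makes the apply step idempotent: replaying the same batch after a network interruption leaves the target unchanged, which addresses one common source of the inconsistencies noted above.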

Monitoring and Performance Tuning Post-Migration

Following the successful migration of an application, continuous monitoring and performance tuning become paramount to ensure optimal application responsiveness and identify potential latency issues. This proactive approach allows for timely adjustments and optimizations, guaranteeing a seamless user experience and efficient resource utilization.

Identifying Key Performance Indicators (KPIs) to Monitor Application Latency

Effective monitoring relies on the identification and tracking of specific Key Performance Indicators (KPIs) that directly reflect application latency. These KPIs provide actionable insights into the application’s performance, enabling targeted optimization efforts.

- Transaction Response Time: This KPI measures the time taken for a specific transaction or request to complete, from the user’s perspective. It is a critical indicator of overall application responsiveness. Examples include the time it takes for a user to log in, submit a form, or retrieve data.

- Server Response Time: This metric focuses on the time the server takes to respond to a client’s request. It’s a key indicator of server-side performance bottlenecks. Monitoring server response time helps pinpoint issues related to server hardware, application code, or database queries.

- Database Query Time: Slow database queries are a common source of latency. Tracking the execution time of individual queries, particularly those frequently executed, allows for identification of poorly optimized queries. This involves examining the time spent by the database in processing requests, including query execution, data retrieval, and index utilization.

- Network Latency: Network latency, encompassing the time it takes for data packets to travel between the client and the server, can significantly impact application performance. Monitoring network latency involves tracking metrics like round-trip time (RTT) and packet loss. High RTT values and packet loss indicate potential network issues.

- Error Rates: High error rates, such as HTTP 500 errors (server errors) or timeouts, can indicate underlying performance problems that indirectly contribute to latency. Monitoring error rates helps identify systemic issues within the application or infrastructure.

- Resource Utilization: Tracking resource utilization, including CPU usage, memory consumption, and disk I/O, is crucial for identifying bottlenecks. High resource utilization, particularly sustained peaks, can lead to performance degradation and increased latency.

Methods for Performance Tuning After Migration to Optimize Application Responsiveness

Post-migration performance tuning involves a multifaceted approach, encompassing code optimization, database tuning, network adjustments, and infrastructure scaling. The goal is to proactively address latency issues and ensure the application functions optimally.

- Code Optimization: Reviewing and optimizing the application code is essential for improving performance. This includes identifying and addressing inefficient algorithms, redundant operations, and areas where code can be streamlined. This involves analyzing code execution paths, profiling performance bottlenecks, and implementing best practices for coding efficiency.

- Database Tuning: Optimizing database performance is critical for reducing latency. This involves several strategies:

  - Query Optimization: Analyzing and optimizing database queries, including rewriting complex queries, adding indexes, and using query caching.

  - Index Optimization: Ensuring that appropriate indexes are in place to speed up data retrieval.

  - Database Caching: Implementing caching mechanisms to reduce the load on the database.

  - Database Schema Optimization: Reviewing and optimizing the database schema for performance.

- Network Optimization: Optimizing network performance involves addressing network latency issues. This includes:

  - Content Delivery Network (CDN) Implementation: Using a CDN to cache static content closer to users, reducing the distance data must travel.

  - Load Balancing: Distributing traffic across multiple servers to prevent overload and ensure high availability.

  - Network Configuration: Configuring network settings for optimal performance, including adjusting TCP/IP parameters.

- Infrastructure Scaling: Scaling the infrastructure to handle increased load. This involves adding more servers, increasing the capacity of existing servers, and implementing auto-scaling mechanisms. Horizontal scaling (adding more servers) is often preferred over vertical scaling (increasing the resources of a single server) for scalability and resilience.

- Caching Mechanisms: Employing caching strategies at various levels, including client-side caching (browser caching), server-side caching (e.g., using a caching proxy), and database caching, to reduce the load on servers and databases. Caching stores frequently accessed data in a faster storage medium, thereby improving response times.

- Application Profiling: Using profiling tools to identify performance bottlenecks in the application code. Profiling involves monitoring the application’s execution and identifying the parts of the code that are consuming the most resources or taking the longest time to execute. This helps pinpoint areas that need optimization.

Designing a Dashboard to Visualize Application Latency Metrics

A well-designed dashboard provides a centralized view of application latency metrics, enabling quick identification of performance issues and facilitating data-driven decision-making. The dashboard should present key metrics in an easily understandable format, with drill-down capabilities for detailed analysis.

The dashboard should include the following elements:

- Real-time Monitoring: Displaying real-time data on key performance indicators (KPIs), such as transaction response time, server response time, and database query time. The data should be updated frequently (e.g., every few seconds or minutes) to reflect the current application performance.

- Historical Data: Providing access to historical data to identify trends and patterns. This allows for comparison of performance over time and the identification of performance degradations. The historical data should be available in various time ranges (e.g., hourly, daily, weekly, monthly).

- Alerting and Notifications: Implementing alerts and notifications to notify relevant personnel of performance issues. Alerts should be triggered when specific KPIs exceed predefined thresholds. Notifications should be delivered through multiple channels (e.g., email, SMS, and messaging platforms).

- Data Visualization: Employing clear and concise visualizations to represent the data. Examples include:

  - Line Graphs: Used to display trends over time, such as transaction response time or server response time.

  - Bar Charts: Used to compare performance across different time periods or components.

  - Pie Charts: Used to show the distribution of errors or resource usage.

- Drill-Down Capabilities: Allowing users to drill down into specific metrics to investigate the root cause of performance issues. For example, clicking on a transaction response time spike could lead to detailed information about the affected transactions, including the associated database queries and server resources.

- Contextual Information: Providing context around the metrics, such as information about the application version, server configuration, and network environment. This helps in understanding the factors that might be influencing performance.

- User-Defined Dashboards: Allowing users to customize the dashboard to display the metrics that are most relevant to their roles and responsibilities. This enhances the dashboard’s usability and effectiveness.

An example of a dashboard could feature the following elements:

Top Section:

- Overall Application Status: A visual indicator (e.g., green for healthy, yellow for warning, red for critical) representing the overall application health.

- Key Metrics Summary: A table or card-based view displaying the current values of critical KPIs, such as average transaction response time, error rate, and server CPU utilization.

Middle Section:

- Time Series Graphs: Line graphs showing the trends of transaction response time, server response time, and database query time over time. These graphs would allow users to visually identify performance degradation.

- Error Rate Chart: A chart displaying the rate of different error types, such as HTTP 500 errors and database connection errors.

Bottom Section:

- Database Query Performance: A table or chart displaying the top slow database queries and their execution times. This helps in identifying and addressing database bottlenecks.

- Server Resource Utilization: Graphs showing CPU usage, memory consumption, and disk I/O for each server instance.

- Alerts and Notifications: A list of active alerts and notifications, indicating potential performance issues that require immediate attention.

This dashboard design, combined with appropriate alerting and notification systems, will allow for proactive identification and resolution of latency issues, contributing to a more stable and performant application post-migration.
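The overall status indicator and threshold-based alerts described for the dashboard can be derived with simple logic. The sketch below is illustrative only: the metric names and threshold values are hypothetical and would normally come from the application's SLAs.

```python
SEVERITY = {"green": 0, "yellow": 1, "red": 2}


def classify(value, warn, crit):
    """Map a metric value to a status, given warning and critical thresholds."""
    if value >= crit:
        return "red"
    if value >= warn:
        return "yellow"
    return "green"


def overall_status(metrics, thresholds):
    """Return (worst status, per-metric statuses); the worst metric drives the indicator."""
    statuses = {
        name: classify(value, *thresholds[name]) for name, value in metrics.items()
    }
    worst = max(statuses.values(), key=SEVERITY.__getitem__, default="green")
    return worst, statuses


# Hypothetical (warning, critical) thresholds per KPI.
THRESHOLDS = {
    "p95_response_ms": (300, 800),
    "error_rate_pct": (1.0, 5.0),
    "cpu_pct": (80, 95),
}

status, detail = overall_status(
    {"p95_response_ms": 250, "error_rate_pct": 0.2, "cpu_pct": 91}, THRESHOLDS
)
```

Here the CPU reading crosses its warning threshold, so the overall indicator turns yellow even though response time and error rate are healthy; the per-metric detail supports the drill-down behavior described earlier.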

Impact of Migration Strategy on Latency

![What is Application Migration - Definition and Guide [2025]](https://wp.ahmadjn.dev/wp-content/uploads/2025/06/5e470fa8d8f0f8cc6f9fd9281005cc42.jpg)

The chosen migration strategy significantly influences application latency. Different approaches introduce varying levels of downtime, data transfer complexity, and code changes, all of which directly affect the time users experience when interacting with the application. Understanding these impacts is crucial for selecting the most appropriate strategy to minimize performance degradation during and after migration.

Comparing Latency Impact of Different Migration Strategies

The selection of a migration strategy directly affects the application latency. Each strategy presents a unique set of challenges and opportunities regarding performance.

- Rehosting (Lift and Shift): This strategy, which involves moving an application to a new infrastructure with minimal changes, typically has the least immediate impact on latency. However, it might not fully leverage cloud-native features, potentially leading to suboptimal performance compared to other strategies. Latency can be affected by network configuration, especially if the new infrastructure is geographically distant from the users. The primary latency consideration here is data transfer time during the initial migration.

- Replatforming (Lift, Tinker, and Shift): This approach involves making some changes to the application to take advantage of cloud-based services, such as database or operating system upgrades, but does not fundamentally change the application’s architecture. It often leads to moderate latency improvements compared to rehosting because it can leverage cloud-optimized services. For example, moving a database to a cloud-managed service can reduce latency by improving query performance and reducing maintenance overhead. The main latency impact is during the database migration phase and any changes to application code to interface with new services.

- Refactoring (Re-architecting): Refactoring involves fundamentally redesigning the application to leverage cloud-native services and architectures. This approach can offer the greatest long-term latency improvements through architectural optimizations, but it carries the highest up-front cost and risk because of the development time and complexity involved in rewriting parts of the application and migrating data. Latency regressions are most likely to surface during the development and testing phases, as well as during the final data migration.

- Repurchasing: This strategy involves replacing the existing application with a software-as-a-service (SaaS) solution. It typically minimizes migration-related latency because the vendor handles most of the migration. However, it introduces latency related to network connectivity to the SaaS provider and potential integration complexities with other existing systems. The latency impact comes from the time it takes to migrate data to the SaaS platform and from network latency to the provider’s servers.

Impact of Cloud Provider or Infrastructure Choice on Application Latency

The choice of cloud provider and infrastructure significantly influences application latency, and it’s crucial to consider the provider’s global network, available services, and infrastructure design when planning a migration.

- Geographic Location of Data Centers: The physical location of the cloud provider’s data centers impacts latency. The closer the data centers are to the application users, the lower the latency will be. Consider the geographical distribution of your user base and select a cloud provider with data centers in those regions. For example, a company with users primarily in Europe might choose a cloud provider with data centers in Europe to minimize latency.

- Network Infrastructure: The cloud provider’s network infrastructure, including the quality of its backbone, interconnections, and content delivery networks (CDNs), impacts latency. A robust network infrastructure ensures fast data transfer and low latency. Consider a cloud provider with a global CDN to cache content closer to users.

- Service Offerings: The services offered by a cloud provider can impact latency. For example, cloud providers offer database services with various performance tiers and optimized configurations that can affect latency. Choosing a database service that meets the application’s performance requirements is critical. Similarly, selecting a provider with a managed CDN can help reduce latency by caching static content closer to users.

- Virtual Machine (VM) Performance: The performance characteristics of virtual machines (VMs), such as CPU, memory, and storage, impact application latency. Choosing appropriate VM sizes and configurations based on the application’s resource requirements is important. For example, if an application is CPU-bound, selecting VMs with high CPU performance will improve response times.

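- Availability Zones and Regions: Cloud providers typically offer multiple availability zones (AZs) within a region. Deploying an application across multiple AZs primarily improves resilience: if one AZ experiences an outage, traffic can be routed to another AZ within the same region, minimizing downtime and the latency spikes that accompany failures. Traffic that crosses AZs can add a small amount of network latency, so keep chatty components, such as an application server and its database, in the same AZ where possible.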
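Region selection is easier to reason about with measurements than with maps. The sketch below assumes round-trip-time samples (in milliseconds) have already been collected from representative user locations to each candidate region; the region names and sample values are hypothetical.

```python
import statistics


def pick_lowest_latency_region(rtt_samples_ms):
    """Return (best region, median RTT per region) from RTT samples in milliseconds."""
    medians = {
        region: statistics.median(samples)
        for region, samples in rtt_samples_ms.items()
        if samples  # skip regions with no measurements
    }
    if not medians:
        raise ValueError("no RTT samples provided")
    best = min(medians, key=medians.get)
    return best, medians


# Hypothetical measurements taken from a European user location.
samples = {
    "eu-west": [21.0, 19.5, 35.0],
    "us-east": [92.0, 95.5, 90.1],
}
best_region, median_rtt = pick_lowest_latency_region(samples)
```

The median is used rather than the mean so that a single slow sample, such as the 35 ms outlier above, does not distort the comparison.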

Examples of Organizations Mitigating Latency Issues During Migrations

Organizations commonly mitigate latency issues during migrations by combining several strategies. The following illustrative scenarios show how specific approaches can be applied.

- Using a CDN for Static Content: An e-commerce company migrating to the cloud used a CDN to cache static content such as images, videos, and JavaScript files. This reduced latency by delivering content from servers closer to the users, improving the overall user experience. The CDN significantly reduced the time it took for web pages to load, particularly for users located far from the origin servers.

- Database Optimization and Migration to Managed Services: A financial services company migrated its database to a cloud-managed database service, optimizing database queries and indexing strategies. This reduced database query times and improved application performance. They also used tools to minimize downtime during the database migration, ensuring minimal disruption to users.

- Phased Migration with Performance Monitoring: A media company adopted a phased migration approach, migrating parts of its application and monitoring performance closely. This allowed them to identify and address latency issues early in the process. They used performance monitoring tools to track key metrics and identify bottlenecks, enabling them to make adjustments as needed. This approach helped them minimize the impact on users.

- Network Optimization: A global software company optimized its network configuration by implementing private network connections between its on-premises data centers and the cloud provider. This reduced network latency and improved data transfer speeds. The company also used network monitoring tools to identify and resolve network congestion issues, ensuring optimal performance during and after the migration.

- Choosing the Right Region and Availability Zones: A healthcare provider deployed its application across multiple availability zones within a region to improve resilience and reduce latency. By distributing its application across multiple AZs, the provider ensured high availability and minimized downtime in case of an outage in one of the zones.

Testing and Validation

Rigorous testing is paramount throughout the migration process to ensure that application latency remains within acceptable bounds and that the migrated application functions as intended. This phase involves a multi-faceted approach, encompassing pre-migration baselining, in-flight validation, and post-migration performance assessments. Thorough testing allows for the identification of performance bottlenecks, validation of the chosen migration strategy, and confirmation that the application meets Service Level Agreements (SLAs) regarding latency and responsiveness. It also provides the necessary data to refine the migration plan and mitigate potential performance degradations.

Importance of Thorough Testing

The significance of comprehensive testing during and after migration lies in its ability to validate the performance characteristics of the application in its new environment. It serves as a critical quality control mechanism, identifying issues that could impact user experience and overall system performance.

- Performance Baseline Validation: Establishing a clear baseline of the application’s performance metrics before migration allows for a direct comparison with post-migration results. This comparison helps to isolate any performance regressions caused by the migration. For example, if the pre-migration average response time for a specific API call was 100ms, and post-migration it increases to 300ms, this indicates a significant latency issue that needs immediate attention.

- Risk Mitigation: Testing proactively identifies potential performance issues before they impact production users. Addressing these issues during the migration process reduces the risk of service disruptions and minimizes the impact on end-users. This includes testing for unexpected behaviors in the new environment, such as increased database query times or network congestion.

- Validation of Migration Strategy: Testing validates the effectiveness of the chosen migration strategy and the configuration of the new environment. If the testing reveals performance issues, it may be necessary to adjust the migration plan, such as optimizing database queries, increasing server resources, or fine-tuning network configurations.

- Compliance with SLAs: Testing ensures that the migrated application meets the established SLAs for latency, availability, and other performance metrics. Failing to meet SLAs can lead to financial penalties, reputational damage, and customer dissatisfaction.

- Optimization Opportunities: Testing data provides valuable insights into areas where the application can be further optimized for performance. This includes identifying inefficient code, poorly performing database queries, or network bottlenecks.
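The baseline comparison described above reduces to a small calculation. In this sketch the function names and the 10% tolerance are assumptions chosen for illustration; an appropriate tolerance depends on the SLA and on normal run-to-run variance.

```python
def latency_change_pct(baseline_ms, current_ms):
    """Percentage change of the current latency relative to the pre-migration baseline."""
    if baseline_ms <= 0:
        raise ValueError("baseline must be positive")
    return (current_ms - baseline_ms) / baseline_ms * 100


def is_regression(baseline_ms, current_ms, tolerance_pct=10.0):
    """Flag a regression when latency grows by more than tolerance_pct (assumed default)."""
    return latency_change_pct(baseline_ms, current_ms) > tolerance_pct


# The API call from the example above: 100 ms before migration, 300 ms after.
change = latency_change_pct(100, 300)
flagged = is_regression(100, 300)
```

For the 100 ms to 300 ms example, the change is a 200% increase and is flagged as a regression, whereas a move from 100 ms to 105 ms stays within the assumed tolerance.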

Comprehensive Testing Plan

A robust testing plan incorporates several key testing methodologies to thoroughly evaluate the application’s performance and identify potential latency issues. The plan should cover all critical aspects of the application and its infrastructure.

- Pre-Migration Baseline: Before any migration activities begin, establish a performance baseline of the existing application. This involves:

  - Identifying Key Performance Indicators (KPIs): Define the critical performance metrics to be tracked, such as response times for specific transactions, throughput (transactions per second), error rates, and resource utilization (CPU, memory, disk I/O, network bandwidth).

  - Instrumentation and Monitoring: Implement monitoring tools to capture the baseline performance data. This includes application performance monitoring (APM) tools, database monitoring tools, and network monitoring tools. Examples include tools like New Relic, Datadog, and Prometheus.

  - Load Testing: Simulate realistic user loads to assess the application’s performance under stress. Load tests should replicate typical user behavior and peak traffic scenarios.

  - Functional Testing: Verify the application’s core functionality to ensure that all features work as expected.

- In-Flight Validation: During the migration process, validate the performance of the application in the new environment at various stages.

  - Phased Migration: Employ a phased migration approach, migrating a small subset of users or functionality first. This allows for early performance testing and identification of issues before migrating the entire application.

  - Shadow Testing: Run the migrated application in parallel with the existing application, sending a copy of the production traffic to both. This allows for a direct comparison of performance metrics without impacting the production environment.

  - Regression Testing: Perform regression testing after each migration step to ensure that new changes haven’t introduced performance regressions.

- Post-Migration Performance Testing: After the migration is complete, conduct comprehensive performance testing to validate the overall performance of the migrated application.

  - Load Testing: Replicate production traffic patterns and simulate peak loads to assess the application’s performance under stress. Load testing tools like JMeter, Gatling, and LoadRunner can be used to simulate thousands of concurrent users.

  - Performance Testing: Analyze the application’s performance under different conditions, such as varying network conditions, database load, and server resource utilization.

  - Stress Testing: Push the application beyond its expected capacity to identify its breaking points and understand how it handles extreme loads.

  - Soak Testing: Run the application under sustained load for an extended period (e.g., 24 hours or more) to identify memory leaks, resource exhaustion, and other long-term performance issues.

- Testing Environment: Use a testing environment that closely mirrors the production environment in terms of hardware, software, and network configuration. This ensures that the test results accurately reflect the application’s performance in production.

- Test Data: Use realistic test data that represents the data volume and complexity of the production environment. This includes using a representative sample of data and masking sensitive information.

- Automation: Automate the testing process as much as possible to improve efficiency and repeatability. This includes automating test execution, data collection, and result analysis.
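To make the load-testing and automation steps concrete, the following sketch drives a callable with a fixed number of concurrent workers and collects basic results. It is a toy harness for illustration, not a substitute for tools such as JMeter or Gatling; `run_load` and `fake_request` are hypothetical names, and `fake_request` merely sleeps to imitate a request.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def run_load(request_fn, total_requests, concurrency):
    """Call request_fn total_requests times using `concurrency` worker threads."""

    def one_call(_):
        start = time.perf_counter()
        try:
            request_fn()
            ok = True
        except Exception:
            ok = False  # count failures instead of aborting the run
        return ok, time.perf_counter() - start

    started = time.perf_counter()
    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        results = list(pool.map(one_call, range(total_requests)))
    elapsed = time.perf_counter() - started

    latencies = [duration for ok, duration in results if ok]
    return {
        "requests": total_requests,
        "errors": sum(1 for ok, _ in results if not ok),
        "throughput_rps": total_requests / elapsed if elapsed > 0 else float("inf"),
        "max_latency_s": max(latencies, default=0.0),
        "latencies_s": latencies,
    }


def fake_request():
    """Hypothetical request: sleeps 10 ms in place of a real network call."""
    time.sleep(0.01)
```

A call such as `run_load(fake_request, total_requests=20, concurrency=5)` returns the error count, throughput, and per-request latencies, which can then be stored alongside the baseline for automated comparison.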

Interpreting Test Results and Adjusting the Migration Plan

The interpretation of test results is crucial for identifying and addressing latency issues. This involves analyzing the data collected during the testing phase and making adjustments to the migration plan as needed.

- Data Analysis: Analyze the test results to identify performance bottlenecks and areas where the application is experiencing latency issues. This includes:

  - Response Time Analysis: Examine the average, minimum, maximum, and percentile (for example, 95th and 99th) response times for critical transactions.

  - Throughput Analysis: Measure the number of transactions processed per second to identify performance limitations.

  - Error Rate Analysis: Track the number of errors occurring during testing, as a share of total requests, to identify potential issues.

  - Resource Utilization Analysis: Monitor CPU usage, memory usage, disk I/O, and network bandwidth to identify resource constraints.

- Identifying Bottlenecks: Pinpoint the specific components or processes that are contributing to latency issues. This may involve analyzing database query performance, network latency, code inefficiencies, or server resource limitations.

- Adjusting the Migration Plan: Based on the test results, make necessary adjustments to the migration plan to address identified latency issues. This may involve:

  - Code Optimization: Identify and optimize inefficient code segments that are contributing to latency. This may involve rewriting code, using more efficient algorithms, or caching frequently accessed data.

  - Database Optimization: Optimize database queries, indexes, and schema design to improve query performance.

  - Network Optimization: Address network latency issues by optimizing network configurations, reducing network hops, or using a content delivery network (CDN).

  - Resource Allocation: Increase server resources (CPU, memory, disk) or scale the application horizontally to handle increased load.

  - Configuration Tuning: Fine-tune server configurations, database configurations, and application settings to optimize performance.

- Iterative Process: Testing and optimization are iterative. After making adjustments to the migration plan, re-test the application to validate the effectiveness of the changes. Continue this cycle until the application meets the desired performance goals.

- Documentation: Document all test results, analysis, and adjustments made to the migration plan. This documentation serves as a valuable reference for future migrations and performance tuning efforts.
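The response time, throughput, and error rate analysis described above can be sketched in a few lines of Python. This is a minimal example under stated assumptions: the function names (`percentile`, `summarize`, `find_regressions`), the nearest-rank percentile method, the 10% tolerance, and the sample values are all illustrative choices, not a prescribed method.

```python
import math
import statistics
from typing import Dict, List


def percentile(samples: List[float], pct: float) -> float:
    """Nearest-rank percentile of a non-empty list of samples."""
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def summarize(latencies_ms: List[float], errors: int,
              duration_s: float) -> Dict[str, float]:
    """Reduce one test run to the metrics discussed above."""
    total_requests = len(latencies_ms) + errors
    return {
        "mean_ms": statistics.fmean(latencies_ms),
        "min_ms": min(latencies_ms),
        "max_ms": max(latencies_ms),
        "p50_ms": percentile(latencies_ms, 50),
        "p95_ms": percentile(latencies_ms, 95),
        "p99_ms": percentile(latencies_ms, 99),
        "throughput_rps": len(latencies_ms) / duration_s,
        "error_rate": errors / total_requests,
    }


def find_regressions(baseline: Dict[str, float],
                     candidate: Dict[str, float],
                     tolerance: float = 0.10) -> List[str]:
    """Latency metrics where the candidate is more than `tolerance` worse."""
    keys = ("p50_ms", "p95_ms", "p99_ms")
    return [k for k in keys if candidate[k] > baseline[k] * (1 + tolerance)]


if __name__ == "__main__":
    # Hypothetical samples: 100 requests before and after migration.
    before = summarize([float(i) for i in range(1, 101)], errors=0, duration_s=10.0)
    after = summarize([i * 1.2 for i in range(1, 101)], errors=5, duration_s=10.0)
    print("regressed metrics:", find_regressions(before, after))
```

Percentiles are compared rather than the mean alone because a small number of very slow requests can leave the average almost unchanged while noticeably degrading the experience for the affected users.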

Conclusion

The impact of migration on application latency is substantial and requires careful consideration at every stage. By proactively assessing potential bottlenecks, optimizing network performance, refining code, and implementing robust testing and monitoring strategies, organizations can effectively mitigate latency issues. The migration strategy, the cloud provider, and the data synchronization method each play a pivotal role in shaping the final performance outcome.

Ultimately, a well-planned and executed migration process, prioritizing performance and user experience, is essential for achieving a seamless transition and maximizing the benefits of application modernization.

FAQs

What are the primary causes of increased latency during application migration?

Increased latency during migration often stems from network bottlenecks, inefficient data transfer processes, database downtime or slow performance, and suboptimal code execution in the new environment. Inadequate testing and performance tuning also contribute to the problem.

How can I measure application latency before and after migration?

Latency can be measured using various tools, including application performance monitoring (APM) solutions, network monitoring tools, and synthetic transaction testing. Key metrics to track include response time, time to first byte (TTFB), and the time it takes for specific operations to complete. Pre-migration baselines are essential for comparison.
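For a quick baseline without a full APM product, time to first byte and total response time can be approximated with the Python standard library. The sketch below is a simplified illustration, and the function name `measure_request` is hypothetical. It handles plain HTTP only, includes connection setup in both timings, and treats the moment the response status line and headers have been read as the "first byte".

```python
import http.client
import time
from typing import Tuple


def measure_request(host: str, port: int, path: str = "/",
                    timeout: float = 5.0) -> Tuple[float, float]:
    """Return (approximate TTFB, total response time) in seconds for one GET.

    Both values include the time needed to open the TCP connection.
    """
    conn = http.client.HTTPConnection(host, port, timeout=timeout)
    try:
        start = time.perf_counter()
        conn.request("GET", path)      # connects, then sends the request
        response = conn.getresponse()  # returns once status line and headers are read
        ttfb = time.perf_counter() - start
        response.read()                # drain the body
        total = time.perf_counter() - start
    finally:
        conn.close()
    return ttfb, total
```

Calling this repeatedly against the same endpoint before and after migration, from the same client location, yields comparable samples. A single measurement is too noisy to serve as a baseline.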

What are the trade-offs between different data synchronization methods during migration?

Data synchronization methods differ in complexity, cost, and effect on latency. A one-time full copy is typically the simplest to set up, but it requires significant bandwidth and usually a longer cutover window. Incremental replication takes more effort to configure up front, but because it keeps the target continuously in sync it can minimize downtime at cutover. Choosing the right method depends on the application’s requirements and the acceptable level of downtime.
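The bandwidth side of this trade-off is simple arithmetic: transfer time is roughly the data volume divided by the usable link bandwidth. The sketch below uses hypothetical figures (a 1 TB dataset, 2 GB of changes, a 1 Gbit/s link) and an assumed efficiency factor of 0.8 for protocol overhead. It ignores compression, parallel streams, and round-trip latency, so treat the result as an order-of-magnitude estimate.

```python
def transfer_seconds(data_bytes: float, link_bits_per_second: float,
                     efficiency: float = 0.8) -> float:
    """Estimate how long it takes to copy data_bytes over a network link.

    efficiency is the assumed fraction of nominal bandwidth that is usable.
    """
    if not 0 < efficiency <= 1:
        raise ValueError("efficiency must be in (0, 1]")
    return data_bytes * 8 / (link_bits_per_second * efficiency)


if __name__ == "__main__":
    link = 1e9  # 1 Gbit/s, hypothetical
    full_copy = transfer_seconds(1e12, link)    # 1 TB full copy
    delta_copy = transfer_seconds(2e9, link)    # 2 GB of accumulated changes
    print(f"full copy:   {full_copy / 3600:.1f} hours")
    print(f"incremental: {delta_copy:.0f} seconds")
```

Under these assumptions the full copy takes hours while the final incremental sync takes seconds, which is why incremental replication tends to shorten the cutover window.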

How does the choice of cloud provider affect application latency?

The cloud provider’s infrastructure, including its network, storage, and compute resources, significantly impacts latency. Factors such as data center locations, network peering arrangements, and the performance of underlying hardware influence the overall application responsiveness. Selecting a provider with optimal infrastructure for the application’s needs is crucial.