The pursuit of operational efficiency has driven the evolution of IT infrastructure, culminating in the concept of NoOps: a paradigm shift aiming to minimize or eliminate the need for dedicated operational teams. This approach, particularly when coupled with serverless computing, promises to streamline application deployment, management, and scaling, fundamentally altering how we build and run software.

This exploration delves into the core tenets of NoOps, dissecting its principles, objectives, and practical implementations. We’ll examine the symbiotic relationship between NoOps and serverless technologies, analyzing how serverless architectures facilitate the automation, self-service, and event-driven characteristics essential for a true NoOps environment. Furthermore, we’ll investigate the critical components such as automation, monitoring, and security within a NoOps framework, and discuss strategies for optimizing costs and navigating the challenges inherent in this transformative approach.

Defining NoOps

NoOps represents a significant paradigm shift in how software is deployed, managed, and operated. It moves away from the traditional model of dedicated operations teams and towards a model where operational responsibilities are significantly reduced or even automated. This evolution is driven by the increasing adoption of cloud computing, automation tools, and serverless architectures.

Core Principles of NoOps and Differentiation from Traditional IT Operations

NoOps is underpinned by several core principles that differentiate it from traditional IT operations. These principles aim to minimize the manual effort required to manage infrastructure and applications, allowing developers to focus on writing code and delivering business value.

- Automation: Automation is a cornerstone of NoOps. It involves using scripts, configuration management tools, and orchestration platforms to automate tasks such as provisioning, deployment, scaling, and monitoring. This reduces the need for manual intervention and minimizes the risk of human error.

- Abstraction: NoOps leverages abstraction to hide the underlying infrastructure complexity from developers. Cloud platforms and serverless technologies provide this abstraction by managing the servers, operating systems, and other infrastructure components.

- Self-Service: Self-service capabilities empower developers to manage their own resources and deploy applications without relying on operations teams. This typically involves providing developers with access to self-service portals and APIs.

- Event-Driven Architecture: Event-driven architectures are central to NoOps. Systems react to events, such as resource utilization thresholds or application errors, triggering automated responses like scaling or remediation. This approach enables proactive management and reduces the need for manual intervention.

- Infrastructure as Code (IaC): IaC treats infrastructure as code, enabling developers to define and manage infrastructure resources through code. This allows for version control, automated deployments, and consistent configurations.

Traditional IT operations, in contrast, often rely heavily on manual processes, dedicated operations teams, and a siloed approach to development and operations. This can lead to slower release cycles, increased operational overhead, and a higher risk of errors. The core difference lies in the degree of automation and the shift in responsibility for operational tasks.

Definition of NoOps for a Technical Audience

For a technical audience, NoOps can be defined as an operational philosophy and set of practices aimed at automating and abstracting infrastructure management to the point where the operational overhead is significantly reduced or eliminated. This allows development teams to focus on application development and innovation.The goals and objectives of NoOps include:

- Reduced Operational Overhead: Minimizing the time and resources spent on managing infrastructure and operations.

- Faster Time to Market: Accelerating the software development and deployment cycles.

- Increased Agility: Enabling rapid adaptation to changing business requirements.

- Improved Reliability: Reducing the risk of errors and improving the overall stability of systems.

- Cost Optimization: Optimizing resource utilization and reducing operational costs.

The core objective is to free up developers and other technical staff from the burden of managing infrastructure, enabling them to focus on building and delivering valuable software.

Key Characteristics of a NoOps Environment

A NoOps environment is characterized by several key features that support its goals. These characteristics contribute to the automation, self-service, and event-driven nature of the environment.

- Automation: Automation is pervasive across all aspects of the application lifecycle, from infrastructure provisioning and deployment to monitoring and scaling. Tools like Terraform, Ansible, and Jenkins are commonly used.

- Self-Service Capabilities: Developers have self-service access to the resources they need, such as compute instances, databases, and storage. This is often facilitated through APIs, cloud consoles, and Infrastructure as Code (IaC).

- Event-Driven Architectures: Systems are designed to react to events in real-time. For example, an application might automatically scale up in response to increased traffic or trigger a notification when an error occurs. Serverless functions and message queues are often used to implement these architectures.

- Continuous Integration and Continuous Delivery (CI/CD): CI/CD pipelines automate the build, test, and deployment processes, enabling faster release cycles and more frequent updates.

- Monitoring and Observability: Comprehensive monitoring and observability tools provide insights into system performance and behavior. This allows for proactive identification and resolution of issues.

- Immutable Infrastructure: Infrastructure is treated as immutable, meaning that it is not modified after deployment. Instead, new versions of infrastructure are deployed to replace the existing ones.

- Serverless Technologies: Serverless computing platforms abstract away the underlying infrastructure, allowing developers to focus on writing code without managing servers.

The implementation of these characteristics varies depending on the specific technologies and platforms used. However, the overall goal is to create an environment where operational tasks are automated, infrastructure is abstracted, and developers have the autonomy to manage their applications.

Serverless Computing Overview

Serverless computing has emerged as a transformative paradigm in cloud computing, promising to alleviate operational burdens associated with application deployment and management. It allows developers to focus primarily on writing code, while the cloud provider handles the underlying infrastructure, including server provisioning, scaling, and maintenance. This section provides a comprehensive overview of serverless computing, exploring its fundamental characteristics, practical applications, and benefits.

Fundamental Characteristics of Serverless Computing

Serverless computing is characterized by several key features that distinguish it from traditional cloud computing models. These characteristics fundamentally alter the way applications are designed, deployed, and operated.

- Pay-per-use: One of the defining features of serverless computing is its pay-per-use pricing model. Users are charged only for the actual compute time and resources consumed by their code. This contrasts with traditional cloud services, where users often pay for provisioned resources, regardless of actual utilization. For example, if a function runs for 100 milliseconds and consumes a certain amount of memory, the user is billed only for those specific resources used during that time.

This can result in significant cost savings, particularly for applications with intermittent workloads or unpredictable traffic patterns.

- Automatic scaling: Serverless platforms automatically scale compute resources up or down based on demand. This eliminates the need for manual scaling configurations or capacity planning. When an event triggers a function, the platform automatically provisions the necessary resources to execute the function. If the demand increases, the platform automatically spins up additional instances of the function to handle the load. This dynamic scaling ensures that applications can handle fluctuating workloads without manual intervention.

For instance, a website using serverless functions to process image uploads can automatically scale to handle a sudden surge in traffic during a promotional campaign, without requiring any manual adjustments to the underlying infrastructure.

- Event-driven execution: Serverless functions are typically triggered by events, such as HTTP requests, database updates, or scheduled timers. This event-driven architecture allows applications to react to specific events in real-time. When an event occurs, the serverless platform automatically invokes the corresponding function. This architecture enables the creation of highly responsive and scalable applications. Examples include triggering a function to resize an image when it is uploaded to an object storage service or running a function to send a notification when a new user registers.

- No server management: Serverless platforms abstract away the underlying server infrastructure. Developers do not need to provision, manage, or maintain servers. The cloud provider handles all aspects of server management, including patching, security updates, and capacity planning. This frees developers from operational tasks, allowing them to focus on writing code and building applications.

Serverless Platforms and Services Offered by Major Cloud Providers

Major cloud providers have embraced serverless computing, offering a wide range of platforms and services to support various use cases. These platforms provide the infrastructure and tools necessary for developing, deploying, and managing serverless applications.

- AWS Lambda: Amazon Web Services (AWS) Lambda is a widely adopted serverless compute service that allows developers to run code without provisioning or managing servers. Developers can upload their code as functions, and Lambda automatically executes the code in response to events, such as HTTP requests, database changes, or scheduled triggers. Lambda supports various programming languages, including Node.js, Python, Java, Go, and .NET.

- Azure Functions: Microsoft Azure Functions is a serverless compute service that enables developers to run event-triggered code without managing infrastructure. Azure Functions supports a variety of programming languages, including C#, JavaScript, Python, and Java. It integrates seamlessly with other Azure services, such as Azure Storage, Azure Cosmos DB, and Azure Event Hubs.

- Google Cloud Functions: Google Cloud Functions is a serverless execution environment that allows developers to run code in response to events. Cloud Functions supports several programming languages, including Node.js, Python, Go, Java, and .NET. It integrates with other Google Cloud services, such as Cloud Storage, Cloud Pub/Sub, and Cloud Firestore.

- Other Serverless Services: Cloud providers also offer a range of other serverless services, including:

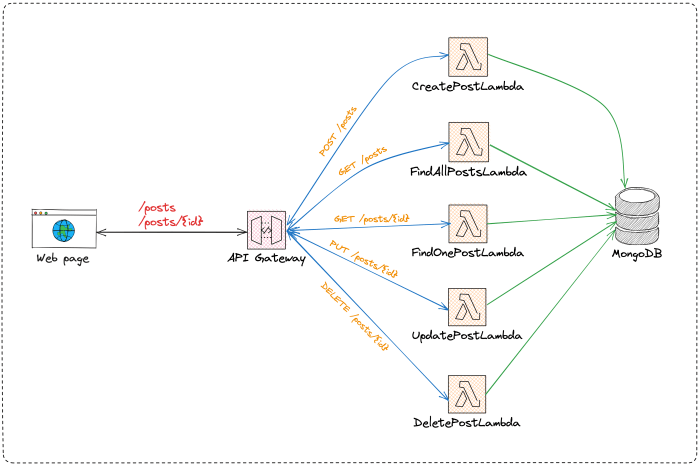

- API Gateway: Services like AWS API Gateway, Azure API Management, and Google Cloud API Gateway provide a managed service for creating, publishing, maintaining, monitoring, and securing APIs. They handle tasks like request routing, authentication, and authorization.

- Object Storage: Services like AWS S3, Azure Blob Storage, and Google Cloud Storage provide scalable and durable object storage for storing and retrieving data.

- Databases: Serverless databases like AWS DynamoDB, Azure Cosmos DB, and Google Cloud Firestore offer managed NoSQL databases that automatically scale and provide high availability.

- Event Streaming: Services like AWS Kinesis, Azure Event Hubs, and Google Cloud Pub/Sub enable real-time data streaming and event processing.

Simplification of Application Deployment and Management in Serverless Architectures

Serverless architectures significantly simplify application deployment and management compared to traditional architectures. This simplification translates to reduced operational overhead, faster development cycles, and improved agility.

- Simplified deployment: Serverless platforms streamline the deployment process. Developers typically package their code and upload it to the serverless platform. The platform automatically handles the deployment, including provisioning the necessary resources and configuring the execution environment. This eliminates the need for manual server setup, configuration, and deployment processes.

- Reduced operational overhead: Serverless architectures reduce operational overhead by abstracting away server management tasks. Cloud providers handle server provisioning, scaling, patching, and security updates. This allows developers to focus on writing code and building applications rather than managing infrastructure.

- Faster development cycles: Serverless platforms enable faster development cycles. Developers can quickly deploy and test code changes without waiting for server provisioning or configuration. The pay-per-use pricing model also encourages experimentation and rapid prototyping.

- Improved scalability and resilience: Serverless architectures automatically scale to handle fluctuating workloads. The platform dynamically adjusts the number of function instances based on demand, ensuring that applications can handle peak loads without manual intervention. Serverless platforms also provide built-in resilience, with automatic failover and redundancy.

- Cost optimization: The pay-per-use pricing model of serverless computing can lead to significant cost savings. Users are charged only for the resources they consume, which can be particularly beneficial for applications with intermittent workloads or unpredictable traffic patterns. This allows for more efficient resource utilization and reduces the overall cost of ownership. For example, a company might use serverless functions to process image thumbnails.

They are only charged when images are uploaded, and when the function processes them, rather than paying for an always-on server that sits idle most of the time.

The Relationship: NoOps and Serverless

Serverless computing has emerged as a key enabler of NoOps, fundamentally altering how applications are deployed, managed, and scaled. The close relationship between these two concepts stems from the shared goal of minimizing operational overhead and allowing developers to focus on writing code. This section will delve into the ways serverless technologies facilitate NoOps practices, contrasting them with traditional infrastructure management and highlighting the advantages of adopting serverless for achieving NoOps goals.

Serverless Technologies Enabling NoOps Practices

Serverless architecture inherently supports NoOps by abstracting away infrastructure management tasks. Developers no longer need to provision, configure, or maintain servers. This shift allows teams to focus on application logic and feature development, rather than operational concerns.

- Automated Scaling: Serverless platforms automatically scale resources based on demand. This eliminates the need for manual capacity planning and scaling operations, which are time-consuming and prone to errors in traditional environments. For example, a web application built with serverless functions can handle sudden traffic spikes without requiring manual intervention to provision additional servers.

- Reduced Operational Burden: The serverless provider handles tasks such as server patching, security updates, and infrastructure maintenance. This significantly reduces the operational burden on development teams, freeing up resources for other priorities. This is in stark contrast to traditional infrastructure management, where these tasks require dedicated teams and significant effort.

- Event-Driven Architecture: Serverless architectures often leverage event-driven models. Events trigger function execution, promoting loose coupling and enabling highly responsive applications. This design simplifies debugging and reduces the impact of individual component failures. Consider an e-commerce platform where an order placement event triggers a series of functions, such as inventory update, payment processing, and notification sending.

- Pay-per-Use Pricing: Serverless platforms typically offer pay-per-use pricing models, meaning users are charged only for the compute time and resources consumed. This eliminates the need for pre-provisioned capacity and reduces costs, particularly during periods of low or intermittent activity. This contrasts with traditional infrastructure, where resources are often paid for regardless of utilization.

Comparing Traditional Infrastructure Management with Serverless Approaches in the Context of NoOps

Traditional infrastructure management requires significant operational effort, including server provisioning, configuration management, monitoring, and scaling. Serverless computing, on the other hand, abstracts away these responsibilities, allowing developers to focus on application code.

| Feature | Traditional Infrastructure | Serverless Approach |

|---|---|---|

| Server Management | Requires manual server provisioning, configuration, and maintenance. | Abstracted away by the serverless provider. No server management is required. |

| Scaling | Requires manual capacity planning and scaling operations. | Automatic scaling based on demand. |

| Operational Overhead | High operational overhead, including server patching, security updates, and monitoring. | Significantly reduced operational overhead. |

| Cost Model | Typically involves fixed costs for infrastructure, regardless of utilization. | Pay-per-use pricing, based on actual resource consumption. |

| Deployment | Complex and time-consuming deployment processes. | Simplified deployment processes, often involving code uploads and configuration. |

Advantages of Using Serverless for Achieving NoOps, such as Reduced Operational Overhead

The advantages of using serverless for achieving NoOps are numerous, primarily centered around the reduction of operational overhead. This translates to increased developer productivity, faster time-to-market, and lower operational costs.

- Increased Developer Productivity: Developers can focus on writing code and building features, rather than managing infrastructure. This leads to faster development cycles and improved productivity. For example, a team using serverless can iterate on application features more quickly than a team managing its own servers.

- Faster Time-to-Market: The simplified deployment and management processes of serverless enable faster time-to-market for new applications and features. This allows businesses to respond quickly to market demands and gain a competitive advantage.

- Reduced Operational Costs: Pay-per-use pricing models and automated scaling can significantly reduce operational costs. Organizations only pay for the resources they consume, and they can avoid the costs associated with managing and maintaining servers. For instance, a company that migrates a batch processing workload to serverless functions may experience a significant reduction in infrastructure costs, especially during periods of low activity.

- Improved Scalability and Reliability: Serverless platforms are designed to handle massive scale and provide high availability. Applications built on serverless architectures can automatically scale to handle traffic spikes, ensuring a consistent user experience.

- Enhanced Security: Serverless providers often handle security updates and patching, reducing the attack surface and improving the overall security posture. This allows organizations to focus on application-level security concerns.

Automation and Orchestration in NoOps

The effective implementation of NoOps hinges on robust automation and orchestration strategies. Serverless architectures, by their very nature, necessitate a high degree of automation to manage the lifecycle of functions, scale resources dynamically, and respond to incidents proactively. This section delves into the specific mechanisms and tools that enable this level of automation, providing a detailed analysis of their functionalities and implications within a NoOps framework.

Designing a System for Automating Deployment and Scaling of Serverless Functions

Automating the deployment and scaling of serverless functions is crucial for achieving the agility and efficiency promised by NoOps. This involves a combination of Continuous Integration and Continuous Delivery (CI/CD) pipelines, automated infrastructure provisioning, and dynamic scaling policies.A well-designed system for this purpose should encompass the following key elements:

- Automated CI/CD Pipelines: These pipelines streamline the build, testing, and deployment processes. They automatically trigger deployments upon code changes, ensuring rapid and consistent releases. A typical CI/CD pipeline might include:

- Code repository integration (e.g., Git) for version control and collaboration.

- Automated build processes, including dependency management and code compilation.

- Automated testing, including unit tests, integration tests, and potentially end-to-end tests.

- Automated deployment to the serverless platform (e.g., AWS Lambda, Azure Functions, Google Cloud Functions).

- Automated rollback mechanisms in case of deployment failures.

- Infrastructure-as-Code (IaC): IaC tools define and provision infrastructure resources programmatically. This enables consistent and repeatable deployments. IaC tools can define all aspects of the serverless environment, including:

- Function configurations (memory, timeout, runtime).

- Event triggers (API Gateway, S3 buckets, CloudWatch events).

- Networking configurations (VPCs, security groups).

- Access control and permissions (IAM roles and policies).

- Automated Scaling Policies: Serverless platforms automatically scale functions based on demand. However, it’s crucial to configure scaling policies to optimize performance and cost. This includes:

- Configuring concurrency limits to prevent resource exhaustion.

- Setting up auto-scaling triggers based on metrics like invocation count, error rate, and latency.

- Implementing cost optimization strategies, such as limiting maximum concurrent executions.

- Monitoring and Alerting: Real-time monitoring of function performance and health is essential for proactive management. This includes:

- Collecting metrics such as invocation count, duration, errors, and cold starts.

- Setting up alerts based on predefined thresholds for critical metrics.

- Integrating with logging and tracing tools to diagnose issues.

A practical example of such a system could leverage tools like AWS CodePipeline for CI/CD, AWS CloudFormation or Terraform for IaC, and AWS Lambda’s built-in auto-scaling capabilities. The CI/CD pipeline would automatically deploy new code to the functions, while IaC manages the underlying infrastructure, and the scaling policies ensure the functions can handle varying workloads.

The Role of Infrastructure as Code (IaC) Tools in a NoOps and Serverless Context

Infrastructure as Code (IaC) tools are fundamental to achieving NoOps principles in a serverless environment. They enable the automated provisioning, management, and versioning of all infrastructure resources, reducing manual intervention and human error.The benefits of using IaC in a NoOps and serverless context are multifaceted:

- Automation and Consistency: IaC tools automate the creation and configuration of infrastructure resources, ensuring consistency across deployments. This eliminates the risk of manual configuration errors and ensures that all environments (development, staging, production) are configured identically.

- Repeatability and Reproducibility: IaC allows infrastructure to be defined as code, which can be versioned and reused. This enables the easy creation of new environments and the consistent replication of existing ones.

- Faster Deployment and Rollbacks: IaC tools significantly speed up the deployment process. If an issue arises, the infrastructure can be quickly rolled back to a previous state, minimizing downtime.

- Improved Collaboration: Infrastructure code can be managed using version control systems, enabling teams to collaborate on infrastructure changes and track changes over time.

- Enhanced Security: IaC tools enable the implementation of security best practices consistently across all environments. Security configurations, such as access control policies, can be defined as code and enforced automatically.

- Cost Optimization: IaC facilitates the implementation of cost optimization strategies by enabling the automatic provisioning and de-provisioning of resources based on demand.

Popular IaC tools used in serverless environments include:

- Terraform: A widely adopted open-source tool for infrastructure provisioning. Terraform supports multiple cloud providers and can manage a wide range of resources.

- AWS CloudFormation: AWS’s native IaC service, specifically designed for managing AWS resources.

- Azure Resource Manager (ARM): Microsoft’s IaC service for managing Azure resources.

- Serverless Framework: An open-source framework specifically designed for deploying and managing serverless applications across multiple cloud providers. It simplifies the definition of serverless functions and their associated infrastructure.

These tools allow infrastructure to be described in declarative configurations, such as YAML or JSON files. For example, a simple CloudFormation template might define an AWS Lambda function, an API Gateway endpoint, and an IAM role. The template is then used to provision and manage these resources automatically.

Creating a Procedure for Automating Incident Response and Remediation in a Serverless Environment

Automating incident response and remediation is critical for maintaining high availability and minimizing the impact of issues in a serverless environment. This requires a proactive approach that combines monitoring, alerting, and automated actions.A robust automated incident response procedure should incorporate the following elements:

- Comprehensive Monitoring: Implement comprehensive monitoring across all aspects of the serverless application, including function performance, API latency, error rates, and resource utilization. This should include:

- Function-level monitoring: Tracks invocation counts, durations, errors, and cold starts.

- API Gateway monitoring: Monitors request rates, latency, and error rates.

- Database monitoring: Monitors database performance and availability.

- External service monitoring: Monitors the health and performance of any external services the application depends on.

- Effective Alerting: Define clear alerts based on predefined thresholds for critical metrics. Alerts should be routed to the appropriate teams or individuals, based on the severity of the issue. This may include:

- Severity levels: Define severity levels (e.g., critical, warning, informational) to prioritize alerts.

- Notification channels: Integrate with notification channels (e.g., email, Slack, PagerDuty) to ensure timely communication.

- Alerting rules: Configure alerts based on metrics like error rates exceeding a threshold, latency exceeding a threshold, or resource utilization reaching capacity.

- Automated Remediation: Implement automated actions to address common issues and reduce the need for manual intervention. This could include:

- Automatic scaling: Automatically scale functions up or down based on demand or resource utilization.

- Rollback mechanisms: Automatically roll back to a previous version of the code or configuration in case of a deployment failure or performance degradation.

- Circuit breakers: Implement circuit breakers to prevent cascading failures by temporarily disabling calls to failing services.

- Restarting instances: Automatically restart functions or other services if they become unresponsive.

- Incident Management Workflow: Establish a well-defined incident management workflow that includes:

- Incident detection: The system automatically detects incidents based on monitoring and alerting rules.

- Incident notification: The system notifies the relevant teams or individuals about the incident.

- Incident investigation: The teams investigate the root cause of the incident using logs, metrics, and tracing data.

- Incident remediation: The teams implement the necessary remediation steps, including automated actions and manual intervention.

- Post-incident review: Conduct a post-incident review to identify the root cause, lessons learned, and areas for improvement.

- Logging and Tracing: Implement comprehensive logging and tracing to provide visibility into the application’s behavior and facilitate troubleshooting. This includes:

- Centralized logging: Aggregate logs from all functions and services into a central location.

- Distributed tracing: Implement distributed tracing to track requests as they flow through the application.

- Contextual logging: Include relevant context information (e.g., request IDs, user IDs) in logs to facilitate debugging.

An example of such a procedure could involve using AWS CloudWatch for monitoring and alerting, AWS Lambda for automated scaling, and AWS Step Functions to orchestrate complex remediation workflows. If an error rate threshold is exceeded, an alert is triggered, and a Step Function could automatically scale the affected function, roll back the deployment, and notify the on-call team. This automated approach minimizes downtime and reduces the burden on operations teams.

Monitoring and Observability in a NoOps World

Monitoring and observability are critical pillars for success in a NoOps environment, particularly when leveraging serverless architectures. The inherent complexities of distributed systems, ephemeral resources, and automated deployments demand robust mechanisms to understand application behavior, identify issues, and ensure optimal performance. Without effective monitoring and observability, the benefits of NoOps, such as reduced operational overhead and increased agility, are significantly diminished.

In a serverless and NoOps setup, monitoring and observability are not just optional; they are essential for operational stability.

Importance of Monitoring and Observability

Effective monitoring and observability are paramount in a serverless and NoOps context. They provide the insights needed to manage and maintain applications without direct hands-on operational tasks.Observability provides a comprehensive understanding of a system’s internal states from external outputs. This includes:

- Understanding Application Behavior: Detailed logging, metrics, and tracing allow for understanding how the application functions, identifying bottlenecks, and assessing performance.

- Proactive Issue Identification: Real-time monitoring and anomaly detection enable teams to identify and address issues before they impact users.

- Performance Optimization: Analyzing metrics and traces helps pinpoint areas for optimization, such as code efficiency or resource allocation.

- Automated Incident Response: Alerting and automated actions can be triggered based on predefined thresholds, allowing the system to self-heal or mitigate issues without manual intervention.

- Capacity Planning: Monitoring resource usage and performance trends facilitates accurate capacity planning and resource allocation.

Methods for Collecting and Analyzing Logs, Metrics, and Traces

Collecting and analyzing logs, metrics, and traces in serverless applications requires specialized tools and techniques, given the distributed and event-driven nature of these architectures.Logs, metrics, and traces serve distinct but interconnected purposes in achieving full observability:

- Logs: Provide detailed information about individual events and actions within the application. These are invaluable for debugging and understanding the sequence of events.

- Collection: Serverless platforms, such as AWS Lambda, Azure Functions, and Google Cloud Functions, automatically generate logs. These logs can be streamed to centralized logging services.

- Analysis: Tools like the Elastic Stack (Elasticsearch, Logstash, Kibana), Splunk, and cloud-provider-specific services (e.g., AWS CloudWatch Logs, Azure Monitor Logs, Google Cloud Logging) can be used to search, analyze, and visualize logs.

- Best Practices: Structured logging, using formats like JSON, allows for easier parsing and analysis. Including context-specific information, such as request IDs and correlation IDs, helps to trace requests across different services.

- Metrics: Quantifiable measurements of system performance and behavior. They provide a high-level overview of application health and performance.

- Collection: Serverless platforms automatically collect many standard metrics (e.g., invocation counts, execution times, error rates). Custom metrics can be emitted using platform-specific APIs.

- Analysis: Monitoring tools, like Prometheus, Grafana, and cloud-provider-specific monitoring services, can be used to visualize and analyze metrics.

- Best Practices: Defining key performance indicators (KPIs) and setting appropriate thresholds is essential for effective monitoring. Metrics should be aligned with business goals and user experience.

- Traces: Track the flow of requests across distributed systems. They provide end-to-end visibility into request processing, helping to identify bottlenecks and performance issues.

- Collection: Distributed tracing systems, such as Jaeger, Zipkin, and AWS X-Ray, can be integrated with serverless functions. OpenTelemetry is a standard for instrumenting applications to generate traces.

- Analysis: Tracing tools provide visualizations of request flows, allowing developers to identify slow components and dependencies.

- Best Practices: Instrumenting code with tracing libraries and using correlation IDs is crucial for tracing requests across different services and platforms.

Implementing Proactive Alerting and Anomaly Detection

Proactive alerting and anomaly detection are essential components of a NoOps architecture, enabling automated responses to issues and minimizing downtime.Automated alerts and anomaly detection systems ensure that operational issues are identified and addressed promptly:

- Alerting Strategies: Alerting systems trigger notifications when predefined thresholds are breached. These alerts can be sent to various channels, such as email, Slack, or incident management tools.

- Threshold-based Alerts: Set thresholds for key metrics (e.g., error rates, latency, resource utilization).

- Anomaly Detection: Use machine learning algorithms to identify unusual patterns in metrics and trigger alerts.

- Example: Anomaly detection can automatically flag an unusual spike in error rates or a sudden increase in latency.

- Anomaly Detection Techniques: Employing various techniques to identify deviations from normal behavior.

- Statistical Methods: Techniques like moving averages, standard deviations, and control charts.

- Machine Learning: Using algorithms like time series analysis, clustering, and classification.

- Example: A system can learn the normal traffic patterns of a website and automatically detect when traffic drops significantly, indicating a potential outage or issue.

- Automated Incident Response: Implement automated responses to alerts, such as scaling resources, restarting services, or rolling back deployments.

- Example: If a service experiences high latency, the system can automatically scale up the resources allocated to the service.

- Integration: Integrate alerting and incident management systems with automation tools (e.g., Infrastructure as Code, configuration management).

- Integration with Automation: Seamlessly integrate monitoring and alerting with automated infrastructure management and deployment pipelines. This integration enables automated responses to detected anomalies.

Challenges of Achieving NoOps

While the promise of NoOps, especially when coupled with serverless computing, is compelling, the path to its realization is fraught with challenges. These hurdles range from technological complexities to organizational and cultural shifts. Understanding these challenges is crucial for organizations aiming to successfully adopt a NoOps strategy.

Technical Complexity and Vendor Lock-in

Adopting NoOps with serverless introduces several technical complexities. These issues can arise from the underlying serverless platform itself and the services integrated within the environment.

- Platform Maturity and Stability: Serverless platforms, while rapidly evolving, are not always as mature or stable as traditional infrastructure. This can lead to unexpected downtime, performance issues, and debugging difficulties. For instance, a study by Gartner in 2023 highlighted that a significant percentage of serverless deployments experience unexpected errors due to platform-specific limitations, especially in the early stages of adoption.

- Debugging and Troubleshooting: Debugging serverless applications can be significantly more complex than debugging traditional applications. The distributed nature of serverless functions, the lack of direct access to underlying infrastructure, and the ephemeral nature of function instances make it challenging to pinpoint the root cause of issues. Tools and techniques for distributed tracing and log aggregation are essential, but they add to the complexity.

- Vendor Lock-in: Serverless platforms often come with vendor lock-in. Services are typically tightly coupled with specific cloud providers, making it difficult to migrate applications to different platforms or even to hybrid environments. This lock-in can limit flexibility and increase costs over time, especially if pricing models change or if the provider’s service offerings evolve in ways that don’t align with the organization’s needs.

The cost of migrating a complex serverless application to another cloud provider can be substantial, potentially involving significant refactoring and retraining.

- Limited Control and Customization: Serverless environments often offer limited control and customization options compared to traditional infrastructure. Organizations have less control over the underlying hardware, operating systems, and network configurations. This can be a significant limitation for applications that require specific hardware or software configurations or those with very stringent performance or security requirements.

Skill Set and Cultural Shifts

Successful NoOps implementation necessitates significant shifts in the skill sets of the operations team and the overall organizational culture. This involves adopting new technologies and practices and fostering a collaborative environment.

- Evolving Skill Requirements: Traditional operations roles need to evolve. Instead of managing servers, teams must focus on code, automation, and infrastructure as code (IaC). This requires expertise in programming languages, CI/CD pipelines, monitoring tools, and cloud-specific services. The shift demands continuous learning and adaptation.

- Cross-Functional Collaboration: NoOps thrives on collaboration between development, operations, and security teams. Silos must be broken down, and teams must work together to automate processes, monitor applications, and respond to incidents. This requires establishing clear communication channels, shared responsibilities, and a culture of shared ownership.

- Automation Mindset: Automation is at the heart of NoOps. Teams must embrace automation as a core principle, automating all aspects of the application lifecycle, from deployment and scaling to monitoring and incident response. This requires a willingness to invest in automation tools, develop IaC scripts, and embrace a DevOps mindset.

- Cultural Resistance to Change: Organizational resistance to change can hinder NoOps adoption. Teams may be reluctant to adopt new technologies or processes, or they may be resistant to sharing responsibilities. Overcoming this resistance requires strong leadership, clear communication, and a commitment to training and education.

Security Considerations and Best Practices

Security in a NoOps serverless environment requires a proactive and multifaceted approach. This involves addressing unique security challenges and adopting best practices to protect applications and data.

- Identity and Access Management (IAM): Proper IAM is crucial in serverless environments. Securing access to resources requires careful management of permissions and roles. Using the principle of least privilege, granting only the necessary permissions to each function or service, minimizes the attack surface. Implementing multi-factor authentication (MFA) for all users is also vital.

- Function Security: Securing individual serverless functions is critical. This includes validating input data, sanitizing output, and protecting against common web vulnerabilities such as SQL injection and cross-site scripting (XSS). Regularly scanning function code for vulnerabilities and using security-focused linters are essential.

- Network Security: While serverless environments abstract away much of the network infrastructure, network security considerations remain important. Implementing network controls such as VPCs (Virtual Private Clouds) and security groups to restrict access to resources is crucial. Using API gateways with security features such as rate limiting and request validation can further enhance network security.

- Monitoring and Logging: Comprehensive monitoring and logging are essential for detecting and responding to security incidents. Implementing centralized logging, analyzing logs for suspicious activity, and setting up alerts for security-related events are crucial. Using security information and event management (SIEM) systems can help correlate security events and provide a holistic view of the security posture.

- Compliance and Governance: Ensuring compliance with relevant regulations and industry standards is essential. This requires implementing security controls that meet compliance requirements, such as data encryption, access controls, and audit trails. Automating compliance checks and using configuration management tools can help ensure that the environment remains compliant over time.

Cost Optimization in a NoOps Serverless Environment

Optimizing costs is paramount in a NoOps serverless environment, as the pay-per-use model necessitates meticulous management to avoid unexpected expenses. Serverless architectures offer significant cost advantages through their inherent scalability and resource efficiency. However, without proper monitoring and control, costs can quickly escalate. This section delves into strategies, methods, and procedures for achieving cost-effective serverless deployments.

Strategies for Optimizing Costs in a Serverless Architecture

Several strategies can be employed to optimize costs in a serverless architecture, focusing on resource utilization, code efficiency, and service selection.

- Right-Sizing Resources: Precisely configuring resource allocations, such as memory and execution time, for serverless functions is crucial. Over-provisioning leads to unnecessary costs. For instance, in AWS Lambda, the memory allocated directly impacts the CPU power available to the function. Testing functions with different memory settings and monitoring performance metrics like execution time and error rates allows for finding the optimal balance between performance and cost.

For example, a function processing image thumbnails might initially be allocated 512MB of memory, but after monitoring, it’s determined that 256MB is sufficient without impacting performance, resulting in a 50% cost reduction for each invocation.

- Optimizing Code Efficiency: Efficiently written code minimizes execution time, thereby reducing costs. Code optimization includes reducing the size of dependencies, minimizing the number of function calls, and using efficient algorithms. Smaller function packages reduce cold start times, which in turn reduces the total execution time and cost. For instance, a poorly optimized function might take 500ms to execute, while an optimized version achieves the same result in 100ms.

If the function is invoked 100,000 times a day, the optimized version would result in significant cost savings.

- Leveraging Serverless-Specific Cost Optimization Features: Cloud providers often offer specific features to help reduce costs. For example, AWS Lambda offers provisioned concurrency, which pre-warms function instances to reduce cold start times and costs for predictable workloads. Google Cloud Functions has automatic scaling, which ensures resources are allocated only when needed. Azure Functions provides various pricing tiers, allowing users to select the most cost-effective option based on their usage patterns.

- Choosing the Right Services: Selecting the appropriate serverless services for specific tasks is vital. For example, using a managed service like AWS S3 for storing static assets is often more cost-effective than running a custom solution on EC2. Similarly, using managed databases like Amazon DynamoDB can reduce the operational overhead and cost compared to self-managed databases.

- Implementing Caching Strategies: Caching frequently accessed data can significantly reduce costs by minimizing the number of calls to backend services. For instance, caching API responses using Amazon CloudFront or caching database query results using Redis can reduce the load on serverless functions and databases, thereby lowering costs.

Methods for Monitoring and Controlling Serverless Spending

Effective monitoring and control are essential for preventing cost overruns in a serverless environment. Various methods and tools facilitate this.

- Setting Up Cost Alerts and Budgets: Cloud providers offer features for setting budgets and alerts to proactively monitor spending. AWS Budgets, Google Cloud Billing Budgets, and Azure Cost Management + Billing allow users to define spending thresholds and receive notifications when those thresholds are approached or exceeded. These alerts enable timely intervention to prevent unexpected cost spikes.

- Utilizing Cost Analysis Tools: Cloud providers provide cost analysis tools to analyze spending patterns, identify cost drivers, and optimize resource utilization. AWS Cost Explorer, Google Cloud Cost Management, and Azure Cost Management + Billing offer detailed insights into spending across different services, regions, and resource tags. These tools help users understand where their money is being spent and identify areas for optimization.

- Implementing Resource Tagging: Tagging resources with relevant metadata, such as project names, application names, and cost centers, allows for granular cost tracking and allocation. This enables organizations to attribute costs accurately and understand the spending patterns of different teams and projects.

- Monitoring Function Execution Metrics: Monitoring key metrics such as execution time, memory usage, and invocation counts is critical for identifying cost optimization opportunities. CloudWatch in AWS, Cloud Monitoring in Google Cloud, and Azure Monitor provide detailed insights into these metrics. Analyzing these metrics helps identify inefficient functions and areas for code optimization.

- Regularly Reviewing and Auditing Serverless Infrastructure: Periodic reviews of the serverless infrastructure, including function configurations, service selections, and resource allocations, are essential. This helps identify and address cost optimization opportunities and ensure that the infrastructure remains aligned with business requirements.

Procedure for Using Cost-Effective Serverless Services

Implementing a systematic procedure ensures the use of cost-effective serverless services. This involves planning, implementation, and continuous monitoring.

- Planning and Design: During the initial design phase, carefully consider the requirements of the application and select the appropriate serverless services. Evaluate different service options and pricing models to determine the most cost-effective solution.

- Resource Provisioning and Configuration: When provisioning serverless resources, carefully configure the settings, such as memory allocation and execution time limits, to optimize cost. Test the performance of functions with different configurations to identify the optimal balance between cost and performance.

- Code Optimization and Deployment: Write efficient code that minimizes execution time and resource consumption. Use code optimization techniques to reduce the size of dependencies and minimize the number of function calls. Deploy the optimized code and continuously monitor its performance.

- Cost Monitoring and Analysis: Implement cost monitoring tools and set up alerts to track spending and identify potential cost overruns. Regularly analyze cost data to understand spending patterns and identify areas for optimization.

- Iterative Optimization and Refinement: Based on the analysis of cost data, make iterative improvements to the serverless infrastructure. Optimize function configurations, code, and service selections to reduce costs. Continuously monitor the impact of these optimizations and refine the approach as needed.

Real-World Examples and Case Studies

The adoption of NoOps, particularly in conjunction with serverless architectures, is not merely a theoretical concept. Several organizations have successfully implemented NoOps strategies, demonstrating the tangible benefits of reduced operational overhead, increased agility, and cost optimization. These case studies provide concrete examples of how serverless implementations have transformed IT operations.

Successful NoOps Implementations

Several companies have leveraged serverless technologies to achieve a significant degree of NoOps, focusing on automating infrastructure management and streamlining deployments. These companies have been able to redirect resources from operational tasks to innovation and product development.

- Netflix: Netflix has a well-documented history of embracing cloud technologies, including serverless, to handle its massive streaming workloads. They utilize serverless functions for various tasks, including video encoding, data processing, and API management. Their adoption of serverless allows them to scale resources automatically based on demand, significantly reducing the need for manual intervention and infrastructure provisioning. This has allowed them to focus on core business activities such as content delivery and user experience.

The scalability and cost-effectiveness of serverless have played a crucial role in managing their global infrastructure and delivering content to millions of users worldwide.

- Coca-Cola: Coca-Cola has adopted serverless architectures to build and deploy applications more quickly and efficiently. They use serverless functions for tasks like processing data from IoT devices and managing customer interactions. This approach enables Coca-Cola to react more quickly to market changes and deliver new features to their customers. The shift to serverless has reduced their operational overhead and allowed their developers to focus on business logic rather than infrastructure management.

This has accelerated the pace of innovation and improved their ability to compete in the rapidly evolving beverage market.

- iRobot: iRobot, the maker of Roomba robotic vacuum cleaners, uses serverless to process data from its connected devices. They employ serverless functions to handle telemetry data, analyze user behavior, and update the robot’s software. This allows iRobot to provide a better user experience and improve the functionality of their products. The serverless architecture enables them to scale their infrastructure automatically based on the volume of data received from their devices.

This has significantly reduced their operational costs and allowed them to focus on data analysis and product improvement.

Demonstrating Reduced Operational Overhead with Serverless

Serverless architectures inherently reduce operational overhead by abstracting away infrastructure management. This shift allows teams to focus on writing code and delivering business value rather than managing servers, patching, and scaling. The examples below highlight how serverless has decreased the burden of operational tasks.

- Reduced Infrastructure Management: Serverless platforms handle server provisioning, scaling, and patching automatically. This eliminates the need for manual configuration and maintenance of servers, which frees up IT staff to focus on more strategic initiatives. For example, a company using serverless functions for its API backend does not need to worry about the underlying infrastructure that supports those functions. The platform automatically scales the resources based on the incoming traffic, reducing the need for manual scaling efforts.

- Automated Scaling and High Availability: Serverless platforms automatically scale resources based on demand, ensuring high availability and optimal performance. This eliminates the need for manual scaling, which can be time-consuming and error-prone. If a serverless function experiences a sudden surge in traffic, the platform automatically provisions additional resources to handle the load. This automatic scaling ensures that the application remains responsive even during peak usage times.

- Simplified Deployment and Updates: Serverless platforms simplify the deployment and update processes, allowing developers to deploy code quickly and efficiently. This reduces the time and effort required to deploy new features and updates. For example, developers can upload new code for a serverless function and have it deployed automatically without needing to manage the underlying infrastructure.

Specific Serverless Implementations and Outcomes

The successful application of serverless is often reflected in specific implementations and their resulting outcomes. These implementations often showcase measurable improvements in areas such as cost, performance, and development velocity.

- API Gateways: Many organizations use serverless API gateways to manage their APIs. This allows them to handle authentication, authorization, and rate limiting without needing to manage the underlying infrastructure. For example, a company might use an API gateway to expose its backend services to external clients. The API gateway handles the authentication and authorization of incoming requests, allowing developers to focus on building the API logic.

The outcomes often include improved security, reduced latency, and lower operational costs.

- Event-Driven Architectures: Serverless functions are commonly used to build event-driven architectures, where functions are triggered by events such as file uploads, database updates, or message queues. This allows organizations to build highly scalable and responsive applications. For example, a company might use serverless functions to process images uploaded to a cloud storage service. When a new image is uploaded, a function is triggered to resize the image and store it in different formats.

The outcomes include increased agility, improved scalability, and reduced operational overhead.

- Data Processing Pipelines: Serverless functions are often used to build data processing pipelines, where data is processed and transformed in real-time. This allows organizations to gain insights from their data quickly and efficiently. For example, a company might use serverless functions to process clickstream data from its website. The functions can aggregate the data, analyze user behavior, and generate reports in real-time.

The outcomes often include faster time-to-insights, improved data quality, and reduced operational costs.

Serverless and CI/CD Integration

Integrating serverless functions with Continuous Integration and Continuous Deployment (CI/CD) pipelines is crucial for automating the software development lifecycle, ensuring faster release cycles, and maintaining code quality. This integration streamlines the process from code commit to deployment, reducing manual intervention and potential errors. The following sections detail the strategies, examples, and pipeline designs necessary for effective CI/CD integration with serverless applications.

Integrating Serverless Functions with CI/CD Pipelines

The integration of serverless functions with CI/CD pipelines typically involves a series of automated steps triggered by code changes. These steps encompass version control, build processes, automated testing, and deployment. Successful integration depends on choosing the right tools and configuring the pipeline to align with the specific serverless platform and application requirements.

- Version Control Integration: The process begins with version control systems such as Git. Developers commit code changes to a repository, which triggers the CI/CD pipeline. The pipeline then pulls the latest code version.

- Build Process: This step involves packaging the serverless function code, dependencies, and any necessary configuration files. Build tools specific to the programming language and serverless platform are utilized. For instance, Node.js functions might use npm or yarn to manage dependencies, while Python functions might use pip.

- Automated Testing: This critical step validates the functionality and quality of the serverless functions. Various testing strategies are employed, as discussed in the next section.

- Deployment: The deployment step packages the built artifacts and deploys them to the serverless platform (e.g., AWS Lambda, Azure Functions, Google Cloud Functions). This process includes configuring infrastructure resources, managing environment variables, and updating function configurations.

- Monitoring and Rollback: Following deployment, the pipeline often incorporates monitoring tools to track function performance and health. In case of deployment failures or performance degradation, automated rollback mechanisms can revert to a previous, stable version of the function.

Automated Testing Strategies for Serverless Applications

Automated testing is fundamental to ensuring the reliability and maintainability of serverless applications. Several testing strategies are employed to validate the functionality and performance of serverless functions. These strategies range from unit tests to end-to-end tests, each serving a specific purpose in the testing process.

- Unit Testing: Unit tests verify the functionality of individual serverless functions or their internal components in isolation. They focus on testing specific code blocks and ensuring they behave as expected. Unit tests are typically fast and easy to execute, providing rapid feedback on code changes. For example, testing a function that calculates the sum of two numbers would involve creating test cases with various input values and verifying the output.

- Integration Testing: Integration tests verify the interaction between different components of a serverless application, including serverless functions, databases, and external APIs. These tests ensure that the components work together correctly. For instance, testing the interaction between an API Gateway and a Lambda function, including the passing of data and the handling of responses.

- End-to-End (E2E) Testing: E2E tests simulate real-world user scenarios, testing the entire application from the user interface to the backend services. They validate the overall functionality of the application and its ability to handle user requests. For example, an E2E test might simulate a user submitting a form on a website, triggering a Lambda function to process the form data and store it in a database.

- Performance Testing: Performance tests evaluate the performance of serverless functions under different load conditions. They measure metrics such as response time, throughput, and error rates to identify potential bottlenecks and ensure the application can handle the expected traffic. For instance, using tools like JMeter or Gatling to simulate a large number of concurrent requests to a Lambda function and measure its performance.

- Security Testing: Security tests identify vulnerabilities and ensure that serverless functions are secure. These tests can include static code analysis, dynamic analysis, and penetration testing. Tools like OWASP ZAP or custom security scanners can be used to identify potential security issues.

Designing a CI/CD Pipeline for Deploying Serverless Functions

A well-designed CI/CD pipeline automates the build, test, and deployment processes for serverless functions. This pipeline should incorporate version control, automated testing, infrastructure-as-code, and rollback mechanisms to ensure efficient and reliable deployments. The specific steps and tools used will depend on the serverless platform and the complexity of the application.

- Version Control: The pipeline begins with a version control system, such as Git. Developers commit code changes to a repository. This triggers the CI/CD pipeline.

- Build Stage: The build stage retrieves the latest code version from the repository. It then builds the serverless function code, packages dependencies, and creates deployment artifacts. This stage may involve using build tools specific to the programming language and serverless platform.

- Testing Stage: This stage executes automated tests, including unit tests, integration tests, and E2E tests. The tests validate the functionality and performance of the serverless functions. The pipeline can be configured to fail the build if any tests fail, preventing the deployment of potentially broken code.

- Deployment Stage: The deployment stage deploys the built artifacts to the serverless platform. This involves configuring infrastructure resources, such as Lambda functions, API Gateways, and databases, and deploying the function code. This stage can use Infrastructure-as-Code (IaC) tools like Terraform or AWS CloudFormation to automate the provisioning and management of infrastructure.

- Monitoring and Rollback: After deployment, the pipeline incorporates monitoring tools to track function performance and health. In case of deployment failures or performance degradation, automated rollback mechanisms can revert to a previous, stable version of the function.

- Infrastructure as Code (IaC): IaC enables the definition and management of infrastructure resources using code. Tools like Terraform, AWS CloudFormation, and Azure Resource Manager allow defining infrastructure components such as functions, APIs, and databases in a declarative manner. This approach enables consistent, repeatable deployments and simplifies infrastructure management. For instance, defining an AWS Lambda function, an API Gateway endpoint, and a DynamoDB table in a Terraform configuration file, and then using the CI/CD pipeline to apply these configurations, automatically provisioning the resources.

- Rollback Mechanisms: Rollback mechanisms are essential for ensuring the stability of serverless applications. These mechanisms enable reverting to a previous, stable version of a function in case of deployment failures or performance degradation. Rollback strategies include versioning (e.g., using Lambda versions and aliases) and blue/green deployments.

Security Considerations in NoOps

The adoption of NoOps, particularly in serverless environments, introduces a unique set of security challenges. While the operational burden is shifted, the responsibility for security remains paramount. A comprehensive security strategy is essential to protect serverless applications and the sensitive data they handle. This involves understanding the specific vulnerabilities inherent in serverless architectures and implementing robust security practices.

Security Challenges in a NoOps Environment

The shift towards NoOps and serverless computing introduces several security challenges that must be addressed. The distributed nature of serverless applications, the reliance on third-party services, and the ephemeral nature of function instances necessitate a proactive and adaptive security approach.

- Increased Attack Surface: Serverless applications often comprise numerous, interconnected functions, APIs, and third-party services. This distributed architecture expands the potential attack surface, making it more difficult to monitor and secure. The use of multiple vendors and services increases the complexity of managing security policies.

- Dependency Management: Serverless functions frequently rely on external libraries and dependencies. Vulnerabilities within these dependencies can be exploited to compromise the application. Managing and patching these dependencies is a continuous process, requiring automation and vigilance.

- Ephemeral Nature of Functions: Serverless functions are created, executed, and terminated automatically. This ephemeral nature makes traditional security practices, such as static analysis and persistent logging, more challenging. Security measures must be integrated into the build and deployment pipelines.

- Lack of Infrastructure Control: In a NoOps environment, developers often have limited control over the underlying infrastructure. This can make it difficult to implement security controls at the network or operating system level. Security must be focused on the application code and the configuration of serverless services.

- Shared Responsibility Model: The security responsibility is shared between the cloud provider and the application developer. Understanding the division of responsibility is crucial for implementing appropriate security measures. The cloud provider secures the infrastructure, while the developer secures the application and its data.

Security Best Practices for Serverless Functions and Data

Implementing security best practices is crucial for mitigating risks in serverless environments. These practices should be applied throughout the development lifecycle, from coding to deployment and monitoring.

- Least Privilege Principle: Functions should be granted only the minimum necessary permissions to access resources. This minimizes the impact of a compromised function. Use IAM roles and policies to restrict access to specific resources.

- Input Validation: Validate all input data to prevent injection attacks and other vulnerabilities. Sanitize user input to remove potentially malicious code. This is a critical defense against common web application attacks.

- Secure Coding Practices: Follow secure coding practices to prevent vulnerabilities such as SQL injection, cross-site scripting (XSS), and cross-site request forgery (CSRF). Regularly review code for security flaws. Utilize static and dynamic analysis tools to identify vulnerabilities.

- Encryption: Encrypt data at rest and in transit to protect sensitive information. Use HTTPS for all communication. Employ encryption keys managed by a secure key management service.

- Regular Updates and Patching: Keep serverless functions, dependencies, and runtime environments up-to-date with the latest security patches. Automate the patching process to minimize the time window for exploitation.

- Secrets Management: Store sensitive information, such as API keys and database credentials, securely. Use a secrets management service to protect and manage these secrets. Avoid hardcoding secrets in the code.

- Logging and Monitoring: Implement comprehensive logging and monitoring to detect and respond to security incidents. Collect logs from all functions and services. Use monitoring tools to identify anomalies and suspicious activity.

Securing Serverless Applications: Authentication, Authorization, and Data Protection

Securing serverless applications involves a multi-layered approach that encompasses authentication, authorization, and robust data protection mechanisms. These elements are essential for ensuring the confidentiality, integrity, and availability of the application and its data.

- Authentication: Implement robust authentication mechanisms to verify the identity of users and applications. Use industry-standard authentication protocols, such as OAuth 2.0 or OpenID Connect, to securely authenticate users. Consider multi-factor authentication (MFA) for enhanced security.

- Authorization: Implement granular authorization policies to control access to resources. Use role-based access control (RBAC) to define permissions based on user roles. Regularly review and update authorization policies to align with business requirements.

- Data Protection at Rest: Encrypt data stored in databases, object storage, and other data stores. Use encryption keys managed by a secure key management service. Implement data loss prevention (DLP) measures to prevent unauthorized access to sensitive data.

- Data Protection in Transit: Use HTTPS for all communication between serverless functions and other services. Implement Transport Layer Security (TLS) to encrypt data in transit. Regularly update TLS certificates to ensure security.

- API Security: Secure APIs using authentication and authorization mechanisms. Implement API gateways to manage API access, rate limiting, and monitoring. Protect APIs against common attacks, such as denial-of-service (DoS) and injection attacks.

- Security Auditing and Compliance: Conduct regular security audits to identify vulnerabilities and assess compliance with security standards. Implement automated security testing and vulnerability scanning. Maintain detailed documentation of security controls and procedures.

- Example: Consider a serverless e-commerce application. User authentication could be handled via Amazon Cognito, providing secure user sign-up, sign-in, and access control. Authorization could be managed using IAM roles, granting functions access to specific resources like product databases or payment gateways based on user roles (e.g., customer, administrator). Data at rest in a DynamoDB database would be encrypted, and data in transit between the user’s browser and the serverless functions would be secured using HTTPS.

API Gateway could manage API access, enforcing rate limits to prevent abuse.

Future Trends and Evolution

The trajectory of NoOps and serverless computing is poised for significant advancements, driven by evolving technological landscapes and the increasing demand for agility and efficiency in software development and deployment. This evolution will not only refine existing practices but also introduce new paradigms, impacting how organizations manage and operate their IT infrastructure.

Emerging Technologies Impacting NoOps and Serverless

Several emerging technologies are expected to significantly influence the future of NoOps and serverless computing. These technologies will enhance automation, improve resource management, and further reduce operational overhead.

- Artificial Intelligence (AI) and Machine Learning (ML): AI and ML will play a crucial role in automating various operational tasks. Specifically, they will be used for predictive scaling, anomaly detection, and intelligent resource allocation. For instance, AI-powered systems can analyze historical traffic patterns to proactively scale serverless functions, preventing performance bottlenecks and optimizing costs. ML algorithms can also detect unusual behavior in application logs, enabling faster identification and resolution of issues.

This proactive approach will reduce the need for manual intervention and improve system reliability.

- Edge Computing: Edge computing, which involves processing data closer to the source, will be increasingly integrated with serverless architectures. This combination enables low-latency applications, particularly for use cases like IoT, content delivery, and real-time data processing. Serverless functions can be deployed to edge locations, reducing the distance data needs to travel and improving responsiveness. For example, a smart factory could use serverless functions at the edge to analyze sensor data in real-time, triggering automated responses without relying on centralized cloud resources.

- WebAssembly (Wasm): WebAssembly is a binary instruction format that allows code written in various languages to be executed in web browsers and other environments. Its adoption in serverless environments will offer enhanced portability and performance. Developers will be able to write serverless functions in a wider range of languages and execute them across different platforms with improved efficiency. This can be beneficial for computationally intensive tasks like image processing or scientific simulations.

- Service Mesh: Service meshes will become more prevalent in managing and securing serverless applications. They provide a dedicated infrastructure layer for service-to-service communication, enabling features like traffic management, security, and observability. In a serverless context, service meshes can handle complex routing rules, implement security policies, and provide detailed insights into the interactions between serverless functions and other services. This can simplify the management of microservices architectures.

- Serverless Databases: The emergence of serverless databases will further simplify database management and integration with serverless applications. These databases automatically scale, require no manual provisioning, and offer pay-per-use pricing models. This aligns perfectly with the serverless philosophy of eliminating operational overhead and optimizing costs. Examples include database offerings from major cloud providers.

Forecast of NoOps and Serverless Evolution

The next few years will witness a rapid evolution of NoOps and serverless computing, with several key trends shaping the landscape. These trends are expected to accelerate the adoption of serverless architectures and transform how organizations approach software development and IT operations.

- Increased Automation and Orchestration: Automation will become even more sophisticated, with AI-powered tools handling a wider range of tasks. Orchestration platforms will provide greater control and visibility over serverless deployments, enabling complex workflows and streamlined management. This will further reduce the need for manual intervention and improve the efficiency of operations.

- Enhanced Observability and Monitoring: Observability tools will provide deeper insights into the performance and behavior of serverless applications. This will involve advanced metrics, distributed tracing, and automated anomaly detection. Improved monitoring will enable developers and operations teams to quickly identify and resolve issues, ensuring optimal application performance.

- Focus on Security and Compliance: Security will remain a top priority, with increased emphasis on securing serverless functions and the data they access. Organizations will adopt robust security measures, including automated vulnerability scanning, identity and access management (IAM), and compliance frameworks. Serverless platforms will offer more built-in security features and compliance certifications to streamline the implementation of security best practices.

- Wider Adoption Across Industries: Serverless computing will expand beyond its current use cases, with increased adoption in various industries, including finance, healthcare, and manufacturing. The benefits of serverless, such as scalability, cost-effectiveness, and reduced operational overhead, will appeal to a wider range of organizations. Serverless will become the preferred architecture for new applications and gradually replace traditional infrastructure in existing systems.

- Standardization and Interoperability: Efforts to standardize serverless technologies and improve interoperability will continue. This will allow developers to easily move applications between different cloud providers and use a wider range of tools and services. Open-source initiatives and industry collaborations will play a key role in driving standardization and fostering innovation in the serverless ecosystem.

Final Wrap-Up

In conclusion, the convergence of NoOps and serverless technologies represents a significant advancement in IT operations. While challenges exist, the potential for reduced operational overhead, increased agility, and enhanced cost efficiency is undeniable. By embracing automation, robust monitoring, and proactive security measures, organizations can move closer to realizing the vision of a truly operational-free environment, ultimately enabling them to focus on innovation and delivering value to their users.

FAQ

What is the primary goal of NoOps?

The primary goal of NoOps is to minimize or eliminate the need for IT operations teams by automating and abstracting operational tasks, allowing developers to focus on writing code rather than managing infrastructure.

How does serverless contribute to achieving NoOps?

Serverless computing enables NoOps by abstracting away the underlying infrastructure management. Developers deploy code without managing servers, and the cloud provider handles scaling, patching, and other operational tasks, aligning perfectly with NoOps principles.

What are the main challenges of adopting NoOps?