Kubernetes rightsizing for pods and nodes is a critical practice for optimizing resource utilization and cost efficiency within a Kubernetes environment. It involves allocating the right amount of CPU, memory, storage, and network resources both to individual pods (the smallest deployable units in Kubernetes) and to the nodes (the worker machines) that host them. Done well, it ensures applications receive the resources they need without paying for unused capacity or suffering from performance bottlenecks.

This guide delves into the fundamentals of rightsizing, exploring the potential pitfalls of both over-provisioning and under-provisioning. We will navigate the intricacies of pod and node rightsizing, examining various strategies, tools, and techniques. From understanding CPU and memory requests to optimizing storage and network configurations, this comprehensive overview aims to equip you with the knowledge and skills to implement effective rightsizing strategies for diverse workloads, ultimately leading to improved application performance and reduced operational costs.

Understanding Kubernetes Rightsizing Fundamentals

Kubernetes rightsizing is a crucial practice for optimizing resource utilization and cost efficiency within a cluster. It involves carefully matching the resources allocated to pods and nodes with their actual needs, preventing waste and ensuring optimal performance. Effective rightsizing contributes significantly to operational excellence in a Kubernetes environment.

Core Concept of Rightsizing in Kubernetes

Rightsizing in Kubernetes is the process of ensuring that the resources allocated to pods (CPU and memory) and nodes (compute instances) align with their actual requirements. The goal is to find the “sweet spot” where applications have enough resources to perform well without over-provisioning, which leads to wasted resources and increased costs.

Scenarios of Inefficient Resource Allocation

Improper resource allocation in Kubernetes can manifest in several ways, each leading to significant inefficiencies. The following scenarios highlight why careful rightsizing matters:

- Over-provisioning of CPU: When a pod is allocated more CPU than it needs, the excess capacity sits idle. That unused CPU increases infrastructure costs without providing any benefit to the application. For example, a web server pod might be allocated 4 vCPUs while its average CPU utilization is only 10%; the remaining 3.6 vCPUs are essentially wasted.

- Over-provisioning of Memory: As with CPU, allocating excessive memory to a pod wastes resources. Unused memory does not improve application performance and increases the overall cost of running the cluster. A database pod, for instance, might be given 16 GB of memory while its actual usage consistently hovers around 4 GB.

- Under-provisioning of CPU: Insufficient CPU allocation creates performance bottlenecks: slow response times, increased latency, and degraded overall performance. A container that reaches its CPU limit is throttled rather than terminated. For example, a computationally intensive image processing application allocated only 1 vCPU may take significantly longer to process each image, hurting the user experience.

- Under-provisioning of Memory: A pod without enough memory may spend much of its time in garbage collection, and a container that exceeds its memory limit is terminated (OOMKilled). The result is an unstable application and a poor user experience. A caching service, for instance, might be allocated too little memory to hold its frequently accessed data, leading to more cache misses and reduced performance.
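
The waste in the over-provisioning examples is simple arithmetic: idle capacity is the allocation multiplied by the unused fraction. The minimal Python sketch below reproduces the two figures above; the function name `idle_capacity` is hypothetical, and average utilization is only a rough signal, since peaks also have to be covered.

```python
def idle_capacity(allocated: float, avg_utilization: float) -> float:
    """Return the part of an allocation that sits idle on average.

    allocated: resources given to the pod (e.g. vCPUs or GB)
    avg_utilization: average fraction of that allocation in use (0.0 to 1.0)
    """
    if not 0.0 <= avg_utilization <= 1.0:
        raise ValueError("avg_utilization must be between 0 and 1")
    return allocated * (1.0 - avg_utilization)


# Web server example: 4 vCPUs at 10% average utilization.
print(round(idle_capacity(4, 0.10), 2))  # 3.6 (vCPUs idle)
# Database example: 16 GB allocated, about 4 GB (25%) in use.
print(round(idle_capacity(16, 4 / 16), 2))  # 12.0 (GB idle)
```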

Consequences of Resource Misallocation for Pods

Both over-provisioning and under-provisioning have distinct consequences for pods, affecting performance, cost, and overall cluster efficiency.

- Over-provisioning Consequences: Over-provisioning leads to wasted resources and increased operational costs.

  - Increased Costs: Paying for unused compute resources translates directly into higher infrastructure bills.

  - Inefficient Resource Utilization: Capacity reserved through requests but left idle cannot be assigned to other pods, so the cluster can look full to the scheduler while actual usage stays low.

  - Reduced Density: Because the scheduler places pods according to their requests, over-provisioned pods reserve more of each node's capacity, reducing the number of pods that fit on a single node. This can leave nodes underutilized or force the cluster to run more nodes than necessary.

- Under-provisioning Consequences: Under-provisioning results in performance degradation and potential application instability.

  - Performance Bottlenecks: Insufficient CPU and memory can cause slow response times, increased latency, and degraded application performance.

  - Application Instability: Pods may crash or become unresponsive due to resource starvation, leading to service disruptions.

  - Impacted User Experience: Slow application performance and service outages directly affect end users.

  - Increased Operational Overhead: Troubleshooting performance issues caused by resource constraints adds to the operational burden of running the cluster.

Rightsizing Pods

Rightsizing pods is a critical aspect of Kubernetes resource management, directly impacting application performance, cost efficiency, and overall cluster stability. Properly sizing CPU and memory requests and limits ensures that pods have sufficient resources to operate effectively without wasting allocated resources. This section delves into strategies for optimizing pod resource allocation, focusing specifically on CPU and memory management.

Determining Optimal CPU Requests and Limits for a Typical Web Application Pod

Optimizing CPU allocation for web application pods combines monitoring, analysis, and iterative adjustment, so that pods receive the CPU they need to handle traffic and operations efficiently. Here's a method for determining optimal CPU requests and limits:

1. Baseline Measurement

Begin by deploying the web application with initial, conservative CPU requests and limits. For instance, a starting point might be 100m (millicores) as the request and 500m as the limit.

2. Performance Monitoring

Implement robust monitoring tools to track CPU utilization, request latency, and error rates. Prometheus and Grafana, for example, are popular choices. Collect metrics like CPU usage percentage, average response time, and the number of errors.

3. Load Testing

Simulate realistic traffic loads using tools like ApacheBench or JMeter. Gradually increase the load to identify the application’s performance under stress. Observe how CPU utilization and response times change.

4. Analysis of Metrics

Analyze the collected metrics to identify bottlenecks and areas for improvement.

If CPU usage consistently exceeds 80% of the CPU request during peak load, increase the CPU request.

If the application experiences performance degradation or errors while CPU usage is at or near the limit (a sign that the container is being throttled), increase the CPU limit.

If CPU utilization is consistently low, consider reducing the CPU request to avoid over-allocation.
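
The three analysis rules can be encoded as a small decision function. This is a sketch under stated assumptions: values are in millicores, "near the limit" is taken to mean 95% of the limit, and "consistently low" is taken to mean below 30% of the request; only the 80% threshold comes from the rules above, and the function name is hypothetical.

```python
def cpu_adjustment(usage_m: float, request_m: float, limit_m: float,
                   degraded: bool = False,
                   near_limit: float = 0.95,
                   low_threshold: float = 0.3) -> str:
    """Suggest a CPU change from usage observed under load (millicores).

    near_limit and low_threshold are illustrative assumptions.
    """
    # Degradation or errors while usage sits at or near the limit.
    if degraded and usage_m >= near_limit * limit_m:
        return "increase limit"
    # Usage above 80% of the request during peak load.
    if usage_m > 0.8 * request_m:
        return "increase request"
    # Usage consistently low relative to the request.
    if usage_m < low_threshold * request_m:
        return "decrease request"
    return "keep"


# Starting point from step 1: 100m request, 500m limit.
print(cpu_adjustment(150, 100, 500))  # increase request
```

In practice the inputs would come from the monitoring and load-testing steps, ideally as a percentile over a window rather than a single reading.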

5. Iterative Adjustments

Based on the analysis, adjust CPU requests and limits incrementally. Deploy the updated pod configuration and repeat the monitoring and load testing steps.

6. Autoscaling Considerations

Implement Horizontal Pod Autoscaling (HPA) to adjust the number of pods automatically based on CPU utilization, so the application can handle fluctuating traffic. HPA expresses CPU utilization targets as a percentage of each pod's CPU request, so accurate requests are a prerequisite for sensible scaling.
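
The Kubernetes documentation describes the core HPA calculation as desiredReplicas = ceil[currentReplicas × (currentMetricValue / desiredMetricValue)], skipping changes when the ratio is within a tolerance (10% by default). The sketch below implements only that formula plus min/max clamping; the real controller also handles missing metrics, unready pods, and stabilization windows, and the default bounds here are hypothetical.

```python
import math


def hpa_desired_replicas(current_replicas: int,
                         current_utilization: float,
                         target_utilization: float,
                         min_replicas: int = 1,
                         max_replicas: int = 10,
                         tolerance: float = 0.1) -> int:
    """Approximate the HPA replica calculation for a CPU utilization target.

    Utilization values are average CPU usage as a percentage of the pods'
    CPU requests.
    """
    ratio = current_utilization / target_utilization
    # Inside the tolerance band the replica count is left unchanged.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    # Clamp to the configured bounds.
    return max(min_replicas, min(max_replicas, desired))


# 4 replicas averaging 90% of their request against a 60% target.
print(hpa_desired_replicas(4, 90, 60))  # 6
```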

7. Observability and Alerts

Set up alerts to notify operators of any issues, such as high CPU utilization or performance degradation. This allows for proactive intervention and adjustments.

Comparing Different CPU Request Strategies

Different strategies can be used to set CPU requests, each with its own advantages and disadvantages. The right choice depends on the application's requirements and the operational environment. Here is a comparison of several CPU request strategies:

| Strategy | Description | Advantages | Disadvantages |
|---|---|---|---|
| Static | Fixed CPU request and limit values are defined in the pod specification. | Simple to implement; predictable resource allocation. | Can lead to under-utilization or over-allocation of resources; requires manual tuning. |
| Dynamic | CPU requests and limits are adjusted automatically based on observed usage or predefined rules, for example by the Vertical Pod Autoscaler (VPA). | More efficient resource utilization; can adapt to changing workloads. | Requires careful configuration and monitoring; potential for instability if rules are not well-defined. |
| Based on Load | Per-pod requests stay fixed while the number of replicas follows the load, typically via Horizontal Pod Autoscaling (HPA). | Capacity scales with demand; responsive to traffic fluctuations. | Requires proper HPA configuration and monitoring; can be complex to set up initially. |
| Bursting | The CPU limit is set above the request (or omitted), so pods can use more than their request when spare CPU is available on the node. | Improves performance during short bursts of high load; lets applications use spare capacity. | Can lead to performance degradation if nodes are consistently over-committed; requires careful monitoring and resource planning. |
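
For the Static and Dynamic strategies, one common way to pick a request is a high percentile of observed usage plus some headroom. The sketch below assumes usage samples in millicores and uses a nearest-rank 90th percentile with a 15% margin; these values are illustrative assumptions, not the defaults of VPA or any other tool, and the function names are hypothetical.

```python
import math


def percentile(samples: list[float], pct: float) -> float:
    """Nearest-rank percentile (pct from 0 to 100) of a non-empty list."""
    if not samples:
        raise ValueError("need at least one sample")
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def recommend_cpu_request(usage_m: list[float], pct: float = 90.0,
                          margin: float = 0.15) -> int:
    """Suggest a CPU request in millicores: a usage percentile plus headroom."""
    return math.ceil(percentile(usage_m, pct) * (1 + margin))


samples = [100, 120, 110, 300, 130, 125, 115, 140, 135, 105]
print(recommend_cpu_request(samples, margin=0.25))  # 175
```

Using a percentile rather than the maximum keeps a single spike (the 300m sample) from inflating the request; a limit above the request, as in the Bursting row, can absorb such spikes.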

Impact of Memory Requests and Limits on Pod Performance and Stability

Memory requests and limits significantly affect pod performance and stability. Setting appropriate values is essential for preventing performance issues, resource contention, and unexpected container termination. Memory requests and limits affect pod behavior in the following ways:

- Resource Allocation:

  - Request: Kubernetes uses the memory request to schedule pods onto nodes with enough unreserved memory. If a node's remaining allocatable memory cannot satisfy a pod's request, the pod will not be scheduled on that node.

  - Limit: The memory limit defines the maximum amount of memory a container can use. If a container tries to exceed its memory limit, it is terminated (reported as OOMKilled, Out Of Memory Killed) and restarted according to the pod's restart policy.

- Performance:

  - Too Low Request: If the memory request is too low, the pod can be scheduled onto a node that does not actually have room for its real usage, leading to memory contention and node memory pressure.

  - Too Low Limit: If the memory limit is too low, the container is killed whenever it needs more memory than allowed. This can cause application downtime and loss of in-flight work or unsaved data.

- Stability:

  - Memory Exhaustion: When a container tries to use more memory than its limit, it is killed, which keeps a runaway process from consuming memory needed by other pods on the same node.

  - Node Pressure: If many pods on a node use more memory than they requested (possible whenever limits are higher than requests), the node can come under memory pressure. The kubelet then evicts pods, prioritizing those whose usage exceeds their requests, and in severe cases the node itself can become unstable.

- Best Practices:

  - Set Memory Requests: Set memory requests to the amount the application needs to function correctly under normal load.

  - Set Memory Limits: Set memory limits to a value the application should not exceed, taking peak load and potential memory leaks into account.

  - Monitor Memory Usage: Regularly monitor pod memory usage with tools like Prometheus and Grafana to identify potential issues and optimize resource allocation.

  - Use Vertical Pod Autoscaling (VPA): Consider VPA to adjust pod memory requests and limits automatically based on observed memory usage.

- Example Scenario: Imagine a web application pod with a memory request of 500Mi and a memory limit of 1Gi. During a sudden traffic spike, the application might consume more memory to handle the increased load. If its memory usage reaches the 1Gi limit, the container is OOMKilled and restarted.

Conversely, if the memory request is set too high, the memory it reserves sits idle and cannot be allocated to other pods, and the pod may not fit on any node at all. Tuning memory requests and limits correctly is therefore essential for performance, stability, and resource utilization.
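
The example scenario can be checked mechanically by converting Kubernetes memory quantities to bytes and comparing expected demand against the request and limit. This is a simplified sketch: it handles only plain numbers and the Ki/Mi/Gi/Ti and k/M/G/T suffixes (not exponent forms), and the function names and outcome labels are hypothetical.

```python
import re

# Binary and decimal suffixes used in Kubernetes memory quantities.
_SUFFIXES = {
    "Ki": 1024, "Mi": 1024**2, "Gi": 1024**3, "Ti": 1024**4,
    "k": 1000, "M": 1000**2, "G": 1000**3, "T": 1000**4,
    "": 1,
}


def parse_memory(quantity: str) -> int:
    """Convert a quantity such as '500Mi' or '2G' to bytes."""
    match = re.fullmatch(r"(\d+(?:\.\d+)?)(Ki|Mi|Gi|Ti|k|M|G|T)?",
                         quantity.strip())
    if not match:
        raise ValueError(f"unsupported quantity: {quantity!r}")
    number, suffix = match.groups()
    return int(float(number) * _SUFFIXES[suffix or ""])


def memory_outcome(demand: str, request: str, limit: str) -> str:
    """Classify what happens if a container needs `demand` memory."""
    needed = parse_memory(demand)
    if needed > parse_memory(limit):
        return "OOMKilled"  # the container cannot grow past its limit
    if needed > parse_memory(request):
        return "at risk of eviction under node pressure"
    return "within request"


print(memory_outcome("1200Mi", "500Mi", "1Gi"))  # OOMKilled
```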


In our exploration of Kubernetes rightsizing, we’ve already established the importance of optimizing resource allocation for Pods. This section delves into the critical aspects of storage and network rightsizing, essential for ensuring application performance, cost efficiency, and overall cluster health. Effective management of storage and network resources is crucial, especially for stateful applications and those with demanding bandwidth requirements.

Rightsizing Pods: Storage and Network

Storage and network configurations directly impact a Pod’s ability to function effectively. Inadequate storage can lead to data loss or application failures, while insufficient network resources can cause performance bottlenecks.

Common Challenges in Storage Rightsizing for Stateful Applications in Kubernetes

Stateful applications, such as databases and message queues, present unique storage rightsizing challenges due to their persistent data requirements. These challenges include:

- Predicting Storage Needs: Accurately forecasting storage requirements for stateful applications is difficult because of variable data growth rates, fluctuating workloads, and unforeseen events. The complexity increases with the application’s data lifecycle.

- Performance Bottlenecks: Inadequate storage performance (IOPS and throughput) can significantly impact application responsiveness. Choosing the wrong storage class or provisioning insufficient resources can lead to slow read/write operations, affecting overall performance.

- Data Durability and Availability: Ensuring data durability and high availability is paramount for stateful applications. Choosing storage solutions with appropriate replication, backup, and recovery mechanisms is crucial.

- Cost Optimization: Balancing storage performance, capacity, and cost can be complex. Over-provisioning storage leads to wasted resources and increased expenses, while under-provisioning can cause performance issues.

- Storage Class Selection: Selecting the correct storage class, which defines the underlying storage infrastructure (e.g., SSD, HDD, cloud provider-specific storage), can be complex. The choice impacts performance, cost, and data durability.

- Dynamic Volume Provisioning: Managing persistent volumes dynamically can be challenging. Ensuring that the storage provisioned meets the application’s needs and is properly reclaimed when no longer needed requires careful planning and configuration.

Strategies for Optimizing Network Resource Allocation

Optimizing network resource allocation is critical for preventing bottlenecks and ensuring smooth communication within and outside the Kubernetes cluster. Strategies include:

- Bandwidth Management:

- Network Policies: Implement network policies to control which traffic is allowed between Pods, namespaces, and external networks. Policies isolate workloads and block unwanted traffic, but they do not limit bandwidth.

- Bandwidth Limits: Kubernetes ResourceQuotas do not cover network bandwidth. Per-Pod ingress and egress rates can instead be capped with the `kubernetes.io/ingress-bandwidth` and `kubernetes.io/egress-bandwidth` Pod annotations, provided the cluster's CNI setup supports them (for example, through the CNI bandwidth plugin).

- Traffic Shaping: Use traffic shaping to prioritize critical traffic and prevent less important traffic from overwhelming the network. Tools like `tc` (traffic control) can be used to limit bandwidth.

- Connection Limits:

- Pod-Level Limits: Kubernetes has no native per-Pod connection limit, so enforce connection limits in the application or in a proxy in front of it to keep individual Pods from consuming excessive network resources.

- Service-Level Limits: Use ingress controllers to manage connection limits, and Kubernetes Services to load balance traffic across Pods.

- Connection Pooling: Implement connection pooling to reuse existing connections and reduce the overhead of establishing new connections.

- Monitoring and Analysis:

- Network Monitoring Tools: Use network monitoring tools (e.g., Prometheus, Grafana, or provider-specific tools) to track network performance metrics such as bandwidth utilization, latency, and packet loss.

- Log Analysis: Analyze application logs to identify network-related issues, such as slow response times or connection errors.

- Choosing Appropriate Network Plugins: Select a suitable CNI (Container Network Interface) plugin based on the cluster’s requirements, such as performance, scalability, and integration with existing network infrastructure. Popular options include Calico, Cilium, and Flannel.
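
Bandwidth limiting of the kind `tc` applies is commonly built on a token bucket (the `tbf` qdisc, token bucket filter, is one example). The sketch below illustrates the idea only: tokens refill at a fixed rate up to a burst capacity, and a packet is sent only if enough tokens are available. Time is passed in explicitly to keep it deterministic; the class name and units are assumptions for illustration.

```python
class TokenBucket:
    """Token-bucket shaper: bursts up to `capacity` bytes are allowed,
    while the sustained rate is capped at `rate` bytes per second."""

    def __init__(self, rate: float, capacity: float):
        self.rate = rate          # refill rate, bytes per second
        self.capacity = capacity  # maximum burst size, bytes
        self.tokens = capacity    # start with a full bucket
        self.last = 0.0           # time of the previous call, seconds

    def allow(self, size: float, now: float) -> bool:
        """Return True if a packet of `size` bytes may be sent at `now`."""
        # Refill for the time elapsed since the previous call.
        elapsed = now - self.last
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        self.last = now
        if size <= self.tokens:
            self.tokens -= size
            return True
        return False  # a real shaper would queue or drop the packet


bucket = TokenBucket(rate=1000, capacity=1500)
print(bucket.allow(1500, 0.0))   # True: the initial burst fits
print(bucket.allow(500, 0.25))   # False: only 250 tokens refilled
```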

Best Practices for Selecting Appropriate Storage Classes and Sizes

Choosing the right storage class and size is critical for performance, cost, and data durability. Here are some best practices:

- Understand Workload Requirements:

- Application Type: Consider the type of workload (e.g., database, web server, logging) and its storage needs.

- Performance Needs: Determine the required IOPS (Input/Output Operations Per Second) and throughput based on the application’s read/write patterns.

- Data Volume: Estimate the amount of storage required, including future growth.

- Data Durability: Define the level of data durability required, considering factors such as replication and backup strategies.

- Storage Class Selection:

- Cloud Provider Integration: Leverage storage classes provided by your cloud provider (e.g., AWS EBS, Google Persistent Disk, Azure Disks).

- Performance Tiers: Choose storage classes that offer different performance tiers (e.g., SSD, HDD) to match the application’s needs.

- Cost Considerations: Compare the cost of different storage classes and select the most cost-effective option that meets performance and durability requirements.

- Storage Size Provisioning:

- Initial Sizing: Provision storage with an initial size that meets the current needs of the application.

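- Dynamic Volume Provisioning: Use dynamic volume provisioning so persistent volumes are created on demand from a storage class. Resizing is not automatic: it requires a storage class with `allowVolumeExpansion: true` and an update to the PersistentVolumeClaim's requested size.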

- Monitoring and Adjustment: Monitor storage utilization and adjust the size of persistent volumes as needed.

- Capacity Planning: Estimate future storage requirements and plan for capacity upgrades.

- Data Backup and Recovery:

- Backup Strategies: Implement a robust backup strategy to protect data from loss or corruption.

- Recovery Procedures: Develop and test recovery procedures to ensure that data can be restored quickly and reliably.

- Example Scenario:

- For a database application with high I/O requirements, select a storage class with SSD-backed storage and provision sufficient IOPS to handle the expected workload. Consider the database size and plan for future growth when determining the initial storage size. Implement regular backups to ensure data durability.
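
Planning for future growth when choosing an initial volume size can be approximated with a compound-growth projection plus free-space headroom. A minimal sketch, assuming a steady monthly growth rate and a 20% headroom default; both the growth model and the defaults are illustrative assumptions, and the function name is hypothetical.

```python
import math


def projected_volume_gib(current_gib: float, monthly_growth: float,
                         months: int, headroom: float = 0.2) -> int:
    """Estimate a PersistentVolumeClaim size in GiB.

    monthly_growth and headroom are fractions (0.05 means 5%).
    """
    projected = current_gib * (1 + monthly_growth) ** months
    # Keep some free space so the volume never runs completely full.
    return math.ceil(projected * (1 + headroom))


# 50 GiB of data growing about 5% per month, sized for the next year.
print(projected_volume_gib(50, 0.05, 12))
```

Re-running the estimate against actual utilization, as the monitoring step suggests, keeps the growth assumption honest.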

Node Rightsizing: Hardware Considerations

Optimizing Kubernetes node resources is crucial for achieving efficient resource utilization, cost savings, and improved application performance. Selecting the appropriate hardware for your nodes, also known as node rightsizing, involves careful consideration of various factors, including workload characteristics, application resource requirements, and cluster size. This section focuses on hardware considerations for node rightsizing, providing guidance on choosing the right node sizes and hardware specifications.

Comparing Node Sizes

The choice of node size significantly impacts cluster performance and cost-effectiveness. Different node sizes cater to different workload demands. Understanding the advantages and disadvantages of each size is essential for making informed decisions.

- Small Nodes: These nodes typically have limited CPU, memory, and storage resources. They are suitable for:

- Testing and development environments.

- Workloads with low resource requirements.

- Applications with a small footprint.

Benefits: Lower cost per node, faster deployment times, and easier scaling in and out.

Drawbacks: Limited capacity, potential for resource contention if workloads grow, and less efficient resource utilization if nodes are underutilized.

- Medium Nodes: These nodes offer a balance between resource capacity and cost. They are well-suited for:

- Production workloads with moderate resource demands.

- Applications that require a consistent level of performance.

- Clusters with a mix of workloads.

Benefits: Good balance between cost and performance, can handle a variety of workloads, and provide a reasonable level of redundancy.

Drawbacks: May not be optimal for very large or resource-intensive applications, and resource fragmentation can occur if workloads are not properly sized.

- Large Nodes: These nodes are equipped with substantial CPU, memory, and storage resources. They are ideal for:

- High-performance workloads.

- Applications with significant resource requirements (e.g., databases, machine learning).

- Consolidating workloads to reduce the number of nodes.

Benefits: High capacity, ability to handle demanding workloads, and potentially lower operational overhead due to fewer nodes to manage.

Drawbacks: Higher cost per node, potential for wasted resources if nodes are underutilized, and may require more careful planning for capacity management.

Guidelines for Choosing Hardware Specifications

Selecting the appropriate hardware specifications (CPU, memory, and storage) is a critical step in node rightsizing. The choices should be based on the workload’s resource demands and the overall cluster design.

- CPU:

Consider the number of vCPUs required per pod and the expected number of pods per node.

Guidelines:

- Start with a CPU overcommit ratio of 1:1 or 2:1, depending on the workload’s characteristics (e.g., burstable vs. steady-state).

- Monitor CPU utilization to identify potential bottlenecks.

- Choose CPU types that are optimized for the workload (e.g., high core count for CPU-bound tasks, specific instruction sets for certain applications).

- Memory:

Determine the memory requirements of each pod and the expected number of pods per node.

Guidelines:

- Allocate sufficient memory to accommodate the workloads’ needs and the operating system’s overhead.

- Monitor memory utilization to identify potential out-of-memory (OOM) errors.

- Consider the use of memory limits and requests to prevent resource exhaustion.

- Storage:

Assess the storage requirements of the workloads, including disk I/O performance (IOPS), storage capacity, and data persistence needs.

Guidelines:

- Choose storage types that meet the performance and capacity requirements of the applications (e.g., SSDs for high-performance workloads, cloud-based storage for scalability).

- Consider the use of persistent volumes for stateful applications.

- Monitor storage utilization and performance metrics (e.g., disk I/O, latency) to identify bottlenecks.
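
The CPU overcommit ratio can be measured as the sum of container CPU limits on a node divided by its allocatable CPU; this definition is an assumption, since the guideline does not define the ratio. The sketch also estimates how many more pods with a given limit fit before a target ratio is exceeded. Containers without a CPU limit are not represented and would need separate treatment; the function names are hypothetical.

```python
import math


def cpu_overcommit_ratio(limits_m: list[int], allocatable_m: int) -> float:
    """Summed container CPU limits divided by allocatable CPU (millicores)."""
    return sum(limits_m) / allocatable_m


def pods_within_ratio(limits_m: list[int], allocatable_m: int,
                      pod_limit_m: int, target_ratio: float) -> int:
    """How many more pods with `pod_limit_m` fit under `target_ratio`."""
    budget = target_ratio * allocatable_m - sum(limits_m)
    return max(0, math.floor(budget / pod_limit_m))


# A 4-vCPU node whose containers have 8 vCPUs of limits is at 2:1.
print(cpu_overcommit_ratio([1000, 1000, 2000, 4000], 4000))  # 2.0
```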

Estimating Resource Capacity for a Node

Estimating the resource capacity needed to support a given workload on a node is a crucial step in node rightsizing. This involves analyzing the workload’s resource demands and determining the appropriate node specifications.

Step-by-Step Process:

- Analyze Workload Resource Requirements:

Gather historical data or conduct performance tests to determine the CPU, memory, and storage requirements of the applications.

- Determine Pod Density:

Estimate the number of pods that can be deployed on a single node, considering the resource requests and limits.

- Calculate Node Resource Requirements:

Multiply the resource requirements per pod by the estimated number of pods per node. Account for the operating system and Kubernetes overhead.

- Account for Overhead:

Include a buffer for the operating system, Kubernetes components, and any other background processes. A common practice is to reserve a percentage of the node’s resources (e.g., 10-20%) for overhead.

- Select Node Hardware:

Choose node hardware specifications that meet or exceed the calculated resource requirements.

Example:

Consider a workload consisting of a web application that requires 1 vCPU and 2 GB of memory per pod. We estimate that we can run 5 pods on a single node.

Calculations:

- CPU Requirement: 1 vCPU/pod × 5 pods = 5 vCPUs

- Memory Requirement: 2 GB/pod × 5 pods = 10 GB

Adding a 10% overhead:

- CPU: 5 vCPUs + (5 vCPUs × 0.10) = 5.5 vCPUs

- Memory: 10 GB + (10 GB × 0.10) = 11 GB

Based on these calculations, a node with at least 6 vCPUs and 12 GB of memory would be suitable for this workload.
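
The estimation steps can be expressed as two small helpers: one computes required node capacity from per-pod needs, pod count, and an overhead fraction; the other computes pod density, which is bounded by whichever resource runs out first. A minimal sketch; the function names are hypothetical and the inputs mirror the worked example.

```python
import math


def required_node_capacity(pod_cpu: float, pod_mem_gb: float, pods: int,
                           overhead: float = 0.10) -> tuple[float, float]:
    """Per-pod needs times pod count, plus a fractional overhead buffer."""
    cpu = pod_cpu * pods
    mem = pod_mem_gb * pods
    return cpu + cpu * overhead, mem + mem * overhead


def pods_per_node(node_cpu: float, node_mem_gb: float,
                  pod_cpu: float, pod_mem_gb: float) -> int:
    """Pod density is limited by whichever resource is exhausted first."""
    return min(math.floor(node_cpu / pod_cpu),
               math.floor(node_mem_gb / pod_mem_gb))


cpu, mem = required_node_capacity(1, 2, 5)
print(f"{cpu:.1f} vCPUs, {mem:.1f} GB")  # 5.5 vCPUs, 11.0 GB
```

Applying `pods_per_node` to allocatable capacity (after reservations) rather than raw node size gives a more realistic density figure.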

Real-World Case:

A large e-commerce company, “E-Mart,” migrated its online shopping platform to Kubernetes. Initially, they chose medium-sized nodes with 4 vCPUs and 8 GB of RAM. However, during peak traffic periods, the application experienced performance issues and frequent OOM errors. By analyzing the application’s resource consumption, they determined that each pod required an average of 1.5 vCPUs and 3 GB of RAM.

After implementing node rightsizing, they upgraded to larger nodes with 8 vCPUs and 16 GB of RAM, allowing them to accommodate more pods per node and handle the increased traffic load. This resulted in a 30% improvement in application performance and a reduction in the number of nodes required, leading to significant cost savings.

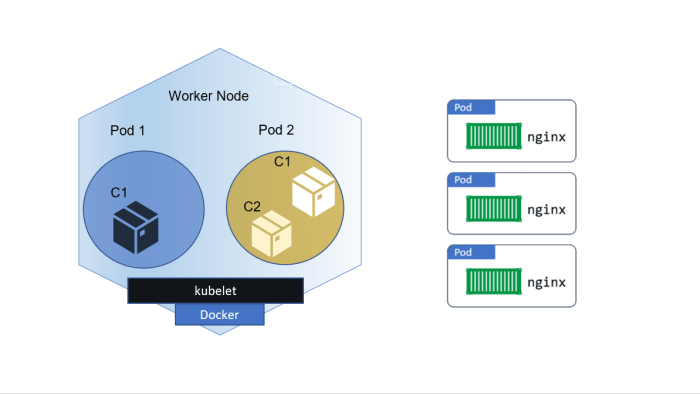

Node Rightsizing


Effective node rightsizing in Kubernetes is crucial for cost optimization, performance, and resource utilization. This involves carefully matching the resources allocated to nodes with the demands of the workloads they host. This section delves into the practical aspects of node rightsizing, focusing on workload placement and scheduling strategies.

Node Rightsizing: Workload Placement and Scheduling

Kubernetes' scheduler plays a pivotal role in node rightsizing. It places pods onto nodes based on resource requests, constraints, and other factors, so its decisions directly influence how efficiently nodes are utilized and whether workloads are optimally placed. The scheduler's impact on node rightsizing decisions involves several key aspects:

- Resource Requests and Limits: The scheduler uses pod resource requests (CPU and memory) to determine if a node has sufficient capacity to accommodate a pod. Setting appropriate requests is fundamental; under-requesting can lead to pods being scheduled on nodes with insufficient resources, resulting in performance degradation. Over-requesting can lead to inefficient resource utilization, as nodes may be underutilized. Setting limits provides a hard cap on resource usage, preventing a pod from consuming excessive resources and impacting other pods on the same node.

- Node Capacity: The scheduler compares each pod's requests against the node's allocatable CPU and memory (its total capacity minus resources reserved for the operating system and Kubernetes components), less the requests of pods already scheduled there. It does not consider actual usage, which is why accurate requests matter.

- Scheduling Constraints: The scheduler respects constraints such as node selectors, node affinity, and anti-affinity rules. These constraints guide the scheduler in placing pods on specific nodes or avoiding placement on others, based on factors such as hardware requirements, workload dependencies, or fault tolerance considerations.

- Pod Priority and Preemption: Kubernetes allows defining pod priorities. Higher-priority pods can preempt lower-priority pods if the node lacks resources. This can affect node rightsizing decisions by influencing how resources are distributed during periods of high demand.

- Taints and Tolerations: A taint on a node repels pods that do not tolerate it, so only pods with a matching toleration can be scheduled there. A toleration permits placement but does not force it; combined with node selectors or node affinity, taints let you dedicate nodes to specific workloads, such as pods that require particular hardware.
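
The filtering side of these decisions can be illustrated with a deliberately simplified model: a node is feasible if the pod's requests fit within allocatable minus existing requests, every nodeSelector label matches, and every taint is tolerated. This is not the kube-scheduler implementation (which runs filter and score plugins); the classes are hypothetical, and taints are modeled as plain strings that are all treated as NoSchedule.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    name: str
    allocatable_cpu_m: int
    allocatable_mem_mi: int
    requested_cpu_m: int = 0   # sum of requests of pods already on the node
    requested_mem_mi: int = 0
    labels: dict = field(default_factory=dict)
    taints: set = field(default_factory=set)  # every taint treated as NoSchedule


@dataclass
class Pod:
    cpu_request_m: int
    mem_request_mi: int
    node_selector: dict = field(default_factory=dict)
    tolerations: set = field(default_factory=set)


def feasible(pod: Pod, node: Node) -> bool:
    # Resource fit compares requests with allocatable, not live usage.
    if node.requested_cpu_m + pod.cpu_request_m > node.allocatable_cpu_m:
        return False
    if node.requested_mem_mi + pod.mem_request_mi > node.allocatable_mem_mi:
        return False
    # Every nodeSelector label must be present with the same value.
    if any(node.labels.get(k) != v for k, v in pod.node_selector.items()):
        return False
    # Every taint on the node must be tolerated by the pod.
    return node.taints <= pod.tolerations


def feasible_nodes(pod: Pod, nodes: list[Node]) -> list[str]:
    return [n.name for n in nodes if feasible(pod, n)]
```

A pod with no tolerations never lands on the tainted node, while a GPU pod with the matching toleration and selector is steered onto it.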

Optimizing pod placement through node selectors, affinity, and anti-affinity is crucial for effective node rightsizing. These mechanisms allow administrators to guide the scheduler in placing pods onto the most appropriate nodes based on various criteria.

- Node Selectors: Node selectors are the simplest form of constraint. They specify labels that a node must have for a pod to be scheduled on it. For example, if GPU nodes are labeled `gpu=true` (for instance with `kubectl label nodes <node-name> gpu=true`), a pod can set the node selector `gpu: "true"` so that it is only scheduled on nodes carrying that label.

- Node Affinity: Node affinity is more expressive than node selectors. It lets you define rules that the scheduler must or should satisfy when placing pods. There are two types of node affinity:

- Required During Scheduling (Hard Affinity, `requiredDuringSchedulingIgnoredDuringExecution`): The pod can only be scheduled on a node that matches the affinity rules. If no matching node is available, the pod is not scheduled and remains Pending.

- Preferred During Scheduling (Soft Affinity, `preferredDuringSchedulingIgnoredDuringExecution`): Nodes that match the rules are favored according to the weight assigned to each rule, but if no matching node is available, the pod can still be scheduled on a different node.

Node affinity can be used to place pods on nodes with specific hardware, such as GPUs or high-memory machines, or to ensure that pods are scheduled in a specific availability zone or region.

- Pod Anti-Affinity: Pod anti-affinity allows you to prevent pods from being scheduled on the same node or in the same availability zone. This is useful for improving fault tolerance and ensuring that a failure on one node does not affect all instances of a pod. For example, you might use pod anti-affinity to ensure that multiple replicas of a database pod are not scheduled on the same node.
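
The difference between hard and soft node affinity can be sketched as filter-then-score. In Kubernetes, the terms under required affinity are ORed while the expressions inside one term must all match, and each matching preferred term adds its weight to a node's score. The sketch models only `In`-style expressions, ignores every other scoring factor and pod anti-affinity, and uses hypothetical function names.

```python
def matches(labels: dict, term: dict) -> bool:
    """A term maps label keys to allowed values; all keys must match."""
    return all(labels.get(key) in values for key, values in term.items())


def pick_node(nodes: dict, required: list, preferred: list):
    """nodes: name -> labels; required: list of terms (any one may match);
    preferred: list of (weight, term). Returns a node name or None."""
    candidates = [
        name for name, labels in nodes.items()
        if not required or any(matches(labels, t) for t in required)
    ]
    if not candidates:
        return None  # hard affinity cannot be met: the pod stays Pending

    def score(name: str) -> int:
        return sum(w for w, t in preferred if matches(nodes[name], t))

    return max(candidates, key=score)


nodes = {
    "n1": {"zone": "a", "type": "highmem"},
    "n2": {"zone": "b", "type": "highmem"},
    "n3": {"zone": "a", "type": "std"},
}
# Must be high-memory; prefer zone b.
print(pick_node(nodes, [{"type": ["highmem"]}], [(50, {"zone": ["b"]})]))  # n2
```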

Regular evaluation and adjustment of node resource utilization are essential for maintaining optimal node rightsizing. This involves monitoring resource usage, analyzing trends, and adjusting node configurations and workload placement strategies. The following procedure can be used to evaluate and adjust node resource utilization regularly:

- Monitoring Resource Usage: Implement comprehensive monitoring of node and pod resource utilization. Use tools like Prometheus, Grafana, or the Kubernetes Dashboard to track CPU, memory, disk I/O, and network usage. Collect metrics over time to establish baselines and identify trends.

- Analyzing Trends: Analyze the collected metrics to identify patterns and anomalies. Look for nodes that are consistently over-utilized or under-utilized. Determine the causes of resource bottlenecks or inefficiencies. For instance, a node consistently at 90% CPU utilization might benefit from an upgrade or workload redistribution.

- Identifying Optimization Opportunities: Based on the analysis, identify opportunities to optimize resource utilization. This may involve:

- Adjusting Resource Requests and Limits: Review and adjust pod resource requests and limits based on observed usage. Increase requests for pods consistently experiencing resource constraints, and reduce requests for pods that are over-requesting resources.

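- Rescheduling Pods: Adjust node selectors, affinity, and anti-affinity so that pods land on nodes with more appropriate resources or are distributed more evenly. Scheduling constraints are only evaluated when a pod is scheduled, so existing pods must be recreated (for example, through a rolling update) before they move.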

- Scaling Nodes: Scale up or down the number of nodes in your cluster to match workload demands. Add nodes to handle increased workloads or remove nodes to reduce costs during periods of low demand.

- Horizontal Pod Autoscaling (HPA): Implement HPA to automatically scale the number of pod replicas based on resource utilization metrics.

- Implementing Changes: Implement the identified optimizations. This may involve updating pod specifications, adjusting node configurations, or modifying scheduling policies.

- Monitoring and Iterating: Continuously monitor the impact of the implemented changes. Repeat the analysis and adjustment process regularly (e.g., weekly or monthly) to ensure that node resource utilization remains optimal.
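
The "Analyzing Trends" step can be partly automated by labeling nodes from their CPU utilization samples. In this sketch the 90% high threshold mirrors the example above, while the 30% low threshold and the requirement that 80% of samples cross a threshold before a node counts as "consistently" over- or under-utilized are assumptions; the function name is hypothetical.

```python
def classify_nodes(cpu_samples: dict[str, list[float]], high: float = 0.9,
                   low: float = 0.3, share: float = 0.8) -> dict[str, str]:
    """Label each node from CPU utilization samples (fractions 0 to 1).

    over-utilized  : at least `share` of samples are >= `high`
    under-utilized : at least `share` of samples are <= `low`
    balanced       : anything else
    """
    labels = {}
    for node, samples in cpu_samples.items():
        if not samples:
            labels[node] = "no data"
            continue
        hot = sum(s >= high for s in samples) / len(samples)
        cold = sum(s <= low for s in samples) / len(samples)
        if hot >= share:
            labels[node] = "over-utilized"
        elif cold >= share:
            labels[node] = "under-utilized"
        else:
            labels[node] = "balanced"
    return labels
```

Over-utilized nodes are candidates for workload redistribution or larger instances; under-utilized ones are candidates for consolidation or scale-in.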

Monitoring and Metrics for Rightsizing

Effective monitoring is crucial for Kubernetes rightsizing. It provides the necessary data to understand resource utilization patterns and identify areas for optimization. This proactive approach allows for efficient resource allocation, cost savings, and improved application performance.

Key Metrics for Monitoring

Understanding the key metrics at both the pod and node levels is fundamental to rightsizing. Analyzing these metrics shows how resources are actually consumed, which is the basis for informed decisions about resource allocation.

- CPU Utilization: Measures CPU consumption, usually in cores or millicores, often expressed as a percentage of a pod's CPU request or limit, or of a node's allocatable CPU. Sustained high CPU utilization indicates potential bottlenecks and the need for scaling or resource adjustments; a container that hits its CPU limit is throttled. At the pod level, this metric reveals whether a specific application instance is CPU-bound. At the node level, it indicates overall CPU pressure.

- Memory Utilization: Tracks the amount of memory consumed by pods and nodes. A container that exceeds its memory limit is OOM-killed, and sustained memory pressure on a node causes the kubelet to evict pods, so high memory utilization can lead to application crashes and restarts. Monitoring memory at the pod level identifies memory-intensive applications, while node-level monitoring reveals overall memory pressure.

- Storage Utilization: Measures the storage space used by pods and nodes, along with disk I/O activity. Analyzing storage metrics helps identify potential storage bottlenecks and the need for storage scaling or optimization. This is particularly important for stateful applications.

- Network Utilization: Examines network traffic, including bandwidth usage and packet loss, at both the pod and node levels. Network metrics help identify potential network bottlenecks that can affect application performance. High network traffic may indicate the need for horizontal scaling or network optimization.
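CPU usage is typically exported as a cumulative counter of CPU-seconds (for example, cAdvisor's `container_cpu_usage_seconds_total`, which Prometheus queries with `rate()`), so a utilization figure comes from turning two counter samples into a rate. The sketch below shows that arithmetic in plain Python; the function names and sample values are hypothetical.

```python
def cpu_millicores(prev_seconds: float, curr_seconds: float, interval_seconds: float) -> float:
    """Average CPU usage over an interval, derived from a cumulative CPU-seconds counter."""
    if interval_seconds <= 0:
        raise ValueError("interval must be positive")
    if curr_seconds < prev_seconds:
        raise ValueError("counter reset detected; discard this sample pair")
    # CPU-seconds consumed per wall-clock second = cores; x1000 = millicores.
    return (curr_seconds - prev_seconds) / interval_seconds * 1000.0


def utilization_of_request(used_millicores: float, request_millicores: float) -> float:
    """Usage as a percentage of the container's CPU request."""
    return used_millicores / request_millicores * 100.0


used = cpu_millicores(prev_seconds=120.0, curr_seconds=122.5, interval_seconds=10.0)
print(used)                               # 250.0
print(utilization_of_request(used, 500))  # 50.0
```

Here 2.5 CPU-seconds over 10 seconds is a quarter of a core (250m), which is half of a hypothetical 500m request.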

Monitoring Tools and Features

Several monitoring tools are available to track Kubernetes resource utilization. The selection of a monitoring tool depends on specific requirements and preferences. These tools offer diverse features, from basic metrics collection to advanced analytics and alerting capabilities.

| Monitoring Tool | Key Features | Data Visualization | Alerting Capabilities |
|---|---|---|---|
| Prometheus | Open-source time-series database; Pull-based metric collection; Multi-dimensional data model; Service discovery. | Integration with Grafana for customizable dashboards; Built-in expression browser for ad hoc graphs. | Alerting rules based on PromQL queries; Notifications routed through Alertmanager to channels such as email or Slack. |
| Grafana | Open-source data visualization and monitoring tool; Supports various data sources (Prometheus, InfluxDB, etc.); Customizable dashboards. | Highly customizable dashboards; Support for various chart types (graphs, gauges, heatmaps); Real-time data visualization. | Alerting rules based on query results; Integration with various notification channels. |
| Kubernetes Dashboard | Official Kubernetes web UI; Provides a general overview of cluster resources; Simple interface for monitoring. | Basic CPU and memory charts (requires metrics-server); Overview of pod and node status. | No built-in alerting; Surfaces Kubernetes events and failing resources in the UI. |
| Datadog | Commercial monitoring and analytics platform; Comprehensive monitoring of Kubernetes and other infrastructure components; APM and log management. | Customizable dashboards; Support for various visualization types; Real-time data visualization. | Advanced alerting capabilities; Customizable alerts; Integration with various notification channels. |

Using Monitoring Data for Rightsizing

Analyzing monitoring data is crucial for identifying opportunities to optimize resource allocation. This involves examining the trends in CPU, memory, storage, and network utilization at both the pod and node levels. The insights gained from this analysis allow for informed rightsizing decisions.

- Identifying Over-Provisioned Resources: Look for pods and nodes that consistently have low CPU or memory utilization. These resources may be over-provisioned and can be reduced to improve resource efficiency and reduce costs. For example, if a pod is consistently using only 20% of its allocated CPU, the CPU request can be reduced.

- Detecting Resource Bottlenecks: Identify pods or nodes that consistently experience high CPU or memory utilization. This may indicate a need to increase resource requests or scale the application horizontally. If a node consistently reaches 90% memory utilization, consider moving to a larger instance type, adding nodes, or spreading the workload's pods across more nodes.

- Analyzing Resource Usage Patterns: Examine resource utilization trends over time to identify peak and idle periods. This information can be used to adjust resource requests, limits, and autoscaling settings based on demand. For instance, an e-commerce website might see peak resource usage during sales events, so its autoscaling bounds and node capacity should be sized for those peaks rather than for average load.

- Optimizing Storage and Network: Monitoring storage and network utilization helps identify bottlenecks and optimize resource allocation. If a pod is experiencing high storage I/O, consider a higher-performance storage class (more IOPS or throughput) or optimizing data access patterns. If network bandwidth is constrained, scale the application horizontally or optimize the network configuration.

- Implementing Resource Requests and Limits: Use monitoring data to set appropriate resource requests and limits for pods. Requests are the amounts the scheduler reserves for a container when placing its pod on a node, while limits cap consumption: a container that exceeds its CPU limit is throttled, and one that exceeds its memory limit is OOM-killed. Properly configured requests and limits improve resource efficiency and prevent resource starvation.
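One common way to turn monitoring data into a request is to take a high percentile of observed usage and add headroom. The sketch below assumes a nearest-rank 95th percentile, 15% headroom, and rounding up to 10m; all three are hypothetical defaults, not values from any particular tool (VPA, for instance, uses its own histogram-based recommender). The sample data is invented to match the "20% of allocated CPU" example above, for a pod that requests 1000m.

```python
import math


def percentile(samples: list[float], pct: int) -> float:
    """Nearest-rank percentile (pct in 1..100) of a non-empty list of samples."""
    if not samples:
        raise ValueError("need at least one sample")
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def recommend_cpu_request(samples_millicores: list[float],
                          pct: int = 95,
                          headroom_pct: int = 15,
                          step: int = 10) -> int:
    """CPU request in millicores: the pct-th percentile of usage plus headroom,
    rounded up to a multiple of `step`."""
    observed = percentile(samples_millicores, pct)
    return math.ceil(observed * (100 + headroom_pct) / (100 * step)) * step


# Hypothetical usage samples (millicores) for a pod that currently requests 1000m.
samples = [150, 180, 190, 200, 200, 210, 220, 230, 240, 260]
print(recommend_cpu_request(samples))  # 300
```

Sizing to a high percentile rather than the average keeps short bursts from being routinely throttled, while still cutting the request well below the original 1000m.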

Tools and Technologies for Automated Rightsizing

Automating the rightsizing process is crucial for efficiently managing Kubernetes resources and optimizing application performance. Several tools and technologies are available to streamline this process, ranging from native Kubernetes features to third-party solutions. These tools help in dynamically adjusting pod and node resources based on real-time metrics, ensuring optimal resource utilization and cost efficiency.

Horizontal Pod Autoscaling (HPA) Implementation

Horizontal Pod Autoscaling (HPA) automatically scales the number of pod replicas in a Deployment, StatefulSet, or other scalable resource based on observed CPU utilization, memory usage, or custom metrics, so that the application runs enough replicas to handle the workload. Scaling on CPU or memory requires the resource metrics API, which is usually provided by metrics-server. To implement HPA, follow these steps:

- Define the HPA resource: Create an HPA manifest file (e.g., `hpa.yaml`) that specifies the target deployment, the minimum and maximum number of replicas, and the scaling metrics.

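- Specify scaling metrics: The `metrics` section of an `autoscaling/v2` HPA manifest (the stable API since Kubernetes 1.23) defines the metrics used for scaling. These can be CPU utilization, memory usage, or custom metrics from sources like Prometheus, which reach the HPA through a metrics adapter. For example, to scale on an average CPU utilization of 80% of the pods' CPU requests:

```yaml
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: my-app-hpa
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: my-app-deployment
  minReplicas: 1
  maxReplicas: 10
  metrics:
  - type: Resource
    resource:
      name: cpu
      target:
        type: Utilization
        averageUtilization: 80   # percent of each pod's CPU request
```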

- Apply the HPA manifest: Use `kubectl apply -f hpa.yaml` to create the HPA resource in your Kubernetes cluster.

- Monitor and observe scaling: Kubernetes will automatically adjust the number of pods based on the specified metrics. You can monitor the HPA status using `kubectl get hpa`.
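The core of the HPA decision is the documented ratio rule, desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue), skipped when the ratio is within a tolerance (0.1 by default). The Python below is a simplified model of that rule for a single utilization metric; it deliberately omits the real controller's handling of missing metrics, unready pods, and scale-down stabilization, and the function name is hypothetical.

```python
import math


def desired_replicas(current_replicas: int,
                     current_utilization: float,
                     target_utilization: float,
                     min_replicas: int = 1,
                     max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Simplified HPA scaling rule for one utilization metric."""
    ratio = current_utilization / target_utilization
    if abs(ratio - 1.0) <= tolerance:
        desired = current_replicas  # close enough to target: leave the replica count alone
    else:
        desired = math.ceil(current_replicas * ratio)
    # Enforce the minReplicas/maxReplicas bounds from the HPA spec.
    return max(min_replicas, min(max_replicas, desired))


print(desired_replicas(4, 120, 80))   # 6
print(desired_replicas(4, 84, 80))    # 4 (within the 10% tolerance)
print(desired_replicas(8, 160, 80))   # 10 (capped at maxReplicas)
```

Because utilization is measured against requests, the same rule shows why inflated requests make HPA scale out later than expected.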

Vertical Pod Autoscaling (VPA) Implementation

Vertical Pod Autoscaling (VPA) automatically adjusts the resource requests (CPU and memory) of pods based on their historical and current resource usage. Whereas HPA changes how many pods run, VPA changes how much each pod requests.

Implementing VPA involves these steps:

- Deploy the VPA components: VPA is not built into Kubernetes. Install its recommender, updater, and admission controller from the kubernetes/autoscaler project, for example with the project's `vpa-up.sh` script.

- Create a VPA object: Define a VPA resource (e.g., `vpa.yaml`) that specifies the target deployment or replica set.

```yaml
apiVersion: autoscaling.k8s.io/v1
kind: VerticalPodAutoscaler
metadata:
  name: my-app-vpa
spec:
  targetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: my-app-deployment
  updatePolicy:
    updateMode: "Auto"
```

- Apply the VPA manifest: Use `kubectl apply -f vpa.yaml` to create the VPA resource.

- Monitor and observe resource recommendations: VPA analyzes the resource usage of the pods and produces recommendations for CPU and memory requests. With `updateMode: "Auto"`, VPA applies those recommendations by evicting pods and recreating them with the updated requests, so the workload should tolerate restarts (for example, by running more than one replica). You can view the recommendations with `kubectl describe vpa my-app-vpa`.
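By default, VPA bounds its recommendation with any `minAllowed`/`maxAllowed` values in the VPA's resource policy and scales limits so the original limit-to-request ratio is preserved. The Python below is a simplified model of that behavior for a single resource, not VPA's actual code; the function name and numbers are hypothetical.

```python
def apply_recommendation(orig_request: float, orig_limit: float,
                         recommended: float,
                         min_allowed: float, max_allowed: float) -> tuple[float, float]:
    """Return (new_request, new_limit) in the same unit, e.g. millicores.

    The recommendation is clamped to [min_allowed, max_allowed], and the limit
    is scaled so the original limit-to-request ratio is kept.
    """
    new_request = max(min_allowed, min(max_allowed, recommended))
    ratio = orig_limit / orig_request
    return new_request, new_request * ratio


# A container that started with request 200m and limit 400m (ratio 2.0).
print(apply_recommendation(200, 400, 300, 100, 1000))  # (300, 600.0)
print(apply_recommendation(200, 400, 300, 100, 250))   # (250, 500.0)
```

The second call shows why a tight `maxAllowed` is worth checking: it silently caps the request below what VPA observed the workload needing.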

Integration of Rightsizing Tools with CI/CD Pipelines

Integrating rightsizing tools into CI/CD pipelines automates the process of resource optimization, ensuring that applications are deployed with the correct resource configurations. This integration helps to detect and correct resource inefficiencies early in the development lifecycle.

Here are ways to integrate rightsizing tools into CI/CD pipelines:

- Automated testing: Include resource usage analysis tools (e.g., Prometheus, Grafana) in the CI/CD pipeline to monitor application performance under load tests. These tools can identify resource bottlenecks and provide insights for rightsizing.

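- Automated deployments: Integrate HPA and VPA into the deployment process. When a new version of an application is deployed, the CI/CD pipeline can automatically configure and apply the necessary HPA and VPA settings. Avoid letting HPA and VPA act on the same CPU or memory metric for the same workload, because the two controllers will work against each other.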

- Resource request validation: Use tools that validate resource requests in the CI/CD pipeline before deployment. This can prevent applications from being deployed with inadequate or excessive resource requests. For example, a Kubernetes manifest linter such as kube-score, Polaris, or KubeLinter can flag containers that are missing resource requests or limits.

- Continuous monitoring and feedback: Configure the CI/CD pipeline to continuously monitor the application’s resource usage and provide feedback to the development team. This feedback loop helps to refine resource configurations over time.
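The resource-request check can be as small as a script run in the pipeline. The sketch below validates a container spec already loaded into a Python dict (parsing the YAML itself is left out to stay within the standard library). It handles only common quantity forms such as `250m`, `0.5`, `256Mi`, and `1G`, and its rules (memory limit required, a 4000m CPU ceiling) are a hypothetical policy, not a Kubernetes requirement.

```python
BINARY = {"Ki": 1024, "Mi": 1024 ** 2, "Gi": 1024 ** 3, "Ti": 1024 ** 4}
DECIMAL = {"k": 1000, "M": 1000 ** 2, "G": 1000 ** 3, "T": 1000 ** 4}
MAX_CPU_REQUEST_MILLICORES = 4000  # hypothetical policy ceiling


def parse_cpu(quantity: str) -> float:
    """Millicores from a CPU quantity such as "250m" or "0.5"."""
    if quantity.endswith("m"):
        return float(quantity[:-1])
    return float(quantity) * 1000.0


def parse_memory(quantity: str) -> float:
    """Bytes from a memory quantity such as "256Mi", "1G", or "1048576"."""
    if quantity[-2:] in BINARY:
        return float(quantity[:-2]) * BINARY[quantity[-2:]]
    if quantity[-1:] in DECIMAL:
        return float(quantity[:-1]) * DECIMAL[quantity[-1:]]
    return float(quantity)


def validate_container(container: dict) -> list[str]:
    """Return human-readable policy violations for one container spec."""
    name = container.get("name", "<unnamed>")
    resources = container.get("resources", {})
    requests = resources.get("requests", {})
    limits = resources.get("limits", {})
    problems = []
    for resource in ("cpu", "memory"):
        if resource not in requests:
            problems.append(f"{name}: missing {resource} request")
    if "memory" not in limits:
        problems.append(f"{name}: missing memory limit")
    for resource, parse in (("cpu", parse_cpu), ("memory", parse_memory)):
        if resource in requests and resource in limits:
            if parse(requests[resource]) > parse(limits[resource]):
                problems.append(f"{name}: {resource} request exceeds limit")
    if "cpu" in requests and parse_cpu(requests["cpu"]) > MAX_CPU_REQUEST_MILLICORES:
        problems.append(f"{name}: cpu request above policy ceiling")
    return problems


container = {
    "name": "web",
    "resources": {
        "requests": {"cpu": "250m", "memory": "256Mi"},
        "limits": {"cpu": "200m"},
    },
}
for problem in validate_container(container):
    print(problem)
# web: missing memory limit
# web: cpu request exceeds limit
```

A pipeline step would typically fail the build whenever the returned list is non-empty.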

Rightsizing Strategies for Different Workloads

Rightsizing strategies are not one-size-fits-all. The optimal resource allocation for an application depends heavily on its nature and operational characteristics. Stateless, stateful, and batch workloads each have distinct requirements that necessitate tailored approaches. Understanding these differences is crucial for maximizing resource utilization, minimizing costs, and ensuring optimal performance. This section explores specific rightsizing techniques adapted to each workload type, along with considerations for application architecture.

Rightsizing Stateless Applications

Stateless applications, which do not retain any client-specific information on the server, are generally easier to scale and rightsize. They are often designed to be highly available and can be easily replicated across multiple pods. This characteristic allows for dynamic scaling based on demand.

- Horizontal Pod Autoscaling (HPA): HPA is a key component for rightsizing stateless applications. It automatically adjusts the number of pod replicas based on observed metrics, such as CPU utilization, memory usage, or custom metrics. For example, if CPU utilization exceeds a predefined threshold, HPA will create more pod replicas to handle the increased load. Conversely, if utilization drops, HPA will scale down the number of replicas.

- Resource Requests and Limits: Defining appropriate resource requests and limits for CPU and memory is essential. Over-requesting resources leads to wasted capacity, while under-requesting can cause performance bottlenecks. For instance, a web server that consistently uses 200m CPU can be configured with a request of 250m CPU and a limit of 500m CPU, providing headroom for bursts of traffic.

- Load Testing and Performance Analysis: Regularly performing load tests and analyzing application performance is critical for identifying resource bottlenecks. Tools like Apache JMeter or Locust can simulate user traffic, allowing you to assess the application’s behavior under load and adjust resource allocations accordingly.

- Optimizing Image Size: Smaller container images lead to faster pod startup times. This is particularly important for stateless applications, where rapid scaling is common. Regularly review and optimize Dockerfiles to minimize image size.

Rightsizing Stateful Applications

Stateful applications, such as databases and message queues, require a more cautious approach to rightsizing. Data persistence and consistency are paramount, and scaling operations can be more complex and time-consuming. The core challenge is to balance resource efficiency with data integrity and application availability.

- Persistent Volumes and Storage Considerations: Ensure sufficient storage capacity and I/O performance for persistent volumes. Rightsizing involves matching storage size and performance characteristics (e.g., IOPS) to the application’s data access patterns. For instance, a database requiring high read/write speeds might benefit from SSD-backed storage with provisioned IOPS.

- StatefulSet vs. Deployment: StatefulSets are specifically designed for stateful applications, providing stable network identities and persistent storage. Use StatefulSets instead of Deployments when ordering or uniqueness of pods matters.

- Resource Requests and Limits (with Caution): Carefully define resource requests and limits. Some headroom beyond observed usage is often preferred to avoid performance degradation, but every unused unit of capacity still adds to cost. Monitor resource usage closely and adjust requests and limits gradually.

- Database-Specific Rightsizing: The approach depends heavily on the database technology. For example, with a MySQL database, monitor key metrics like CPU utilization, memory usage, disk I/O, and query performance. Consider scaling the database vertically (increasing the resources of a single pod) or horizontally (adding more database replicas) based on these metrics.

- Backup and Recovery Strategy: Implement a robust backup and recovery strategy to protect against data loss. This is especially critical when scaling down stateful applications, as it allows for the restoration of data if a pod fails or if the scaling operation is problematic.
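Requests and limits also determine a pod's QoS class, which matters for stateful workloads: when every container's CPU and memory requests equal its limits, the pod is classed as Guaranteed and is among the last candidates when a node must reclaim memory. The sketch below approximates the classification rules; it compares quantities as strings, so it assumes they are written consistently (for example, never mixing `0.5` and `500m`), and the function name is hypothetical.

```python
def qos_class(containers: list[dict]) -> str:
    """Approximate a pod's Kubernetes QoS class from its containers' resource specs."""
    any_set = False
    guaranteed = True
    for container in containers:
        resources = container.get("resources", {})
        requests = resources.get("requests", {})
        limits = resources.get("limits", {})
        for name in ("cpu", "memory"):  # only CPU and memory affect QoS
            if name in requests or name in limits:
                any_set = True
            limit = limits.get(name)
            # The API server defaults a missing request to the limit, when one is set.
            request = requests.get(name, limit)
            if limit is None or request != limit:
                guaranteed = False
    if not any_set:
        return "BestEffort"
    return "Guaranteed" if guaranteed else "Burstable"


db = [{"name": "db", "resources": {"requests": {"cpu": "2", "memory": "4Gi"},
                                   "limits": {"cpu": "2", "memory": "4Gi"}}}]
web = [{"name": "web", "resources": {"requests": {"cpu": "250m", "memory": "256Mi"},
                                     "limits": {"cpu": "500m", "memory": "512Mi"}}}]
print(qos_class(db))    # Guaranteed
print(qos_class(web))   # Burstable
print(qos_class([{"name": "scratch"}]))  # BestEffort
```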

Rightsizing Batch Jobs

Batch jobs, designed for executing finite tasks, present unique rightsizing considerations. These jobs often have variable resource requirements depending on the task. Rightsizing involves efficiently allocating resources to complete jobs within acceptable timeframes while minimizing costs.

- Job Scheduling and Resource Allocation: Kubernetes Jobs manage batch tasks. Configure resource requests and limits in each Job's pod template based on its known requirements, and use ResourceQuota and LimitRange objects to keep batch workloads from exhausting namespace resources.

- Parallelism and Concurrency: Leverage parallelism to speed up job execution. A Job's `spec.parallelism` sets how many pods run at once, and `spec.completions` sets how many must finish successfully. Carefully consider the dependencies and resource constraints of the individual tasks when defining the degree of parallelism.

- Job-Specific Metrics: Monitor job completion time, resource utilization (CPU, memory, disk I/O), and any custom metrics relevant to the task. For example, if a batch job processes images, track the number of images processed per second or the average processing time per image.

- Dynamic Resource Allocation: For jobs with variable resource needs, consider using tools or techniques that can dynamically adjust resource requests. This could involve analyzing historical job data to predict resource requirements or using a mechanism to scale the job’s resources based on the workload.

- Cost Optimization: Consider using spot instances or preemptible VMs for batch jobs, as they can significantly reduce costs. Implement mechanisms to gracefully handle job preemption and ensure that jobs can resume from where they left off.
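Choosing `parallelism` is partly arithmetic: the concurrent pods' requests must fit within the namespace quota, and the chosen value bounds how long the Job takes. The sketch below assumes the quota is reserved for this Job alone and that every task takes roughly the same time; the function names and figures are hypothetical.

```python
import math


def max_parallelism(quota_cpu_m: int, quota_mem_mi: int,
                    pod_cpu_m: int, pod_mem_mi: int) -> int:
    """Largest number of concurrent Job pods whose requests fit the quota."""
    return min(quota_cpu_m // pod_cpu_m, quota_mem_mi // pod_mem_mi)


def estimate_runtime_minutes(completions: int, parallelism: int,
                             minutes_per_pod: float) -> float:
    """Rough runtime if pods run in equal-length waves of `parallelism` pods."""
    return math.ceil(completions / parallelism) * minutes_per_pod


# Hypothetical quota of 8 cores / 16 GiB, and pods requesting 1500m / 2 GiB.
p = max_parallelism(8000, 16384, 1500, 2048)
print(p)                                    # 5 (CPU is the binding constraint)
print(estimate_runtime_minutes(12, p, 10))  # 30
```

Lowering each pod's CPU request to match its measured usage would raise the feasible parallelism without changing the quota.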

Continuous Rightsizing and Optimization

Ongoing rightsizing and optimization are essential for maintaining a healthy and cost-effective Kubernetes environment. Kubernetes clusters and the applications they host are dynamic; their resource needs change over time due to factors like traffic patterns, code deployments, and evolving business requirements. A one-time rightsizing effort is insufficient. Continuous monitoring, analysis, and adjustment are crucial to prevent resource waste, ensure optimal performance, and control cloud spending.

This section details the importance of continuous rightsizing and provides a practical framework for its implementation.

Importance of Ongoing Rightsizing Efforts

Continuous rightsizing is not a one-time activity but a cyclical process. It’s driven by the ever-changing nature of applications, user behavior, and infrastructure costs. Regularly reviewing and refining resource allocations offers several key benefits.

- Cost Optimization: By continuously monitoring resource utilization, you can identify and eliminate wasted resources. This includes instances where pods are allocated more CPU or memory than they need, or where nodes are underutilized. Reduced resource consumption translates directly into lower cloud infrastructure costs. For example, a company might see a 15-20% reduction in its Kubernetes infrastructure bill after introducing a continuous rightsizing program, though the actual saving depends on how over-provisioned the cluster was to begin with.

- Performance Improvement: Over-provisioning resources can lead to inefficient use of infrastructure, while under-provisioning can result in performance bottlenecks. Continuous rightsizing ensures that applications have the resources they need to operate efficiently. This can lead to improved response times, higher throughput, and a better user experience.

- Scalability and Availability: Properly sized resources make scaling more predictable. HPA utilization targets are calculated as a percentage of each pod's requests, and node autoscalers add capacity based on the requests of pods that cannot be scheduled, so inaccurate requests distort both. Continuous rightsizing helps ensure that resources are available when and where they are needed, including during sudden traffic surges.

- Proactive Issue Detection: Continuous monitoring and analysis can reveal performance trends and potential problems before they impact users. This allows for proactive adjustments to resource allocations, preventing outages and ensuring a stable environment.

- Adaptability to Change: Applications and workloads evolve over time. Code changes, new features, and changes in user behavior can all impact resource requirements. Continuous rightsizing ensures that the resource allocation adapts to these changes, maintaining optimal performance and cost efficiency.

Workflow for Regularly Reviewing and Refining Resource Allocations

Implementing a robust workflow is critical for ensuring continuous rightsizing. This involves a series of steps that should be executed regularly, typically on a set schedule, such as weekly or monthly. The frequency depends on the volatility of the workload and the rate of change within the application environment.

- Monitoring and Data Collection: The first step is to collect data on resource utilization. This includes metrics such as CPU usage, memory usage, network I/O, and disk I/O for both pods and nodes. Use monitoring tools like Prometheus, Grafana, and the Kubernetes resource metrics API to gather these metrics.

- Analysis and Reporting: Analyze the collected data to identify trends and anomalies. Look for pods and nodes that are consistently over- or under-utilized. Generate reports that summarize resource usage, identify potential optimization opportunities, and highlight performance bottlenecks.

- Resource Adjustment Recommendations: Based on the analysis, recommend adjustments to resource requests and limits for pods, and potentially, changes to node sizes or autoscaling configurations. Consider using automated tools to generate these recommendations, such as those mentioned in the section on automated rightsizing.

- Testing and Validation: Before implementing changes in production, test them in a staging or pre-production environment. This allows you to validate the impact of the changes on performance and identify any unforeseen issues. Use load testing tools to simulate production traffic and measure the application’s response times, throughput, and error rates.

- Implementation and Rollout: Implement the recommended changes in a controlled manner. Start with a small subset of pods or nodes and gradually roll out the changes across the entire environment. Monitor the impact of the changes and be prepared to roll back if necessary.

- Feedback and Iteration: Continuously monitor the performance and cost after implementing the changes. Collect feedback from application owners and users. Iterate on the process by refining the analysis, recommendations, and implementation steps based on the observed results.

Process for Documenting Rightsizing Changes and Their Impact

Maintaining thorough documentation is crucial for tracking rightsizing efforts and their effectiveness. This documentation serves as a historical record, providing valuable insights into the performance and cost impacts of the changes made. It also facilitates knowledge sharing and helps in the continuous improvement of the rightsizing process.

- Baseline Documentation: Before making any changes, establish a baseline. Document the current resource requests and limits for all pods, the node sizes and configurations, and the associated costs. Include performance metrics such as average response times, throughput, and error rates.

- Change Log: Maintain a detailed change log that records all rightsizing actions taken. For each change, document the following:

- Date and time of the change

- Description of the change (e.g., increased CPU request for a specific pod)

- Reason for the change (e.g., based on monitoring data)

- Affected pods or nodes

- Tools or methods used to implement the change

- Impact Analysis: After implementing each change, analyze its impact on performance and cost. Document the following:

- Changes in resource utilization (e.g., CPU usage, memory usage)

- Changes in performance metrics (e.g., response times, throughput, error rates)

- Changes in cost (e.g., cloud infrastructure costs)

- Any observed side effects or unexpected outcomes

- Reporting and Review: Regularly generate reports that summarize the rightsizing activities and their impact. Review these reports with stakeholders, including application owners, DevOps engineers, and finance teams. This ensures transparency, facilitates collaboration, and helps in making informed decisions.

- Continuous Improvement: Use the documentation to identify areas for improvement in the rightsizing process. Analyze the effectiveness of different strategies and tools. Refine the workflow and documentation based on the insights gained from the historical data.
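A change log is easier to analyze when each entry has a fixed structure. The sketch below models one entry with the fields listed above and computes the percent change for every metric recorded both before and after the change. The class name, metric keys, date, and values are hypothetical, chosen only to illustrate the impact calculation.

```python
from dataclasses import dataclass, field
from datetime import datetime


@dataclass
class RightsizingChange:
    timestamp: datetime
    description: str
    reason: str
    affected: list[str]
    method: str
    before: dict[str, float] = field(default_factory=dict)
    after: dict[str, float] = field(default_factory=dict)

    def impact(self) -> dict[str, float]:
        """Percent change (rounded to 2 places) for each metric present before and after."""
        return {
            key: round((self.after[key] - self.before[key]) / self.before[key] * 100.0, 2)
            for key in self.before
            if key in self.after and self.before[key] != 0
        }


change = RightsizingChange(
    timestamp=datetime(2024, 5, 1, 9, 30),
    description="Reduced CPU request for web pods from 1000m to 300m",
    reason="p95 usage of about 260m over two weeks of monitoring data",
    affected=["deployment/web"],
    method="VPA recommendation, applied via CI/CD",
    before={"cpu_request_m": 1000, "p95_latency_ms": 120, "monthly_cost_usd": 400},
    after={"cpu_request_m": 300, "p95_latency_ms": 126, "monthly_cost_usd": 250},
)
print(change.impact())
# {'cpu_request_m': -70.0, 'p95_latency_ms': 5.0, 'monthly_cost_usd': -37.5}
```

Recording latency alongside cost in the same entry makes trade-offs visible: here a large cost reduction came with a small latency increase that reviewers can judge explicitly.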

Final Summary

In conclusion, mastering Kubernetes rightsizing for pods and nodes is essential for anyone managing a Kubernetes cluster. By understanding the core principles, using monitoring tools, and implementing automated solutions, you can build a more efficient and cost-effective infrastructure. Remember that rightsizing is not a one-time task but a continuous process of monitoring, evaluation, and adjustment. A proactive approach to rightsizing keeps your Kubernetes environment optimized, scalable, and aligned with your evolving application needs.

Question Bank

What is the primary benefit of Kubernetes rightsizing?

The primary benefit is improved resource utilization, which leads to reduced infrastructure costs and enhanced application performance by avoiding bottlenecks and ensuring optimal resource allocation.

How often should I review my rightsizing configurations?

It’s recommended to review your rightsizing configurations regularly, ideally on a weekly or monthly basis, and more frequently if your workload patterns change significantly. Continuous monitoring is crucial.

What are some common tools for monitoring resource utilization in Kubernetes?

Popular monitoring tools include Prometheus, Grafana, and Kubernetes Dashboard, which provide insights into CPU, memory, storage, and network usage at both the pod and node levels.

What is the difference between Horizontal Pod Autoscaling (HPA) and Vertical Pod Autoscaling (VPA)?

HPA automatically scales the number of pods based on observed metrics (e.g., CPU utilization), while VPA automatically adjusts the resource requests and limits of individual pods.