Embark on a journey to understand Kubernetes, the powerful orchestration platform that has revolutionized application deployment and management. In today’s dynamic digital landscape, efficiently managing applications is paramount. Kubernetes, often abbreviated as K8s, offers a robust solution, enabling organizations to streamline their operations and unlock new levels of agility.

This guide will delve into the core functionalities of Kubernetes, from its fundamental purpose and architecture to its practical applications in modern software development. We’ll explore essential concepts like Pods, Nodes, and Clusters, along with deployment strategies, scaling techniques, and configuration management. Whether you’re a seasoned developer or new to container orchestration, this guide will provide valuable insights into leveraging Kubernetes for your projects.

Introduction to Kubernetes

Kubernetes, often shortened to K8s, is a powerful open-source system designed to automate the deployment, scaling, and management of containerized applications. Think of it as a sophisticated orchestra conductor for your software, ensuring all the different parts work together seamlessly and efficiently. This section will delve into the core purpose, definition, and the problems Kubernetes solves in the modern application landscape.

Fundamental Purpose of Kubernetes

The primary function of Kubernetes is to simplify and streamline the process of managing containerized applications. It takes care of the complexities of running these applications, allowing developers to focus on writing code rather than worrying about infrastructure. Kubernetes provides a consistent and automated way to deploy, scale, and operate applications across various environments, from your laptop to a public cloud.

Definition of Kubernetes

Kubernetes is an open-source container orchestration platform. Its core function is to automate the deployment, scaling, and management of containerized applications. It groups containers that make up an application into logical units for easy management and discovery. Kubernetes provides services for service discovery, load balancing, automated rollouts and rollbacks, and self-healing.

Problems Kubernetes Solves in Modern Application Deployment and Management

Deploying and operating modern applications raises several recurring challenges. Kubernetes addresses them through automation and orchestration by:

- Automating Deployment and Scaling: Kubernetes automates the deployment of applications, making it easier and faster to get applications up and running. It also allows for automatic scaling, increasing or decreasing the number of application instances based on demand.

- Managing Containerized Applications: Kubernetes is designed to manage containerized applications, which are packages of software that include everything an application needs to run, such as code, runtime, system tools, system libraries and settings. This makes applications portable and consistent across different environments.

- Ensuring High Availability: Kubernetes ensures high availability by automatically restarting failed containers and distributing application instances across multiple nodes in a cluster. If a container fails, Kubernetes will automatically restart it, minimizing downtime.

- Facilitating Service Discovery and Load Balancing: Kubernetes provides built-in service discovery and load balancing, allowing applications to communicate with each other easily and ensuring that traffic is distributed evenly across application instances. This is achieved through the use of Services, which act as an abstraction layer over the underlying application instances.

- Simplifying Rollouts and Rollbacks: Kubernetes simplifies the process of rolling out new versions of applications and rolling back to previous versions if necessary. This minimizes the risk of downtime and allows for quick recovery from issues. The rollout process can be controlled, allowing for gradual updates to minimize impact.

Kubernetes helps organizations achieve greater agility, efficiency, and scalability in their application deployments. For example, companies like Spotify and Airbnb have successfully used Kubernetes to manage their large-scale, containerized applications, enabling them to scale their services to meet growing user demands. These organizations have benefited from faster deployments, reduced operational overhead, and improved resource utilization.

Core Concepts

Now that we’ve established what Kubernetes is and its introductory aspects, let’s delve into the core components that make it function. Understanding these elements is crucial for grasping how Kubernetes orchestrates containerized applications effectively.

Pods

Pods represent the smallest deployable units in Kubernetes. They are designed to run one or more tightly coupled containers. A Pod encapsulates:

- One or more application containers: These containers share resources, such as storage and network, and are always co-located and co-scheduled.

- Storage resources: Volumes are attached to the Pod, allowing data to persist beyond the container’s lifecycle.

- A unique network IP address: Each Pod is assigned its own IP address, enabling communication with other Pods and services within the cluster.

- Configuration information: Metadata about the Pod, such as labels and annotations, which provide organizational and operational details.

Consider a scenario where you have a web application running in a container and a sidecar container for logging. These two containers, which form a single logical unit, would be packaged together within a single Pod. The web application container serves HTTP requests, while the sidecar container collects logs and forwards them to a centralized logging system. This architecture ensures that both components are managed and scaled together, promoting operational consistency.
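The sidecar scenario above can be sketched as a single Pod manifest. This is a minimal illustration, not a production setup: the Pod name, the images, the shared `emptyDir` volume, and the `tail` command standing in for a real log shipper are all assumptions chosen for the example.

```yaml
# Illustrative Pod: a web container plus a log-reading sidecar.
# Names, images, and paths are placeholders chosen for this sketch.
apiVersion: v1
kind: Pod
metadata:
  name: web-with-logging
  labels:
    app: web
spec:
  volumes:
    - name: app-logs
      emptyDir: {}              # scratch volume shared by both containers
  containers:
    - name: web
      image: nginx:1.27
      ports:
        - containerPort: 80
      volumeMounts:
        - name: app-logs
          mountPath: /var/log/nginx
    - name: log-forwarder
      image: busybox:1.36
      # Stand-in for a real log shipper: follows the shared access log.
      command: ["sh", "-c", "tail -F /var/log/nginx/access.log"]
      volumeMounts:
        - name: app-logs
          mountPath: /var/log/nginx
          readOnly: true
```

Because both containers live in one Pod, they are scheduled onto the same Node, share the volume, and are scaled as a unit.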

Nodes

Nodes are the worker machines in a Kubernetes cluster. They can be either physical or virtual machines. Nodes are responsible for running the Pods. Each Node:

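- Runs a container runtime: such as containerd or CRI-O, to execute the containers within the Pods. Docker Engine can still be used, but since Kubernetes 1.24 it requires the separate cri-dockerd adapter.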

- Includes the kubelet: an agent that manages the Pods on the node, communicating with the Kubernetes control plane.

- Contains the kube-proxy: which maintains network rules on the node and facilitates network communication.

- Has resources: CPU, memory, and storage that are allocated to the Pods running on it.

Nodes are crucial for Kubernetes operations, acting as the infrastructure where the applications are deployed. The Kubernetes scheduler determines which Node is best suited for a Pod based on resource availability and other constraints. If a Node fails, Pods that are managed by a controller (such as a Deployment) are recreated on healthy Nodes, which preserves availability; standalone Pods created without a controller are not replaced.

Clusters

A Kubernetes Cluster is the core structure, composed of a set of Nodes that run containerized applications. It’s the operational environment where Pods are deployed, managed, and scaled. A Kubernetes Cluster comprises:

- A control plane: Responsible for managing the cluster. It includes components like the API server, scheduler, controller manager, and etcd (a distributed key-value store).

- Worker nodes: Where the Pods run. These nodes are managed by the control plane.

- Networking: Kubernetes provides a networking model that allows Pods to communicate with each other and with services.

The control plane manages the state of the cluster and makes decisions about how to deploy and run applications. The worker nodes execute the applications. The cluster’s architecture allows for automatic scaling, self-healing, and updates without downtime. For example, when a service needs more capacity, a HorizontalPodAutoscaler can raise the desired replica count, and the scheduler then places the additional Pods on Nodes with free resources. This scaling capability helps applications remain responsive and available even during peak demand.
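Scaling out under load is typically declared with a HorizontalPodAutoscaler. The manifest below is a sketch under stated assumptions: a Deployment named `web` already exists, its containers declare CPU requests, and a metrics source such as metrics-server is installed in the cluster. The replica bounds and the 70% target are arbitrary example values.

```yaml
# Illustrative autoscaler; the target Deployment "web" is hypothetical.
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: web-hpa
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: web
  minReplicas: 2
  maxReplicas: 10
  metrics:
    - type: Resource
      resource:
        name: cpu
        target:
          type: Utilization
          averageUtilization: 70   # percent of each Pod's CPU request
```

The autoscaler only changes the replica count; placing the resulting Pods on Nodes remains the scheduler's job.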

Deployment Strategies and Management

Kubernetes offers a robust set of tools for managing the lifecycle of applications, including how they are deployed and updated. Effective deployment strategies are crucial for minimizing downtime, ensuring application stability, and enabling rapid iteration. These strategies provide mechanisms to update applications without disrupting service, allowing for seamless transitions and controlled rollouts.

Deployment Strategies

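Several deployment strategies can be used with Kubernetes, each with its own characteristics, advantages, and disadvantages. Only Rolling Update and Recreate are built into the Deployment resource (selected through `spec.strategy.type`); Blue/Green and Canary are patterns assembled from Deployments, Services, and labels, often with additional traffic-management tooling. Choosing the right strategy depends on the specific requirements of the application, the desired level of risk tolerance, and the operational constraints.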

- Rolling Updates: This is the default and most common deployment strategy. It updates pods one at a time, or in batches, while ensuring that a minimum number of pods are always available to serve traffic. This approach allows for zero-downtime deployments.

- Blue/Green Deployments: This strategy involves running two identical environments: the “blue” environment, which serves live traffic, and the “green” environment, which is the new version. Once the green environment is tested and ready, traffic is switched over to it. The blue environment can then be decommissioned. This method minimizes downtime and provides an easy rollback option.

- Canary Deployments: This strategy involves releasing a new version of an application to a small subset of users or traffic. This allows for testing the new version in a production environment with minimal risk. If the canary deployment performs well, the traffic can be gradually increased until all users are using the new version.

- Recreate Deployments: This is the simplest strategy, where all existing pods are terminated, and new pods are created. This results in a brief downtime and is generally not recommended for production environments.
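One common way to approximate a Blue/Green switch with plain Kubernetes objects is to run two Deployments whose Pods carry different `version` labels and let a single Service select one of them. The sketch below shows only the Service; the `my-app` name, the `version` label key, and the port numbers are assumptions for illustration.

```yaml
# Illustrative Service for a blue/green cut-over.
# Assumes two Deployments exist whose Pods are labelled
# app=my-app,version=blue and app=my-app,version=green.
apiVersion: v1
kind: Service
metadata:
  name: my-app
spec:
  selector:
    app: my-app
    version: blue      # change to "green" to switch traffic; revert to roll back
  ports:
    - port: 80
      targetPort: 8080
```

Re-applying the Service with the other label value moves all traffic at once, which is what makes rollback fast but also why both environments must be fully provisioned.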

Advantages and Disadvantages of Different Deployment Methods

Each deployment method presents a unique set of trade-offs in terms of complexity, risk, and operational overhead. Understanding these trade-offs is crucial for selecting the optimal strategy.

- Rolling Updates:

  - Advantages: Zero-downtime deployments and a gradual rollout. A rollout whose new pods never become ready stalls instead of replacing healthy pods, and it can be reverted with `kubectl rollout undo`.

  - Disadvantages: Old and new versions run side by side during the update, so version incompatibilities can surface; requires careful configuration of readiness and liveness probes.

- Blue/Green Deployments:

  - Advantages: Minimal downtime, fast rollback capabilities, clear separation between versions.

  - Disadvantages: Requires roughly twice the infrastructure while both environments run, more complex setup, potential for data synchronization issues if the new version has schema changes.

- Canary Deployments:

  - Advantages: Reduced risk of major failures, allows for testing in production with a small user base, provides early feedback.

  - Disadvantages: Requires sophisticated traffic management and monitoring tools, can be complex to set up, requires careful analysis of metrics to determine success.

- Recreate Deployments:

  - Advantages: Simple to implement.

  - Disadvantages: Downtime during the update, not suitable for production environments with high availability requirements.

Implementing a Basic Rolling Update Deployment

Rolling updates are a core feature of Kubernetes deployments, offering a straightforward way to update applications with minimal disruption. This section provides a step-by-step guide for implementing a basic Rolling Update deployment.

- Define a Deployment: Begin by creating a deployment manifest file (e.g., `deployment.yaml`). This file specifies the desired state of your application, including the image, number of replicas, and other configuration parameters.

- Apply the Deployment: Use the `kubectl apply -f deployment.yaml` command to create or update the deployment in your Kubernetes cluster.

- Update the Image: Modify the image tag in your deployment manifest to point to the new version of your application. For example, change `image: my-app:1.0` to `image: my-app:1.1`.

- Apply the Updated Deployment: Apply the updated deployment manifest using `kubectl apply -f deployment.yaml`. Kubernetes will automatically orchestrate the rolling update, creating new pods with the updated image and gradually replacing the old pods.

- Monitor the Update: Use `kubectl get deployments` and `kubectl get pods` to monitor the progress of the rolling update. Observe the status of the pods and ensure that the new pods are becoming ready before the old pods are terminated.

- Verify the Update: Once the rolling update is complete, verify that the application is functioning correctly by accessing it and checking for any issues.

- Rollback (if necessary): If the update introduces problems, use `kubectl rollout undo deployment/<deployment-name>` to roll back to the previous version.
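The steps above can be sketched with a manifest like the following. It is a minimal example rather than a recommended configuration: the `my-app` names reuse the image tag from the steps, while the replica count, port `8080`, the `/healthz` path, and the surge settings are assumptions for illustration.

```yaml
# deployment.yaml (illustrative)
apiVersion: apps/v1
kind: Deployment
metadata:
  name: my-app
spec:
  replicas: 3
  selector:
    matchLabels:
      app: my-app
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxSurge: 1          # at most one extra Pod above the desired count
      maxUnavailable: 0    # never drop below the desired count
  template:
    metadata:
      labels:
        app: my-app
    spec:
      containers:
        - name: my-app
          image: my-app:1.0        # change to my-app:1.1 and re-apply to roll out
          ports:
            - containerPort: 8080  # assumed application port
          readinessProbe:          # an old Pod is removed only after a new one is ready
            httpGet:
              path: /healthz       # assumed health endpoint
              port: 8080
            initialDelaySeconds: 5
            periodSeconds: 10
```

With `maxUnavailable: 0`, Kubernetes waits for each new Pod to pass its readiness probe before terminating an old one, which is why the probe matters for a safe rollout.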

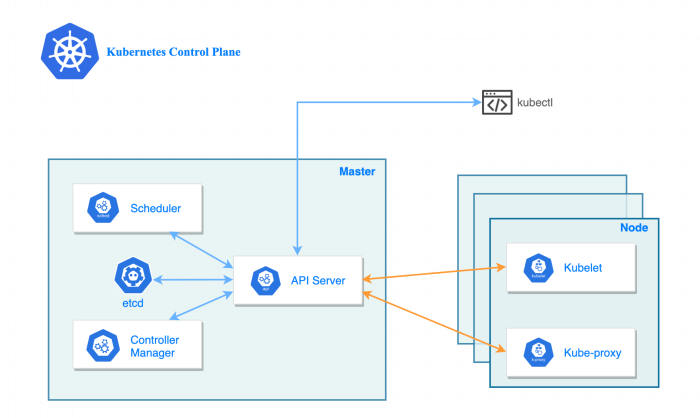

Kubernetes Architecture

Understanding the architecture of Kubernetes is crucial for effectively managing and operating containerized applications. Kubernetes separates a control plane, which acts as the brain and decides what should run where, from a data plane of worker nodes that carries out those decisions. This division of responsibilities allows for a robust and scalable system.

Control Plane Components

The Control Plane is the central management unit of Kubernetes, responsible for making global decisions about the cluster. It monitors and responds to cluster events, ensuring the desired state of the system is maintained. Several key components comprise the Control Plane.

- API Server: The API Server is the front-end for the Kubernetes control plane. It exposes the Kubernetes API, allowing users and other components to interact with the cluster. All operations, such as creating, updating, and deleting resources, are initiated through the API Server. It validates and processes requests, storing data in etcd.

- Scheduler: The Scheduler is responsible for assigning Pods to Nodes. It considers factors such as resource availability (CPU, memory), node affinity, and taints/tolerations when making scheduling decisions. The scheduler ensures that Pods are placed on the most appropriate nodes to optimize resource utilization and application performance.

- Controller Manager: The Controller Manager runs various controllers that watch the state of the cluster and make changes to bring the cluster to the desired state. It encompasses several controllers, each responsible for a specific aspect of the system’s functionality. Examples include:

  - Node Controller: Monitors the health of nodes and responds to node failures.

  - Replication Controller: Ensures the desired number of Pod replicas are running.

  - Deployment Controller: Manages deployments and rollouts.

  - Service Account & Token Controllers: Manage service accounts and API tokens.

- etcd: etcd is a distributed key-value store that serves as the source of truth for all cluster data. It stores the configuration data, state data, and other essential information required for the operation of the cluster. etcd provides a reliable and consistent storage solution for the control plane.
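The scheduling inputs mentioned for the Scheduler (resource requests, node affinity, and tolerations) are all declared on the Pod itself. The manifest below is a sketch; the `disktype` node label and the `dedicated=batch` taint are hypothetical and would need to exist on the Nodes for the Pod to be placed.

```yaml
# Illustrative Pod showing the fields the scheduler evaluates.
apiVersion: v1
kind: Pod
metadata:
  name: scheduling-demo
spec:
  affinity:
    nodeAffinity:
      requiredDuringSchedulingIgnoredDuringExecution:
        nodeSelectorTerms:
          - matchExpressions:
              - key: disktype        # hypothetical node label
                operator: In
                values: ["ssd"]
  tolerations:
    - key: dedicated                 # hypothetical taint key
      operator: Equal
      value: batch
      effect: NoSchedule
  containers:
    - name: app
      image: nginx:1.27
      resources:
        requests:
          cpu: "500m"                # only Nodes with this much free CPU qualify
          memory: "256Mi"
```

If no Node satisfies all three constraints, the Pod stays in the `Pending` state until one does.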

Data Plane Components

The Data Plane, also known as the worker nodes, is where the actual workload runs. Each worker node has several components that work together to execute the Pods and services deployed in the cluster.

- kubelet: The kubelet is an agent that runs on each node in the cluster. It is responsible for managing the Pods on its node. The kubelet communicates with the API Server to receive instructions and ensures that the containers in each Pod are running and healthy. It also reports the node’s status back to the API Server.

- kube-proxy: The kube-proxy is a network proxy that runs on each node. It maintains the network rules that implement Services, so that traffic sent to a Service address reaches one of its backing Pods. On Linux it commonly runs in iptables or IPVS mode, each with its own performance characteristics; the older userspace mode has been removed in recent releases. kube-proxy ensures that services are reachable within the cluster and, for externally exposed service types, from outside.

- Container Runtime: The Container Runtime is responsible for running containers. Kubernetes works with any runtime that implements the Container Runtime Interface (CRI), such as containerd and CRI-O; Docker Engine is supported through the cri-dockerd adapter. The Container Runtime manages the lifecycle of the containers, including creating, starting, stopping, and deleting them.

Interaction between Control Plane and Data Plane

The interaction between the Control Plane and the Data Plane is a continuous cycle of monitoring, decision-making, and execution. The following diagram illustrates this interaction.

Diagram Description: The diagram depicts the interaction between the Kubernetes Control Plane and the Data Plane. The Control Plane, at the top, includes the API Server, Scheduler, and Controller Manager. The Data Plane, represented by a Node, is at the bottom and contains the kubelet, kube-proxy, and Container Runtime. Arrows show the flow of information and control. The API Server acts as the central point of communication, receiving requests from users and other components and storing data in etcd.

The Scheduler assigns Pods to Nodes based on resource availability. The Controller Manager monitors the cluster’s state and makes changes to maintain the desired state. The kubelet on each Node receives instructions from the API Server, manages Pods, and interacts with the Container Runtime. The kube-proxy handles network traffic, routing requests to the appropriate Pods. The Container Runtime is responsible for running the containers.

- User Interaction: Users or other components interact with the cluster through the API Server.

- API Server Processing: The API Server validates and processes requests, storing data in etcd.

- Scheduling: The Scheduler assigns Pods to Nodes based on resource availability and other constraints.

- Controller Manager Actions: The Controller Manager continuously monitors the cluster’s state and takes actions to reconcile the actual state with the desired state.

- kubelet Execution: The kubelet on each node receives instructions from the API Server and interacts with the Container Runtime to create and manage Pods.

- Networking: kube-proxy configures network rules to enable communication between Pods and services.

Services and Networking in Kubernetes

Kubernetes’ networking capabilities are crucial for enabling communication between different components of an application and for exposing applications to the outside world. Services, Ingress, and Network Policies work together to provide a robust and flexible networking infrastructure. Understanding these concepts is fundamental for effectively deploying and managing applications in Kubernetes.

Kubernetes Services: Stable Endpoints for Pod Access

Services in Kubernetes act as an abstraction layer over a set of Pods. They provide a stable IP address and DNS name, even if the underlying Pods are created, destroyed, or scaled. This abstraction is critical for application availability and resilience. Services achieve this through several mechanisms:

- Service Types: Kubernetes offers several service types, each with different characteristics:

  - ClusterIP: The default service type. It exposes the service on a cluster-internal IP. This makes the service accessible only from within the cluster.

  - NodePort: Exposes the service on each Node’s IP address at a static port. This allows external access to the service.

  - LoadBalancer: Provisions a cloud provider’s load balancer, which exposes the service externally. This is often the preferred method for external access in cloud environments.

  - ExternalName: Maps the service to the externalName field by returning a CNAME record with the value of the externalName. No proxying of any kind is set up. This is used to access services outside of the cluster.

- Selectors: Services use label selectors to identify the Pods they should target. Labels are key-value pairs attached to Pods, and the Service selects every Pod whose labels match its selector. When a request is made to the Service, it is routed to one of the ready Pods matching the selector.

- Endpoints: The service controller automatically creates and updates endpoints, which are the actual IP addresses and ports of the Pods that the service is routing traffic to.

For example, consider a web application consisting of multiple Pods running a frontend and a backend. A ClusterIP service can be used to expose the backend Pods internally to the frontend Pods. A LoadBalancer service can be used to expose the frontend Pods externally to the internet. This allows the application to be accessed without needing to know the specific IP addresses of the backend or frontend Pods.

If a backend Pod fails, the service automatically routes traffic to a healthy Pod.
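The frontend and backend example can be sketched as two Service manifests. The names, labels, and port numbers are assumptions for illustration, and the `LoadBalancer` type only yields an external address on platforms that provide a load balancer integration.

```yaml
# Internal-only Service for the backend Pods (illustrative names and ports).
apiVersion: v1
kind: Service
metadata:
  name: backend
spec:
  type: ClusterIP
  selector:
    app: backend          # matches Pods labelled app=backend
  ports:
    - port: 8080
      targetPort: 8080
---
# Externally reachable Service for the frontend Pods.
apiVersion: v1
kind: Service
metadata:
  name: frontend
spec:
  type: LoadBalancer
  selector:
    app: frontend
  ports:
    - port: 80            # port exposed by the load balancer
      targetPort: 3000    # assumed port the frontend container listens on
```

The frontend can then reach the backend at `http://backend:8080` from within the same namespace, regardless of which backend Pods are currently running.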

Kubernetes Networking Concepts: Ingress and Network Policies

Beyond Services, Kubernetes provides additional networking resources for more advanced control and access management. Ingress and Network Policies play important roles in this.

- Ingress: Ingress is an API object that manages external access to the services in a cluster, typically HTTP(S) traffic. The rules it declares are carried out by an Ingress controller acting as a reverse proxy, and cover features like:

  - Host-based routing: Directing traffic to different services based on the hostname in the HTTP request.

  - Path-based routing: Directing traffic to different services based on the URL path in the HTTP request.

  - SSL/TLS termination: Handling SSL/TLS encryption for secure communication.

- Network Policies: Network Policies control the traffic flow at the IP address or port level (OSI layer 3 or 4). They define rules that specify which Pods can communicate with each other and with other network endpoints. Network Policies enhance security by:

  - Isolating Pods: Restricting communication to only authorized Pods.

  - Segmenting the network: Dividing the cluster into logical network segments to control traffic flow.

  - Enforcing security policies: Controlling which Pods can communicate with external networks.

Ingress controllers are responsible for implementing the Ingress resource. They monitor the Kubernetes API for Ingress resources and configure a reverse proxy (e.g., Nginx, HAProxy) to route traffic accordingly. Network Policies require a network plugin that supports them, such as Calico, Cilium, or Weave Net.
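A rough sketch of both resources follows. It assumes an Ingress controller registered under the class name `nginx`, a TLS Secret called `shop-tls`, and Services named `frontend` and `backend`; the hostname, labels, and ports are likewise placeholders.

```yaml
# Illustrative Ingress: host- and path-based routing with TLS termination.
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: shop-ingress
spec:
  ingressClassName: nginx            # assumed controller class
  tls:
    - hosts: ["shop.example.com"]
      secretName: shop-tls           # assumed Secret holding the certificate
  rules:
    - host: shop.example.com
      http:
        paths:
          - path: /api
            pathType: Prefix
            backend:
              service:
                name: backend
                port:
                  number: 8080
          - path: /
            pathType: Prefix
            backend:
              service:
                name: frontend
                port:
                  number: 80
---
# Illustrative NetworkPolicy: only frontend Pods may reach backend Pods.
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: backend-allow-frontend
spec:
  podSelector:
    matchLabels:
      app: backend
  policyTypes: ["Ingress"]
  ingress:
    - from:
        - podSelector:
            matchLabels:
              app: frontend
      ports:
        - protocol: TCP
          port: 8080
```

Once a policy selects the backend Pods, any inbound traffic not explicitly allowed by a policy is dropped, provided the cluster's network plugin enforces Network Policies.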

Communication Facilitation Between Application Parts within a Cluster

Services are fundamental for enabling communication within a Kubernetes cluster. They provide a stable and discoverable way for different parts of an application to interact. Consider a microservices architecture where different services, such as authentication, order processing, and payment processing, are deployed as Pods. Services enable the following:

- Service Discovery: Pods can discover each other using the DNS name of the Service. For example, a frontend Pod can access a backend service by using the service name (e.g., `backend-service.default.svc.cluster.local`).

- Load Balancing: Services automatically load balance traffic across the Pods that match their selector. This ensures high availability and efficient resource utilization.

- Decoupling: Services decouple the frontend from the backend by providing a stable interface. If the backend Pods are updated or scaled, the frontend Pods do not need to be modified, as they will continue to access the backend through the service.

- Simplified Configuration: Services simplify the configuration of network communication. Instead of hardcoding IP addresses, developers can use service names, making deployments more manageable and less prone to errors.

For example, imagine an e-commerce application. The frontend service, which handles user interaction, needs to communicate with the product catalog service. The frontend Pods can use the product catalog service’s DNS name to access it. The product catalog service then routes the traffic to the underlying Pods. If a new Pod is added behind the product catalog service, it starts receiving traffic as soon as it is ready, without any manual configuration changes on the frontend.

This dynamic nature is essential for scalability and resilience.
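In practice, a client is usually pointed at a Service by name through configuration rather than by IP address. The fragment below is a sketch: the `product-catalog` Service, its port, the environment variable name, and the image placeholder are all hypothetical.

```yaml
# Illustrative frontend Deployment that addresses the catalog by Service DNS name.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: frontend
spec:
  replicas: 2
  selector:
    matchLabels:
      app: frontend
  template:
    metadata:
      labels:
        app: frontend
    spec:
      containers:
        - name: frontend
          image: "<your-registry>/frontend:1.0"   # placeholder image reference
          env:
            - name: PRODUCT_CATALOG_URL           # hypothetical variable read by the app
              value: "http://product-catalog.default.svc.cluster.local:8080"
```

Because the value is a DNS name, the catalog Pods can be replaced or scaled without touching this manifest.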

Containerization with Kubernetes

Kubernetes is fundamentally intertwined with containerization, acting as its primary orchestrator. Understanding this relationship is key to grasping how Kubernetes functions and the benefits it provides. Kubernetes leverages containerization technologies, such as Docker, to package, deploy, and manage applications in a consistent and scalable manner. This section will explore the symbiotic relationship between Kubernetes and containerization, demonstrating how Kubernetes orchestrates containers and providing a practical example of deploying a containerized application.

Kubernetes and Containerization Technologies

Kubernetes works hand-in-hand with containerization technologies to manage and deploy applications. Containerization provides a lightweight and portable way to package applications and their dependencies, while Kubernetes automates the deployment, scaling, and management of these containerized applications.

The most common containerization technology used with Kubernetes is Docker. Docker allows developers to package applications and their dependencies into self-contained units called containers. These containers can then be easily deployed and run on any system that has a container runtime, like Docker Engine, installed.

Kubernetes orchestrates containers built from these images, managing their lifecycle, ensuring their availability, and scaling them based on demand. Inside a cluster the containers are typically run by a runtime such as containerd or CRI-O, both of which run standard images built with Docker.

Kubernetes’ role in containerization can be summarized as follows:

- Container Image Management: Kubernetes uses container images (typically built with Dockerfiles) to create and run containers. These images contain the application code, runtime, system tools, and libraries needed to run the application.

- Container Orchestration: Kubernetes orchestrates the deployment, scaling, and management of these containerized applications. This includes scheduling containers on nodes, managing their networking, and ensuring their health.

- Resource Allocation: Kubernetes manages the allocation of resources (CPU, memory, etc.) to containers, ensuring that applications have the resources they need to run effectively.

- Service Discovery: Kubernetes provides service discovery, allowing containers to communicate with each other and with external services.

- Health Monitoring: Kubernetes monitors the health of containers and automatically restarts or replaces unhealthy containers.

Orchestrating Containers with Kubernetes

Kubernetes orchestrates containers through a declarative approach. Instead of specifying *how* to run containers, you define the desired state of your application, and Kubernetes works to achieve that state. This declarative approach simplifies deployment and management, allowing for automation and scalability. Here’s a breakdown of how Kubernetes orchestrates containers:

- Pods: The fundamental building block in Kubernetes is the Pod. A Pod represents a single instance of your application and can contain one or more containers that share resources and networking.

- Deployments: Deployments manage the desired state of your application. They define the number of Pod replicas to run, the container image to use, and other configuration details.

- Controllers: Kubernetes controllers constantly monitor the state of the system and reconcile it with the desired state defined in the Deployment. For example, the ReplicaSet controller ensures that the specified number of Pod replicas are running.

- Scheduling: The Kubernetes scheduler assigns Pods to nodes (physical or virtual machines) based on resource availability and other constraints.

- Networking: Kubernetes provides networking capabilities that allow containers to communicate with each other and with external services. This includes service discovery, load balancing, and network policies.

- Health Checks: Kubernetes uses health checks to monitor the health of containers. If a container fails a health check, Kubernetes will automatically restart or replace it.

The orchestration process can be visualized as follows:

Image Description: A diagram illustrating the orchestration process. At the top, a “Deployment” object is shown, containing specifications for the desired application state, including the number of replicas and container image. The Deployment interacts with a “ReplicaSet” controller, which ensures the specified number of Pods are running. The “Scheduler” assigns Pods to available nodes.

The “Kubelet” on each node manages the Pods running on that node, pulling container images and starting containers. The “kube-proxy” provides networking services, allowing Pods to communicate with each other and with external services. The entire process is managed by the Kubernetes control plane.
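The health checks described above are configured as probes on each container, and the kubelet runs them. The Pod below is a minimal sketch; the probe paths, timings, and thresholds are assumed values, not recommendations.

```yaml
# Illustrative Pod with liveness and readiness probes.
apiVersion: v1
kind: Pod
metadata:
  name: probe-demo
  labels:
    app: web
spec:
  containers:
    - name: web
      image: nginx:1.27
      ports:
        - containerPort: 80
      livenessProbe:             # repeated failures cause the container to be restarted
        httpGet:
          path: /
          port: 80
        initialDelaySeconds: 10
        periodSeconds: 10
        failureThreshold: 3
      readinessProbe:            # failures remove the Pod from Service endpoints
        httpGet:
          path: /
          port: 80
        periodSeconds: 5
```

The two probes serve different purposes: liveness decides whether to restart a container, while readiness decides whether the Pod should receive traffic.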

Deploying a Containerized Application on Kubernetes: A Simple Example

Let’s create a simple example of deploying a containerized application on Kubernetes. This example will use a basic “Hello, World!” web application built with Node.js and packaged in a Docker container.

First, we’ll create a Dockerfile to build the container image:

```dockerfile
FROM node:16
WORKDIR /app
COPY package*.json ./
RUN npm install
COPY . .
EXPOSE 8080
CMD ["node", "index.js"]
```

This Dockerfile defines a Node.js application, copies necessary files, installs dependencies, exposes port 8080, and specifies the command to run the application. It expects a `package.json` to sit next to `index.js`.

Next, we create the `index.js` file:

```javascript
const http = require('http');
const port = 8080;

// Respond to every request with a plain-text greeting.
const server = http.createServer((req, res) => {
  res.statusCode = 200;
  res.setHeader('Content-Type', 'text/plain');
  res.end('Hello, World!\n');
});

server.listen(port, () => {
  console.log(`Server running at port ${port}`);
});
```

This JavaScript code creates a simple HTTP server that responds with “Hello, World!”.

Now, build the Docker image:

```bash
docker build -t hello-world-app .
```

This command builds a Docker image tagged as `hello-world-app`.

After building the image, we push it to a container registry (e.g., Docker Hub) so Kubernetes can access it. For this example, we assume the image is pushed to a public registry:

```bash
# Replace <your-registry> with your registry or Docker Hub account.
docker tag hello-world-app <your-registry>/hello-world-app
docker push <your-registry>/hello-world-app
```

Next, describe the Deployment and the Service in a manifest file, `deployment.yaml`:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: hello-world-deployment   # illustrative name
spec:
  replicas: 2
  selector:
    matchLabels:
      app: hello-world
  template:
    metadata:
      labels:
        app: hello-world
    spec:
      containers:
        - name: hello-world-container
          image: "<your-registry>/hello-world-app"   # placeholder: the image pushed above
          ports:
            - containerPort: 8080
---
apiVersion: v1
kind: Service
metadata:
  name: hello-world-service
spec:
  selector:
    app: hello-world
  ports:
    - protocol: TCP
      port: 80
      targetPort: 8080
  type: LoadBalancer
```

This YAML file defines:

- Deployment: Creates two replicas of the `hello-world-app` container.

- Service: Exposes the application on port 80, using a LoadBalancer to provide external access. The `selector` ensures the service targets the Pods created by the deployment.

Apply the configuration:

```bash
kubectl apply -f deployment.yaml
```

Once the deployment and service are created, Kubernetes will deploy the application. You can then access the application through the external IP address of the service, which you can obtain using:

```bash
kubectl get service hello-world-service
```

This command will show the external IP address or hostname assigned to your service, which you can use in a web browser to see “Hello, World!”.

This simple example demonstrates the core concepts of deploying a containerized application using Kubernetes, showcasing the power of orchestration and automation. This setup allows for scaling the application by simply modifying the `replicas` field in the deployment YAML file.

Scaling and Resource Management

Kubernetes excels at managing application resources and scaling deployments to meet fluctuating demands. This section details how Kubernetes allocates resources, scales applications horizontally, and the advantages this provides. Understanding these aspects is crucial for building resilient and efficient applications.

Resource Allocation for Pods

Kubernetes provides robust mechanisms for managing the resources consumed by Pods. This involves specifying the amount of CPU and memory each container within a Pod requires and the limits it can use.

Kubernetes manages resource allocation through:

- Resource Requests: These are the minimum resources a container needs to function. Kubernetes uses requests to schedule Pods onto nodes with sufficient capacity. If a Pod’s request is for 1 CPU and 2GB of memory, Kubernetes will only schedule it on a node that has at least those resources available.

- Resource Limits: These define the maximum resources a container can consume. Limits prevent a single container from monopolizing node resources. For example, with a limit of 2 CPUs and 4GB of memory, a container that tries to use more CPU is throttled, and a container that exceeds its memory limit is terminated (OOM-killed).

- Quality of Service (QoS): Kubernetes assigns a QoS class to each Pod based on its resource requests and limits, and uses that class to decide which Pods to evict first when a node runs short of resources. There are three QoS classes: Guaranteed, Burstable, and BestEffort. A Pod is Guaranteed when every container sets CPU and memory requests equal to its limits. A Pod is Burstable when at least one container sets a request or limit but the Pod does not meet the Guaranteed criteria, for example when requests are lower than limits. BestEffort Pods set no resource requests or limits and are the first candidates for eviction.

Resource requests and limits are defined in the Pod specification file, typically in YAML format. For example:

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: my-pod
spec:
  containers:
    - name: my-container
      image: nginx:latest
      resources:
        requests:
          cpu: "1"
          memory: "2Gi"
        limits:
          cpu: "2"
          memory: "4Gi"
```

In this example, the container `my-container` requests 1 CPU and 2Gi of memory and is limited to 2 CPUs and 4Gi of memory.
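For contrast, the sketch below shows a Pod that should be classified as Guaranteed, because its requests equal its limits for both CPU and memory. A Pod whose requests are lower than its limits, like the one above, falls into the Burstable class instead. The Pod name and the resource values are hypothetical, chosen only for illustration.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: my-guaranteed-pod        # hypothetical name
spec:
  containers:
    - name: my-container
      image: nginx:latest
      resources:
        requests:
          cpu: "500m"            # 500 millicores, i.e. half a CPU
          memory: "256Mi"
        limits:
          cpu: "500m"            # equal to the request
          memory: "256Mi"        # equal to the request
```

Once the Pod is running, `kubectl get pod my-guaranteed-pod -o jsonpath='{.status.qosClass}'` reports the class Kubernetes assigned.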

Horizontal Scaling of Applications

Horizontal scaling involves increasing the number of Pods running a particular application. Kubernetes makes this straightforward using Deployments and ReplicaSets.

Deployments and ReplicaSets enable horizontal scaling through:

- ReplicaSets: These ensure a specified number of Pod replicas are running at all times. If a Pod fails, the ReplicaSet automatically creates a new one to maintain the desired number of replicas.

- Deployments: These manage ReplicaSets and provide declarative updates to Pods and ReplicaSets. Deployments support rolling updates, which replace Pods gradually so that an update can proceed with little or no downtime.

- Horizontal Pod Autoscaler (HPA): This automatically scales the number of Pods in a Deployment based on observed CPU utilization or other metrics. The HPA monitors the average CPU utilization of the Pods and increases or decreases the number of replicas accordingly.

To scale an application, you can:

- Manually Scale: Change the `replicas` field in the Deployment specification and re-apply it, or use `kubectl scale`. For example, to scale a Deployment named “my-app” to 5 replicas: `kubectl scale deployment my-app --replicas=5`.

- Use HPA: Configure an HPA to automatically scale based on resource utilization. For example, to create an HPA that targets a Deployment named “my-app” and scales between 1 and 10 replicas based on CPU utilization: `kubectl autoscale deployment my-app --cpu-percent=50 --min=1 --max=10`.
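The same autoscaling policy can also be written declaratively, which makes it easier to keep under version control. The manifest below is a minimal sketch of an `autoscaling/v2` HorizontalPodAutoscaler equivalent to the command above. It assumes a Deployment named `my-app` exists, that its containers declare CPU requests (utilization is measured relative to them), and that a metrics source such as metrics-server is installed in the cluster.

```yaml
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: my-app
spec:
  scaleTargetRef:              # the workload whose replica count is adjusted
    apiVersion: apps/v1
    kind: Deployment
    name: my-app
  minReplicas: 1
  maxReplicas: 10
  metrics:
    - type: Resource
      resource:
        name: cpu
        target:
          type: Utilization
          averageUtilization: 50   # target 50% of the requested CPU, averaged across Pods
```

After applying it with `kubectl apply -f`, `kubectl get hpa my-app` shows the current and target utilization alongside the replica count.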

Horizontal scaling is crucial for handling increased traffic and ensuring application availability. For example, an e-commerce website might scale its application during a holiday sale to handle the surge in user requests.

Benefits of Scaling with Kubernetes

Kubernetes offers significant advantages when scaling applications. The following table summarizes the key benefits:

| Benefit | Description | Impact | Example |
|---|---|---|---|
| High Availability | Kubernetes automatically restarts failed Pods and schedules new Pods on healthy nodes. | Reduces downtime and helps keep the application available. | If a Pod crashes, Kubernetes replaces it to maintain the desired number of replicas. |
| Improved Performance | Horizontal scaling distributes the workload across multiple Pods, reducing the load on individual instances. | Enhances application responsiveness and throughput. | During peak traffic, a web application can scale out to handle increased requests without slowing down. |
| Efficient Resource Utilization | Kubernetes schedules Pods based on resource requests and limits, optimizing node resource usage. | Maximizes the utilization of infrastructure resources, reducing costs. | By packing Pods efficiently, Kubernetes minimizes the number of nodes required to run an application. |
| Simplified Management | Kubernetes automates scaling and resource management, simplifying operations and reducing manual intervention. | Allows developers to focus on application development rather than infrastructure management. | Once an HPA is configured, the system adjusts the number of Pods based on demand without manual intervention. |

Configuration Management with Kubernetes

Managing application configurations effectively is crucial for the reliability, portability, and maintainability of applications deployed in Kubernetes. Kubernetes provides two primary resources for handling configuration data: ConfigMaps and Secrets. These resources allow you to decouple configuration from application code, making it easier to update configurations without rebuilding container images.

ConfigMaps and Secrets: Overview

ConfigMaps are used to store non-sensitive configuration data, such as environment variables, configuration files, and command-line arguments. They are designed to be easily accessible and modifiable. Secrets, on the other hand, are used to store sensitive information, such as passwords, API keys, and certificates. Kubernetes keeps Secrets separate from Pod definitions and lets you restrict access to them and encrypt them at rest. Pods can consume both ConfigMaps and Secrets from their own namespace.

Storing and Accessing Sensitive Information Securely in Kubernetes

Secrets are designed to hold sensitive information within a Kubernetes cluster, but they are only as secure as the cluster is configured to make them. Values in a Secret manifest are Base64-encoded, which is an encoding rather than encryption, and by default Secrets are stored unencrypted in the cluster’s data store (etcd). Kubernetes offers several ways to manage and protect Secrets:

- Base64 Encoding: Values under the `data` field of a Secret are Base64-encoded. This allows binary data to be stored but provides no confidentiality, because anyone who can read the Secret can decode it.

- Encryption at Rest: Kubernetes supports encryption of Secrets at rest using a key management service (KMS). This ensures that Secrets are encrypted in the underlying storage, adding an extra layer of security. You can configure KMS providers such as Google Cloud KMS, AWS KMS, or HashiCorp Vault.

- Access Control: Kubernetes uses Role-Based Access Control (RBAC) to manage access to Secrets. You can define roles and role bindings to control which users, groups, and service accounts (and therefore the Pods that run under them) can access specific Secrets. This helps prevent unauthorized access to sensitive information.

- Secrets as Files or Environment Variables: Secrets can be mounted as files within a Pod or exposed as environment variables. When mounted as files, the file permissions can be controlled to restrict access. When exposed as environment variables, the data is available to the application running inside the Pod.
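The access-control point above can be sketched as an RBAC Role and RoleBinding. This is a minimal illustration rather than a recommended policy: it grants read access to a single Secret named `database-credentials` (the name used in the example that follows) to one service account. The Role name, the `default` namespace, and the `database-app` service account are hypothetical.

```yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: db-credentials-reader        # hypothetical Role name
  namespace: default                 # assumed namespace
rules:
  - apiGroups: [""]                  # "" is the core API group, where Secrets live
    resources: ["secrets"]
    resourceNames: ["database-credentials"]   # restrict the rule to this one Secret
    verbs: ["get"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: db-credentials-reader-binding
  namespace: default
subjects:
  - kind: ServiceAccount
    name: database-app               # hypothetical service account
    namespace: default
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: db-credentials-reader
```

Listing `resourceNames` keeps the grant narrow: the service account can read this Secret but cannot list or read others in the namespace.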

For example, to store a database password securely:

1. Create a Secret

```yaml
apiVersion: v1
kind: Secret
metadata:
  name: database-credentials
type: Opaque  # or kubernetes.io/tls for TLS certificates
data:
  password: <base64-encoded-password>
```

2. Use the Secret in a Pod

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: database-pod
spec:
  containers:
    - name: database-container
      image: mysql:latest
      env:
        - name: MYSQL_ROOT_PASSWORD
          valueFrom:
            secretKeyRef:
              name: database-credentials
              key: password
```

This example shows how to use `secretKeyRef` to inject the password from the `database-credentials` Secret into the `MYSQL_ROOT_PASSWORD` environment variable within the container.
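Secrets can also be mounted as files instead of environment variables, as noted earlier. The sketch below mounts the same `database-credentials` Secret as a read-only volume. The Pod name, the `busybox` image, and the mount path are assumptions made for illustration.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: database-client-pod          # hypothetical name
spec:
  containers:
    - name: client
      image: busybox:1.36
      # List the mounted files, then keep the container alive for inspection.
      command: ["sh", "-c", "ls -l /etc/db-credentials && sleep 3600"]
      volumeMounts:
        - name: db-credentials
          mountPath: /etc/db-credentials   # assumed mount path
          readOnly: true
  volumes:
    - name: db-credentials
      secret:
        secretName: database-credentials
        defaultMode: 0400            # files readable only by their owner
```

Each key in the Secret becomes a file under the mount path, so the password is available at `/etc/db-credentials/password` with the decoded value as its content.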

Creating and Applying a ConfigMap for an Application: Steps

Creating and applying a ConfigMap involves a series of steps to ensure the application can access the necessary configuration data.

- Define Configuration Data: Prepare the configuration data you want to store in the ConfigMap. This can be in various formats, such as key-value pairs, configuration files, or environment variables.

- Create a ConfigMap Definition: Create a YAML file that defines the ConfigMap. This file specifies the name of the ConfigMap and the data it will contain.

- Apply the ConfigMap: Use the `kubectl apply` command to create or update the ConfigMap in your Kubernetes cluster.

- Use the ConfigMap in Pods: Configure your Pods to access the data stored in the ConfigMap. This can be done by mounting the ConfigMap as files or exposing the data as environment variables.

- Testing and Validation: Verify that the application can correctly access and utilize the configuration data from the ConfigMap.

For example, to create a ConfigMap for an application’s logging configuration:

1. Create a ConfigMap definition file (`logging-configmap.yaml`)

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: logging-config
data:
  log_level: "INFO"
  log_format: "json"
  max_log_size: "10MB"
```

2. Apply the ConfigMap using `kubectl`

```bash
kubectl apply -f logging-configmap.yaml
```

3. Use the ConfigMap in a Pod (example using environment variables)

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: my-app-pod
spec:
  containers:
    - name: my-app-container
      image: my-app-image:latest
      env:
        - name: LOG_LEVEL
          valueFrom:
            configMapKeyRef:
              name: logging-config
              key: log_level
        - name: LOG_FORMAT
          valueFrom:
            configMapKeyRef:
              name: logging-config
              key: log_format
```

In this example, the `my-app-container` will have access to the `log_level` and `log_format` values from the `logging-config` ConfigMap as environment variables.

The application running inside the container can then use these environment variables to configure its logging behavior.
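The other access method mentioned in the steps above, mounting the ConfigMap as files, can be sketched as follows. The Pod name and mount path are hypothetical. The `logging-config` ConfigMap and the `my-app-image:latest` image are the ones used in the example above.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: my-app-pod-files             # hypothetical name
spec:
  containers:
    - name: my-app-container
      image: my-app-image:latest
      volumeMounts:
        - name: logging-config
          mountPath: /etc/my-app/logging   # assumed path
          readOnly: true
  volumes:
    - name: logging-config
      configMap:
        name: logging-config         # each key becomes a file in the mount path
```

With this layout the application would read `/etc/my-app/logging/log_level`, `/etc/my-app/logging/log_format`, and `/etc/my-app/logging/max_log_size`. Mounted files are eventually refreshed when the ConfigMap changes, whereas environment variables are fixed when the container starts.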

Kubernetes for Different Use Cases

Kubernetes is versatile enough to run a wide array of containerized workloads, from simple web applications to complex, distributed systems. This section explores how Kubernetes adapts to different application types and operational needs, providing insights into its broad applicability.

Kubernetes for Microservices Architectures

Kubernetes is exceptionally well-suited for orchestrating microservices architectures. Microservices, characterized by their small, independent, and deployable units, benefit greatly from Kubernetes’ ability to manage and scale these services individually.

The benefits of using Kubernetes for microservices include:

- Simplified Deployment and Updates: Kubernetes automates the deployment and updates of microservices. Rolling updates are built into Deployments, and patterns such as blue-green deployments can be implemented on top of Deployments and Services, which keeps downtime during releases to a minimum. This makes Kubernetes a natural target for continuous integration and continuous delivery (CI/CD) pipelines.

- Scalability and Resource Management: Kubernetes can automatically scale microservices based on demand. The Horizontal Pod Autoscaler (HPA) adjusts the number of pods (the smallest deployable units in Kubernetes) based on metrics like CPU utilization or custom metrics, ensuring optimal resource utilization.

- Service Discovery and Load Balancing: Kubernetes provides built-in service discovery and load balancing. Services are exposed through a stable IP address and DNS name, allowing microservices to communicate with each other without hardcoding endpoint addresses. Kubernetes’ load balancing distributes traffic across multiple instances of a service.

- Health Checks and Fault Tolerance: Kubernetes monitors the health of microservices and automatically restarts unhealthy pods. This self-healing capability ensures high availability and resilience. Kubernetes’ readiness and liveness probes help to ensure that traffic is only routed to healthy instances.

- Isolation and Security: Kubernetes provides namespaces for isolating microservices, preventing conflicts and improving security. Network policies can be defined to control communication between services. Role-Based Access Control (RBAC) allows for fine-grained control over who can access and manage Kubernetes resources.
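Two of the points above, rolling updates and health checks, can be sketched together in a single Deployment. The service name, image, and `/healthz` endpoint below are hypothetical. The manifest assumes the application serves HTTP on port 8080 and answers health requests on that path.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: orders-service               # hypothetical microservice name
spec:
  replicas: 3
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxUnavailable: 0              # never drop below the desired replica count
      maxSurge: 1                    # add at most one extra Pod during a rollout
  selector:
    matchLabels:
      app: orders-service
  template:
    metadata:
      labels:
        app: orders-service
    spec:
      containers:
        - name: orders
          image: example.com/orders-service:1.0.0   # hypothetical image
          ports:
            - containerPort: 8080
          readinessProbe:            # traffic is routed only after this succeeds
            httpGet:
              path: /healthz         # assumed health endpoint
              port: 8080
            initialDelaySeconds: 5
            periodSeconds: 10
          livenessProbe:             # the container is restarted if this keeps failing
            httpGet:
              path: /healthz
              port: 8080
            initialDelaySeconds: 15
            periodSeconds: 20
```

Setting `maxUnavailable: 0` together with a readiness probe means an old Pod is removed only after its replacement reports ready, which is what lets a rollout proceed without dropping capacity.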

Support for Stateless and Stateful Applications

Kubernetes effectively manages both stateless and stateful applications. The approach differs based on the requirements of the application, but Kubernetes provides the necessary tools and features to handle both types of workloads.

Stateless applications, such as web servers or API gateways, are relatively straightforward to manage in Kubernetes.

- Stateless Application Management: Stateless applications don’t store any persistent data. Kubernetes deployments and services can easily handle these applications. Scaling stateless applications is generally simpler, as new instances can be created without impacting data consistency.

- Deployment Strategies for Stateless Applications: Rolling updates are a common deployment strategy for stateless applications. Kubernetes gradually replaces old instances with new ones, minimizing downtime. Blue-green deployments can also be used, where a new version of the application is deployed alongside the old one, and traffic is switched over when the new version is ready.

Stateful applications, such as databases or message queues, require special considerations.

- StatefulSet for Stateful Applications: Kubernetes provides StatefulSets to manage stateful applications. StatefulSets provide unique network identifiers and persistent storage for each pod, ensuring data consistency and order.

- Persistent Volumes and Claims: Persistent Volumes (PVs) and Persistent Volume Claims (PVCs) are used to manage persistent storage for stateful applications. PVCs request storage from PVs, and Kubernetes manages the allocation and lifecycle of the storage.

- Examples of Stateful Applications: Databases like MySQL and PostgreSQL, message queues like Kafka and RabbitMQ, and distributed databases like Cassandra are examples of stateful applications that can be managed with Kubernetes using StatefulSets and persistent storage.
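As a rough illustration of the StatefulSet and persistent-storage points above, the sketch below runs PostgreSQL with one PersistentVolumeClaim per Pod. The names, the storage size, and the `postgres:16` image tag are assumptions. It reuses the `database-credentials` Secret from the configuration section and relies on the cluster having a default StorageClass. It starts independent database instances only, since replication between them is not configured here.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: db                           # headless Service that gives each Pod a stable DNS name
spec:
  clusterIP: None
  selector:
    app: db
  ports:
    - port: 5432
---
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: db
spec:
  serviceName: db                    # must match the headless Service above
  replicas: 3                        # Pods are named db-0, db-1, db-2
  selector:
    matchLabels:
      app: db
  template:
    metadata:
      labels:
        app: db
    spec:
      containers:
        - name: postgres
          image: postgres:16
          env:
            - name: POSTGRES_PASSWORD
              valueFrom:
                secretKeyRef:
                  name: database-credentials
                  key: password
            - name: PGDATA             # keep data in a subdirectory of the volume
              value: /var/lib/postgresql/data/pgdata
          ports:
            - containerPort: 5432
          volumeMounts:
            - name: data
              mountPath: /var/lib/postgresql/data
  volumeClaimTemplates:              # one PVC is created per Pod: data-db-0, data-db-1, ...
    - metadata:
        name: data
      spec:
        accessModes: ["ReadWriteOnce"]
        resources:
          requests:
            storage: 10Gi            # assumed size
```

If `db-1` is rescheduled to another node, it comes back with the same name and is reattached to the same claim, which is the property that distinguishes a StatefulSet from a Deployment.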

Other Possible Uses for Kubernetes Beyond Microservices

Kubernetes’ flexibility extends to various other use cases, demonstrating its adaptability and broad applicability.

- Batch Processing: Kubernetes can be used to run batch jobs, such as data processing pipelines or machine learning training tasks. The built-in Job resource runs a task to completion, CronJobs run Jobs on a schedule, and Kubernetes handles resource allocation and execution.

- CI/CD Pipelines: Kubernetes is an excellent platform for running CI/CD pipelines. Developers can define pipelines as code, leveraging Kubernetes’ scalability and automation capabilities to build, test, and deploy applications.

- IoT Edge Computing: Kubernetes can be deployed on edge devices to manage and orchestrate applications in a distributed environment. This allows for real-time data processing and analysis closer to the data source, reducing latency and bandwidth usage.

- Machine Learning (ML) Workloads: Kubernetes can be used to deploy and manage ML models, including training and inference. Tools like Kubeflow provide a comprehensive platform for managing ML workflows within Kubernetes.

- Serverless Computing: Kubernetes can serve as the underlying infrastructure for serverless platforms, providing a scalable and efficient environment for running functions as a service (FaaS).

- Hybrid and Multi-Cloud Deployments: Kubernetes allows organizations to manage applications across multiple cloud providers and on-premises infrastructure. This provides flexibility, reduces vendor lock-in, and enables organizations to choose the best infrastructure for their needs.
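To make the batch-processing case concrete, here is a minimal CronJob sketch. The job name, schedule, and command are hypothetical placeholders, and a real workload would substitute its own image and arguments.

```yaml
apiVersion: batch/v1
kind: CronJob
metadata:
  name: nightly-report               # hypothetical name
spec:
  schedule: "0 2 * * *"              # every day at 02:00, in standard cron syntax
  concurrencyPolicy: Forbid          # skip a run if the previous one is still active
  jobTemplate:
    spec:
      backoffLimit: 2                # retry a failed Job at most twice
      template:
        spec:
          restartPolicy: OnFailure
          containers:
            - name: report
              image: busybox:1.36
              command: ["sh", "-c", "echo generating report; date"]
```

Each scheduled run creates a Job, which in turn creates a Pod. `kubectl get jobs` lists the resulting Jobs.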

Monitoring and Logging in Kubernetes

Monitoring and logging are critical for the operational health and performance of any Kubernetes cluster. They provide valuable insights into the state of applications and infrastructure, enabling proactive identification and resolution of issues. Effective monitoring and logging are essential for debugging, performance optimization, capacity planning, and security auditing. They ensure that Kubernetes deployments are reliable, scalable, and secure.

Importance of Monitoring and Logging

Monitoring and logging in Kubernetes are indispensable for maintaining a healthy and efficient cluster. They allow for real-time visibility into various aspects of the system, facilitating informed decision-making and proactive problem-solving. Without these capabilities, it becomes extremely difficult to diagnose issues, optimize performance, and ensure the overall stability of the environment.

- Performance Monitoring: Monitoring helps track resource utilization (CPU, memory, network I/O), application response times, and other key performance indicators (KPIs). This information is vital for identifying bottlenecks and optimizing application performance. For example, if a pod consistently experiences high CPU usage, it might indicate a need for scaling or resource adjustments.

- Debugging and Troubleshooting: Logs provide detailed information about events and errors occurring within the cluster and applications. They are essential for diagnosing problems, identifying the root cause of failures, and understanding application behavior. For instance, error logs can quickly pinpoint the source of an application crash.

- Capacity Planning: Monitoring data helps in understanding resource consumption trends over time. This information is crucial for capacity planning, allowing administrators to anticipate future resource needs and proactively scale the cluster to avoid performance degradation.

- Security Auditing: Logs can be used for security auditing, allowing administrators to track user activity, identify suspicious behavior, and detect potential security breaches. Monitoring tools can also detect anomalies that might indicate a security threat.

- Alerting: Monitoring systems can be configured to generate alerts based on predefined thresholds or anomalies. This enables timely notification of critical issues, allowing for rapid response and minimizing downtime.

Popular Monitoring and Logging Tools

Several powerful tools are available for monitoring and logging in Kubernetes, each with its strengths and weaknesses. These tools often integrate seamlessly with Kubernetes and provide comprehensive visibility into the cluster’s operations. The choice of tools often depends on the specific needs and preferences of the user.

- Prometheus: Prometheus is a popular open-source monitoring and alerting toolkit. It excels at collecting and storing time-series data. It uses a pull-based model to scrape metrics from configured targets. Prometheus is often used for monitoring resource utilization, application performance, and custom metrics. It is highly configurable and integrates well with Kubernetes. Prometheus provides a powerful query language (PromQL) for analyzing metrics and generating alerts. It also offers a web interface for visualizing data.

- Grafana: Grafana is a data visualization and monitoring platform that integrates with various data sources, including Prometheus. It allows users to create interactive dashboards that display metrics in a clear and intuitive way. Grafana supports a wide range of visualization options, including graphs, tables, and gauges. Dashboards can be customized to display specific metrics and provide valuable insights into the performance of the cluster and applications.

- ELK Stack (Elasticsearch, Logstash, Kibana): The ELK stack (now often referred to as the Elastic Stack) is a powerful logging and analytics solution. Elasticsearch is a distributed, RESTful search and analytics engine. Logstash is a data processing pipeline that ingests data from various sources, transforms it, and sends it to Elasticsearch. Kibana is a visualization and dashboarding tool that allows users to explore and analyze log data stored in Elasticsearch. The ELK stack is commonly used for centralized log management, enabling users to collect, analyze, and visualize logs from all parts of the Kubernetes cluster. It supports searching, filtering, and aggregating log data to identify patterns and troubleshoot issues.

- Fluentd: Fluentd is a popular open-source data collector that unifies the collection and consumption of logs. It supports a wide variety of input and output plugins, allowing it to collect logs from various sources and forward them to different destinations, such as Elasticsearch or cloud-based logging services. Fluentd is designed for high performance and scalability.

- Promtail: Promtail is a log-shipping agent designed to work with Loki, a horizontally scalable, highly available log aggregation system. Promtail typically runs as an agent on each Kubernetes node, where it collects container logs and forwards them to Loki for storage and analysis.

Setting Up Basic Monitoring

Setting up basic monitoring for a Kubernetes deployment typically involves deploying monitoring agents, configuring data collection, and setting up dashboards or alerts. This provides a foundational level of visibility into the cluster’s health and performance. The exact steps will vary depending on the chosen tools.

- Deploying Prometheus: Deploying Prometheus involves creating a Deployment and a Service. The Deployment defines the Prometheus server, and the Service makes the Prometheus server accessible within the cluster. Prometheus is configured to scrape metrics from various targets, such as the kubelet, kube-state-metrics, and application Pods. A typical setup uses a YAML file that defines the Prometheus Deployment and Service, specifying the container image, resource requests, and the Service port.

- Deploying kube-state-metrics: kube-state-metrics is a service that generates metrics about the state of Kubernetes objects, such as Deployments, Pods, and Services. It runs as its own Deployment and Service, and the insight it provides into the health and status of Kubernetes resources is essential for monitoring and troubleshooting.

- Deploying Grafana: Deploying Grafana involves creating a Deployment and a Service. The Deployment defines the Grafana server, and the Service makes the Grafana server accessible within the cluster. Grafana is then configured to connect to Prometheus as a data source. Once Grafana is deployed and connected to Prometheus, dashboards can be created to visualize the collected metrics.

- Configuring Alerts: Alerting rules are defined in Prometheus based on predefined thresholds or anomalies. When a rule’s condition holds, Prometheus fires an alert, which Alertmanager (a companion component of Prometheus) routes to notification channels such as email, Slack, or PagerDuty. Alerts are essential for proactively identifying and addressing issues in the cluster. For example, an alert can be configured to notify administrators if the CPU usage of a Pod exceeds a certain percentage.
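A CPU alert of the kind described above might look like the following Prometheus rule file. This is a sketch under stated assumptions: Prometheus scrapes the kubelet’s cAdvisor metrics (which expose `container_cpu_usage_seconds_total`), and the threshold is expressed in CPU cores rather than as a percentage. The group name, alert name, threshold, and durations are illustrative values.

```yaml
groups:
  - name: pod-cpu                    # hypothetical rule group
    rules:
      - alert: PodHighCpuUsage       # hypothetical alert name
        # Per-Pod CPU usage in cores, averaged over the last 5 minutes.
        expr: |
          sum by (namespace, pod) (
            rate(container_cpu_usage_seconds_total{container!=""}[5m])
          ) > 0.8
        for: 10m                     # the condition must hold for 10 minutes before firing
        labels:
          severity: warning
        annotations:
          summary: "Pod {{ $labels.namespace }}/{{ $labels.pod }} CPU usage is high"
          description: "CPU usage has stayed above 0.8 cores for 10 minutes."
```

To alert on a percentage of the Pod’s CPU limit instead, the expression would need to be divided by a limit metric, for example one exported by kube-state-metrics.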

Final Conclusion

In conclusion, Kubernetes stands as a cornerstone of modern application deployment, offering unparalleled control, scalability, and efficiency. From its intricate architecture to its diverse use cases, Kubernetes empowers developers to build, deploy, and manage applications with ease. By embracing the principles of containerization and orchestration, you can unlock the full potential of your applications and drive innovation in the ever-evolving world of software development.

Questions and Answers

What is the primary benefit of using Kubernetes?

The primary benefit is automating the deployment, scaling, and management of containerized applications, improving efficiency and resource utilization.

Is Kubernetes only for large-scale applications?

No, Kubernetes is adaptable and beneficial for projects of all sizes, from small personal projects to large enterprise deployments. It provides scalability and management features that are advantageous regardless of application size.

What is a Pod in Kubernetes?

A Pod is the smallest deployable unit in Kubernetes, representing one or more containers that share resources and network configurations.

What is the difference between Kubernetes and Docker?

Docker is a containerization platform, while Kubernetes is a container orchestration platform. Docker builds and runs containers, and Kubernetes manages and scales them.

How does Kubernetes handle application updates?

Kubernetes offers various deployment strategies, such as rolling updates and blue/green deployments, to ensure minimal downtime during application updates.