The convergence of serverless computing and containerization has produced a new deployment model: the serverless container platform. It lets developers build, deploy, and run containerized applications without provisioning or managing servers. The platform handles scaling and orchestration automatically and typically bills only for the resources actually consumed, which can improve both cost efficiency and delivery speed.

This technology streamlines the development lifecycle by abstracting away infrastructure management so that developers can focus on writing code. Organizations that adopt these platforms can achieve higher resource utilization, lower operational overhead, and faster time-to-market. The sections below examine the core components, benefits, and practical applications of these platforms.

Introduction to Serverless Container Platforms

Serverless container platforms represent a significant evolution in cloud computing, merging the benefits of serverless architecture with the flexibility of containerization. This convergence aims to streamline application deployment and management, offering developers a more efficient and scalable environment. By abstracting away infrastructure concerns, these platforms enable developers to focus primarily on code, accelerating the development lifecycle.

Core Concept of Serverless Container Platforms

The core concept revolves around the automated management of containerized applications without requiring developers to provision or manage the underlying infrastructure. This includes servers, operating systems, and scaling mechanisms. A serverless container platform automatically handles tasks such as container orchestration, resource allocation, and scaling based on demand. This contrasts with traditional container platforms where developers must manage the infrastructure and manually configure scaling rules.

The platform essentially acts as an intermediary: it receives a container image (or, on some platforms, source code that it builds into an image), runs it as containers, and scales the number of containers up or down based on incoming traffic or other predefined metrics.

History of Serverless Computing and Containerization Convergence

Serverless computing emerged as a paradigm shift in cloud computing, offering developers a way to execute code without managing servers. Early serverless offerings, such as AWS Lambda, focused on event-driven function execution. Containerization, with technologies like Docker, provided a way to package applications with their dependencies, ensuring consistent execution across different environments. The two converged as teams wanted to run more complex applications than function platforms comfortably supported, such as services with custom runtimes, large dependency sets, or long-running request handling, while keeping the serverless operating model.

This convergence leverages the portability and isolation of containers while providing the automated scaling and management benefits of serverless.

Benefits of Using a Serverless Container Platform, Focusing on Developer Experience

Serverless container platforms significantly enhance the developer experience by simplifying application deployment and management. The focus shifts from infrastructure management to application development.

- Reduced Operational Overhead: Developers are freed from the burden of managing servers, operating systems, and container orchestration tools. The platform handles these tasks automatically, reducing the time and effort required for deployment and maintenance.

- Automated Scaling: Serverless container platforms automatically scale applications based on demand, improving resource utilization and cost efficiency. Instead of writing detailed scaling rules or watching resource graphs, developers typically set only a few parameters, such as minimum and maximum instance counts. This is especially valuable during traffic peaks, when capacity must grow faster than a human operator could react.

- Faster Deployment Cycles: With infrastructure management automated, developers can deploy applications more quickly. This accelerates the development lifecycle, enabling faster iterations and quicker time to market.

- Improved Resource Utilization: Serverless platforms typically employ pay-per-use pricing models, where developers are charged only for the resources their applications consume. This leads to more efficient resource utilization and reduced operational costs compared to traditional server-based deployments.

- Enhanced Focus on Code: By abstracting away infrastructure complexities, developers can concentrate on writing and optimizing code. This leads to increased productivity and allows developers to focus on the core functionality of their applications.

Key Components and Architecture

Serverless container platforms represent a paradigm shift in application deployment, offering developers a way to run containerized applications without managing the underlying infrastructure. These platforms abstract away the complexities of server provisioning, scaling, and maintenance, allowing developers to focus on writing code. Understanding the key components and architecture is crucial for grasping the operational mechanics of these platforms.

Essential Components

A serverless container platform comprises several interconnected components that work together to provide a seamless and efficient container execution environment. These components, when combined, enable the platform to dynamically manage container lifecycles, handle traffic, and ensure high availability.

- Container Runtime: This component is responsible for executing the container images. It is the core engine that pulls the container images, creates the container instances, and manages their lifecycle (start, stop, etc.). Popular container runtimes include containerd and runc.

- Orchestration Engine: The orchestration engine is the brain of the platform, responsible for deploying, scaling, and monitoring containers. It receives requests from users, schedules container deployments, and tracks the health of running containers. Kubernetes, or a system derived from it, is commonly used as the orchestration engine.

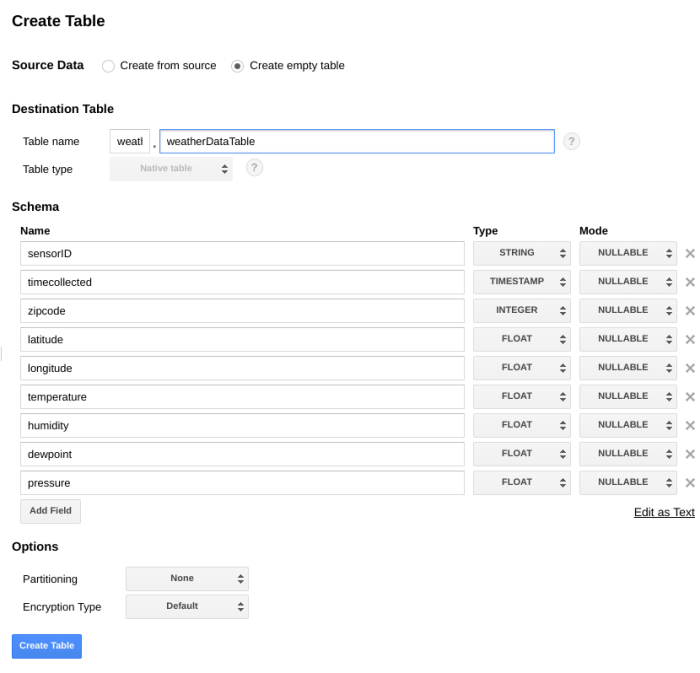

- Image Registry: A container image registry stores and manages container images. It acts as a central repository for container images, allowing users to store, retrieve, and version their images. Popular image registries include Docker Hub, Amazon Elastic Container Registry (ECR), and Google Artifact Registry (the successor to Google Container Registry).

- Event Triggering System: This system triggers the execution of container instances based on various events, such as HTTP requests, database updates, or scheduled events. It acts as the entry point for incoming requests and routes them to the appropriate container instances.

- Scaling Engine: The scaling engine automatically adjusts the number of container instances based on demand. It monitors resource utilization (CPU, memory, etc.) and scales the number of instances up or down to meet the current workload. This engine leverages metrics from the container runtime and orchestration engine.

- Load Balancer: A load balancer distributes incoming traffic across multiple container instances, ensuring high availability and performance. It monitors the health of the container instances and routes traffic only to healthy instances.

- Monitoring and Logging System: This system collects and analyzes metrics and logs from the container instances. It provides insights into the performance and health of the platform and enables troubleshooting and debugging.

Architecture and Container Orchestration

The architecture of a serverless container platform is designed to be highly scalable, resilient, and efficient. It relies on a combination of container orchestration, event-driven processing, and automatic scaling to manage containerized applications. The core of this architecture involves a sophisticated orchestration engine that dynamically manages the lifecycle of containers.

The orchestration engine, often Kubernetes or a system exposing a Kubernetes-style API, operates on a declarative model. Developers define the desired state of their application (e.g., the number of container instances and their resource allocation), and the orchestration engine continuously works to achieve that state. This declarative approach simplifies deployment and management and enables automated scaling and self-healing.
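The declarative model can be illustrated with a toy reconciliation loop. This is a minimal sketch, not Kubernetes' actual controller code: `DesiredState`, `Cluster`, `reconcile`, and the in-memory instance list are hypothetical names chosen for illustration. Each pass removes unhealthy instances and then starts or stops instances until the observed count matches the desired count.

```python
from dataclasses import dataclass, field
from itertools import count


@dataclass
class Instance:
    name: str
    healthy: bool = True


@dataclass
class DesiredState:
    replicas: int  # hypothetical: desired number of running instances


@dataclass
class Cluster:
    instances: list = field(default_factory=list)
    _ids: count = field(default_factory=lambda: count(1), repr=False)

    def start(self) -> Instance:
        inst = Instance(name=f"instance-{next(self._ids)}")
        self.instances.append(inst)
        return inst

    def stop(self, inst: Instance) -> None:
        self.instances.remove(inst)


def reconcile(desired: DesiredState, cluster: Cluster) -> list:
    """One pass of a control loop: drive observed state toward desired state."""
    actions = []
    # Self-healing: drop unhealthy instances so the count check replaces them.
    for inst in [i for i in cluster.instances if not i.healthy]:
        cluster.stop(inst)
        actions.append(f"removed unhealthy {inst.name}")
    diff = desired.replicas - len(cluster.instances)
    if diff > 0:
        for _ in range(diff):
            actions.append(f"started {cluster.start().name}")
    elif diff < 0:
        # Scale in: stop the most recently started instances first.
        for inst in cluster.instances[diff:]:
            cluster.stop(inst)
            actions.append(f"stopped {inst.name}")
    return actions
```

A real controller repeats this comparison continuously (or whenever it is notified of a change) rather than once; the point is that the developer edits only the desired state, and the loop does the rest.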

The platform’s architecture is typically organized around several key layers:

- API Gateway/Ingress Controller: The entry point for all incoming requests. It handles routing, authentication, and other edge functions.

- Event Triggering: Processes incoming requests and triggers the deployment of container instances based on demand.

- Orchestration Layer: Manages the deployment, scaling, and health of container instances using the orchestration engine.

- Container Runtime: Executes the container images, providing the actual runtime environment for the application.

- Storage Layer: Provides persistent storage for container data. This can be object storage, databases, or other storage solutions.

The platform handles container orchestration dynamically. When a request arrives, the event triggering system activates, and the orchestration engine deploys or scales container instances as needed. Orchestration typically follows a control-loop (reconciliation) model: the platform repeatedly compares observed demand and instance health against the desired state and adjusts resources to close the gap. As part of this loop, it continuously monitors the health of container instances and automatically restarts or replaces unhealthy ones.

This self-healing capability ensures high availability and minimizes downtime.

Request Flow Diagram

The following diagram illustrates the flow of a request through a serverless container platform. This diagram clarifies the interaction between the various components and demonstrates the overall processing pipeline.

Diagram Description:

The diagram depicts the flow of an HTTP request through a serverless container platform. The flow begins with a client sending an HTTP request to an API Gateway. The API Gateway acts as the entry point and handles initial routing and security checks. The API Gateway forwards the request to an Event Triggering System. The Event Triggering System determines the appropriate container based on the request.

The request is then passed to the Orchestration Engine (e.g., Kubernetes). The Orchestration Engine schedules and deploys the relevant container instance if one is not already available or scales up existing instances if the demand increases. The request is then routed to a Load Balancer, which distributes the traffic across multiple container instances for the same service. Finally, the Load Balancer directs the request to the Container Runtime where the application code within the container processes the request and returns a response.

The response then travels back through the Load Balancer, Orchestration Engine, Event Triggering System, and API Gateway before being sent back to the client.
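The pipeline described above can be modeled as a chain of plain functions. This is a hypothetical sketch for illustration only: the route table, the token check, `ensure_instances`, and the round-robin `LoadBalancer` are assumptions, not any platform's API. In this sketch the orchestrator only makes sure capacity exists before the request is forwarded; in many real platforms the control plane does this alongside the request path rather than handling each request itself.

```python
# Hypothetical route table used by the event-triggering step.
ROUTES = {"/checkout": "checkout-service", "/search": "search-service"}


class Orchestrator:
    """Keeps a list of running instances per service and can scale up."""

    def __init__(self):
        self.instances = {}  # service name -> list of instance names

    def ensure_instances(self, service, minimum=1):
        running = self.instances.setdefault(service, [])
        while len(running) < minimum:  # start instances, e.g. scale from zero
            running.append(f"{service}-{len(running) + 1}")
        return running


class LoadBalancer:
    """Round-robin selection across a service's running instances."""

    def __init__(self):
        self._next = {}

    def pick(self, service, instances):
        i = self._next.get(service, 0)
        self._next[service] = i + 1
        return instances[i % len(instances)]


def container_runtime(instance, path):
    # Stand-in for application code running inside the container.
    return f"200 OK from {instance} for {path}"


def api_gateway(request, orchestrator, lb):
    # Edge check (hypothetical rule): require an auth token.
    if not request.get("token"):
        return "401 Unauthorized"
    # Event triggering: decide which service should handle the request.
    service = ROUTES.get(request["path"])
    if service is None:
        return "404 Not Found"
    instances = orchestrator.ensure_instances(service)  # orchestration
    instance = lb.pick(service, instances)  # load balancing
    return container_runtime(instance, request["path"])  # container runtime
```

Because the response is simply returned up the call chain, it passes back through each layer in reverse order, mirroring the return path in the description.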

Benefits

Serverless container platforms offer significant advantages over traditional container deployments, primarily in terms of scalability, cost-effectiveness, and operational efficiency. These benefits stem from the platforms’ architecture, which abstracts away infrastructure management, allowing developers to focus on application logic. The following sections detail each of these key advantages.

Automatic Scaling

Serverless container platforms achieve automatic scaling through a combination of event-driven execution and dynamic resource allocation. This means the platform automatically adjusts the resources allocated to a container based on real-time demand.

- Event-Driven Architecture: The core of automatic scaling is often an event-driven architecture. When an event, such as an HTTP request, a message in a queue, or a database update, triggers a container execution, the platform can quickly respond. This is in contrast to traditional container deployments where resources are often pre-allocated, regardless of the actual load.

- Dynamic Resource Allocation: Based on the event, the platform dynamically allocates resources. This might involve starting new container instances, increasing the memory or CPU allocated to existing instances, or scaling down resources when the load decreases. This allocation is typically based on pre-configured scaling rules or, in some cases, machine learning models that predict future demand.

- Horizontal Scaling: Serverless container platforms primarily employ horizontal scaling, adding more container instances to handle increased load. This is generally more efficient and resilient than vertical scaling (increasing the resources of a single instance), which can have limitations.

- Scaling Triggers: Scaling is triggered by various metrics, such as CPU utilization, memory usage, request rates, or custom metrics defined by the application. These triggers ensure that resources are allocated in response to actual demand.

- Example: Consider an e-commerce website using a serverless container platform. During a flash sale, the platform would automatically detect the surge in traffic (e.g., number of incoming requests per second). Based on pre-defined scaling rules, the platform would then automatically launch additional container instances to handle the increased load, ensuring that customers experience minimal latency and the website remains responsive.

After the sale, the platform would automatically scale down the resources to minimize costs.
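One common way to express such a scaling rule is the proportional formula used by the Kubernetes Horizontal Pod Autoscaler: desired = ceil(current × observedMetric / targetMetric), with a small tolerance band to avoid flapping. The sketch below applies that idea to requests per second per instance and clamps the result to minimum and maximum bounds. The function name, the default bounds, and the 0.1 tolerance are assumptions for illustration; individual serverless platforms use their own (often concurrency-based) algorithms.

```python
import math


def desired_instances(current_instances, observed_rps, target_rps_per_instance,
                      min_instances=0, max_instances=100, tolerance=0.1):
    """Proportional scaling rule in the style of the Kubernetes HPA formula."""
    if current_instances == 0:
        # Scaling from zero: size directly from the observed load.
        desired = math.ceil(observed_rps / target_rps_per_instance)
    else:
        # Ratio of observed per-instance load to the target per-instance load.
        ratio = (observed_rps / current_instances) / target_rps_per_instance
        if abs(ratio - 1.0) <= tolerance:
            desired = current_instances  # close enough: avoid flapping
        else:
            desired = math.ceil(current_instances * ratio)
    # Clamp to the configured bounds.
    return max(min_instances, min(max_instances, desired))
```

For the flash-sale scenario with a hypothetical target of 50 requests per second per instance, a jump from 100 to 1,000 requests per second on 2 instances yields 20 instances, and a drop to near-zero traffic afterward scales back down to the configured minimum.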

Cost Comparison

The cost models of serverless container platforms differ significantly from traditional container deployments, offering potential for substantial cost savings, especially for applications with variable workloads. The pricing structure is generally based on consumption rather than pre-provisioned resources.

- Traditional Container Deployments: In traditional deployments, you typically pay for the resources you provision, regardless of their actual utilization. This means you are paying for idle resources during periods of low traffic. The costs include the infrastructure (servers, virtual machines), operating system, and any supporting services.

- Serverless Container Deployments: Serverless platforms adopt a pay-per-use model. You are charged only for the compute time, memory, and other resources your containers consume. This is often measured in milliseconds of execution time, requests served, or data processed.

- Cost Savings: The cost savings are most significant for applications with spiky or unpredictable workloads. Because you only pay for what you use, you avoid paying for idle capacity.

- Cost Calculation Example:

Assume a serverless container platform charges $0.000002 per GB-second of memory used. If a container uses 512MB (0.5 GB) for 100 milliseconds (0.1 seconds) per execution, the cost per execution would be:

$$
\text{Cost per execution} = \$0.000002 \times 0.5 \times 0.1 = \$0.0000001
$$

For 10,000 executions, the cost would be $0.001.

In comparison, a traditional deployment might require a server with a fixed cost, regardless of the number of executions.
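The arithmetic above generalizes into a small helper that also estimates the break-even point against a fixed-price server. The $0.000002 per GB-second rate, 0.5 GB, and 0.1 seconds come from the example; the $30 monthly server cost is a hypothetical figure chosen only for illustration, and real pay-per-use bills usually add per-request and CPU charges that this sketch ignores. `Decimal` is used so that the money arithmetic is exact.

```python
import math
from decimal import Decimal


def cost_per_execution(rate_per_gb_second: Decimal, memory_gb: Decimal,
                       duration_seconds: Decimal) -> Decimal:
    """Pay-per-use cost of one execution, billed on memory x time (GB-seconds)."""
    return rate_per_gb_second * memory_gb * duration_seconds


def break_even_executions(fixed_monthly_cost: Decimal, per_execution: Decimal) -> int:
    """Monthly executions at which pay-per-use spending equals a fixed server cost."""
    return math.ceil(fixed_monthly_cost / per_execution)


# Figures from the example above.
per_exec = cost_per_execution(Decimal("0.000002"), Decimal("0.5"), Decimal("0.1"))
ten_thousand = per_exec * 10_000
# Hypothetical fixed cost of $30/month for an always-on server.
break_even = break_even_executions(Decimal("30"), per_exec)
print(per_exec, ten_thousand, break_even)
```

Under these assumptions, pay-per-use stays cheaper until about 300 million executions per month; a different fixed price or an added per-request fee moves that point.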

- Optimizations: While the pay-per-use model offers significant cost advantages, optimizing container images for size and efficiency, as well as writing efficient code, can further reduce costs. Using appropriate memory limits and CPU allocation settings can also contribute to cost optimization.

Operational Advantages

Serverless container platforms provide several operational advantages that streamline deployment and management, leading to increased developer productivity and reduced operational overhead.

- Simplified Deployment: Serverless platforms often offer simplified deployment workflows. Developers can deploy container images directly to the platform without needing to manage the underlying infrastructure. The platform handles the provisioning of resources, container orchestration, and scaling.

- Reduced Infrastructure Management: The platform abstracts away the complexities of infrastructure management, such as server provisioning, patching, and scaling. This reduces the operational burden on development and operations teams, allowing them to focus on building and deploying applications rather than managing infrastructure.

- Automated Scaling and Load Balancing: As discussed, the platform automatically handles scaling and load balancing, ensuring that applications can handle varying levels of traffic without manual intervention. This eliminates the need for manual scaling and load balancing configurations.

- Monitoring and Logging: Serverless platforms often provide built-in monitoring and logging capabilities, simplifying the process of observing application performance and troubleshooting issues. These platforms typically offer centralized logging, real-time metrics, and alerting.

- Faster Time to Market: The simplified deployment and reduced infrastructure management lead to faster time to market for applications. Developers can deploy and iterate on their applications more quickly, accelerating the development lifecycle.

- Simplified Updates and Rollbacks: Many platforms support features like blue/green deployments and automated rollbacks, which enable seamless updates with minimal downtime and the ability to quickly revert to a previous version if issues arise.
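Blue/green and gradual rollouts are often implemented by splitting traffic between two revisions by weight; a rollback is then just moving the weight back. The sketch below is hypothetical: the revision names, the percentage weights, and hashing the request ID for a stable assignment are illustrative choices, not a specific platform's API.

```python
import hashlib


def pick_revision(request_id: str, weights: dict) -> str:
    """Map a request to a revision using percentage weights (e.g. blue/green)."""
    if sum(weights.values()) != 100:
        raise ValueError("weights must sum to 100")
    # Hash the request ID into a stable bucket in [0, 100) so the same
    # request ID always lands on the same revision for a given split.
    digest = hashlib.sha256(request_id.encode("utf-8")).hexdigest()
    bucket = int(digest, 16) % 100
    cumulative = 0
    for revision, weight in sorted(weights.items()):
        cumulative += weight
        if bucket < cumulative:
            return revision
    raise AssertionError("unreachable: weights sum to 100")
```

A canary release might start at `{"blue": 90, "green": 10}`, move to `{"blue": 0, "green": 100}` once the new revision looks healthy, and roll back by restoring `{"blue": 100, "green": 0}`.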

Comparison with Traditional Container Orchestration

Serverless container platforms and traditional container orchestration systems, such as Kubernetes, represent distinct approaches to managing and deploying containerized applications. Understanding the differences and trade-offs between these approaches is crucial for selecting the optimal solution based on specific application requirements and operational constraints. This section provides a comparative analysis of these two paradigms, focusing on their management characteristics, benefits, and drawbacks.

Comparison with Kubernetes

Kubernetes, a widely adopted open-source platform, provides robust container orchestration capabilities. Serverless container platforms, in contrast, abstract away much of the underlying infrastructure management.

- Management Overhead: Kubernetes requires significant manual configuration and management. This includes cluster setup, node management, resource allocation, and ongoing maintenance. Serverless platforms automate these tasks, reducing operational overhead. For example, a Kubernetes administrator might spend considerable time managing pod deployments, scaling deployments, and monitoring resource utilization. A serverless platform, however, automatically scales container instances based on incoming requests, eliminating the need for manual intervention.

- Scaling: Kubernetes offers flexible scaling options but requires careful configuration of autoscalers and resource requests. Serverless platforms provide automatic horizontal scaling based on demand, typically with minimal configuration. Serverless scaling is reactive, usually responding to incoming traffic within seconds, although scaling up from zero instances can add cold-start latency; Kubernetes often requires proactive configuration and monitoring to achieve similar responsiveness.

- Resource Provisioning: Kubernetes requires users to define resource requests and limits for each container, which influence scheduling and resource allocation. Serverless platforms hide node-level capacity planning: users typically choose only a CPU and memory size per container, and the platform finds capacity to run it. This simplifies resource management but can lead to higher costs if those sizes are not tuned to the application's actual usage.

- Operational Complexity: Kubernetes has a steep learning curve and demands expertise in containerization, networking, and infrastructure management. Serverless platforms aim to simplify operations by providing a managed service with a reduced operational footprint. The complexity of Kubernetes can lead to extended deployment times and increased potential for configuration errors.

Trade-offs Between Serverless Container Platforms and Traditional Container Deployments

The choice between serverless container platforms and traditional container deployments involves trade-offs between control, cost, and operational complexity. The following points highlight these considerations:

- Control vs. Abstraction: Kubernetes offers granular control over infrastructure and application deployments, enabling fine-tuning of resource allocation, networking, and security. Serverless platforms abstract away many of these details, simplifying operations but reducing control. The level of control offered by Kubernetes allows for customized deployments tailored to specific application needs, while serverless platforms prioritize ease of use and rapid deployment.

- Cost: Kubernetes deployments may offer lower costs if resource utilization is carefully optimized. Serverless platforms often employ a pay-per-use pricing model, which can be cost-effective for workloads with fluctuating demand. However, consistent, high-volume workloads might be more economically deployed on Kubernetes where resources can be pre-provisioned.

- Operational Efficiency: Serverless platforms reduce operational overhead, simplifying deployment, scaling, and monitoring. Kubernetes deployments require dedicated teams for infrastructure management and application operations. This difference in operational efficiency can significantly impact the total cost of ownership (TCO).

- Vendor Lock-in: Serverless platforms can lead to vendor lock-in due to the reliance on specific cloud provider services. Kubernetes, being open-source, provides portability and flexibility, allowing for deployment across different cloud providers or on-premises infrastructure. This portability offers greater freedom of choice and reduces dependency on a single vendor.

Feature Comparison Table

The following table summarizes the key differences between serverless container platforms and traditional container deployments (Kubernetes) across several important features.

| Feature | Serverless Container Platform | Traditional Container Deployment (Kubernetes) |
|---|---|---|
| Scaling | Automatic, horizontal scaling based on demand; minimal configuration. | Requires configuration of autoscalers and resource requests; can be complex. |
| Cost | Pay-per-use pricing model; can be cost-effective for variable workloads. | Potential for lower costs with optimized resource utilization; requires proactive management. |
| Complexity | Simplified operations; reduced operational overhead; lower learning curve. | Steep learning curve; requires expertise in containerization, networking, and infrastructure management. |
| Management | Managed service; automated infrastructure management; less manual configuration. | Requires manual cluster setup, node management, and resource allocation. |

Use Cases and Real-World Examples

Serverless container platforms are finding increasing adoption across various industries due to their ability to streamline application deployment, reduce operational overhead, and optimize resource utilization. These platforms are particularly well-suited for modern application architectures, including web applications, APIs, and event-driven systems. By examining specific use cases, we can understand how serverless containers address specific business challenges and drive innovation.

Web Application Hosting

Web applications, often characterized by fluctuating traffic patterns, benefit significantly from the scalability and elasticity of serverless container platforms. These platforms automatically scale resources up or down based on demand, ensuring optimal performance and cost efficiency. For example:

- A retail company utilizes a serverless container platform to host its e-commerce website. During peak shopping seasons, such as Black Friday, the platform automatically scales the containerized application to handle increased traffic, preventing performance degradation and ensuring a smooth user experience. During off-peak times, the platform scales down resources, reducing operational costs. This dynamic scaling capability ensures that the application remains responsive regardless of the traffic volume.

- A media company uses a serverless container platform to run the origin and media-processing services behind its content delivery network (CDN). The CDN distributes media files to users globally, while the platform automatically provisions and manages the infrastructure for those backing services. The platform’s ability to quickly scale up resources allows the company to efficiently serve a large number of concurrent users, minimizing latency and improving user satisfaction.

- A software-as-a-service (SaaS) provider leverages a serverless container platform to host its web application. The platform handles the underlying infrastructure management, including server provisioning, patching, and scaling. This allows the SaaS provider to focus on developing and deploying new features without the burden of managing the underlying infrastructure.

API Development and Deployment

Serverless container platforms provide an ideal environment for developing and deploying APIs, enabling rapid iteration, simplified management, and efficient resource utilization. The event-driven nature of these platforms facilitates API endpoints that are triggered by specific events, such as HTTP requests or database updates. Consider the following examples:

- A financial services company builds a serverless API to provide real-time stock quotes. The API is triggered by incoming requests from mobile applications and web clients. The serverless platform automatically scales the API instances to handle a large number of concurrent requests, ensuring that users receive up-to-date information without delays. The API can be easily updated and deployed without downtime.

- An e-commerce platform develops a serverless API to manage order processing. The API is triggered by events such as new order creation, payment confirmation, and shipment updates. The serverless platform automatically triggers containerized functions to handle these events, ensuring that orders are processed efficiently and that customers receive timely updates.

- A healthcare provider uses a serverless API to integrate patient data from various sources. The API receives data from electronic health records (EHR) systems, wearable devices, and patient portals. The serverless platform automatically scales the API to handle the volume of data, and the API can be easily updated and maintained.
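On most serverless container platforms, an API is simply a container that serves HTTP. Google Cloud Run, for example, tells the container which port to listen on through the `PORT` environment variable. The following is a minimal sketch using only Python's standard library; the `/health` and `/orders/<id>` routes and their responses are hypothetical placeholders for real business logic.

```python
import json
import os
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer
from urllib.parse import urlsplit

ORDERS_PREFIX = "/orders/"


def build_response(path: str):
    """Route a request path to (status code, JSON-serializable body)."""
    if path == "/health":
        return 200, {"status": "ok"}
    if path.startswith(ORDERS_PREFIX) and len(path) > len(ORDERS_PREFIX):
        order_id = path[len(ORDERS_PREFIX):]
        # Hypothetical placeholder: a real service would query a database.
        return 200, {"order_id": order_id, "state": "received"}
    return 404, {"error": "not found"}


class Handler(BaseHTTPRequestHandler):
    def do_GET(self):
        # Ignore any query string when routing.
        status, body = build_response(urlsplit(self.path).path)
        payload = json.dumps(body).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(payload)))
        self.end_headers()
        self.wfile.write(payload)


def main():
    # Listen on the port the platform provides; fall back to 8080 locally.
    port = int(os.environ.get("PORT", "8080"))
    ThreadingHTTPServer(("0.0.0.0", port), Handler).serve_forever()
```

The container's entrypoint would call `main()`. Keeping the routing logic in `build_response` makes it testable without starting a server, and the threaded server lets one instance handle several concurrent requests.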

Event-Driven Architectures

Event-driven architectures, which rely on asynchronous communication between components, are well-suited for serverless container platforms. These platforms can efficiently handle events, such as queue messages, database updates, and file uploads, by triggering containerized functions in response to these events. For instance:

- An online gaming company uses a serverless container platform to process game events, such as player actions, score updates, and chat messages. The platform automatically triggers containerized functions in response to these events, allowing the company to scale its infrastructure based on player activity. This approach enables the company to handle a large number of concurrent players without performance issues.

- A social media platform employs a serverless container platform to process user-generated content, such as images and videos. The platform automatically triggers containerized functions to perform tasks like image resizing, video transcoding, and content moderation. This approach enables the platform to handle a large volume of user-generated content efficiently and cost-effectively.

- A manufacturing company uses a serverless container platform to process data from sensors in its factories. The platform triggers containerized functions in response to events from sensors, such as temperature changes or equipment failures. This approach allows the company to monitor its operations in real time, and quickly respond to any issues.
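Event-driven processing like this often reduces to routing each event to a handler by its type. This is a hypothetical sketch: the event types (`image.uploaded`, `sensor.reading`), the handlers, and the 80 °C threshold are invented for illustration, and a real platform would deliver events from a queue or pub/sub service rather than direct function calls.

```python
from typing import Callable, Dict

# Registry of event type -> handler function.
HANDLERS: Dict[str, Callable[[dict], str]] = {}


def on(event_type: str):
    """Decorator that registers a handler for one event type."""
    def register(fn):
        HANDLERS[event_type] = fn
        return fn
    return register


@on("image.uploaded")
def resize_image(event: dict) -> str:
    # Placeholder for real work such as generating a thumbnail.
    return f"resized {event['key']}"


@on("sensor.reading")
def check_temperature(event: dict) -> str:
    # Hypothetical threshold for flagging overheating equipment.
    if event["celsius"] > 80:
        return f"ALERT {event['sensor']} at {event['celsius']}C"
    return "ok"


def dispatch(event: dict) -> str:
    handler = HANDLERS.get(event["type"])
    if handler is None:
        # A real system might send this to a dead-letter queue instead.
        return f"unhandled {event['type']}"
    return handler(event)
```

Because each handler is independent, the platform can scale the consumers for each event type separately as its volume changes.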

Platform Providers and Solutions

The landscape of serverless container platforms is dominated by a few major cloud providers, each offering a suite of services designed to simplify the deployment, scaling, and management of containerized applications. These platforms abstract away much of the underlying infrastructure, allowing developers to focus on writing code and delivering value. Understanding the offerings of each provider, including their specific services and pricing models, is crucial for making informed decisions about platform selection.

Major Providers of Serverless Container Platforms

Several cloud providers have emerged as key players in the serverless container platform space. Each provider offers a unique set of services and features, catering to different needs and preferences.

- Amazon Web Services (AWS): AWS provides a comprehensive suite of services, including AWS Fargate and AWS Lambda. AWS Fargate is a serverless compute engine for containers, and AWS Lambda allows you to run code without provisioning or managing servers.

- Google Cloud Platform (GCP): Google Cloud offers Google Cloud Run, a managed platform that enables you to run stateless containers. Google Cloud Functions provides a serverless compute environment for event-driven applications.

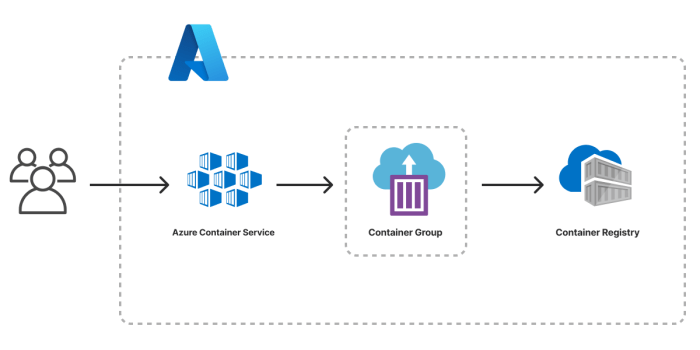

- Microsoft Azure: Azure offers Azure Container Instances (ACI) and Azure Container Apps. ACI provides a serverless container execution environment, while Azure Container Apps is designed for running microservices and containerized applications.

Specific Services Offered by Each Provider

Each provider’s serverless container platform offers a range of services, each designed to address specific aspects of containerized application deployment and management. These services vary in their features, capabilities, and integration with other cloud services.

- AWS:

- AWS Fargate: Allows you to run containers without managing servers or clusters. You specify the container image, CPU, and memory requirements, and Fargate handles the rest.

- AWS Lambda: Enables you to run code in response to events, such as changes in data, updates to files, or HTTP requests. Lambda can be triggered by various AWS services and supports multiple programming languages.

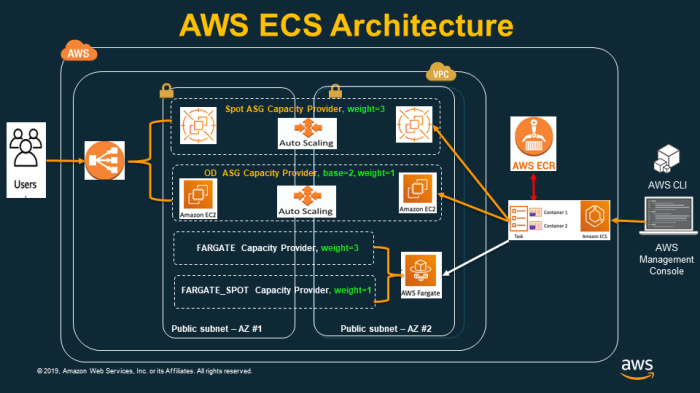

- Amazon ECS (Elastic Container Service) and Amazon EKS (Elastic Kubernetes Service): While not strictly serverless in the same way as Fargate, ECS and EKS with Fargate integration offer serverless options for container orchestration.

- Google Cloud:

- Google Cloud Run: A fully managed platform that automatically scales your stateless containers. It supports various programming languages and frameworks and integrates with other Google Cloud services.

- Cloud Functions: A serverless execution environment for event-driven applications. It allows you to write functions in various languages and trigger them from various events.

- Google Kubernetes Engine (GKE) Autopilot: GKE Autopilot simplifies cluster management by automating infrastructure provisioning and scaling. It can be considered a serverless offering for Kubernetes workloads.

- Microsoft Azure:

- Azure Container Instances (ACI): Provides a serverless container execution environment, allowing you to run containers without managing any underlying infrastructure.

- Azure Container Apps: A platform for running microservices and containerized applications, built on Kubernetes and Dapr. It provides features like scaling, service discovery, and traffic management.

- Azure Functions: A serverless compute service that enables you to run event-triggered code. It supports multiple programming languages and integrates with various Azure services.

Pricing Models of Different Platforms

Understanding the pricing models of serverless container platforms is crucial for cost optimization. Pricing typically varies based on resource consumption, such as CPU time, memory usage, and the number of requests. Each provider has its own pricing structure, which can influence the overall cost of running applications.

- AWS: AWS Fargate pricing is based on the vCPU and memory requested by the container, calculated per second. AWS Lambda pricing is based on the number of requests and the duration of each execution, measured in milliseconds and multiplied by the amount of memory configured for the function.

- Google Cloud: Google Cloud Run pricing is based on the CPU and memory allocated to container instances and on the number of requests. Cloud Functions pricing is based on the number of invocations, the duration of the function execution, and the memory allocated.

- Microsoft Azure: Azure Container Instances (ACI) pricing is based on the amount of CPU and memory allocated, calculated per second. Azure Container Apps pricing is based on the number of requests and the CPU and memory consumption. Azure Functions pricing is based on the number of executions and the resource consumption.
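To make the request-plus-duration model concrete, the sketch below estimates a function bill from invocation count, average duration, and configured memory. It assumes every invocation takes the same time, that duration is billed in whole milliseconds, and that memory is converted to GB by dividing megabytes by 1,024. The rates are caller-supplied placeholders, and free tiers, tiered pricing, and data transfer are ignored. `lambda_style_cost` is a hypothetical helper, not a provider API.

```python
import math


def lambda_style_cost(
    invocations: int,
    avg_duration_ms: float,
    memory_mb: int,
    price_per_million_requests: float,
    price_per_gb_second: float,
) -> float:
    """Estimate a request-plus-duration bill; all rates are placeholders supplied by the caller."""
    # Assumption: every invocation lasts avg_duration_ms, billed in whole milliseconds.
    billed_ms = math.ceil(avg_duration_ms)
    # GB-seconds = invocations x memory (MB / 1024) x billed duration in seconds.
    gb_seconds = invocations * (memory_mb / 1024) * (billed_ms / 1000)
    request_cost = invocations / 1_000_000 * price_per_million_requests
    compute_cost = gb_seconds * price_per_gb_second
    # Free tiers, tiered discounts, and data transfer are deliberately ignored.
    return request_cost + compute_cost


if __name__ == "__main__":
    # Illustrative rates only; check the provider's current price list.
    total = lambda_style_cost(3_000_000, 120, 512, 0.20, 0.0000166667)
    print(f"estimated monthly cost: {total:.2f}")
```

With these illustrative rates, three million 120 ms invocations at 512 MB amount to 180,000 GB-seconds, or about 3.60 in total. Because duration is multiplied by memory, halving the memory setting halves the compute portion of the bill only if the function does not run correspondingly slower.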

For instance, consider a scenario where a small web application is deployed using serverless containers. The application receives a moderate amount of traffic.

- AWS Fargate: You’d pay for the vCPU and memory requested by the running tasks, for as long as they run, plus any data transfer costs.

- Google Cloud Run: Costs would be based on the CPU and memory allocated to the containers, along with request costs.

- Azure Container Instances: The pricing model would be similar to Fargate, based on the CPU and memory allocated to the container group for as long as it runs.
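The practical difference between allocation-based billing (Fargate, ACI) and request-based billing (as in Cloud Run) shows up when an application sits idle much of the time. The sketch below compares the two for one month under stated assumptions: a 30-day month, a single always-running instance in the allocation-based case, requests that never overlap in the request-based case, and hypothetical per-second rates. The function names are illustrative, not provider APIs.

```python
SECONDS_PER_MONTH = 30 * 24 * 3600  # assumption: a 30-day month


def allocation_billed_cost(vcpu, memory_gb, vcpu_rate, gb_rate, seconds=SECONDS_PER_MONTH):
    """Allocation-based billing: pay for requested vCPU and memory for as long as the container runs."""
    return seconds * (vcpu * vcpu_rate + memory_gb * gb_rate)


def request_billed_cost(requests, avg_request_s, vcpu, memory_gb,
                        vcpu_rate, gb_rate, price_per_million_requests):
    """Request-based billing: pay for resources only while a request is in flight, plus a per-request fee."""
    # Assumption: requests never overlap, so billable time is simply requests x duration.
    busy_seconds = requests * avg_request_s
    resource_cost = busy_seconds * (vcpu * vcpu_rate + memory_gb * gb_rate)
    request_cost = requests / 1_000_000 * price_per_million_requests
    return resource_cost + request_cost


if __name__ == "__main__":
    # Hypothetical per-second rates for 1 vCPU and 2 GB of memory.
    rates = dict(vcpu=1, memory_gb=2, vcpu_rate=0.00002, gb_rate=0.000002)
    always_on = allocation_billed_cost(**rates)
    on_demand = request_billed_cost(1_000_000, 0.2, **rates, price_per_million_requests=0.40)
    print(f"always on: {always_on:.2f}, request-based: {on_demand:.2f}")
```

With these placeholder rates, the always-on container costs about 62.21 per month, while serving one million 200 ms requests with request-based billing costs about 5.20. The gap narrows as traffic grows, because busy time approaches the full month.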

A detailed comparison of pricing would require considering factors such as:

- Region: Pricing can vary based on the geographical region where the application is deployed.

- Usage Patterns: Applications with spiky or intermittent traffic may benefit from the auto-scaling capabilities of serverless platforms, especially platforms that scale to zero and stop billing for compute when idle.

- Committed-Use Discounts: Some providers offer discounts for committing to a certain level of resource usage (for example, AWS Savings Plans apply to Fargate and Lambda, and Google Cloud offers committed use discounts).

Containerization Technologies

Serverless container platforms rely heavily on containerization technologies to package and deploy applications. These technologies encapsulate applications and their dependencies into isolated units, ensuring consistent execution across different environments. This section explores the role of Docker and other containerization tools in enabling the functionality of serverless container platforms.

Docker in Serverless Container Platforms

Docker plays a pivotal role in serverless container platforms by providing a standardized way to package applications. With Docker, developers define an application’s environment, including code, runtime, system tools, system libraries, and settings, in a Docker image. These images are then used to create containers, which are isolated running instances of the application. This approach provides the portability and consistency that serverless environments need, because an application must run reliably on whatever infrastructure the platform schedules it onto.

The Docker ecosystem also provides robust tools for building, managing, and distributing container images, which significantly simplifies the deployment pipeline for serverless applications. Because images are versioned, an update or rollback amounts to pointing a service at a different image tag or digest, which helps maintain application stability and availability. Docker’s widespread adoption and maturity make it a natural fit for serverless container platforms, which generally accept standard Docker and OCI images.

Integration of Containerd and Other Technologies

While Docker has historically been the dominant containerization technology, the runtime layer underneath it has evolved. Docker Engine itself delegates container execution to containerd, and serverless container platforms increasingly use containerd directly. The containerd runtime focuses on core container execution (pulling and storing images, then starting and supervising containers) and is designed to be lightweight and performant, which suits the rapid scaling and resource-efficiency requirements of serverless platforms.

Keeping the runtime focused solely on container execution also reduces its attack surface and simplifies platform management. Many serverless container platforms now use containerd as their primary runtime engine and hide container-management details from the user, which can improve performance and resource utilization.

Other runtimes are also finding their place. CRI-O is a lightweight, OCI-compliant container runtime that implements the Kubernetes Container Runtime Interface (CRI) and is designed specifically for Kubernetes, offering an alternative to Docker. Adopting these alternative runtimes lets serverless platforms optimize for specific performance and security needs; the choice of runtime often depends on performance, resource constraints, and the features the platform needs to support.

Dockerfile Example

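A Dockerfile defines the steps needed to build a Docker image. Here’s a basic example demonstrating how to create a Dockerfile for a simple web application using Node.js:

```dockerfile
# Official Node.js LTS base image (the slim variant keeps the image small)
FROM node:22-slim
WORKDIR /app
# Copy the manifests first so the dependency layer is cached between code changes
COPY package*.json ./
RUN npm install --omit=dev
COPY . .
EXPOSE 3000
# Drop root privileges; the official image ships an unprivileged "node" user
USER node
CMD ["node", "index.js"]
```

This Dockerfile does the following:

- Specifies a Node.js LTS release (`node:22-slim`) as the base image.

- Sets the working directory inside the container to `/app`.

- Copies `package.json` (and `package-lock.json`, if present) and installs production dependencies. Copying these files before the rest of the source lets Docker reuse the cached dependency layer when only application code changes.

- Copies the application code.

- Documents that the application listens on port 3000 (`EXPOSE` does not publish the port by itself; the platform or `docker run` does that).

- Switches to the unprivileged `node` user.

- Defines the command to run the application, using the exec form so that `node` receives stop signals directly.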

This Dockerfile is a straightforward example of how Docker can be used to containerize a Node.js application. This process is fundamental to the deployment of applications in serverless container platforms, allowing them to be packaged, deployed, and scaled efficiently.

Event-Driven Architectures and Serverless Containers

Serverless container platforms are intrinsically linked to event-driven architectures, offering a powerful combination for building scalable, resilient, and responsive applications. This integration allows developers to create systems that react dynamically to events, leading to improved efficiency and reduced operational overhead. This section will delve into how these platforms leverage event-driven principles.

Integration of Serverless Containers with Event-Driven Architectures

Event-driven architectures, at their core, are predicated on the concept of asynchronous communication and decoupled components. Serverless container platforms excel in this environment by providing the necessary infrastructure to deploy and manage containerized functions that can be triggered by various events. The platform handles the underlying infrastructure, allowing developers to focus on the business logic encapsulated within the containers.

Role of Event Triggers and Message Queues in Serverless Deployments

Event triggers and message queues are fundamental components of event-driven serverless deployments. These mechanisms facilitate the flow of events and the invocation of container functions.

- Event Triggers: Event triggers are the mechanisms that initiate the execution of a serverless container function in response to a specific event. These events can originate from various sources, including:

- HTTP requests (e.g., API calls)

- Database changes (e.g., updates to a record)

- File uploads (e.g., to cloud storage)

- Scheduled events (e.g., cron jobs)

- Message queue events (e.g., a message arriving in a queue)

- Message Queues: Message queues act as intermediaries between event producers and consumers (serverless container functions). They provide several crucial benefits:

- Asynchronous Communication: They decouple the event producer from the event consumer, allowing for asynchronous processing. The producer can publish an event to the queue without waiting for the consumer to process it.

- Scalability: They enable the independent scaling of event producers and consumers. Multiple consumers can process events from the same queue, improving throughput.

- Reliability: They provide a durable storage mechanism for events, ensuring that events are not lost even if the consumer is temporarily unavailable.
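The decoupling and reliability properties above can be illustrated with a toy, in-memory queue. It is a sketch, not a model of any particular service: the producer's `publish` returns immediately, a consumer must explicitly `ack` a message, and `requeue_unacked` stands in for an acknowledgement (or visibility) timeout expiring, after which an unacknowledged message becomes deliverable again. All class and method names are hypothetical.

```python
from __future__ import annotations

import itertools
from collections import deque
from dataclasses import dataclass


@dataclass
class Message:
    id: int
    body: dict
    receive_count: int = 0


class InMemoryQueue:
    """Toy queue: a message is deleted only after the consumer acknowledges it."""

    def __init__(self) -> None:
        self._ready: deque[Message] = deque()      # visible, waiting to be received
        self._in_flight: dict[int, Message] = {}   # received but not yet acknowledged
        self._ids = itertools.count(1)

    def publish(self, body: dict) -> int:
        # The producer returns immediately; no consumer has to be running.
        msg = Message(next(self._ids), body)
        self._ready.append(msg)
        return msg.id

    def receive(self) -> Message | None:
        if not self._ready:
            return None
        msg = self._ready.popleft()
        msg.receive_count += 1
        self._in_flight[msg.id] = msg
        return msg

    def ack(self, msg_id: int) -> None:
        # Successful processing: remove the message permanently.
        self._in_flight.pop(msg_id, None)

    def requeue_unacked(self) -> None:
        # Stand-in for an acknowledgement timeout: unacked messages become visible again.
        self._ready.extend(self._in_flight.values())
        self._in_flight.clear()

    def __len__(self) -> int:
        return len(self._ready) + len(self._in_flight)


if __name__ == "__main__":
    q = InMemoryQueue()
    q.publish({"order_id": "A1"})
    q.publish({"order_id": "A2"})
    q.ack(q.receive().id)   # A1 processed successfully
    q.receive()             # A2 received, but the consumer crashes before acking
    q.requeue_unacked()     # the timeout expires...
    retry = q.receive()     # ...and A2 is delivered again
    print(retry.body, retry.receive_count)
```

Because a message that was received but never acknowledged is redelivered, consumers see at-least-once delivery. The `receive_count` field is the kind of counter real queues use to divert repeatedly failing messages to a dead-letter queue.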

Example of an Event Trigger and Container Function Initiation

Consider an e-commerce platform. When a customer places an order, the platform emits an event, for example by publishing a message to a message queue, and that event initiates a container function to process the order.

The sequence of events would typically unfold as follows:

- Order Placement: A customer places an order through the e-commerce platform’s frontend.

- Event Publication: The platform publishes an “order placed” event to a message queue (e.g., AWS SQS, Google Cloud Pub/Sub, or Azure Service Bus). This event would contain relevant order details like the customer’s ID, the items ordered, and the order total.

- Event Trigger: A serverless container platform monitors the message queue for new events.

- Container Function Invocation: Upon receiving the “order placed” event, the platform triggers a pre-defined container function.

- Order Processing: The container function, which contains the order processing logic, is executed. This function might perform tasks such as:

- Validating the order details.

- Updating the inventory.

- Generating an invoice.

- Sending a confirmation email to the customer.

This architecture offers several advantages. The frontend does not need to wait for order processing to complete, which keeps the checkout experience fast. The container function can be scaled independently to handle a large volume of orders. The system is also more resilient: if the order-processing function fails temporarily, the message remains in (or returns to) the queue and is delivered again, so orders are not silently dropped. Because most message queues guarantee at-least-once delivery, the same order event may occasionally arrive twice, so the processing logic should be idempotent.
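The order-processing steps can be sketched as a single handler. This is a hypothetical illustration: `OrderPlaced` and `OrderProcessor` are invented names, the inventory, invoicing, and email services are replaced by in-memory stand-ins, and the set of processed order IDs would have to live in a durable store in a real deployment. The handler skips events it has already processed, since a queue that delivers at least once can hand the same event to the function more than once, and it raises an exception on invalid orders so that the message is not acknowledged.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class OrderPlaced:
    order_id: str
    customer_id: str
    items: dict[str, int]  # SKU -> quantity
    total: float


class OrderProcessor:
    """Hypothetical order-processing function with in-memory stand-ins for real services."""

    def __init__(self, inventory: dict[str, int]) -> None:
        self.inventory = inventory
        self.invoices: list[str] = []
        self.confirmations: list[str] = []  # customer IDs that were "emailed"
        self._processed: set[str] = set()   # would be a durable store in practice

    def handle(self, event: OrderPlaced) -> str:
        # At-least-once delivery can repeat an event; processing it twice must be harmless.
        if event.order_id in self._processed:
            return "duplicate"
        # 1. Validate the order details and stock levels before changing anything.
        if not event.items or event.total <= 0 or any(q <= 0 for q in event.items.values()):
            raise ValueError(f"invalid order {event.order_id}")
        for sku, qty in event.items.items():
            if self.inventory.get(sku, 0) < qty:
                raise ValueError(f"insufficient stock for {sku}")
        # 2. Update the inventory.
        for sku, qty in event.items.items():
            self.inventory[sku] -= qty
        # 3. Generate an invoice.
        self.invoices.append(f"INV-{event.order_id}")
        # 4. Record the confirmation email instead of sending it.
        self.confirmations.append(event.customer_id)
        self._processed.add(event.order_id)
        return "processed"
```

Raising rather than returning on failure lets the platform leave the message in the queue for a retry. One caveat of this simple design: if the function crashed between updating the inventory and recording the order as processed, a retry would repeat the inventory update, so production code would typically make these steps transactional or individually idempotent.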

This example showcases the power of event-driven architectures in conjunction with serverless containers, resulting in a responsive, scalable, and robust e-commerce system.

Security Considerations

Serverless container platforms introduce a paradigm shift in application deployment, and with this shift comes a new set of security considerations. These platforms abstract away much of the underlying infrastructure management, but the shared responsibility model necessitates a deep understanding of the security aspects at each layer, from the platform itself to the application code. Failure to address these considerations can lead to vulnerabilities and potential breaches.

Security Management within Serverless Container Platforms

Serverless container platforms offer built-in security features, but the responsibility for their effective utilization rests with the user. The platform provider manages the underlying infrastructure security, including physical security, network security, and operating system patching. However, users are responsible for securing their application code, container images, and the configurations within the platform. This shared responsibility model requires a proactive approach to security.

- Identity and Access Management (IAM): IAM is a cornerstone of security. The platform provides tools to define roles and permissions, controlling access to resources and services. Implement the principle of least privilege, granting only the necessary permissions to users and services. Regularly review and audit IAM configurations to ensure they align with security policies.

- Container Image Security: Container images are the building blocks of serverless container applications. Secure them by scanning for vulnerabilities before deployment, and use image signing to verify their integrity. Store images in a private registry and restrict access to authorized users and services only.

- Network Security: Serverless container platforms often provide virtual networks and security groups to control network traffic. Configure these networks to restrict access to only the necessary ports and protocols. Implement network segmentation to isolate different application components and reduce the blast radius of potential attacks.

- Secrets Management: Secrets, such as API keys and database credentials, should be securely stored and managed. Leverage the platform’s secrets management features to encrypt and protect sensitive information. Avoid hardcoding secrets in application code. Rotate secrets regularly to minimize the impact of a potential compromise.

- Logging and Monitoring: Comprehensive logging and monitoring are crucial for detecting and responding to security incidents. Enable logging for all relevant events, including access attempts, API calls, and container deployments. Monitor logs for suspicious activity and set up alerts to notify security teams of potential threats.

- Compliance and Auditing: Serverless container platforms often support compliance with industry standards, such as PCI DSS and HIPAA. Understand the platform’s compliance certifications and implement security controls to meet the requirements of these standards. Regularly audit security configurations and logs to ensure compliance.
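Part of regularly reviewing IAM configurations can be automated. The sketch below flags statements in an AWS-style IAM policy document (JSON with `Effect`, `Action`, and `Resource` keys) that allow wildcard actions such as `s3:*` or apply to every resource. It is a hypothetical helper, not a provider tool: it ignores `NotAction`, `NotResource`, conditions, and other providers’ role-binding formats, so treat it as a first-pass check rather than a complete audit.

```python
from __future__ import annotations

import json


def _as_list(value: str | list[str]) -> list[str]:
    # IAM allows either a single string or a list for Action and Resource.
    return [value] if isinstance(value, str) else list(value)


def find_overly_broad_statements(policy_json: str) -> list[str]:
    """Flag Allow statements that use wildcard actions or apply to every resource."""
    policy = json.loads(policy_json)
    statements = policy.get("Statement", [])
    if isinstance(statements, dict):  # a single statement may be given as an object
        statements = [statements]
    findings = []
    for i, stmt in enumerate(statements):
        if stmt.get("Effect") != "Allow":
            continue
        sid = stmt.get("Sid", f"statement {i}")
        for action in _as_list(stmt.get("Action", [])):
            if action == "*" or action.endswith(":*"):
                findings.append(f"{sid}: wildcard action '{action}'")
        if "*" in _as_list(stmt.get("Resource", [])):
            findings.append(f"{sid}: applies to all resources")
    return findings


if __name__ == "__main__":
    example = {
        "Version": "2012-10-17",
        "Statement": [
            {"Sid": "ReadOrders", "Effect": "Allow", "Action": "dynamodb:GetItem",
             "Resource": "arn:aws:dynamodb:us-east-1:123456789012:table/Orders"},
            {"Sid": "TooBroad", "Effect": "Allow", "Action": ["s3:*"], "Resource": "*"},
        ],
    }
    for finding in find_overly_broad_statements(json.dumps(example)):
        print(finding)
```

Running a check like this in a deployment pipeline catches overly permissive roles before they reach production, which complements, but does not replace, periodic manual review.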

Security Best Practices for Serverless Container Platform Deployments

Implementing security best practices is essential for protecting serverless container applications from various threats. These practices span across the entire application lifecycle, from development to deployment and operation. A proactive and layered approach to security is crucial for mitigating risks.

- Secure Development Practices: Employ secure coding practices to minimize vulnerabilities in application code. Conduct regular code reviews and static analysis to identify and address security flaws. Use secure libraries and frameworks and keep dependencies up to date.

- Container Image Hardening: Harden container images by removing unnecessary packages and services. Use a minimal base image to reduce the attack surface. Implement security scanning tools to identify and address vulnerabilities in the images. Sign the images to ensure their integrity.

- Least Privilege Principle: Grant only the necessary permissions to users, services, and containers. Avoid using overly permissive roles and policies. Regularly review and audit permissions to ensure they align with the principle of least privilege.

- Network Segmentation: Segment the network to isolate different application components and reduce the impact of a security breach. Use virtual networks and security groups to control network traffic. Implement firewalls to restrict access to only the necessary ports and protocols.

- Secrets Management: Store secrets securely and manage them effectively. Use a secrets management service to encrypt and protect sensitive information. Rotate secrets regularly to minimize the impact of a potential compromise. Avoid hardcoding secrets in application code.

- Monitoring and Alerting: Implement comprehensive monitoring and alerting to detect and respond to security incidents. Monitor logs for suspicious activity, such as unauthorized access attempts and unusual network traffic. Set up alerts to notify security teams of potential threats.

- Regular Security Audits: Conduct regular security audits to identify and address vulnerabilities in the application and infrastructure. Perform penetration testing to simulate real-world attacks and assess the effectiveness of security controls. Review security configurations and logs regularly.

- Stay Updated: Keep container images and dependencies up to date with the latest security patches, and adopt new platform or runtime versions as the provider releases them to benefit from the latest security features and bug fixes. Subscribe to security advisories and mailing lists to stay informed about potential threats.
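The advice to avoid hardcoding secrets usually translates into reading them at startup from values the platform injects. Several platforms can expose secrets from a managed secret store as environment variables or as mounted files. The sketch below assumes both options and follows the `NAME_FILE` convention (an environment variable holding the path of a mounted secret file) used by some official container images. `load_secret` and `MissingSecretError` are hypothetical names.

```python
from __future__ import annotations

import os
from collections.abc import Mapping
from pathlib import Path


class MissingSecretError(RuntimeError):
    """Raised at startup when a required secret was not injected."""


def load_secret(name: str, env: Mapping[str, str] | None = None) -> str:
    """Read a secret injected by the platform; there is deliberately no hardcoded fallback."""
    env = os.environ if env is None else env
    # Option 1: the platform exposes the secret directly as an environment variable.
    if name in env:
        return env[name]
    # Option 2 (NAME_FILE convention): the variable holds the path of a mounted secret file.
    file_path = env.get(f"{name}_FILE")
    if file_path:
        return Path(file_path).read_text(encoding="utf-8").rstrip("\n")
    # Fail fast; the message names the secret but never includes a value.
    raise MissingSecretError(f"secret {name!r} was not provided")


if __name__ == "__main__":
    try:
        db_password = load_secret("DB_PASSWORD")
        print("DB_PASSWORD loaded")  # never print the value itself
    except MissingSecretError as err:
        print(f"configuration error: {err}")
```

Failing fast when a secret is missing surfaces misconfiguration at deploy time, and because the value is read fresh at each startup, rotating the secret only requires redeploying or restarting the container.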

Future Trends and Evolution

Serverless container platforms are poised for significant evolution, driven by advancements in container orchestration, cloud computing, and the broader shift towards more efficient and scalable application deployment models. This section explores emerging trends and provides a descriptive illustration of how these platforms may evolve.

Advancements in Container Orchestration

Container orchestration technologies are continuously evolving to meet the demands of serverless container platforms. Several key advancements are shaping the future:

- Enhanced Automation: Automation will become even more sophisticated, enabling platforms to automatically scale container instances based on real-time demand, optimize resource allocation, and manage deployments with minimal human intervention. This includes automated container image building, testing, and deployment pipelines.

- Improved Resource Efficiency: Orchestration platforms will focus on improving resource utilization. This involves techniques such as bin-packing, which optimizes the placement of containers on physical or virtual machines to minimize wasted resources, and more intelligent scheduling algorithms that consider factors like CPU, memory, and network I/O.

- Advanced Networking: Container networking will become more flexible and sophisticated. Technologies like service meshes will provide advanced features such as traffic management, security, and observability, enabling more complex and resilient application architectures.

- Edge Computing Integration: Orchestration platforms will increasingly integrate with edge computing environments, allowing serverless containers to be deployed and managed across geographically distributed infrastructure. This will enable low-latency applications and services closer to end-users.
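Bin-packing, mentioned above, can be illustrated with the classic first-fit decreasing heuristic: sort containers by their largest normalized resource request, then place each on the first node with enough free CPU and memory, opening a new node only when none fits. This is a sketch of the technique under simplifying assumptions (identical nodes, two resources, static requests), not the algorithm any particular orchestrator uses; production schedulers combine many filtering and scoring rules.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Node:
    free_cpu: float  # vCPU still available
    free_mem: float  # GiB still available
    containers: list[str] = field(default_factory=list)


def first_fit_decreasing(
    requests: dict[str, tuple[float, float]], node_cpu: float, node_mem: float
) -> list[Node]:
    """Pack (cpu, mem) requests onto identical nodes with the first-fit decreasing heuristic."""
    # Size each container by its largest demand relative to node capacity, biggest first.
    order = sorted(
        requests.items(),
        key=lambda item: max(item[1][0] / node_cpu, item[1][1] / node_mem),
        reverse=True,
    )
    nodes: list[Node] = []
    for name, (cpu, mem) in order:
        if cpu > node_cpu or mem > node_mem:
            raise ValueError(f"{name} does not fit on an empty node")
        # First fit: the earliest-opened node with room for both resources.
        target = next((n for n in nodes if n.free_cpu >= cpu and n.free_mem >= mem), None)
        if target is None:
            target = Node(node_cpu, node_mem)
            nodes.append(target)
        target.free_cpu -= cpu
        target.free_mem -= mem
        target.containers.append(name)
    return nodes


if __name__ == "__main__":
    demo = {"api": (2, 4), "worker": (2, 2), "cache": (1, 4), "cron": (0.5, 1), "sidecar": (0.5, 0.5)}
    for i, node in enumerate(first_fit_decreasing(demo, node_cpu=4, node_mem=8)):
        print(i, node.containers)
```

With 4-vCPU, 8-GiB nodes, the five example containers fit on two nodes: `api` and `worker` exhaust the first node’s CPU, and the remaining three share the second. Placing large requests first tends to leave small gaps that later, smaller containers can fill.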

Evolution of Serverless Container Platforms

Serverless container platforms are expected to evolve in several key areas:

- Increased Abstraction: Platforms will continue to abstract away the underlying infrastructure complexities, making it easier for developers to deploy and manage applications without having to deal with container runtime, networking, or storage. This will be achieved through improved user interfaces, command-line tools, and automation capabilities.

- Enhanced Developer Experience: The developer experience will be significantly improved, with features such as integrated development environments (IDEs), debugging tools, and monitoring dashboards specifically tailored for serverless container applications. This will streamline the development lifecycle and make it easier for developers to build and deploy applications.

- Greater Support for Diverse Workloads: Platforms will expand their support for a wider range of workloads, including batch processing, machine learning, and data streaming applications. This will involve providing optimized container images, runtime environments, and integration with various cloud services.

- Improved Security and Compliance: Security will remain a top priority, with platforms incorporating advanced security features such as container image scanning, vulnerability management, and access control. Compliance with industry regulations and standards will also be a key focus.

The following diagram illustrates a potential future scenario:

Descriptive Illustration: The diagram shows a multi-layered architecture. The bottom layer represents the underlying infrastructure, including physical servers, virtual machines, and cloud resources. Above this is the container orchestration layer, which manages container deployments, scaling, and networking. The next layer represents the serverless container platform, which provides an abstraction layer for developers, allowing them to deploy and manage containers without having to deal with the underlying infrastructure complexities.

Finally, the top layer represents the applications, which are deployed as serverless containers and can be accessed by end-users.

Key elements within the illustration include:

- Automated Scaling: The platform automatically scales container instances based on real-time demand.

- Integrated Development Environment (IDE): Developers use a fully integrated IDE to build, test, and deploy applications.

- Monitoring Dashboard: A centralized dashboard provides real-time monitoring of application performance and resource utilization.

- Security Features: Container image scanning, vulnerability management, and access control are integrated throughout the platform.

This architecture represents a future where serverless container platforms are highly automated, developer-friendly, secure, and capable of supporting a wide range of workloads.

Final Thoughts

In conclusion, serverless container platforms represent a significant advancement in cloud computing, offering a compelling solution for modern application development and deployment. By combining the benefits of serverless architecture with the portability of containers, these platforms empower developers to build scalable, cost-effective, and easily manageable applications. As the technology matures, we can anticipate further innovations that will continue to transform how we build and deploy software, solidifying its position as a key enabler for future-proof applications.

Essential Questionnaire

What is the primary advantage of using a serverless container platform?

The primary advantage is the abstraction of infrastructure management, allowing developers to focus on application logic rather than server administration.

How does a serverless container platform handle scaling?

Serverless container platforms automatically scale container instances up or down based on demand, eliminating the need for manual intervention.
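Many serverless container platforms scale on request concurrency: they add instances until each one is handling roughly a target number of in-flight requests, and remove them (down to zero, if allowed) as traffic drops. The function below is a simplified sketch of that calculation; real autoscalers also average the metric over a time window and delay scale-down to avoid flapping. The function name, parameters, and defaults are assumptions for illustration.

```python
import math


def desired_instances(
    in_flight_requests: int,
    target_concurrency: int,
    min_instances: int = 0,
    max_instances: int = 100,
) -> int:
    """Instances needed so each handles about target_concurrency in-flight requests."""
    if target_concurrency <= 0:
        raise ValueError("target_concurrency must be positive")
    needed = math.ceil(in_flight_requests / target_concurrency)
    # Clamp to the configured bounds; min_instances=0 permits scaling to zero when idle.
    return max(min_instances, min(max_instances, needed))


if __name__ == "__main__":
    for load in (0, 1, 250, 10_000):
        print(load, desired_instances(load, target_concurrency=80))
```

With a target of 80 concurrent requests per instance, 250 in-flight requests yield 4 instances, a burst of 10,000 is capped at the configured maximum of 100, and an idle service with `min_instances=0` scales to zero.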

What are the cost benefits of using a serverless container platform?

Cost benefits include pay-per-use pricing models, which reduce charges for idle capacity (and eliminate them on platforms that scale to zero), and reduced operational overhead.

How do serverless container platforms differ from traditional container orchestration tools like Kubernetes?

Serverless platforms abstract away the complexity of managing the underlying infrastructure, while Kubernetes requires more hands-on configuration and management.