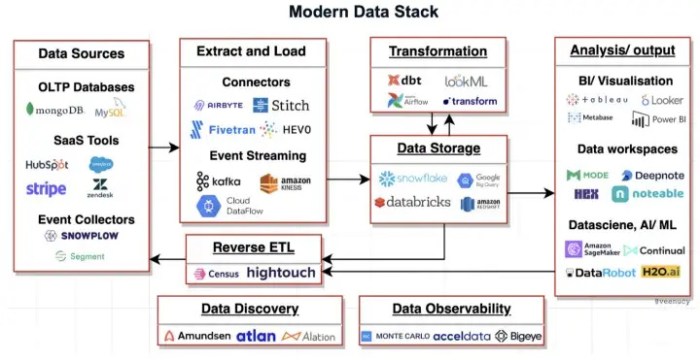

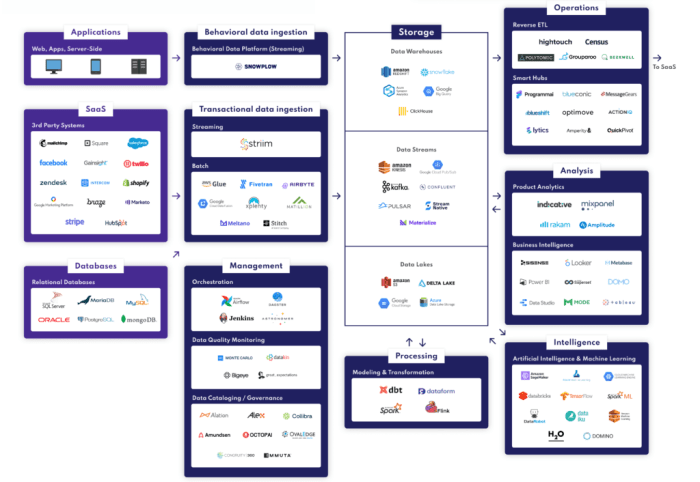

Understanding the components of a modern data stack is crucial for businesses that want to use their data effectively. This overview covers each layer, from data ingestion to visualization, security, and cloud implementation, and explains the building blocks that power modern data solutions.

This article breaks down the key elements of a modern data stack, their individual roles, and how they connect. We’ll examine the evolving landscape of data technologies and show how they work together to extract value from data, enabling informed decisions and driving business growth.

Defining a Modern Data Stack

A modern data stack is a collection of interconnected tools and technologies designed to capture, process, store, analyze, and visualize data efficiently. It is a significant evolution from traditional data warehousing, emphasizing agility, scalability, and the flexibility to adapt as business needs change. These stacks are central to extracting insights from data, supporting informed decision-making, and fostering innovation. Unlike its predecessors, a modern data stack emphasizes speed and adaptability.

It leverages cloud-based technologies, enabling businesses to scale resources as needed and respond quickly to market changes. This shift from traditional, on-premises solutions to cloud-based architectures fosters a culture of continuous improvement and experimentation, allowing organizations to swiftly iterate on data strategies.

Key Characteristics of a Modern Data Stack

Modern data stacks are modular: businesses can select the tools that best meet their specific needs and replace individual components without rebuilding the whole system. This adaptability is a key differentiator from the rigid, monolithic structure of traditional data warehouses. Because the components often come from different vendors and open-source projects, reliable integration between them is essential for efficient data flow and analysis. Well-integrated, purpose-built components help improve data quality and reduce bottlenecks in the data pipeline.

Evolution from Traditional Data Stacks

Traditional data stacks often relied on rigid, on-premises infrastructure. Data was typically stored in relational databases and processed using batch-oriented ETL (Extract, Transform, Load) processes. This approach often proved cumbersome and inflexible when dealing with the high volume and velocity of data generated by modern applications and business operations. The modern data stack embraces the flexibility and scalability of cloud-based technologies, allowing for a more dynamic and adaptable data strategy.

Core Functionalities of a Modern Data Stack

A modern data stack covers several core functions. It ingests data from diverse sources, so organizations can capture data from many platforms and applications. It transforms and cleans that data to ensure quality and consistency. It stores and manages the data in scalable cloud environments. Finally, it supports analysis and visualization, so businesses can derive meaningful insights from their data.

Hierarchical Structure of a Modern Data Stack

The components of a modern data stack can be organized hierarchically into several key layers:

- Data Ingestion and Preparation: This layer encompasses tools for collecting data from various sources, including databases, APIs, and streaming platforms. Crucial steps include data validation and cleansing to ensure data quality.

- Data Storage and Processing: This layer includes cloud-based data warehouses or data lakes for storing and managing large volumes of data, along with batch and stream processing engines that transform and prepare it.

- Data Analysis and Visualization: This layer encompasses tools for exploring and analyzing data, including business intelligence (BI) tools, dashboards, and data visualization platforms. These tools empower data-driven decision-making.

- Data Governance and Security: This layer enforces data security, regulatory compliance, and access control, which are essential for maintaining trust and data integrity.

Examples of Successful Implementations

Many organizations have implemented modern data stacks. E-commerce companies, for example, use them to gain near real-time insight into customer behavior, enabling personalized recommendations and better inventory management. In the financial sector, they support faster fraud detection and risk management. These examples show how modern data stacks can increase business agility and support innovation.

Data Ingestion and Storage

Data ingestion and storage form the foundation of any modern data stack. Data ingestion is the process of collecting data from various sources and loading it into a central repository, where it is readily available for analysis. Robust storage is critical for scalability and performance, enabling efficient access to large datasets. This section covers the main ingestion methods, storage options, the importance of transformation and cleaning, and the role of data pipelines in this process.

Different ingestion methods have varying strengths and weaknesses, and the optimal choice depends on the specific needs of the organization. The selection of storage solutions is equally critical, as it impacts the scalability and performance of the entire data stack. Data transformation and cleaning are essential steps to ensure data quality and consistency before it’s stored. Data pipelines, orchestrated workflows, play a pivotal role in automating these processes, improving efficiency and enabling timely data access.

Data Ingestion Methods

Various methods exist for ingesting data, each with its own advantages and disadvantages. Batch ingestion processes large volumes of data at scheduled intervals, while streaming ingestion processes data continuously as it arrives. Batch ingestion is suitable for periodic updates, such as daily or weekly reports, while streaming ingestion is ideal for near real-time analysis, like fraud detection or customer churn prediction.

- Batch Ingestion: This method suits scheduled data updates, such as loading daily sales figures or weekly website traffic data. Its strength is handling large volumes of data efficiently. However, the delay between data arriving and being processed makes it unsuitable for real-time applications. Typical tooling includes scheduled extract jobs that land files or database snapshots, followed by batch processing with tools like Apache Spark or Hadoop MapReduce. A common use case is ETL (Extract, Transform, Load) for data warehousing.

- Streaming Ingestion: This method is essential for applications that require real-time processing. It continuously captures and processes data as it becomes available, such as stock market feeds or social media activity. Its key strength is low latency, enabling immediate analysis of and reaction to events. However, handling high-volume, high-velocity data streams adds significant complexity. Examples include Apache Kafka for message queuing combined with stream processing frameworks such as Apache Flink or Spark Structured Streaming.
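The difference between the two modes can be sketched in a few lines of Python. This is a simplified, single-process illustration, not how Kafka, Flink, or Spark are actually used; the `(event_id, amount)` record shape and the function names are hypothetical.

```python
from typing import Callable, Iterable, Iterator

# Hypothetical event record: (event_id, amount)
Record = tuple[str, float]


def batch_ingest(records: Iterable[Record]) -> dict:
    """Batch: wait for the whole set of records, then process it in one pass."""
    snapshot = list(records)  # e.g. everything collected since the last scheduled run
    return {"count": len(snapshot), "total": sum(amount for _, amount in snapshot)}


def stream_ingest(
    records: Iterable[Record], on_event: Callable[[Record, float], None]
) -> Iterator[float]:
    """Streaming: handle each record as it arrives and yield a running total."""
    running_total = 0.0
    for record in records:
        running_total += record[1]
        on_event(record, running_total)  # react immediately, e.g. flag a suspicious event
        yield running_total
```

In the streaming version the callback fires once per record, so a large transaction can be flagged before the rest of the data has arrived; the batch version only sees it after the whole batch has been collected.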

Storage Solutions

The choice of storage solution significantly impacts the scalability and performance of the data stack. Different storage types are suitable for different data types and use cases. Cloud-based storage offers flexibility and elastic scalability, while on-premises solutions give organizations direct control over hardware, data location, and security configuration.

- Cloud Storage: Cloud-based storage solutions like Amazon S3, Azure Blob Storage, and Google Cloud Storage provide scalability and flexibility. They are cost-effective for large datasets and can be easily scaled up or down based on demand. They offer high availability and durability, making them suitable for critical applications.

- Relational Databases: Relational databases, such as PostgreSQL, MySQL, and Oracle, are well-suited for structured data. They provide ACID guarantees (Atomicity, Consistency, Isolation, Durability) that protect data integrity. However, they might not be optimal for handling unstructured or semi-structured data.

- NoSQL Databases: NoSQL databases, such as MongoDB (document store), Cassandra (wide-column store), and Redis (in-memory key-value store), handle semi-structured and unstructured data well. Their flexible schemas suit applications whose data structures evolve over time. Many NoSQL systems scale horizontally more easily than relational databases, often by relaxing consistency guarantees or limiting support for joins.
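To make the ACID guarantee of relational databases concrete, here is a small sketch using Python’s built-in `sqlite3` module as a stand-in relational database. The `accounts` table and the `transfer` function are hypothetical; the point is that both updates in a transaction either commit together or roll back together.

```python
import sqlite3


def make_db() -> sqlite3.Connection:
    conn = sqlite3.connect(":memory:")
    # The CHECK constraint makes overdrafts fail at the database level
    conn.execute(
        "CREATE TABLE accounts (id TEXT PRIMARY KEY, balance INTEGER NOT NULL CHECK (balance >= 0))"
    )
    conn.executemany("INSERT INTO accounts VALUES (?, ?)", [("alice", 100), ("bob", 50)])
    conn.commit()
    return conn


def transfer(conn: sqlite3.Connection, src: str, dst: str, amount: int) -> bool:
    """Move funds atomically: both updates commit together or neither does."""
    try:
        with conn:  # commits on success, rolls back if an exception is raised
            conn.execute("UPDATE accounts SET balance = balance + ? WHERE id = ?", (amount, dst))
            conn.execute("UPDATE accounts SET balance = balance - ? WHERE id = ?", (amount, src))
        return True
    except sqlite3.IntegrityError:  # raised when the CHECK constraint is violated
        return False


def balances(conn: sqlite3.Connection) -> dict:
    return dict(conn.execute("SELECT id, balance FROM accounts ORDER BY id"))
```

The credit is applied before the debit on purpose: when the debit fails, the rollback also undoes the credit that had already been written inside the transaction.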

Data Transformation and Cleaning

Data transformation and cleaning are critical steps during ingestion to ensure data quality and consistency. They prepare data for analysis by converting it from different formats, handling missing values, standardizing data types, and resolving errors and inconsistencies.

These steps are crucial for maintaining data quality and ensuring reliable analysis.
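The cleaning steps above can be sketched in plain Python. The field names (`order_id`, `email`, `amount`, `country`) and the rules (drop rows with no key, keep the first duplicate, record missing amounts as `None`) are assumptions chosen for illustration; real pipelines tune these rules to their own data.

```python
from typing import Optional


def clean_orders(rows: list[dict]) -> list[dict]:
    """Clean raw order rows: drop keyless rows, deduplicate, and standardize fields."""
    seen: set[str] = set()
    cleaned = []
    for row in rows:
        order_id = (row.get("order_id") or "").strip()
        if not order_id or order_id in seen:
            continue  # drop rows without a key and duplicates (first occurrence wins)
        seen.add(order_id)
        amount_raw = (row.get("amount") or "").strip()
        # Standardize the type; keep a missing amount as None instead of guessing a value
        amount: Optional[float] = float(amount_raw) if amount_raw else None
        cleaned.append(
            {
                "order_id": order_id,
                "email": (row.get("email") or "").strip().lower(),  # consistent case and whitespace
                "amount": amount,
                "country": (row.get("country") or "").strip().upper() or "UNKNOWN",
            }
        )
    return cleaned
```

A non-numeric amount would still raise `ValueError` here; whether to reject, quarantine, or fix such rows is a policy decision each pipeline has to make.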

Data Pipelines

Data pipelines are the backbone of a modern data stack, orchestrating the entire ingestion process. They automate the flow of data from source to storage and apply transformation and cleaning along the way, so data arrives consistent and ready for analysis. Robust data pipelines are essential for reliable data access.

- Data Pipeline Architecture: A typical data pipeline architecture includes stages for data ingestion, transformation, validation, loading, and monitoring. Each stage is responsible for a specific task, and they are often implemented using tools like Apache Airflow, Luigi, or Prefect. This structure ensures that data is processed efficiently and reliably. A robust data pipeline helps with real-time monitoring and alerting, ensuring that issues are caught quickly.
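Orchestrators such as Apache Airflow, Luigi, and Prefect model a pipeline as a directed acyclic graph (DAG) of tasks and run each task once its upstream tasks have finished. The sketch below imitates that scheduling idea with Python’s standard-library `graphlib`; it is not the API of any of those tools, and `run_pipeline` is a hypothetical helper.

```python
from graphlib import CycleError, TopologicalSorter
from typing import Callable


def run_pipeline(
    tasks: dict[str, Callable[[], None]], deps: dict[str, set[str]]
) -> list[str]:
    """Run each task after all of its upstream tasks; return the execution order.

    deps maps a task name to the set of task names that must finish first.
    """
    graph = {name: deps.get(name, set()) for name in tasks}
    try:
        order = list(TopologicalSorter(graph).static_order())
    except CycleError as exc:
        raise ValueError("pipeline has a dependency cycle") from exc
    for name in order:
        tasks[name]()  # a real orchestrator adds retries, logging, and alerting here
    return order
```

Declaring dependencies rather than a hard-coded order lets the scheduler reject cyclic definitions up front and, in real orchestrators, run independent branches in parallel.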

Simple Data Ingestion Pipeline Architecture

- Data Source: This could be a database, file system, or streaming data source.

- Ingestion Tool: This tool reads data from the source and converts it into a standardized format.

- Data Transformation: This step involves cleaning, validating, and transforming the data. Tools like Apache Spark or pandas can be used for this.

- Storage: The transformed data is stored in a designated data warehouse or data lake.

- Monitoring: Monitoring tools such as Prometheus and Grafana, or the alerting built into orchestrators such as Apache Airflow, can track pipeline status and trigger alerts.
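The five stages above can be compressed into a single function for illustration. In this sketch, CSV text stands in for the data source, Python’s `csv` module plays the ingestion tool, a list stands in for storage, and a logged warning plays the monitoring alert; the column names and the 10% reject threshold are hypothetical.

```python
import csv
import io
import logging

logger = logging.getLogger("ingestion")  # hypothetical logger name


def run_ingestion(csv_text: str, sink: list[dict], max_reject_rate: float = 0.1) -> dict:
    """One pass through source -> ingestion -> transformation -> storage -> monitoring."""
    # Ingestion tool: parse the source (CSV text here) into a standard format (dicts)
    rows = list(csv.DictReader(io.StringIO(csv_text)))

    # Transformation: cast types; rows that fail validation are counted and skipped
    good, rejected = [], 0
    for row in rows:
        try:
            good.append({"sku": row["sku"].strip(), "qty": int(row["qty"])})
        except (KeyError, TypeError, ValueError, AttributeError):
            rejected += 1

    # Storage: append to the sink, a stand-in for a warehouse or lake table
    sink.extend(good)

    # Monitoring: report metrics and raise an alert if too many rows were rejected
    metrics = {"read": len(rows), "loaded": len(good), "rejected": rejected}
    metrics["alert"] = bool(rows) and rejected / len(rows) > max_reject_rate
    if metrics["alert"]:
        logger.warning("reject rate above threshold: %s", metrics)
    return metrics
```

Returning the metrics as well as logging them makes it easy to forward the same numbers to an external monitoring system.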

Data Processing and Transformation

Data processing and transformation are crucial steps in a modern data stack. They involve taking raw data from various sources, cleaning it, converting it into a usable format, and preparing it for analysis and insights. Effective data processing ensures the quality and reliability of the data used for decision-making. The choice of tools and techniques directly impacts the efficiency and accuracy of the entire data pipeline.

Data Processing Tools and Technologies

Various tools and technologies are available for data processing and transformation, and they vary in capabilities, performance, and cost. Popular choices include distributed processing engines such as Apache Spark and Apache Hadoop, managed data integration (ELT) tools such as Fivetran and Matillion, and cloud-based data processing services. The right tool depends on factors such as data volume, the complexity of the transformations, and the required performance.

Significance of Data Transformation

Data transformation is a critical step in preparing data for analysis. Raw data often comes in disparate formats and structures, making it unsuitable for direct analysis. Transformations standardize the data, handle missing values, correct inconsistencies, and convert data into a format suitable for specific analytical tools or models. This process ensures that the data is accurate, consistent, and usable for informed decision-making.

For example, transforming customer purchase data into a unified format allows for accurate identification of trends and patterns.

Common Data Transformation Tasks and Techniques

Data transformation tasks include cleaning, structuring, enriching, and aggregating data. Data cleaning involves handling missing values, correcting errors, and removing duplicates. Structuring data involves transforming data into a consistent format, such as converting different date formats to a single format. Data enrichment involves adding relevant information from external sources to enhance the data’s completeness. Aggregation combines multiple data points into summary statistics, such as calculating total sales or average customer spending.

Techniques include scripting languages like Python with libraries such as Pandas, SQL queries, and dedicated ETL (Extract, Transform, Load) tools.
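A few of these tasks can be sketched with the Python standard library alone. The accepted date formats, the store-to-region lookup used for enrichment, and the function names are all hypothetical; in practice the same steps are often written in pandas or SQL.

```python
from collections import defaultdict
from datetime import datetime

# Assumed date formats found in the source systems
DATE_FORMATS = ("%Y-%m-%d", "%d/%m/%Y", "%Y%m%d")


def to_iso_date(value: str) -> str:
    """Structuring: normalize several date formats to ISO 8601 (YYYY-MM-DD)."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(value.strip(), fmt).date().isoformat()
        except ValueError:
            continue  # try the next known format
    raise ValueError(f"unrecognized date: {value!r}")


def enrich(sales: list[dict], region_by_store: dict[str, str]) -> list[dict]:
    """Enrichment: add a region looked up from reference data."""
    return [{**s, "region": region_by_store.get(s["store"], "unknown")} for s in sales]


def total_by_region(sales: list[dict]) -> dict[str, float]:
    """Aggregation: total sales amount per region."""
    totals: dict[str, float] = defaultdict(float)
    for s in sales:
        totals[s["region"]] += s["amount"]
    return dict(totals)
```

Note that the order of `DATE_FORMATS` matters when formats are ambiguous (for example day-first versus month-first dates), so it should reflect what each source actually sends.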

Comparison of Data Processing Engines

Different data processing engines have different performance characteristics, and data volume, processing speed, scalability, and cost all influence the choice. Apache Spark handles large datasets well because it distributes work across a cluster and keeps intermediate results in memory, which makes it fast for iterative and interactive workloads. Hadoop MapReduce also distributes work, but it writes intermediate results to disk between stages; it remains suited to very large batch jobs where latency matters less. Cloud-based services add managed scalability and flexibility, making them a good fit for variable workloads.

A detailed comparison would involve evaluating factors like processing speed, scalability, fault tolerance, and cost per operation.
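Both Hadoop MapReduce and Spark split a job into tasks that run over partitions of the data. The single-process sketch below imitates the classic map, shuffle, and reduce phases on a word count; in a real cluster each partition would be processed on a different node and the shuffle would move data over the network. It illustrates the programming model only and does not use either engine’s API.

```python
from collections import defaultdict
from itertools import chain
from typing import Iterable, Iterator


def map_phase(partition: Iterable[str]) -> Iterator[tuple[str, int]]:
    """Map: emit (key, value) pairs for one partition of the input."""
    for line in partition:
        for word in line.lower().split():
            yield word, 1


def shuffle(pairs: Iterable[tuple[str, int]]) -> dict[str, list[int]]:
    """Shuffle: group all values by key (a network transfer in a real cluster)."""
    groups: dict[str, list[int]] = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(groups: dict[str, list[int]]) -> dict[str, int]:
    """Reduce: combine the values collected for each key."""
    return {key: sum(values) for key, values in groups.items()}


def word_count(partitions: list[list[str]]) -> dict[str, int]:
    mapped = chain.from_iterable(map_phase(p) for p in partitions)
    return reduce_phase(shuffle(mapped))
```

Because each partition is mapped independently, adding nodes lets more partitions be processed at once, which is where both engines get their scalability.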

Data Processing Steps in a Modern Data Stack

The data processing steps in a modern data stack begin with data ingestion from various sources. Next, the data is transformed using tools like Apache Spark or cloud-based services, which involves cleaning, structuring, and enriching it. The transformed data is then loaded into a data warehouse or a data lake for storage and analysis.

Finally, the data is queried and analyzed using business intelligence tools. Throughout this sequence, data transformation is the step that connects the raw sources to the storage and analysis stages of the pipeline.
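The load and query steps can be sketched with Python’s built-in `sqlite3` module standing in for a cloud data warehouse. The `sales` table and its columns are hypothetical; the query is the kind of aggregate a BI tool might issue behind a dashboard.

```python
import sqlite3


def load_and_query(rows: list[dict]) -> list[tuple]:
    """Load transformed rows into a stand-in warehouse table and run a BI-style query."""
    conn = sqlite3.connect(":memory:")  # stand-in for a warehouse such as BigQuery or Redshift
    conn.execute("CREATE TABLE sales (region TEXT, category TEXT, amount REAL)")
    with conn:  # load step: insert all rows in a single transaction
        conn.executemany("INSERT INTO sales VALUES (:region, :category, :amount)", rows)
    # Query step: total sales per region, largest first
    result = conn.execute(
        "SELECT region, SUM(amount) AS total FROM sales GROUP BY region ORDER BY total DESC"
    ).fetchall()
    conn.close()
    return result
```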

Data Warehousing and Lakes

A crucial component of any modern data stack is the method used to store and manage processed data. Data warehousing and data lakes represent two distinct approaches, each with its own strengths and weaknesses, and understanding these differences is vital for making informed decisions about data storage and subsequent analysis.

Data warehouses are structured, relational systems designed for analytical queries, while data lakes are large repositories that store raw data, whether structured, semi-structured, or unstructured, in its native format. The choice between them depends on the specific needs and priorities of the organization.

Differences Between Data Warehouses and Data Lakes

Data warehouses are meticulously organized, structured databases optimized for querying and analysis. Data lakes, on the other hand, are essentially large storage repositories capable of holding vast amounts of raw data in various formats. This difference in structure and design fundamentally impacts how data is accessed and utilized.
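One way to see the difference is schema-on-write versus schema-on-read. In the sketch below, a hypothetical warehouse table validates each record against a fixed schema before storing it, while a lake stores every record as a raw JSON line and only interprets it when it is read. Plain Python lists stand in for both stores, and the schema and field names are assumptions.

```python
import json

# Hypothetical fixed warehouse schema: column name -> required Python type
WAREHOUSE_SCHEMA = {"order_id": str, "amount": float}


def warehouse_insert(table: list[dict], record: dict) -> None:
    """Schema-on-write: reject records that do not match the schema before storing."""
    if set(record) != set(WAREHOUSE_SCHEMA):
        raise ValueError(f"columns {sorted(record)} do not match schema")
    for column, expected in WAREHOUSE_SCHEMA.items():
        if not isinstance(record[column], expected):
            raise TypeError(f"{column} must be {expected.__name__}")
    table.append(record)


def lake_write(lake: list[str], record: dict) -> None:
    """Lake: store the raw record as-is (one JSON line); no schema is enforced."""
    lake.append(json.dumps(record))


def lake_read_amounts(lake: list[str]) -> list[float]:
    """Schema-on-read: interpret raw records at query time, skipping those without an amount."""
    amounts = []
    for line in lake:
        record = json.loads(line)
        if isinstance(record.get("amount"), (int, float)):
            amounts.append(float(record["amount"]))
    return amounts
```

The warehouse rejects the unexpected `coupon` field up front, which keeps queries simple; the lake accepts everything, which preserves the data but pushes interpretation work onto each reader.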

Benefits of Data Warehouses

Data warehouses excel at providing a structured environment for analytical queries. This structure allows for fast, efficient query performance, enabling business users to extract insights quickly. Data quality is often higher due to rigorous data cleansing and transformation processes. The predefined schemas and controlled data format reduce the complexity of analysis and reporting. Complex joins and aggregations are also much easier to perform in a well-structured data warehouse.

Drawbacks of Data Warehouses

The rigid structure of a data warehouse can hinder flexibility. Adding new data types or changing schemas can be complex and time-consuming. The initial data loading and transformation process can be lengthy and expensive, requiring significant upfront investment. Because data must fit a predefined schema, fields that do not match the model may be dropped or reshaped, losing detail that later analyses might need.

Benefits of Data Lakes

Data lakes offer considerable flexibility. Because data is stored in its native format, a lake can hold structured, semi-structured, and unstructured data side by side, and organizations can accommodate evolving data needs without significant restructuring. Data lakes are typically cost-effective, since they usually run on inexpensive object storage and do not require extensive upfront investment in data transformation. Keeping raw data in its original format also preserves it for future exploration and analysis.

Drawbacks of Data Lakes

The lack of structure in data lakes can make querying and analysis more challenging. Extracting meaningful insights from raw data requires sophisticated data processing and transformation techniques. Data quality can be a concern without robust data governance processes. Query performance can be slower compared to data warehouses, especially when dealing with large volumes of data.

Real-World Implementations

Companies across industries have successfully implemented data warehouses and data lakes. For instance, retail giants use data warehouses to analyze sales trends and customer behavior for targeted marketing campaigns. Similarly, financial institutions use data lakes to store and analyze vast amounts of transactional data to identify fraud and improve risk management.

Use Cases for Data Warehouses

Data warehouses are ideal for reporting, dashboards, and ad-hoc queries. Key use cases include business intelligence (BI) applications, financial reporting, and operational analytics. A warehouse is the better fit when the data structure is well understood and analytical needs are defined in advance.

Use Cases for Data Lakes

Data lakes are suitable for exploratory data analysis, machine learning, and advanced analytics. When raw data needs to be stored and explored without predefining the specific analysis, data lakes are usually the better fit. Scientific research, fraud detection, and personalization are other areas where data lakes are highly valuable.

Choosing Between a Data Warehouse and a Data Lake

The decision between a data warehouse and a data lake hinges on several factors, including data volume, data variety, analytical needs, and budget constraints. Organizations that require rapid reporting and predefined analyses will likely find a data warehouse more suitable. Conversely, companies prioritizing flexibility, experimentation, and the potential for future, as-yet-unknown analyses will favor a data lake. Many organizations combine both, keeping raw data in a lake and loading curated, modeled subsets into a warehouse for reporting.

Data Modeling and Architecture

A robust modern data stack relies heavily on well-defined data models and architectures. Effective data modeling ensures data integrity, consistency, and usability, while a sound architecture dictates how data is stored, accessed, and processed, enabling efficient and scalable data operations. Together they are critical for extracting meaningful insights and driving informed decision-making. Data modeling in a modern data stack is more than creating tables: it means understanding the relationships between data entities and defining how they interact.

A well-structured model supports current needs and anticipates future requirements, so the data stack stays flexible and can scale as the business evolves.

Importance of Data Modeling

Data modeling is crucial for defining the structure and relationships of data within a data stack. It provides a blueprint for how data will be organized, stored, and accessed, ultimately impacting the quality, usability, and maintainability of the entire system. A well-defined model ensures data integrity and consistency, facilitating easier data analysis and reporting. This also reduces errors and improves the overall efficiency of data-driven processes.

Data Modeling Techniques

Various data modeling techniques exist, each with its strengths and weaknesses. The choice depends on the specific needs of the data stack and the nature of the data being modeled.

- Entity-Relationship (ER) Modeling: This technique uses diagrams to represent entities, their attributes, and the relationships between them. It is widely used for conceptual modeling, especially for relational databases. ER diagrams give a clear, high-level view of the data structure and help stakeholders communicate about it.

- Dimensional Modeling: Specifically designed for analytical purposes, dimensional models organize data into dimensions (e.g., time, product, customer) and facts (e.g., sales figures). This structure optimizes query performance for reporting and analysis. It is particularly effective when dealing with large datasets and complex analytical queries, often found in business intelligence and data warehousing environments.

- Graph Modeling: For data with complex relationships, graph models stored in graph databases are a strong fit. They represent data as nodes (entities) and edges (relationships), which makes traversing and retrieving connected data efficient. This is particularly useful in social networks, recommendation systems, and fraud detection applications.
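Dimensional models are commonly implemented as a star schema: a central fact table of measurements that references surrounding dimension tables. The sketch below builds a tiny one in an in-memory SQLite database; the table names, columns, and sample rows are made up for illustration.

```python
import sqlite3


def build_star_schema() -> sqlite3.Connection:
    conn = sqlite3.connect(":memory:")
    conn.executescript("""
        -- Dimension tables: descriptive context
        CREATE TABLE dim_product (product_key INTEGER PRIMARY KEY, name TEXT, category TEXT);
        CREATE TABLE dim_date (date_key INTEGER PRIMARY KEY, year INTEGER, month INTEGER);
        -- Fact table: one row per sale, with foreign keys to the dimensions
        CREATE TABLE fact_sales (
            date_key INTEGER REFERENCES dim_date(date_key),
            product_key INTEGER REFERENCES dim_product(product_key),
            quantity INTEGER,
            revenue REAL
        );
        INSERT INTO dim_product VALUES (1, 'Widget', 'Hardware'), (2, 'Manual', 'Books');
        INSERT INTO dim_date VALUES (20240105, 2024, 1), (20240210, 2024, 2);
        INSERT INTO fact_sales VALUES
            (20240105, 1, 3, 30.0), (20240105, 2, 1, 15.0), (20240210, 1, 2, 20.0);
    """)
    return conn


def revenue_by_category_and_month(conn: sqlite3.Connection) -> list[tuple]:
    # Typical star-schema query: join the fact table to its dimensions, then aggregate
    return conn.execute("""
        SELECT p.category, d.month, SUM(f.revenue)
        FROM fact_sales f
        JOIN dim_product p ON p.product_key = f.product_key
        JOIN dim_date d ON d.date_key = f.date_key
        GROUP BY p.category, d.month
        ORDER BY p.category, d.month
    """).fetchall()
```

Every analytical question follows the same shape (join facts to a few dimensions, group, aggregate), which is what makes dimensional models predictable for BI tools to query.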

Conceptual Data Model for E-commerce

Let’s consider a hypothetical e-commerce company. A conceptual data model might include entities like “Customers,” “Products,” “Orders,” “OrderItems,” and “Payments.”

| Entity | Attributes |
|---|---|
| Customers | CustomerID, Name, Email, Address, PaymentInfo |
| Products | ProductID, Name, Description, Price, Category |
| Orders | OrderID, CustomerID, OrderDate, TotalAmount |
| OrderItems | OrderID, ProductID, Quantity |
| Payments | PaymentID, OrderID, PaymentMethod, Amount, TransactionDate |

The model captures the relationships between entities: a customer can place multiple orders, and each order can contain multiple products through its order items.
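Translated into relational tables, the conceptual model might look like the SQLite sketch below. Because an order can contain multiple products, the sketch resolves that many-to-many relationship with a line-item table (`order_items`, a name chosen here for illustration); the column types and constraints are likewise assumptions.

```python
import sqlite3

SCHEMA = """
CREATE TABLE customers (customer_id INTEGER PRIMARY KEY, name TEXT NOT NULL, email TEXT UNIQUE);
CREATE TABLE products (product_id INTEGER PRIMARY KEY, name TEXT NOT NULL, price REAL NOT NULL);
CREATE TABLE orders (
    order_id INTEGER PRIMARY KEY,
    customer_id INTEGER NOT NULL REFERENCES customers(customer_id),
    order_date TEXT NOT NULL
);
-- Line-item table: one row per (order, product) pair
CREATE TABLE order_items (
    order_id INTEGER NOT NULL REFERENCES orders(order_id),
    product_id INTEGER NOT NULL REFERENCES products(product_id),
    quantity INTEGER NOT NULL CHECK (quantity > 0),
    PRIMARY KEY (order_id, product_id)
);
CREATE TABLE payments (
    payment_id INTEGER PRIMARY KEY,
    order_id INTEGER NOT NULL REFERENCES orders(order_id),
    method TEXT NOT NULL,
    amount REAL NOT NULL
);
"""


def create_store() -> sqlite3.Connection:
    conn = sqlite3.connect(":memory:")
    conn.execute("PRAGMA foreign_keys = ON")  # SQLite enforces foreign keys only when enabled
    conn.executescript(SCHEMA)
    return conn


def order_total(conn: sqlite3.Connection, order_id: int) -> float:
    """Compute an order's total from its line items and current product prices."""
    row = conn.execute(
        """SELECT COALESCE(SUM(oi.quantity * p.price), 0)
           FROM order_items oi JOIN products p ON p.product_id = oi.product_id
           WHERE oi.order_id = ?""",
        (order_id,),
    ).fetchone()
    return row[0]
```

Whether to also store a total on each order (for example, to freeze the price paid at checkout) is a design choice; this sketch derives it instead.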

Data Modeling Challenges

Effective data modeling isn’t without its challenges. Maintaining consistency and accuracy across the entire data stack requires meticulous planning and execution.

- Data Volume and Velocity: Managing the sheer volume and velocity of data in modern applications can create complexities in modeling and maintenance. Scalability and efficiency are paramount for large-scale applications.

- Data Silos: In large organizations, data is often scattered across different systems and departments, making it difficult to establish a unified model. Integrating these sources and agreeing on shared definitions is necessary to keep data consistent across the organization.

- Data Schema Evolution: Data models need to adapt to changing business requirements and new data sources. This necessitates ongoing maintenance and updates to the model, ensuring it remains relevant and efficient.

Impact of Data Architecture on Scalability and Performance

Data architecture significantly influences scalability and performance. A well-designed architecture anticipates future growth and allows for efficient data access and processing.

- Scalability: A flexible architecture that supports horizontal scaling (adding more machines or nodes rather than upgrading a single server) is crucial for handling growing data volumes. The design should accommodate future data growth and evolving needs.

- Performance: Query performance depends on proper indexing and data partitioning strategies, as well as on the choice of storage mechanisms and processing technologies, all of which directly affect query speed and responsiveness.
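Partitioning improves performance because a query that filters on the partition key can skip irrelevant partitions entirely (partition pruning). The sketch below partitions hypothetical sales rows by month and answers a monthly query by reading only one partition; real warehouses and lakes apply the same idea to files or storage blocks, and the field names here are assumptions.

```python
from collections import defaultdict


def partition_by_month(rows: list[dict]) -> dict[str, list[dict]]:
    """Group rows by month (YYYY-MM), the way data is often laid out by date."""
    partitions: dict[str, list[dict]] = defaultdict(list)
    for row in rows:
        partitions[row["date"][:7]].append(row)  # "2024-01-03" -> "2024-01"
    return dict(partitions)


def total_for_month(partitions: dict[str, list[dict]], month: str) -> tuple:
    """Partition pruning: scan only the partition the filter needs.

    Returns (total amount, number of rows scanned).
    """
    scanned = partitions.get(month, [])
    return sum(r["amount"] for r in scanned), len(scanned)
```

The rows-scanned count is the point: a monthly query touches only that month’s rows, no matter how many months of history the table holds.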

Data Visualization and Analytics

A modern data stack is incomplete without robust data visualization and analytics capabilities. Effective visualization tools transform raw data into actionable insights, enabling data-driven decision-making across organizations and helping stakeholders understand complex patterns, trends, and anomalies. Data visualization translates complex datasets into easily digestible formats, allowing users to identify patterns, outliers, and correlations that might otherwise be missed.

This process fosters a deeper understanding of the data, leading to more informed decisions. Data visualization tools are instrumental in the entire data analysis lifecycle, from exploration and discovery to reporting and communication.

Role of Data Visualization Tools

Data visualization tools play a pivotal role in a modern data stack. They act as a bridge between raw data and actionable insights. These tools empower users to explore data interactively, identify trends, and communicate findings effectively. Through interactive visualizations, stakeholders can gain a comprehensive understanding of the data, enabling them to make informed decisions and drive business strategies.

Different Types of Data Visualizations

Various visualization types suit different data characteristics and use cases. Line graphs show trends over time, and bar charts compare values across categories. Scatter plots reveal relationships between variables. Histograms illustrate the distribution of a variable. Maps display geographic data.

Choosing the appropriate visualization type ensures accurate representation and optimal communication of insights.

Interactive Dashboards and Reporting Tools

Interactive dashboards and reporting tools provide dynamic visualizations that allow users to drill down into data, explore different perspectives, and uncover hidden patterns. These tools typically feature customizable visualizations, allowing users to tailor the presentation to their specific needs. Examples include Tableau, Power BI, and Qlik Sense, which empower users to create interactive visualizations that dynamically respond to user input.

Example of an Interactive Dashboard

Consider a retail company wanting to understand sales performance across different regions. An interactive dashboard could display sales figures for each region on a map. Users could click on a specific region to drill down into detailed sales data by product category, customer segment, or specific time periods. Interactive filters and controls allow users to isolate and analyze various factors affecting sales.

Color-coded maps and charts, along with real-time data updates, make the dashboard visually engaging and provide a clear overview of the current state of sales performance.
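Under the hood, a drill-down is an aggregation re-run with an extra filter. The sketch below mimics clicking a region on the map and then breaking its sales down by product category; the row fields (`region`, `category`, `sales`) and the `aggregate` helper are hypothetical, and tools like Tableau or Power BI would generate equivalent queries against the warehouse rather than run Python.

```python
from collections import defaultdict


def aggregate(rows: list[dict], group_by: str, **filters: str) -> dict[str, float]:
    """Sum sales by one dimension after applying equality filters (click-to-filter)."""
    totals: dict[str, float] = defaultdict(float)
    for row in rows:
        if all(row.get(key) == value for key, value in filters.items()):
            totals[row[group_by]] += row["sales"]
    return dict(totals)
```

Calling `aggregate(rows, "region")` produces the map-level view, and `aggregate(rows, "category", region="West")` produces the drill-down after a user clicks the West region.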

Data Storytelling in Analytics

Data storytelling is a powerful technique that uses data visualizations to convey a narrative, making insights more impactful and memorable. A compelling story crafted from data insights helps stakeholders understand the “why” behind the data, fostering a deeper understanding of the business implications and promoting better decision-making. The goal is to present data in a context that is relatable and meaningful, rather than just presenting numbers.

Sample Dashboard Design (Retail Sales)

| Visual Element | Description | Purpose |
|---|---|---|
| Map | Geographic representation of sales performance across regions | Visualize regional sales trends |
| Bar Charts | Comparative representation of sales by product category in each region | Highlight top-performing product categories |
| Line Graphs | Trend analysis of sales figures over time for a specific region | Identify seasonal trends and growth patterns |
| Interactive Filters | Enable users to isolate data based on region, product, or time | Provide granular analysis and customization options |

This sample dashboard design illustrates how different visualization types can be combined to create a comprehensive overview of retail sales performance. Interactive elements empower users to explore data from multiple perspectives and uncover valuable insights. The design is built around user-friendliness and intuitive exploration.

Data Security and Governance

A robust modern data stack hinges on the secure and responsible handling of data. Data security is paramount to maintaining trust, complying with regulations, and preventing breaches that can have severe financial and reputational consequences. Effective data governance policies and procedures ensure that data is used ethically and legally while also maximizing its value. Data security and governance are no longer optional add-ons; they are integral components of any modern data stack.

Comprehensive strategies must address the entire lifecycle of data, from ingestion and storage to processing, analysis, and eventual disposal. This includes implementing stringent access controls, robust encryption mechanisms, and regular security audits to safeguard sensitive information.

Critical Role of Security

Data breaches can result in significant financial losses, reputational damage, and legal repercussions. Protecting sensitive data is critical for maintaining trust with customers, partners, and stakeholders. A robust security posture reduces the risk of unauthorized access, data loss, and misuse. This involves implementing layered security measures across the entire data stack, from physical security of servers to sophisticated encryption techniques.

Data Governance Policies and Procedures

Data governance policies and procedures define how data is managed, accessed, and used. These policies establish clear roles and responsibilities, outlining who has access to specific data and under what conditions. They also define data quality standards, ensuring accuracy and consistency across the organization. Comprehensive data governance ensures compliance with regulations like GDPR and CCPA, reducing the risk of legal issues and reputational damage.

Security Measures for Protecting Data

Protecting data necessitates a multi-layered approach. This includes strong encryption of sensitive data both in transit and at rest. Access controls limit who can access specific data, using principles like least privilege. Regular security audits and penetration testing help identify and mitigate vulnerabilities. Employing intrusion detection systems and firewalls helps to prevent unauthorized access.

Importance of Data Access Controls

Data access controls are fundamental to security. They define who can access what data and under what circumstances. This is based on the principle of least privilege, granting only the necessary access to perform a task. Robust access controls reduce the risk of unauthorized data modification or disclosure, protecting sensitive information. They also aid in compliance by demonstrating adherence to data protection regulations.
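A minimal sketch of role-based access control with deny-by-default semantics shows how least privilege and audit trails fit together. The roles, dataset names, and permission table are hypothetical; production systems would enforce this in the warehouse, the identity provider, or a policy engine rather than in application code.

```python
from dataclasses import dataclass, field

# Hypothetical role -> (dataset, action) grants; each role gets only what its tasks need
ROLE_PERMISSIONS: dict[str, set[tuple[str, str]]] = {
    "analyst": {("sales", "read")},
    "data_engineer": {("sales", "read"), ("sales", "write"), ("staging", "write")},
    "finance_auditor": {("sales", "read"), ("payments", "read")},
}

# Every decision is recorded: (user, dataset, action, allowed)
AUDIT_LOG: list[tuple[str, str, str, bool]] = []


@dataclass
class User:
    name: str
    roles: set[str] = field(default_factory=set)


def check_access(user: User, dataset: str, action: str) -> bool:
    """Deny by default; allow only if one of the user's roles grants (dataset, action)."""
    allowed = any(
        (dataset, action) in ROLE_PERMISSIONS.get(role, set()) for role in user.roles
    )
    AUDIT_LOG.append((user.name, dataset, action, allowed))  # evidence for compliance reviews
    return allowed
```

Granting permissions to roles rather than individuals keeps least privilege manageable, and logging denied requests as well as allowed ones gives auditors a complete picture.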

Best Practices for Data Security and Governance

Best practices in data security and governance start with a clear data security policy. Regular security awareness training for all employees is crucial to prevent phishing and social engineering attacks. Automated security monitoring and alerting help teams detect and respond to potential threats quickly. Regular data backups and recovery plans are essential to mitigate the impact of data loss.

Finally, ongoing security assessments and vulnerability management are critical to maintain a proactive security posture.

Cloud-Based Data Stack

Cloud-based data stacks offer significant advantages over on-premises solutions, including scalability, flexibility, and cost-effectiveness. Cloud computing allows businesses to adapt to changing data demands and focus on strategic initiatives rather than infrastructure management. This approach enables faster deployment, easier management, and reduced capital expenditure. Cloud platforms provide a wide range of services for every stage of the data lifecycle, from ingestion and storage to processing, analysis, and visualization.

These services are often integrated and optimized for seamless data flow, facilitating efficient and cost-effective data management.

Benefits of Cloud-Based Data Stacks

Cloud-based data stacks offer numerous advantages over traditional on-premises solutions. These include elasticity and scalability, enabling businesses to easily adjust resources to meet fluctuating demands. Reduced capital expenditure is another significant benefit, as businesses avoid the upfront costs of purchasing and maintaining hardware. Furthermore, cloud platforms provide access to advanced analytics tools and services, enabling deeper insights into data.

Finally, enhanced security features and improved disaster recovery capabilities contribute to overall data protection and business continuity.

Different Cloud Platforms and Their Offerings

Several prominent cloud providers offer comprehensive data stack solutions. Amazon Web Services (AWS) provides a wide range of services, including Amazon S3 for object storage, Amazon Redshift for data warehousing, and Amazon EMR for data processing. Microsoft Azure offers Azure Blob Storage for data storage, Azure Synapse Analytics for data warehousing, and Azure Databricks for data processing. Google Cloud Platform (GCP) provides Google Cloud Storage for object storage, BigQuery for data warehousing, and Dataproc for data processing.

These platforms offer various services tailored to different data stack components.

Comparison of Cloud-Based Data Solutions

Comparing cloud-based data solutions requires evaluating factors such as pricing models, scalability, security features, and available tools. AWS is often chosen for its broad ecosystem and mature services, while Azure’s strength lies in its integration with existing Microsoft technologies. GCP often attracts users who prioritize its managed analytics and machine learning services. Each platform has its own strengths and weaknesses, so the right choice depends on specific business needs and requirements. The table below summarizes key offerings of the leading cloud providers:

| Feature | AWS | Azure | GCP |
|---|---|---|---|
| Storage | S3, Glacier | Blob Storage, Azure Files | Cloud Storage, Cloud SQL |
| Processing | EMR, Glue | Databricks, HDInsight | Dataproc, BigQuery |
| Analytics | Redshift, Athena | Synapse Analytics, Azure SQL | BigQuery, Looker |
| Security | IAM, KMS | Microsoft Entra ID (formerly Azure Active Directory), Azure Key Vault | IAM, Cloud KMS |

Migrating Data to a Cloud-Based Environment

Migrating data to a cloud-based environment requires careful planning and execution. A phased approach, starting with pilot projects, can minimize disruption and surface potential challenges early. Data validation and transformation are crucial steps to ensure data integrity and consistency in the new environment. Data migration tools and specialized expertise can streamline the process, making transfers more efficient and less error-prone.
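Validation after a migration often starts with reconciliation: confirming that the source and target hold the same number of rows and the same row contents. The sketch below is one way to do that, assuming both sides fit in memory and have already been normalized to the same Python types (for example, `Decimal` values converted to `float` on both sides); `row_fingerprint` and `reconcile` are hypothetical helper names.

```python
import hashlib
from collections import Counter
from typing import Iterable, Sequence


def row_fingerprint(row: Sequence[object]) -> str:
    """Hash one row. Assumes values were normalized to the same Python types on both sides."""
    return hashlib.sha256(repr(tuple(row)).encode("utf-8")).hexdigest()


def reconcile(source: Iterable[Sequence[object]], target: Iterable[Sequence[object]]) -> dict[str, int]:
    """Compare row counts and row contents; Counter keeps duplicate rows distinct."""
    src = Counter(row_fingerprint(r) for r in source)
    tgt = Counter(row_fingerprint(r) for r in target)
    return {
        "source_rows": sum(src.values()),
        "target_rows": sum(tgt.values()),
        # Counter subtraction keeps only positive counts.
        "missing_in_target": sum((src - tgt).values()),
        "unexpected_in_target": sum((tgt - src).values()),
    }


source = [(1, "alice", 10.5), (2, "bob", 20.0)]
target = [(1, "alice", 10.5)]
print(reconcile(source, target))
# {'source_rows': 2, 'target_rows': 1, 'missing_in_target': 1, 'unexpected_in_target': 0}
```

Because each row is hashed independently, the result does not depend on row order. For tables too large to pull into memory, the same idea can be pushed down into the databases as per-partition counts and checksums.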

Cost Considerations of a Cloud-Based Data Stack

Cost optimization is a critical aspect of cloud-based data stack implementation. Understanding the various pricing models, including pay-as-you-go and reserved instances, is crucial for effective cost management. Optimizing resource utilization, implementing automated scaling strategies, and carefully evaluating service tiers can significantly reduce cloud costs. Proper data governance and efficient data storage practices are essential for controlling data costs.
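The choice between pay-as-you-go and reserved pricing largely comes down to utilization. The sketch below uses made-up rates and a simplified model (a single effective hourly rate for the reservation, ignoring upfront payments and term length) to find the break-even point. The function names and prices are hypothetical.

```python
HOURS_PER_MONTH = 730  # common approximation: 8,760 hours per year / 12


def on_demand_monthly(hourly_rate: float, utilization: float) -> float:
    """Pay-as-you-go: billed only for the fraction of hours the resource actually runs."""
    return hourly_rate * HOURS_PER_MONTH * utilization


def reserved_monthly(effective_hourly_rate: float) -> float:
    """Reserved capacity: billed for every hour of the term, used or not."""
    return effective_hourly_rate * HOURS_PER_MONTH


def break_even_utilization(on_demand_rate: float, reserved_rate: float) -> float:
    """Utilization above which the reservation becomes the cheaper option."""
    return reserved_rate / on_demand_rate


# Hypothetical rates: $0.10/hour on demand vs. an effective $0.06/hour reserved.
for utilization in (0.4, 0.9):
    print(utilization, round(on_demand_monthly(0.10, utilization), 2), round(reserved_monthly(0.06), 2))
print(round(break_even_utilization(0.10, 0.06), 2))
```

With these hypothetical rates, pay-as-you-go costs about $29 per month at 40% utilization against about $44 for the reservation, but about $66 at 90% utilization; the reservation pays off above 60% utilization (0.06 / 0.10).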

Open-Source Tools and Technologies

Open-source tools and technologies play a crucial role in modern data stacks, offering advantages in cost-effectiveness, flexibility, and community support. Because they carry no licensing fees, organizations can build robust, scalable data solutions without a large upfront investment, and their adaptability allows solutions to be tailored to specific business needs. Popular open-source tools cover a diverse range of functions, from data ingestion and processing to visualization and analytics.

Their widespread adoption fosters a vibrant ecosystem where developers can leverage a wealth of resources and collaborate on improving existing tools or creating new ones. This collective effort ultimately benefits the entire data community.

Popular Open-Source Tools

Open-source tools are extensively used across various stages of the data pipeline, from collecting data to generating insights. These tools are often chosen for their cost-effectiveness and the ability to adapt to specific business needs.

- Apache Hadoop: A framework for storing and processing large datasets across clusters of commodity hardware, built around the HDFS distributed file system, the YARN resource manager, and the MapReduce programming model. It offers fault tolerance and horizontal scalability, which makes it suitable for organizations that must store and analyze very large data volumes.

- Apache Spark: A fast, general-purpose cluster computing engine. By keeping intermediate data in memory instead of writing it to disk between steps, as Hadoop MapReduce does, Spark can process large datasets considerably faster, especially for iterative workloads such as machine learning and for interactive analysis.

- Apache Kafka: A distributed event streaming platform for handling real-time data streams. Its high throughput and low latency make it well suited to applications that process data as it arrives, such as fraud detection or stock market analysis.

- PostgreSQL: A powerful open-source relational database management system (RDBMS) with ACID transactions, a rich SQL dialect, and extensibility through features such as JSONB columns and extensions. Its reliability makes it a popular choice for storing and managing structured data.

- MySQL: Another widely used open-source RDBMS. Its ease of use, broad hosting support, and large community make it a popular choice for many applications, particularly web applications and small to medium-sized businesses.
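Hadoop's MapReduce model splits work into a map step, a shuffle that groups intermediate results by key, and a reduce step. The single-process Python sketch below simulates those phases for a word count; it only illustrates the model and does not use any Hadoop or Spark API. On a real cluster each phase runs in parallel across nodes, and Hadoop MapReduce writes intermediate results to disk, which is the overhead Spark reduces by keeping data in memory.

```python
from collections import defaultdict
from itertools import chain
from typing import Iterable, Iterator


def map_phase(line: str) -> Iterator[tuple[str, int]]:
    """Mapper: emit (word, 1) for each word in one input record."""
    for word in line.lower().split():
        yield word, 1


def shuffle_phase(pairs: Iterable[tuple[str, int]]) -> dict[str, list[int]]:
    """Shuffle: group values by key (on a cluster this moves data between nodes)."""
    groups: dict[str, list[int]] = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(groups: dict[str, list[int]]) -> dict[str, int]:
    """Reducer: combine all values that share a key."""
    return {key: sum(values) for key, values in groups.items()}


def word_count(lines: Iterable[str]) -> dict[str, int]:
    mapped = chain.from_iterable(map_phase(line) for line in lines)
    return reduce_phase(shuffle_phase(mapped))


print(word_count(["Spark and Hadoop", "Hadoop stores data"]))
# {'spark': 1, 'and': 1, 'hadoop': 2, 'stores': 1, 'data': 1}
```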

Comparing Open-Source Options

A comparison of open-source tools highlights their distinct strengths and weaknesses.

| Tool | Strengths | Weaknesses |
|---|---|---|
| Apache Hadoop | Scalability, fault tolerance, cost-effective storage and batch processing of massive datasets | Slower than Spark for iterative and interactive workloads; complex setup |
| Apache Spark | Fast in-memory processing; versatile (batch, streaming, SQL, machine learning) | Memory-intensive; tuning and operating clusters can be complex |
| Apache Kafka | High throughput, low latency, real-time data processing | Requires specialized expertise for setup and management |
| PostgreSQL | Robust features, extensibility, reliability | Can be more complex to manage than simpler database systems |
| MySQL | Ease of use, extensive community support, wide availability | Fewer advanced data types and index types than PostgreSQL |

Benefits of Open-Source Tools

Open-source tools offer several key benefits for organizations.

- Cost-Effectiveness: Open-source tools typically carry no licensing fees, which lowers upfront costs; hosting, operations, and skilled staff still add to the total cost of ownership.

- Flexibility: Open-source tools are adaptable and can be customized to meet specific business needs. This customization capability allows organizations to tailor their data stack to specific use cases.

- Community Support: A vast and active community provides readily available support and resources, reducing the time required to resolve issues.

Importance of Community Support

Strong community support is crucial for the success of open-source tools.

Active communities provide a wealth of knowledge, resources, and troubleshooting assistance, enabling developers to effectively use the tools and overcome challenges. This collaborative environment is essential for the continued development and improvement of open-source projects.

Data Visualization Tools

Open-source and free tools are also widely used for data visualization, helping users gain insights from data.

- Tableau Public: A free (but proprietary, not open-source) edition of Tableau for creating interactive dashboards and reports; work created with it is published publicly.

- Plotly: An open-source graphing library for creating interactive visualizations, including plots, maps, and dashboards.

- D3.js: An open-source JavaScript library for building dynamic, highly customized visualizations driven by data.

Data Quality and Maintenance

Maintaining high-quality data is paramount in a modern data stack. Poor data quality can lead to inaccurate insights, flawed decisions, and ultimately, wasted resources. Ensuring data accuracy, consistency, and completeness throughout the data lifecycle is crucial for deriving meaningful value from the vast amounts of data collected and processed. A robust data quality program is essential for the long-term success of any data-driven organization.

Importance of Data Quality

Data quality is critical for the reliability and trustworthiness of insights derived from the data stack. Accurate and consistent data enables informed decision-making, improved operational efficiency, and enhanced customer experiences. Poor data quality can lead to incorrect predictions, misleading analyses, and ultimately, a loss of confidence in the entire system.

Monitoring and Maintaining Data Quality

Monitoring and maintaining data quality is an ongoing process, not a one-time event. It requires a strategy that covers every stage of the data lifecycle, combining proactive measures that prevent quality issues from arising with reactive steps that address problems when they occur.

Data Quality Tools and Techniques

Several tools and techniques help ensure and maintain data quality. Data profiling tools identify patterns and anomalies in the data, data cleansing tools correct errors and inconsistencies, and data validation rules enforce data integrity. Data lineage tracking and version control also help teams understand the data’s history and maintain a clear audit trail.
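As an illustration of data profiling, the sketch below computes a few per-column statistics over records held as Python dictionaries. The `profile` function, and the choice to treat `None` and empty strings as missing, are assumptions for the example; dedicated profiling tools compute many more statistics.

```python
from typing import Any


def profile(records: list[dict[str, Any]]) -> dict[str, dict[str, Any]]:
    """Per-column profile: empty count, distinct non-empty values, and min/max.

    Treats None and "" as empty; assumes values are hashable scalars.
    """
    columns = sorted({col for rec in records for col in rec})
    report: dict[str, dict[str, Any]] = {}
    for col in columns:
        values = [rec.get(col) for rec in records]
        present = [v for v in values if v is not None and v != ""]
        stats: dict[str, Any] = {
            "empty": len(values) - len(present),
            "distinct": len(set(present)),
        }
        try:
            stats["min"], stats["max"] = min(present), max(present)
        except (TypeError, ValueError):
            # TypeError: mixed, non-comparable types; ValueError: no non-empty values.
            stats["min"] = stats["max"] = None
        report[col] = stats
    return report


rows = [
    {"id": 1, "country": "US", "age": 34},
    {"id": 2, "country": "", "age": 29},
    {"id": 3, "country": "us", "age": None},
]
for column, stats in profile(rows).items():
    print(column, stats)
```

In the sample, the `country` column reports two distinct values, `"US"` and `"us"`, plus one empty value: exactly the kind of casing inconsistency and gap that a cleansing step would then standardize.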

Data Quality Metrics

Defining and tracking relevant data quality metrics is essential for measuring progress and identifying areas needing improvement. Common metrics include data accuracy, completeness, consistency, timeliness, and validity. For example, a high percentage of missing values might indicate a data completeness issue. Similarly, discrepancies in the same data field across multiple records suggest potential consistency problems.
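The completeness and consistency metrics described above can be computed directly. In this sketch, completeness is the fraction of records with a non-empty value, and consistency is checked by grouping records on a key and flagging keys that carry conflicting values. The field names and helper functions are hypothetical.

```python
from collections import defaultdict
from typing import Any


def completeness(records: list[dict[str, Any]], field: str) -> float:
    """Fraction of records where `field` is present and non-empty (1.0 = fully complete)."""
    if not records:
        return 1.0  # assumption: an empty batch has nothing missing
    filled = sum(1 for r in records if r.get(field) not in (None, ""))
    return filled / len(records)


def inconsistent_keys(records: list[dict[str, Any]], key: str, field: str) -> dict[Any, set]:
    """Keys whose records disagree on `field`, e.g. one customer_id with two emails."""
    seen: dict[Any, set] = defaultdict(set)
    for r in records:
        if r.get(field) not in (None, ""):
            seen[r[key]].add(r[field])
    return {k: values for k, values in seen.items() if len(values) > 1}


customers = [
    {"customer_id": 1, "email": "a@example.com"},
    {"customer_id": 1, "email": "a@example.org"},
    {"customer_id": 2, "email": ""},
    {"customer_id": 3, "email": "c@example.com"},
]
print(completeness(customers, "email"))  # 0.75
print(inconsistent_keys(customers, "customer_id", "email"))  # customer_id 1 has two emails
```

Tracked over time, for example per daily load, these numbers turn the metrics into trends that can trigger alerts when they cross an agreed threshold.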

Data Quality Checklist for a Modern Data Stack

A comprehensive data quality checklist should cover all aspects of the data lifecycle, from ingestion to analysis. This checklist can include specific criteria for each stage, ensuring consistency and accuracy.

- Data Ingestion: Verify data formats, validate data types, and check for missing or incorrect values during ingestion. Implement standardized data entry procedures to ensure consistent input across various data sources.

- Data Storage: Regularly audit data storage for accuracy and completeness. Implement data validation rules at the storage level to catch errors early on. Establish data governance policies to enforce data quality standards.

- Data Processing and Transformation: Implement data validation rules throughout the processing and transformation stages. Use data profiling to detect errors, and correct them before they propagate to downstream processes.

- Data Warehousing and Lakes: Ensure data accuracy and consistency in the warehouse or lake environment. Regularly cleanse and update data within these repositories. Use data lineage tracking to trace where each dataset originated and how it was transformed.

- Data Visualization and Analytics: Employ data quality checks in the data visualization and analytics stages. Ensure the data used in analysis is accurate and consistent to avoid misleading results. Verify the data visualizations against known expected outcomes or established patterns.

- Data Security and Governance: Implement data security measures to protect data integrity. Ensure that data access controls and policies align with data quality standards. Data governance processes should actively monitor and address data quality issues.
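For the ingestion checks in this checklist, a common pattern is to validate each record against an expected schema and route failures to a quarantine area instead of silently dropping or loading them. The sketch below assumes a hypothetical `ORDER_SCHEMA` and represents records as dictionaries; production pipelines often rely on a schema registry or a validation library for the same purpose.

```python
from typing import Any

# Hypothetical schema for an incoming "orders" feed: field name -> expected type.
ORDER_SCHEMA: dict[str, type] = {"order_id": int, "customer_id": int, "amount": float, "currency": str}


def validate(record: dict[str, Any], schema: dict[str, type]) -> list[str]:
    """Return a list of problems; an empty list means the record passed."""
    problems = []
    for name, expected in schema.items():
        value = record.get(name)
        # Accept ints where floats are expected.
        allowed = (int, float) if expected is float else expected
        if value is None or value == "":
            problems.append(f"missing {name}")
        # Python treats bools as ints, so reject them unless bool is expected.
        elif (isinstance(value, bool) and expected is not bool) or not isinstance(value, allowed):
            problems.append(f"{name}: expected {expected.__name__}, got {type(value).__name__}")
    return problems


def ingest(records, schema):
    """Split a batch into accepted records and quarantined (record, problems) pairs."""
    accepted, quarantined = [], []
    for record in records:
        problems = validate(record, schema)
        if problems:
            quarantined.append((record, problems))
        else:
            accepted.append(record)
    return accepted, quarantined


batch = [
    {"order_id": 1, "customer_id": 7, "amount": 19.99, "currency": "USD"},
    {"order_id": 2, "customer_id": 7, "amount": "19.99", "currency": "USD"},
    {"order_id": 3, "amount": 5, "currency": "EUR"},
]
accepted, quarantined = ingest(batch, ORDER_SCHEMA)
print(len(accepted))  # 1
for record, problems in quarantined:
    print(record["order_id"], problems)
```

Keeping the problem list alongside each quarantined record makes it straightforward to report failure reasons back to the source system and to replay records once they are fixed.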

Emerging Trends and Future Directions

The modern data stack is constantly evolving, driven by advancements in technology and the ever-increasing complexity of data. Understanding emerging trends and future directions is crucial for organizations seeking to leverage data effectively. This section explores key trends shaping the future of data stacks, including advancements in AI, the rise of decentralized data, and the increasing need for data democratization.

Advancements in Artificial Intelligence (AI)

AI is rapidly transforming data analysis and processing. Machine learning algorithms are increasingly used for tasks such as predictive modeling, anomaly detection, and automated feature engineering, enabling more sophisticated insights and faster decision-making. For example, AI-powered tools can analyze vast datasets to surface patterns and trends that would be difficult or impractical for people to find manually, helping organizations gain a competitive edge.
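Anomaly detection does not always require machine learning; a simple statistical baseline is a useful starting point and a benchmark for more sophisticated models. The sketch below swaps in the modified z-score, which measures distance from the median in units of the median absolute deviation (MAD) and flags values above 3.5, a commonly used cut-off. The data and the `robust_outliers` name are illustrative.

```python
from statistics import median


def robust_outliers(values: list[float], threshold: float = 3.5) -> list[int]:
    """Indices whose modified z-score, 0.6745 * (x - median) / MAD, exceeds `threshold`.

    MAD is the median absolute deviation. Unlike the mean and standard deviation,
    the median and MAD are barely moved by the outliers being searched for.
    """
    if not values:
        return []
    med = median(values)
    mad = median([abs(v - med) for v in values])
    if mad == 0:
        return []  # most values are identical, so this score is undefined
    return [i for i, v in enumerate(values) if 0.6745 * abs(v - med) / mad > threshold]


daily_orders = [100, 102, 98, 101, 99, 103, 97, 100, 250]
print(robust_outliers(daily_orders))  # [8]
```

A plain mean and standard deviation would be a weaker choice here: the single spike inflates the standard deviation so much that, with only nine observations, no point can exceed a z-score of 3.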

The Rise of Decentralized Data

Decentralized data storage and processing models, such as blockchain technology, are gaining traction. Because each record is cryptographically linked to the ones before it, these systems provide tamper-evidence and transparency, which can strengthen trust in shared records. This is especially relevant in regulated industries and for organizations seeking to improve data integrity and compliance.
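The tamper-evidence that blockchains provide rests on a simple data structure: a hash chain, in which each entry stores the hash of the previous one. The Python sketch below shows only that building block, with hypothetical helper names. It has none of the distribution or consensus machinery of a real blockchain, and anyone able to rewrite every entry could recompute all the hashes, so it is tamper-evident rather than tamper-proof.

```python
import hashlib
import json
from typing import Any

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(body: dict[str, Any]) -> str:
    # sort_keys makes the serialization, and therefore the hash, deterministic.
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


def append_entry(chain: list[dict[str, Any]], data: Any) -> None:
    """Append JSON-serializable `data`, linking it to the previous entry's hash."""
    body = {"index": len(chain), "data": data, "prev_hash": chain[-1]["hash"] if chain else GENESIS}
    chain.append({**body, "hash": _digest(body)})


def verify_chain(chain: list[dict[str, Any]]) -> bool:
    """Recompute every hash and link; any edit to an earlier entry breaks verification."""
    prev = GENESIS
    for i, entry in enumerate(chain):
        body = {k: v for k, v in entry.items() if k != "hash"}
        if entry["index"] != i or entry["prev_hash"] != prev or _digest(body) != entry["hash"]:
            return False
        prev = entry["hash"]
    return True


ledger: list[dict[str, Any]] = []
append_entry(ledger, {"shipment": 17, "status": "dispatched"})
append_entry(ledger, {"shipment": 17, "status": "delivered"})
print(verify_chain(ledger))  # True
ledger[0]["data"]["status"] = "lost"  # tamper with history
print(verify_chain(ledger))  # False
```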

Data Democratization

Data democratization is becoming increasingly important. This involves making data accessible and understandable to a wider range of users, beyond just data scientists and analysts. This fosters collaboration and empowers individuals to leverage data for their specific needs. Tools and platforms are being developed to simplify data access and visualization, enabling non-technical users to extract insights and drive better decisions.

Cloud-Native Data Stacks

Cloud-native data stacks are gaining significant momentum. These stacks leverage cloud computing resources to provide scalability, flexibility, and cost-effectiveness. Organizations can easily scale their data processing capabilities as needed, eliminating the need for extensive on-premises infrastructure.

Composable Data Stacks

Composable data stacks offer greater flexibility and adaptability compared to traditional, monolithic solutions. They allow organizations to select and integrate various tools and technologies tailored to their specific needs, enabling a more customized and efficient data ecosystem. This contrasts with the often inflexible, “one-size-fits-all” approach of traditional data warehouses.

Data Security and Privacy

Data security and privacy are paramount in today’s digital landscape. Advanced encryption techniques and robust access controls are crucial for protecting sensitive data. Compliance with data privacy regulations, such as GDPR and CCPA, is critical for maintaining trust and avoiding legal repercussions. Emerging technologies like differential privacy are being explored to allow for data analysis while protecting individual privacy.
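Differential privacy is easiest to see in its classic form, the Laplace mechanism: before releasing an aggregate, add random noise scaled to how much one person can change the result. The sketch below applies it to a single count. It uses Python's `random` module, which is not cryptographically secure, and naive floating-point sampling is known to leak information, so treat it as an illustration only and rely on a vetted differential-privacy library in practice.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) as the difference of two i.i.d. exponentials with mean `scale`."""
    # expovariate takes a rate, so 1 / scale gives a mean of `scale`.
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)


def private_count(true_count: int, epsilon: float, rng: random.Random, sensitivity: float = 1.0) -> float:
    """Laplace mechanism: release true_count plus noise with scale sensitivity / epsilon.

    A counting query has sensitivity 1, because adding or removing one person's
    record changes the count by at most 1. Smaller epsilon means more noise and
    stronger privacy.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    return true_count + laplace_noise(sensitivity / epsilon, rng)


rng = random.Random(42)
print(private_count(1_000, epsilon=0.5, rng=rng))  # a noisy value near 1000
```

The noise averages out to zero, so aggregate statistics stay useful, while any single released value no longer reveals whether one particular person was included.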

Final Conclusion

In conclusion, a modern data stack is a sophisticated ecosystem of tools and technologies. From data ingestion to analysis, and ultimately, actionable insights, this system is crucial for extracting maximum value from data. The components, from storage and processing to visualization and governance, are interdependent and need careful consideration in their selection and implementation to ensure efficiency and scalability.

User Queries

What are some common data ingestion methods?

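Common data ingestion methods include batch ingestion, streaming ingestion, and change data capture (CDC). Batch ingestion loads data at scheduled intervals, which is simple and cost-efficient but adds latency. Streaming ingestion processes events continuously as they arrive, minimizing latency at the cost of more operational complexity. CDC captures row-level inserts, updates, and deletes from a source database (often by reading its transaction log), so only the changes are moved. The right method depends on the data stack's latency, cost, and volume requirements.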
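To make CDC concrete, the sketch below shows the consuming side: replaying an ordered stream of row-level change events against a replica table. The event format, including the `lsn` field standing in for the source's log position, is a hypothetical simplification; real CDC tools define their own event schemas and also handle ordering guarantees, retries, and schema changes.

```python
from typing import Any

Row = dict[str, Any]


def apply_changes(table: dict[Any, Row], events: list[dict[str, Any]]) -> dict[Any, Row]:
    """Replay change events against a copy of a replica table keyed by primary key.

    Hypothetical event shape: {"lsn": int, "op": "insert" | "update" | "delete",
    "key": primary_key, "row": full_row_after_change}. "lsn" stands in for the
    source's log position and defines the replay order.
    """
    replica = dict(table)
    for event in sorted(events, key=lambda e: e["lsn"]):
        op, key = event["op"], event["key"]
        if op in ("insert", "update"):
            replica[key] = event["row"]  # full-row image, applied as an upsert
        elif op == "delete":
            replica.pop(key, None)
        else:
            raise ValueError(f"unknown operation {op!r}")
    return replica


target = {1: {"name": "Ada"}}
changes = [
    {"lsn": 2, "op": "update", "key": 1, "row": {"name": "Ada L."}},
    {"lsn": 1, "op": "insert", "key": 2, "row": {"name": "Grace"}},
    {"lsn": 3, "op": "delete", "key": 2},
]
print(apply_changes(target, changes))  # {1: {'name': 'Ada L.'}}
```

Applying inserts and updates as upserts of full rows makes the replay idempotent, so re-delivering an event after a failure leaves the replica in the same state.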

What are the key differences between a data warehouse and a data lake?

A data warehouse stores structured data in well-defined schemas that are enforced when data is written (schema-on-write), and it is optimized for analytical queries. A data lake is a more flexible repository that holds structured, semi-structured, and unstructured data in its raw form, applying a schema only when the data is read (schema-on-read). The choice between the two depends on the specific use cases and the type of analysis to be performed.
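The practical difference is often summarized as schema-on-write versus schema-on-read: a warehouse validates data against a declared schema when it is loaded, while a lake stores raw data and applies a schema only when a query reads it. The sketch below contrasts the two, with in-memory lists standing in for storage; the table schema and function names are hypothetical.

```python
import json
from typing import Any

# Hypothetical warehouse table schema: column -> required type.
EVENTS_SCHEMA: dict[str, type] = {"user_id": int, "event": str}


def write_to_warehouse(table: list[dict[str, Any]], record: dict[str, Any]) -> None:
    """Schema-on-write: reject records whose columns or types do not match the schema."""
    if set(record) != set(EVENTS_SCHEMA):
        raise ValueError(f"columns {sorted(record)} do not match {sorted(EVENTS_SCHEMA)}")
    for column, expected in EVENTS_SCHEMA.items():
        if not isinstance(record[column], expected):
            raise TypeError(f"{column} must be {expected.__name__}")
    table.append(record)


def write_to_lake(lake: list[str], raw: str) -> None:
    """Schema-on-read: store the raw payload untouched, whatever its shape."""
    lake.append(raw)


def read_from_lake(lake: list[str], columns: list[str]) -> list[dict[str, Any]]:
    """Apply a schema at query time: parse each payload and project the requested columns."""
    rows = []
    for raw in lake:
        doc = json.loads(raw)
        rows.append({c: doc.get(c) for c in columns})
    return rows


warehouse: list[dict[str, Any]] = []
write_to_warehouse(warehouse, {"user_id": 1, "event": "click"})

lake: list[str] = []
write_to_lake(lake, '{"user_id": 1, "event": "click", "device": "ios"}')
write_to_lake(lake, '{"user_id": 2}')  # accepted even though "event" is missing
print(read_from_lake(lake, ["user_id", "event"]))
# [{'user_id': 1, 'event': 'click'}, {'user_id': 2, 'event': None}]
```

The lake accepts both payloads and keeps the extra `device` field for later use, but the missing `event` only surfaces at query time; the warehouse would have rejected that record at load time.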

What are some essential considerations for data security within a modern data stack?

Data security is paramount. Critical considerations include access controls, encryption, data masking, and compliance with relevant regulations (like GDPR or HIPAA). Robust security measures safeguard sensitive information and maintain trust.
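Data masking replaces sensitive values with non-sensitive substitutes, for example when copying production data into test environments or showing it in dashboards. The rules in this sketch (keep an email's first character and domain, show only a card number's last four digits) are illustrative assumptions, and the function names are hypothetical. Unlike encryption, this masking cannot be reversed.

```python
import re


def mask_email(email: str) -> str:
    """Keep the first character of the local part plus the domain."""
    local, at, domain = email.partition("@")
    if not at or not local:
        return "***"  # not a recognizable address: mask everything
    return f"{local[0]}***@{domain}"


def mask_card_number(card: str) -> str:
    """Replace every digit except the last four with '*', dropping spaces and dashes."""
    digits = re.sub(r"\D", "", card)
    if len(digits) <= 4:
        return "*" * len(digits)
    return "*" * (len(digits) - 4) + digits[-4:]


print(mask_email("jane.doe@example.com"))       # j***@example.com
print(mask_card_number("4111 1111 1111 1111"))  # ************1111
```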

How can open-source tools enhance a modern data stack?

Open-source tools offer cost-effectiveness and flexibility, often providing a wide range of features and functionalities. They also benefit from community support, leading to active development and rapid innovation. However, proper integration and maintenance are necessary to leverage their full potential.