Handling state in serverless applications is a critical challenge in modern software architecture. Unlike traditional stateful applications, serverless platforms are built around short-lived, stateless function executions. This paradigm calls for deliberate approaches to data persistence, consistency, and synchronization, which are essential for building robust and scalable serverless solutions. Understanding these complexities and implementing effective state management strategies is key to realizing the benefits of serverless computing, from better performance to lower cost.

This guide will explore the core concepts, techniques, and best practices for effectively managing state in serverless applications. We will delve into various data storage solutions, caching mechanisms, and synchronization strategies, alongside essential security considerations. Furthermore, this analysis will dissect the implications of eventual consistency, idempotency, and the role of serverless frameworks in streamlining state management. The objective is to provide developers with a thorough understanding of how to build and deploy serverless applications that are reliable, performant, and secure.

Introduction to Serverless State Management

Serverless computing, characterized by its event-driven and stateless nature, presents unique challenges for managing application state. Serverless functions are ephemeral: they execute briefly and scale dynamically, which undermines traditional approaches to state management that rely on persistent connections and local storage. Understanding these challenges is crucial for building robust and scalable serverless applications.

Serverless applications are designed to be stateless, meaning that each function invocation should be independent and should not rely on information from previous invocations.

However, many real-world applications require the ability to store and retrieve data, which necessitates effective state management strategies. This involves understanding the fundamental differences between stateless and stateful architectures and addressing the specific problems associated with data persistence in serverless environments.

Stateless vs. Stateful Serverless Applications

The primary distinction between stateless and stateful serverless applications lies in how they handle data persistence and function context. Stateless functions are inherently designed to operate without retaining any information about previous executions, whereas stateful functions require the ability to store and retrieve data across multiple invocations.

- Stateless Applications: These applications treat each function invocation as an isolated event. Data processing is typically limited to the input provided in the event payload, with any resulting output being returned as the function’s response. Examples include image resizing or simple data transformations. The architecture is simpler, as there’s no need for persistent storage or complex state management mechanisms.

The advantages are scalability, resilience, and reduced operational overhead.

- Stateful Applications: These applications require the ability to maintain state across multiple function invocations. This often involves storing data in external databases, caches, or other persistent storage solutions. Examples include user session management, shopping cart functionality, or complex data analysis. Stateful applications introduce complexities in terms of data consistency, synchronization, and potential bottlenecks related to external dependencies.
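The difference can be illustrated with two hypothetical handlers. The stateless one computes its response purely from the event. The stateful one keeps a shopping cart in an external store, so whichever function instance handles the next request can pick up where the previous one left off. `DictStore` is an assumed in-memory stand-in for a real database or cache client exposing `get` and `put`; it is not a real library.

```python
class DictStore:
    """Hypothetical stand-in for an external store (database or cache client)."""

    def __init__(self):
        self._data = {}

    def get(self, key, default=None):
        return self._data.get(key, default)

    def put(self, key, value):
        self._data[key] = value


def to_upper_handler(event, context):
    """Stateless: the response depends only on the incoming event."""
    return {"statusCode": 200, "body": event["text"].upper()}


def make_add_to_cart_handler(store):
    """Stateful: the cart lives in the external store, not in the function."""

    def handler(event, context):
        cart_key = f"cart:{event['user_id']}"
        cart = store.get(cart_key, []) + [event["item"]]
        # Persist the new cart so any later invocation, on any instance, sees it
        store.put(cart_key, cart)
        return {"statusCode": 200, "body": str(len(cart))}

    return handler
```

Two handler objects created from the same store behave like two separate function instances: the second sees the item added by the first, because the state never lived inside either of them.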

Core Problems of Managing Data Persistence in Serverless Environments

Managing data persistence in serverless environments presents several core problems, including data consistency, scalability, and cost optimization. Serverless functions are ephemeral by design, so they cannot rely on local storage to keep data beyond the lifetime of a single execution environment. Any storage solution must therefore live outside the functions, persist data durably, and keep up as the number of function instances scales.

- Data Consistency: Ensuring data consistency across multiple function invocations is a significant challenge. When multiple functions access and modify the same data concurrently, it’s crucial to implement mechanisms to prevent data corruption or inconsistencies. Techniques such as optimistic locking, transactions, and careful consideration of eventual consistency models are often employed. For instance, when managing a user’s shopping cart in a serverless e-commerce application, the system needs to ensure that inventory counts are updated accurately and that the cart’s contents reflect the latest changes, even with multiple concurrent requests.

- Scalability: Serverless applications often experience rapid scaling, which means that the underlying storage solutions must be able to handle a significant increase in read and write operations. Choosing the right database technology, such as a NoSQL database designed for high throughput or a relational database with appropriate scaling capabilities, is crucial. The chosen storage solution should be able to handle the anticipated load without becoming a bottleneck.

For example, a social media application using serverless functions to process user posts must be able to scale the database to handle a sudden surge in posts during peak hours.

- Cost Optimization: Data storage and retrieval can be a significant cost factor in serverless applications. Choosing the right storage solution and optimizing data access patterns are essential for controlling costs. For instance, caching can reduce the number of database queries, and data compression can minimize storage space. Monitoring storage usage over time shows where these optimizations pay off.
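The optimistic locking technique mentioned under Data Consistency can be sketched with a version column: each writer remembers the version it read and makes its update conditional on that version being unchanged, so a concurrent update is detected rather than silently overwritten. This minimal sketch uses Python's built-in `sqlite3` module as a stand-in for the application's real database; the `carts` table, its columns, and the `load_cart`/`save_cart` helpers are hypothetical.

```python
import json
import sqlite3


class ConflictError(Exception):
    """Raised when another writer changed the row after we read it."""


def load_cart(conn, cart_id):
    """Return (items, version) for a cart."""
    items, version = conn.execute(
        "SELECT items, version FROM carts WHERE id = ?", (cart_id,)
    ).fetchone()
    return json.loads(items), version


def save_cart(conn, cart_id, items, expected_version):
    """Write items only if the row still has the version we read."""
    cur = conn.execute(
        "UPDATE carts SET items = ?, version = version + 1 "
        "WHERE id = ? AND version = ?",
        (json.dumps(items), cart_id, expected_version),
    )
    conn.commit()
    if cur.rowcount == 0:
        # Another invocation saved first; the caller should re-read and retry
        raise ConflictError(f"cart {cart_id} changed since version {expected_version}")
    return expected_version + 1


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE carts (id TEXT PRIMARY KEY, items TEXT, version INTEGER)")
    conn.execute("INSERT INTO carts VALUES ('c1', '[]', 0)")
    conn.commit()

    # Two concurrent invocations read the same version...
    items_a, v_a = load_cart(conn, "c1")
    items_b, v_b = load_cart(conn, "c1")
    # ...the first write wins; the second is rejected instead of overwriting it
    print(save_cart(conn, "c1", items_a + ["book"], v_a))  # prints 1
    try:
        save_cart(conn, "c1", items_b + ["pen"], v_b)
    except ConflictError as exc:
        print("conflict:", exc)
```

On a `ConflictError`, the caller would typically re-read the cart and reapply its change, so no update is lost.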

Choosing the Right Data Storage Solution

Selecting the optimal data storage solution is a critical architectural decision in serverless application development. The choice significantly impacts performance, scalability, cost, and operational complexity. This section will explore the available data storage options, compare their strengths and weaknesses, and provide a decision framework to guide developers in making informed choices.

Data storage solutions for serverless applications must align with the principles of serverless computing: automatic scaling, pay-per-use pricing, and minimal operational overhead.

Various options cater to different application requirements and data characteristics.

Common Data Storage Options

Several data storage options are commonly employed in serverless architectures. Each solution offers a unique set of features and trade-offs.

- Databases: Databases provide structured data storage, indexing, and querying capabilities. They are broadly categorized into relational and NoSQL databases. Relational databases, such as PostgreSQL and MySQL, enforce schema and relationships through tables. NoSQL databases, like DynamoDB and MongoDB, offer flexible schemas and are designed for scalability.

- Object Storage: Object storage services, such as Amazon S3, are designed for storing large, unstructured data. They provide high durability, availability, and cost-effectiveness for storing files, images, videos, and other binary objects.

- Key-Value Stores: Key-value stores offer simple data storage based on key-value pairs. Services like Redis provide fast read/write operations and are suitable for caching and session management.

- Document Databases: Document databases store data in a semi-structured format, such as JSON documents. They offer flexibility in data modeling and are well-suited for applications with evolving data structures. MongoDB is a popular example.

- Other specialized storage: Specialized storage options exist for specific use cases, such as time-series databases (e.g., InfluxDB) for time-series data and graph databases (e.g., Neo4j) for relationship-intensive data.

Relational Databases vs. NoSQL Databases

The choice between relational and NoSQL databases is a fundamental decision. Each approach offers distinct advantages and disadvantages concerning data modeling, scalability, consistency, and cost.

- Relational Databases: Relational databases excel at maintaining data integrity and consistency through ACID properties (Atomicity, Consistency, Isolation, Durability). They are ideal for applications with well-defined data schemas and complex relationships between data entities. PostgreSQL and MySQL are popular choices.

ACID properties ensure data reliability and transactional integrity, crucial for financial transactions and other critical operations.

- NoSQL Databases: NoSQL databases prioritize scalability and flexibility. They often employ a “schema-less” approach, allowing for rapid development and evolution of data models. They are well-suited for applications with high write throughput, such as social media platforms and e-commerce sites. DynamoDB and MongoDB are widely used.

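Many NoSQL databases relax consistency in favor of availability and partition tolerance, a trade-off usually framed in terms of the CAP theorem. This is a default rather than a rule: DynamoDB, for example, serves eventually consistent reads by default but also supports strongly consistent reads on request.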
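To make the atomicity guarantee concrete, the following sketch uses Python's built-in `sqlite3` module (a relational database, standing in here for PostgreSQL or MySQL) to move funds between two rows. Both updates commit together or not at all. The `accounts` schema and the `transfer` function are illustrative assumptions.

```python
import sqlite3


def transfer(conn, src, dst, amount):
    """Move funds atomically: both UPDATEs commit, or neither does."""
    # Using the connection as a context manager commits on success
    # and rolls back if an exception escapes the block.
    with conn:
        conn.execute(
            "UPDATE accounts SET balance = balance - ? WHERE id = ?", (amount, src)
        )
        (balance,) = conn.execute(
            "SELECT balance FROM accounts WHERE id = ?", (src,)
        ).fetchone()
        if balance < 0:
            # Raising here undoes the debit above
            raise ValueError("insufficient funds")
        conn.execute(
            "UPDATE accounts SET balance = balance + ? WHERE id = ?", (amount, dst)
        )


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE accounts (id TEXT PRIMARY KEY, balance INTEGER)")
conn.executemany("INSERT INTO accounts VALUES (?, ?)", [("alice", 100), ("bob", 0)])
conn.commit()

transfer(conn, "alice", "bob", 30)
try:
    transfer(conn, "alice", "bob", 500)  # fails and is rolled back
except ValueError:
    pass
print(conn.execute("SELECT id, balance FROM accounts ORDER BY id").fetchall())
# [('alice', 70), ('bob', 30)]
```

The failed transfer leaves no partial debit behind, which is exactly the property financial workloads depend on.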

Decision Tree for Data Storage Selection

Selecting the appropriate data storage solution depends on the specific requirements of the application. This decision tree provides a structured approach to guide developers through the selection process.

| Requirement Category | Question | Options | Recommended Solution(s) |
|---|---|---|---|
| Data Structure | Is the data highly structured with well-defined relationships? | Yes / No | Yes: Relational Database (PostgreSQL, MySQL) / No: Proceed to next question |
| Data Consistency | Does the application require strong data consistency (ACID properties)? | Yes / No | Yes: Relational Database (PostgreSQL, MySQL) / No: Proceed to next question |
| Scalability | Is the application expected to handle a large volume of data or high write throughput? | Yes / No | Yes: NoSQL Database (DynamoDB, MongoDB) / No: Consider Relational Database or NoSQL Database depending on other factors |
| Data Access Patterns | Are complex queries and joins required? | Yes / No | Yes: Relational Database (PostgreSQL, MySQL) / No: Consider NoSQL Database (DynamoDB, MongoDB) or Key-Value Store (Redis) |
| Cost Optimization | Are cost considerations a primary factor, particularly in terms of pay-per-use? | Yes / No | Yes: Consider DynamoDB (pay-per-request pricing) or Object Storage (S3 for large files) / No: Evaluate based on performance and other factors |
| Data Type | Is the primary data type large, unstructured data (e.g., images, videos)? | Yes / No | Yes: Object Storage (S3) / No: Consider Databases or Key-Value Stores |

The decision tree helps developers analyze their application requirements and choose the most suitable data storage solution. The choice should be based on a thorough understanding of the application’s data characteristics, performance needs, and cost constraints. The flexibility offered by serverless environments often allows for the combination of different storage solutions to address specific requirements.
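One way to apply the table is to collect the suggestion from every row answered "Yes", which naturally yields combinations of storage solutions. The minimal Python sketch below takes that reading; the `Requirements` fields and the `recommend_storage` function are hypothetical names that mirror the table rows, and the returned strings are starting points for evaluation, not final decisions.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Requirements:
    """Answers to the decision-table questions (hypothetical field names)."""
    structured_relationships: bool = False
    needs_acid: bool = False
    high_write_throughput: bool = False
    complex_queries_or_joins: bool = False
    pay_per_use_priority: bool = False
    large_unstructured_files: bool = False


def recommend_storage(req: Requirements) -> list[str]:
    """Collect the suggestion from every table row answered 'Yes'."""
    suggestions = []
    if req.structured_relationships or req.needs_acid or req.complex_queries_or_joins:
        suggestions.append("Relational database (PostgreSQL, MySQL)")
    if req.high_write_throughput:
        suggestions.append("NoSQL database (DynamoDB, MongoDB)")
    if req.pay_per_use_priority:
        suggestions.append("DynamoDB (pay-per-request pricing) or S3 for large files")
    if req.large_unstructured_files:
        suggestions.append("Object storage (S3)")
    if not suggestions:
        # No row forced a choice; weigh performance and other factors
        suggestions.append("Relational or NoSQL database, depending on other factors")
    return suggestions
```

For instance, `recommend_storage(Requirements(high_write_throughput=True, large_unstructured_files=True))` returns both a NoSQL and an object storage suggestion, reflecting how serverless applications often combine storage solutions.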

Utilizing Databases for Serverless State

Serverless applications, by their ephemeral nature, often require persistent storage to maintain state. Databases provide a robust and scalable solution for managing this state. Their ability to handle complex data structures, provide transactional guarantees, and offer various indexing and querying capabilities makes them indispensable for many serverless use cases. This section will explore the best practices for interacting with databases within a serverless environment, focusing on performance optimization and practical code examples.

Best Practices for Connecting to and Interacting with Databases from Serverless Functions

Establishing efficient and reliable connections to databases is crucial for serverless function performance. Serverless functions are typically short-lived, making connection management a critical aspect of design. Directly creating and closing database connections for each function invocation can lead to significant overhead. Several strategies exist to mitigate this and ensure optimal database interaction.

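- Connection Pooling: Implementing connection pooling is a highly effective method for optimizing database connections. Instead of establishing a new connection for every function invocation, connections are kept open and reused: a function borrows a connection when it needs to interact with the database and returns it when the operation is complete. This minimizes the overhead of connection establishment and teardown, improving performance, especially under heavy load. Because a serverless execution environment may process only one request at a time (as on AWS Lambda), an in-process pool mostly helps by reusing connections across warm invocations; an external pooler such as Amazon RDS Proxy or PgBouncer can share connections across many function instances.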

- Environment Variables for Credentials: Securely storing database credentials is paramount. Avoid hardcoding usernames, passwords, and database endpoints directly into the function code. Instead, supply these values through environment variables, or load them at startup from a secrets service such as AWS Secrets Manager. This allows for easy configuration management and prevents accidental exposure of credentials in source code repositories.

- IAM Roles (for AWS): For serverless functions deployed on cloud platforms like AWS, utilize IAM roles to grant the function the necessary permissions to access the database. This eliminates the need to manage and rotate access keys within the function code, improving security posture. The IAM role should be configured with the least privilege principle, granting only the necessary permissions for database operations.

- Connection Timeouts and Retries: Implement robust error handling, including connection timeouts and retry mechanisms. Network issues or temporary database unavailability can disrupt function execution. Setting appropriate connection timeouts and implementing retry logic with exponential backoff can help mitigate these issues and improve application resilience.

- Minimize Database Calls: Reduce the number of database calls by optimizing data retrieval strategies. Fetch only the required data by crafting efficient queries and using appropriate indexes. Avoid unnecessary round trips to the database. Consider caching frequently accessed data to reduce database load.
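The retry advice under Connection Timeouts and Retries can be sketched as a small wrapper. It retries only the exception types the caller lists, doubles the delay ceiling after each failure, and picks a random delay below that ceiling ("full jitter") so that many concurrently scaled-out instances do not retry in lockstep. The `retry_with_backoff` name and its parameters are illustrative assumptions, not part of any particular library.

```python
import random
import time


def retry_with_backoff(operation, retry_on=(Exception,), max_attempts=5,
                       base_delay=0.1, max_delay=5.0, sleep=time.sleep):
    """Call operation(), retrying listed failures with exponential backoff."""
    for attempt in range(max_attempts):
        try:
            return operation()
        except retry_on:
            if attempt == max_attempts - 1:
                raise  # out of attempts: surface the last error
            # Exponential ceiling (0.1, 0.2, 0.4, ... seconds), capped at max_delay
            ceiling = min(max_delay, base_delay * (2 ** attempt))
            # Full jitter: wait a random time between 0 and the ceiling
            sleep(random.uniform(0, ceiling))
```

With psycopg2, a call such as `retry_with_backoff(lambda: psycopg2.connect(host=os.environ['DB_HOST'], connect_timeout=5), retry_on=(psycopg2.OperationalError,))` would retry only connection-level failures. Keep the total of attempts and delays well inside the function's configured timeout.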

Strategies for Optimizing Database Performance in a Serverless Context

Optimizing database performance is paramount for the overall efficiency and scalability of serverless applications. Serverless functions are often subject to rapid scaling, and database performance bottlenecks can quickly become a major issue. Employing specific techniques, such as connection pooling and caching, can greatly improve performance.

- Connection Pooling: As mentioned earlier, connection pooling is critical. Connection pools reduce latency by reusing existing connections, avoiding the overhead of establishing new ones for each function invocation. The size of the connection pool should be carefully tuned to match the expected load and database capacity.

- Caching: Implement caching to store frequently accessed data. Caching can be done at multiple levels: within the serverless function itself (in-memory caching), using a dedicated caching service like Redis or Memcached, or using the database’s built-in caching mechanisms. Caching reduces the number of database reads, improving response times and reducing database load.

- Indexing: Properly indexing database tables is essential for query performance. Indexes speed up data retrieval by enabling the database to quickly locate specific rows. Analyze query patterns and create indexes on columns used in WHERE clauses, JOIN conditions, and ORDER BY clauses. Avoid over-indexing, as this can slow down write operations.

- Query Optimization: Optimize database queries to minimize execution time. Use the database’s query optimizer to analyze query performance and identify bottlenecks. Rewrite complex queries, use appropriate data types, and avoid unnecessary joins.

- Database Selection: Choosing the right database technology is crucial. Consider the data model, query patterns, and scalability requirements of the application. For example, NoSQL databases are often a good choice for handling unstructured or semi-structured data and offer excellent scalability. Relational databases provide strong consistency and data integrity guarantees.

- Read Replicas: For read-heavy workloads, consider using read replicas. Read replicas are copies of the primary database that are used for read operations. This distributes the read load, improving performance and reducing the impact on the primary database.

- Database Connection Limits: Be aware of the connection limits imposed by the database service. Serverless functions can rapidly scale, potentially exceeding the database’s connection limits. Monitor connection usage and adjust the connection pool size or consider scaling the database to accommodate the load.

Code Examples: CRUD Operations with PostgreSQL

The following code examples demonstrate how to perform CRUD (Create, Read, Update, Delete) operations with a PostgreSQL database using Python. This provides a practical illustration of connecting to a database, executing queries, and handling results within a serverless function context. The examples assume that you have a PostgreSQL database instance running and accessible, along with the necessary database credentials configured as environment variables.

Note: These examples use the `psycopg2` library, a popular PostgreSQL adapter for Python. You’ll need to install it using `pip install psycopg2-binary`.

Example 1: Create (Insert) Operation

```python
import json
import os

import psycopg2


def create_record(event, context):
    """Creates a new record in the database."""
    conn = None
    try:
        # Retrieve database credentials from environment variables
        db_host = os.environ['DB_HOST']
        db_name = os.environ['DB_NAME']
        db_user = os.environ['DB_USER']
        db_password = os.environ['DB_PASSWORD']

        # Establish a database connection
        conn = psycopg2.connect(
            host=db_host, database=db_name, user=db_user, password=db_password
        )
        cur = conn.cursor()

        # Extract data from the event (e.g., API Gateway request).
        # The body arrives as a JSON string, e.g. '{"name": "John Doe", "age": 30}'
        data = json.loads(event['body'])

        # Construct the parameterized SQL query
        sql = "INSERT INTO users (name, age) VALUES (%s, %s)"
        values = (data['name'], data['age'])

        # Execute the query and commit the transaction
        cur.execute(sql, values)
        conn.commit()
        cur.close()
        return {'statusCode': 200, 'body': 'Record created successfully!'}
    except Exception as e:
        # Handle errors
        print(f"Error creating record: {e}")
        return {'statusCode': 500, 'body': f"Error: {str(e)}"}
    finally:
        # Close the connection whether or not the insert succeeded
        if conn is not None:
            conn.close()
```

- Import `psycopg2`: Imports the necessary library for interacting with PostgreSQL.

- Retrieve Credentials: Retrieves database connection details (host, database name, user, and password) from environment variables. This keeps the credentials secure and configurable.

- Establish Connection: Establishes a connection to the PostgreSQL database using the retrieved credentials. This creates a `conn` object.

- Create Cursor: Creates a cursor object `cur` that allows executing SQL queries.

- Extract Data: Retrieves the data to be inserted from the event object (e.g., from an API Gateway request body).

- Construct Query: Constructs an SQL INSERT statement, using parameterized queries to prevent SQL injection vulnerabilities. The `%s` placeholders are replaced with the actual values.

- Execute Query: Executes the INSERT statement with the provided values.

- Commit Transaction: Commits the changes to the database. This saves the new record.

- Close Connection: Closes the cursor and database connection to release resources.

- Error Handling: Includes a `try-except` block to catch potential errors during the process and returns an appropriate HTTP status code and error message.

Example 2: Read (Select) Operation

```python
import json
import os

import psycopg2


def read_records(event, context):
    """Retrieves records from the database."""
    conn = None
    try:
        # Retrieve database credentials from environment variables
        db_host = os.environ['DB_HOST']
        db_name = os.environ['DB_NAME']
        db_user = os.environ['DB_USER']
        db_password = os.environ['DB_PASSWORD']

        # Establish a database connection
        conn = psycopg2.connect(
            host=db_host, database=db_name, user=db_user, password=db_password
        )
        cur = conn.cursor()

        # Construct the SQL query
        sql = "SELECT * FROM users"  # Retrieve all records

        # Execute the query and fetch all results
        cur.execute(sql)
        records = cur.fetchall()

        # Convert the results to a list of dictionaries (optional, for easier handling)
        columns = [col[0] for col in cur.description]  # Get column names
        results = [dict(zip(columns, row)) for row in records]
        cur.close()

        # Return the results as JSON; default=str handles values such as dates
        return {'statusCode': 200, 'body': json.dumps(results, default=str)}
    except Exception as e:
        # Handle errors
        print(f"Error reading records: {e}")
        return {'statusCode': 500, 'body': f"Error: {str(e)}"}
    finally:
        # Close the connection whether or not the query succeeded
        if conn is not None:
            conn.close()
```

- Establish Connection: Similar to the create example, it establishes a connection to the database using environment variables.

- Construct Query: Constructs a SELECT statement to retrieve all records from the `users` table.

- Execute Query: Executes the SELECT statement.

- Fetch Results: Fetches all the results from the query using `cur.fetchall()`.

- Format Results (Optional): Converts the results to a list of dictionaries, which is often easier to work with in a serverless function. This involves getting the column names and mapping them to the row values.

- Close Connection: Closes the cursor and connection.

- Return Results: Returns the results as a JSON string in the response body.

- Error Handling: Includes error handling to catch and report any issues.

Example 3: Update Operation

```python
import json
import os

import psycopg2


def update_record(event, context):
    """Updates an existing record in the database."""
    conn = None
    try:
        # Retrieve database credentials from environment variables
        db_host = os.environ['DB_HOST']
        db_name = os.environ['DB_NAME']
        db_user = os.environ['DB_USER']
        db_password = os.environ['DB_PASSWORD']

        # Establish a database connection
        conn = psycopg2.connect(
            host=db_host, database=db_name, user=db_user, password=db_password
        )
        cur = conn.cursor()

        # Extract data from the event (e.g., API Gateway request)
        data = json.loads(event['body'])  # Parse JSON from request body
        user_id = data['id']  # Assuming the id is provided in the body
        new_name = data['name']
        new_age = data['age']

        # Construct the SQL query
        sql = "UPDATE users SET name = %s, age = %s WHERE id = %s"
        values = (new_name, new_age, user_id)

        # Execute the query and commit the transaction
        cur.execute(sql, values)
        conn.commit()
        cur.close()
        return {'statusCode': 200, 'body': 'Record updated successfully!'}
    except Exception as e:
        # Handle errors
        print(f"Error updating record: {e}")
        return {'statusCode': 500, 'body': f"Error: {str(e)}"}
    finally:
        # Close the connection whether or not the update succeeded
        if conn is not None:
            conn.close()
```

- Establish Connection: Establishes a connection to the PostgreSQL database, similar to the previous examples.

- Extract Data: Parses the JSON data from the event body, extracting the `id` of the user to update and the new values for the `name` and `age` fields.

- Construct Query: Constructs an SQL UPDATE statement to update the user’s name and age, using the `id` to identify the record.

- Execute Query: Executes the UPDATE statement with the new values and the user ID.

- Commit Transaction: Commits the changes to the database.

- Close Connection: Closes the cursor and connection.

- Return Success: Returns a success message.

- Error Handling: Includes error handling for any potential issues.

Example 4: Delete Operation

```python
import json
import os

import psycopg2


def delete_record(event, context):
    """Deletes a record from the database."""
    conn = None
    try:
        # Retrieve database credentials from environment variables
        db_host = os.environ['DB_HOST']
        db_name = os.environ['DB_NAME']
        db_user = os.environ['DB_USER']
        db_password = os.environ['DB_PASSWORD']

        # Establish a database connection
        conn = psycopg2.connect(
            host=db_host, database=db_name, user=db_user, password=db_password
        )
        cur = conn.cursor()

        # Extract data from the event (e.g., API Gateway request)
        data = json.loads(event['body'])  # Parse JSON from request body
        user_id = data['id']  # Assuming the id is provided in the body

        # Construct the SQL query
        sql = "DELETE FROM users WHERE id = %s"
        values = (user_id,)  # Note the comma for a single-element tuple

        # Execute the query and commit the transaction
        cur.execute(sql, values)
        conn.commit()
        cur.close()
        return {'statusCode': 200, 'body': 'Record deleted successfully!'}
    except Exception as e:
        # Handle errors
        print(f"Error deleting record: {e}")
        return {'statusCode': 500, 'body': f"Error: {str(e)}"}
    finally:
        # Close the connection whether or not the delete succeeded
        if conn is not None:
            conn.close()
```

- Establish Connection: Establishes a database connection.

- Extract Data: Parses the JSON data from the request body to obtain the `id` of the user to be deleted.

- Construct Query: Constructs a DELETE statement to remove the record from the `users` table, using the `id` as the criteria.

- Execute Query: Executes the DELETE statement.

- Commit Transaction: Commits the changes to the database.

- Close Connection: Closes the cursor and connection.

- Return Success: Returns a success message.

- Error Handling: Catches any exception, logs it, and returns a 500 response with the error message.

These examples demonstrate the fundamental CRUD operations. In a real-world serverless application, you’d likely implement these functions as part of an API, with API Gateway acting as the entry point. Remember to adapt the event handling and data extraction based on the specific event source (e.g., API Gateway, SQS, etc.) and data format (e.g., JSON, XML).
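Wiring the four handlers to a single API entry point can be sketched as a small dispatcher. This assumes an API Gateway REST API proxy integration, whose event carries the HTTP method in `event['httpMethod']`; HTTP APIs using payload format 2.0 place it under `event['requestContext']['http']['method']`, and the sketch checks both. `make_router` and the method-to-handler mapping are illustrative assumptions.

```python
def make_router(routes):
    """Build a Lambda-style handler that dispatches on the HTTP method.

    routes maps upper-case method names (e.g. "GET") to handler functions
    with the usual (event, context) signature.
    """

    def router(event, context):
        # REST API proxy events carry "httpMethod"; HTTP API payload 2.0
        # events nest it under requestContext.http.method.
        method = event.get("httpMethod") or (
            event.get("requestContext", {}).get("http", {}).get("method", "")
        )
        handler = routes.get(method.upper())
        if handler is None:
            return {"statusCode": 405, "body": f"Method {method} not allowed"}
        return handler(event, context)

    return router


# Hypothetical wiring to the CRUD functions shown above:
# handler = make_router({
#     "POST": create_record,
#     "GET": read_records,
#     "PUT": update_record,
#     "DELETE": delete_record,
# })
```

Returning 405 for unmapped methods follows the HTTP convention for "Method Not Allowed", so unsupported requests fail clearly instead of reaching the wrong handler.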

Leveraging Object Storage for State

Object storage offers a cost-effective and scalable solution for managing state in serverless applications, particularly when dealing with large, unstructured data. Its design, focused on storing and retrieving objects, makes it suitable for various use cases, including storing static assets, application logs, and user-generated content. This section will explore the mechanisms of object storage, its application in serverless environments, and best practices for secure data handling.

Object Storage for Application State Management

Object storage, such as Amazon S3, Google Cloud Storage, and Azure Blob Storage, provides a flat namespace for storing data as objects. Each object consists of data, metadata (key-value pairs describing the object), and a unique identifier. This architecture contrasts with hierarchical file systems, making object storage highly scalable and resilient. Serverless applications can interact with object storage through APIs, enabling the storage, retrieval, and management of application state.

- Key Characteristics: Object storage offers several key characteristics that make it attractive for serverless state management. These include:

  - Scalability: Designed to handle massive amounts of data and high request volumes.

  - Durability: Data is typically replicated across multiple availability zones, ensuring high data durability.

  - Cost-Effectiveness: Often priced based on storage capacity and data transfer, providing a pay-as-you-go model.

  - Accessibility: Data can be accessed via APIs, making it easy to integrate with serverless functions.

- Data Storage: Object storage stores data as objects, each comprising the data itself, metadata describing the data (e.g., content type, creation date), and a unique identifier (key).

- Data Retrieval: Serverless functions can retrieve data by specifying the object’s key. Access control mechanisms, such as access control lists (ACLs) and identity and access management (IAM) roles, govern data access.

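- Data Consistency: Consistency guarantees vary by service and have changed over time. Amazon S3, for example, has provided strong read-after-write consistency for PUT and DELETE operations since December 2020, but it does not lock objects for concurrent writers: if two requests write the same key at the same time, the one with the latest timestamp wins. If an application needs coordinated updates to shared state, a database with transactions or conditional writes may be more suitable for that part of the state.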
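As a minimal sketch of keeping a small piece of application state in object storage, the functions below serialize a dictionary to JSON and store it under a single key. They assume a boto3 S3 client (`boto3.client("s3")`) and use its `put_object` and `get_object` calls; the function names, bucket, and key layout are hypothetical. Because concurrent writers to the same key overwrite each other, this pattern suits state with a single writer, such as a per-job checkpoint.

```python
import json


def save_state(s3, bucket, key, state):
    """Serialize state to JSON and write it as one object."""
    s3.put_object(
        Bucket=bucket,
        Key=key,
        Body=json.dumps(state).encode("utf-8"),
        ContentType="application/json",
    )


def load_state(s3, bucket, key):
    """Read the object back and deserialize it."""
    response = s3.get_object(Bucket=bucket, Key=key)
    # get_object returns the payload as a readable stream under "Body"
    return json.loads(response["Body"].read())


# Usage inside a function (requires boto3 and AWS credentials):
# import boto3
# s3 = boto3.client("s3")
# save_state(s3, "my-state-bucket", "jobs/42/checkpoint.json", {"offset": 1000})
# state = load_state(s3, "my-state-bucket", "jobs/42/checkpoint.json")
```

Passing the client in as a parameter keeps the functions easy to test with a fake client and lets the handler create the real client once at module scope.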

Use Cases for Object Storage in Serverless Applications

Object storage is a suitable solution for serverless state management in several scenarios.

- Storing Static Assets: Object storage can efficiently serve static content like images, videos, and JavaScript files for web applications. Serverless functions can be used to generate or process these assets before storing them in object storage.

- User-Generated Content: Applications that handle user-uploaded files, such as images, videos, or documents, can leverage object storage to store this content. Serverless functions can handle tasks like resizing images or generating thumbnails.

- Application Logging: Object storage provides a cost-effective way to store application logs. Serverless functions can collect and write logs to object storage for later analysis and monitoring.

- Data Backups and Archives: Object storage can be used to back up application data or archive historical data. Serverless functions can automate the backup and archival processes.

- Content Delivery Networks (CDNs): Object storage integrates well with CDNs, improving content delivery performance. The CDN caches the content closer to the user, reducing latency.

Secure Data Handling with Serverless Functions

Securely storing and retrieving data from object storage is critical. When serverless functions read and write object storage, the following controls help protect data confidentiality and integrity.

- Authentication and Authorization: Serverless functions should be authenticated and authorized to access object storage. This can be achieved using IAM roles (in AWS), service accounts (in Google Cloud), or managed identities (in Azure), which grant the function specific permissions to access the object storage service.

- Encryption: Encrypting data at rest and in transit is essential for security. Object storage services typically support server-side encryption, where the storage service encrypts the data before storing it, and client-side encryption, where the client encrypts the data before uploading it. Encryption in transit can be achieved using HTTPS.

- Access Control Lists (ACLs) and Policies: ACLs and policies control who can access objects and what actions they can perform. Using the principle of least privilege, granting only the necessary permissions to serverless functions is crucial.

- Example: Securely Uploading a File to AWS S3: The following demonstrates a simplified example using Node.js and the AWS SDK:

```javascript
const AWS = require('aws-sdk');

const s3 = new AWS.S3();

exports.handler = async (event) => {
  const bucketName = 'your-bucket-name';
  const fileName = 'your-file-name.txt';
  const fileContent = 'This is the content of the file.';

  const params = {
    Bucket: bucketName,
    Key: fileName,
    Body: fileContent,
    ACL: 'private' // Ensures the object is not publicly accessible
  };

  try {
    const data = await s3.upload(params).promise();
    console.log(`File uploaded successfully. Location: ${data.Location}`);
    return {
      statusCode: 200,
      body: JSON.stringify({ message: 'File uploaded successfully' })
    };
  } catch (err) {
    console.error(err);
    return {
      statusCode: 500,
      body: JSON.stringify({ message: 'Error uploading file' })
    };
  }
};
```

In this example:

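- The AWS SDK for JavaScript v2 (the `aws-sdk` package) is used to interact with S3. Node.js 18 and later Lambda runtimes bundle only v3 of the SDK, so v2 must be packaged with the function.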

- The function receives an event, which may contain data for upload.

- The `params` object configures the upload, including the bucket name, file name, file content, and access control (ACL) set to ‘private’.

- The `s3.upload()` method uploads the file.

- Error handling ensures that any upload failures are logged and handled gracefully.
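The encryption guidance above can also be applied explicitly at upload time. The following Python sketch, a counterpart to the Node.js example rather than a translation of it, asks S3 to encrypt the object with AWS KMS by passing `ServerSideEncryption` and, optionally, `SSEKMSKeyId` to boto3's `put_object`. S3 already encrypts new objects with SSE-S3 by default; requesting SSE-KMS adds key-level access control and auditing through KMS. boto3 uses HTTPS endpoints by default, which covers encryption in transit. The function name is hypothetical, and `s3` is assumed to be a client created with `boto3.client("s3")`.

```python
def upload_encrypted(s3, bucket, key, body, kms_key_id=None):
    """Upload an object and ask S3 to encrypt it at rest with AWS KMS."""
    params = {
        "Bucket": bucket,
        "Key": key,
        "Body": body,
        # SSE-KMS; "AES256" would request SSE-S3 instead
        "ServerSideEncryption": "aws:kms",
    }
    if kms_key_id is not None:
        # Without this, S3 uses the AWS managed key (aws/s3)
        params["SSEKMSKeyId"] = kms_key_id
    return s3.put_object(**params)
```

Keeping the key ID optional lets most functions rely on the AWS managed key while sensitive workloads pin a customer managed key whose policy limits who can decrypt.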

Caching Mechanisms in Serverless

Caching plays a pivotal role in optimizing the performance and cost-effectiveness of serverless applications. By storing frequently accessed data closer to the compute functions, caching minimizes the need to repeatedly fetch data from slower, more expensive data sources, thus reducing latency and the overall operational expenses. This approach is particularly crucial in serverless environments where functions are often short-lived and scaled rapidly, making efficient data access paramount.

Importance of Caching in Serverless Applications

Caching is critical in serverless architectures for several compelling reasons. Serverless functions typically execute in response to events and have a limited lifespan, often scaling up and down based on demand. Repeatedly accessing external data stores for each function invocation can lead to significant performance bottlenecks and increased costs. Caching mitigates these issues by storing frequently accessed data locally or in a readily accessible location, reducing the time and resources needed to retrieve information.

This translates directly into faster response times for users and lower operational costs due to reduced database access and compute time.

Different Caching Strategies

Various caching strategies can be employed in serverless environments, each with its own strengths and weaknesses. The choice of strategy depends on the specific requirements of the application, including data access patterns, data size, and performance needs.

- In-Memory Caching: This strategy stores data in the memory of the serverless function’s execution environment, typically in variables declared outside the handler so that they survive across warm invocations served by the same instance. Retrieval is exceptionally fast because the data is already inside the function’s process. However, in-memory caching is limited by the function’s memory allocation and lifecycle: the cache is not shared between concurrent instances, and when an instance is terminated, its cached data is lost. It is best suited for small, frequently accessed datasets that can be easily reconstructed if lost.

- Distributed Caching: This approach uses a dedicated caching service, separate from the serverless functions, to store and manage cached data. Services like Redis or Memcached are commonly used for this purpose. Distributed caching offers several advantages: larger storage capacity, data persistence (depending on the configuration), and the ability to share cached data across multiple function instances. This strategy is suitable for larger datasets and scenarios where data needs to be shared among different function executions. It introduces a slight latency penalty compared to in-memory caching due to the network overhead of accessing the caching service.

- Edge Caching: Edge caching places cached data closer to the end-users, typically at the edge of a content delivery network (CDN). This strategy is particularly effective for static content and content that is frequently accessed globally. By caching content at the edge, the application can significantly reduce latency and improve user experience. The cache is managed by the CDN provider and typically includes features like cache invalidation and content distribution optimization.

Caching Workflow Diagram

The following diagram illustrates a caching workflow in a serverless environment, detailing the interactions between serverless functions, caching services, and data sources.

Diagram Description:

The diagram depicts a simplified caching workflow within a serverless architecture. It showcases the interaction between a user request, a serverless function, a cache (specifically a distributed cache like Redis), and a data source (e.g., a database).

```text
User Request
     |
     v
Serverless Function ----> Cache (e.g., Redis)
                               |
               +---------------+---------------+
               |                               |
           Cache Hit                      Cache Miss
               |                               |
               |                               v
               |                          Data Source
               |                               |
               |                               v
               |                          Update Cache
               |                               |
               +---------------+---------------+
                               |
                               v
                          Return Data
                               |
                               v
                        Response to User
```

Annotations:

- User Request: The process starts with a user request, triggering the execution of a serverless function.

- Serverless Function: This function acts as the intermediary. Upon receiving a request, it first checks the cache for the requested data.

- Cache (e.g., Redis): The cache stores frequently accessed data. The serverless function queries the cache to check for the data.

- Cache Hit: If the data is present in the cache (a “cache hit”), the function retrieves the data from the cache and returns it to the user, resulting in a fast response.

- Cache Miss: If the data is not present in the cache (a “cache miss”), the function retrieves the data from the data source.

- Data Source: The data source, such as a database, is accessed when a cache miss occurs.

- Update Cache: After retrieving the data from the data source, the function updates the cache with the retrieved data, so that subsequent requests for the same data will result in a cache hit.

- Return Data: The function returns the data (either from the cache or the data source) to the user.

- Response to User: The user receives the requested data as a response.
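The read path in this workflow is commonly called the cache-aside pattern. The sketch below assumes a cache client exposing async `get(key)` and `set(key, value, ttlSeconds)`; `FakeCacheClient` and `db.findProduct` are hypothetical in-memory stand-ins rather than a real Redis or database API, and the 300-second TTL is an arbitrary choice.

```javascript
// Hypothetical in-memory stand-in for a distributed cache client such as Redis.
// A real client would make network calls; only get/set are modeled here.
class FakeCacheClient {
  constructor() {
    this.store = new Map();
  }
  async get(key) {
    const entry = this.store.get(key);
    if (!entry || entry.expiresAt <= Date.now()) return null;
    return entry.value;
  }
  async set(key, value, ttlSeconds) {
    this.store.set(key, { value, expiresAt: Date.now() + ttlSeconds * 1000 });
  }
}

// Hypothetical data source; counts calls so that cache hits are observable.
const db = {
  calls: 0,
  async findProduct(id) {
    this.calls += 1;
    return { id, name: `Product ${id}` };
  },
};

const cache = new FakeCacheClient();

// Cache-aside read path, following the steps in the diagram.
async function getProduct(id) {
  const key = `product:${id}`;
  const cached = await cache.get(key);
  if (cached !== null) {
    return JSON.parse(cached); // Cache hit
  }
  const product = await db.findProduct(id); // Cache miss: read the data source
  await cache.set(key, JSON.stringify(product), 300); // Update cache (5-minute TTL)
  return product; // Return data
}
```

Calling `getProduct('42')` twice reads the data source only once; the second call is served from the cache until the TTL expires.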

Idempotency and Serverless Functions

Serverless functions, by their nature, are designed for scalability and event-driven execution. This inherent characteristic introduces complexities related to state management and, critically, the need for idempotent operations. Ensuring idempotency – the ability to execute an operation multiple times without unintended side effects – is paramount in serverless architectures to guarantee data integrity and prevent erroneous outcomes, especially in the face of potential retries and concurrent invocations.

Defining Idempotency in Serverless Contexts

Idempotency in the context of serverless functions refers to the property where executing a function multiple times with the same input has the same effect on application state as executing it once. This is a crucial concept for several reasons: serverless platforms often retry function invocations automatically after transient errors (e.g., network hiccups); many event sources deliver events at least once, so the same event can trigger more than one invocation, sometimes concurrently; and client-side retries can cause the same request to be processed multiple times.

Without idempotency, these factors can lead to data corruption, inconsistent states, and unexpected application behavior. For example, consider a function that processes financial transactions. Without idempotency, a function that successfully debits an account could be retried, resulting in the account being debited multiple times.

Techniques for Ensuring Idempotency

Several techniques can be employed to ensure idempotency in serverless function executions. The choice of technique often depends on the specific use case and the nature of the operation.

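- Unique Request IDs: Assigning a unique identifier to each incoming request is a fundamental approach. This ID can be used to track the execution status of a function and prevent duplicate processing. The ID should identify the logical request rather than the individual attempt: an idempotency key generated on the client is reused when the client retries, whereas an ID that an API gateway assigns to each incoming request only helps deduplicate retries that happen behind the gateway. The function then checks for the existence of a record associated with that request ID before proceeding; if the record exists, the function either returns the previously computed result or simply skips the operation. To avoid a race between concurrent invocations, the check and the creation of the record should be a single atomic, conditional write.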

- State Checks: Before performing an operation that modifies state, the function should check the current state to determine if the operation has already been performed. This is particularly useful when the operation involves updating or creating a resource. For instance, if a function is designed to update a database record, it can first check if the record already has the desired values. If it does, the update is skipped.

- Idempotent Operations in Databases: Leveraging database features designed for idempotency is highly effective. For example, PostgreSQL’s `INSERT ... ON CONFLICT DO UPDATE` (an upsert) inserts a row when no row with the same key exists and updates the existing row otherwise, while `ON CONFLICT DO NOTHING` turns a duplicate insert into a no-op; many other databases offer similar constructs. Used with a stable key, these operations keep the database state consistent regardless of how many times the function is invoked, provided the update sets values rather than incrementing them.

- Transaction Management: Transactions, especially those with ACID properties (Atomicity, Consistency, Isolation, Durability), can be used to ensure that a set of operations are either all completed successfully or none are. This is especially critical for complex operations involving multiple steps. Serverless platforms often provide integrations with database services that support transactions.

- Checksums and Hashes: For operations involving data processing or transformations, checksums or cryptographic hashes can be used to detect if the input data has already been processed. The function can calculate a hash of the input data and compare it against a stored hash value. If the hashes match, the function can skip the processing step and return the previously generated result.
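The unique-request-ID and checksum techniques can be combined in a short sketch. It assumes a hypothetical `IdempotencyStore` whose `putIfAbsent` is atomic; the `Map` used here stands in for what would, in practice, be a durable table written with a conditional insert (for example, a DynamoDB `PutItem` with an `attribute_not_exists` condition, or `INSERT ... ON CONFLICT DO NOTHING`). The event fields `idempotencyKey` and `payload` are also assumptions.

```javascript
const crypto = require('crypto');

// Hypothetical idempotency store. A Map stands in for a durable table shared by
// all function instances; a real Map is per-instance and lost on shutdown, so it
// is only suitable for illustration.
class IdempotencyStore {
  constructor() {
    this.records = new Map();
  }

  // Claims a key; returns false if it was already claimed.
  putIfAbsent(key, record) {
    if (this.records.has(key)) return false;
    this.records.set(key, record);
    return true;
  }

  get(key) {
    return this.records.get(key);
  }

  complete(key, result) {
    this.records.set(key, { status: 'COMPLETED', result });
  }

  release(key) {
    this.records.delete(key);
  }
}

// Prefer a client-supplied idempotency key; otherwise fall back to a SHA-256
// hash of the payload (the checksum technique). JSON.stringify is sensitive to
// property order, so equivalent payloads must be built consistently.
function idempotencyKey(event) {
  if (event.idempotencyKey) return event.idempotencyKey;
  return crypto.createHash('sha256').update(JSON.stringify(event.payload)).digest('hex');
}

// Runs `operation` once per idempotency key; a failed attempt releases the key
// so that a later retry can try again.
function processOnce(store, event, operation) {
  const key = idempotencyKey(event);
  if (!store.putIfAbsent(key, { status: 'IN_PROGRESS' })) {
    const existing = store.get(key);
    // Duplicate: return the stored result rather than repeating side effects.
    return existing.status === 'COMPLETED' ? existing.result : { status: 'IN_PROGRESS' };
  }
  try {
    const result = operation(event.payload);
    store.complete(key, result);
    return result;
  } catch (err) {
    store.release(key);
    throw err;
  }
}
```

Delivering the same debit event twice through `processOnce` applies the debit once and returns the stored result to the duplicate.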

Checklist for Idempotency in Serverless Function Design

To systematically ensure idempotency, a checklist can guide the design and implementation of serverless functions.

- Define Idempotent Operations: Identify which operations within the function need to be idempotent. This involves analyzing the function’s logic and identifying potential points of failure or duplication.

- Implement Unique Request ID Handling: Implement a mechanism to generate and track unique request IDs. Ensure these IDs are passed through the entire execution flow and stored persistently.

- Implement State Checks: Integrate state checks before performing any state-altering operations. This may involve querying a database, checking object storage, or examining other relevant data stores.

- Choose Idempotent Database Operations: Use database operations that inherently support idempotency, such as `INSERT … ON CONFLICT DO UPDATE` or similar features.

- Employ Transactional Operations: For complex operations, utilize transactions to ensure atomicity. Ensure that transactions are properly managed and that rollback mechanisms are in place.

- Utilize Caching: Implement caching mechanisms to store the results of idempotent operations, especially those that involve computationally expensive processes. This can significantly improve performance and reduce the need for repeated executions.

- Handle Retries and Concurrency: Design the function to handle potential retries and concurrent invocations gracefully. This includes accounting for the impact of retries on state and data consistency.

- Test Idempotency: Thoroughly test the function to verify its idempotent behavior. This involves simulating retries, concurrent executions, and various error scenarios.

- Monitor and Log: Implement comprehensive logging and monitoring to track function executions, identify potential idempotency issues, and provide insights into function behavior.

- Document Idempotency Strategies: Document the idempotency strategies employed in the function design, including the rationale behind the chosen techniques and any limitations. This documentation should be accessible to other developers.

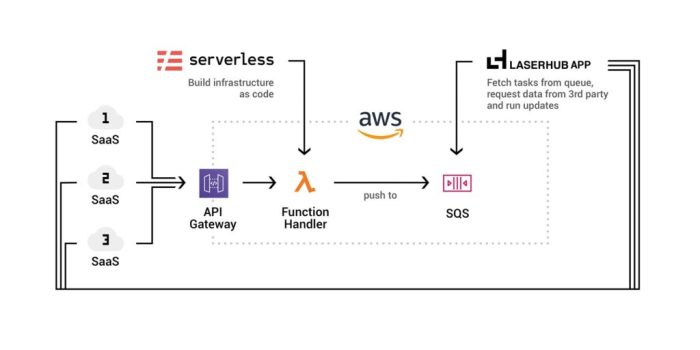

State Management with Serverless Frameworks

Serverless frameworks streamline the development, deployment, and management of serverless applications, including the crucial aspect of state management. These frameworks provide abstractions that simplify the complexities of provisioning and configuring cloud resources, such as databases, storage buckets, and other stateful components. By defining infrastructure as code, they enable developers to treat infrastructure as an integral part of their application code, fostering consistency, repeatability, and automation.

Simplification of State Management by Serverless Frameworks

Serverless frameworks significantly simplify state management by automating several key tasks. They handle the provisioning and configuration of underlying infrastructure resources, eliminating the need for manual setup and management. This automation extends to the deployment process, where the framework automatically creates, updates, and removes resources based on the defined configuration. Furthermore, they often provide built-in support for common state management patterns, such as database connections, caching mechanisms, and event handling, reducing the amount of boilerplate code required.

Defining and Managing State Resources with AWS SAM

AWS Serverless Application Model (SAM) is a framework that simplifies building serverless applications on AWS. It extends AWS CloudFormation, providing a more concise and developer-friendly syntax for defining serverless resources. Here’s an example of how to define and manage a DynamoDB table and an S3 bucket using SAM:

```yaml
AWSTemplateFormatVersion: '2010-09-09'
Transform: AWS::Serverless-2016-10-31 # Marks this file as a SAM template

Resources:
  MyDynamoDBTable:
    Type: AWS::DynamoDB::Table
    Properties:
      TableName: my-serverless-table
      AttributeDefinitions:
        - AttributeName: id
          AttributeType: S # String
      KeySchema:
        - AttributeName: id
          KeyType: HASH # Partition key
      ProvisionedThroughput:
        ReadCapacityUnits: 5
        WriteCapacityUnits: 5

  MyS3Bucket:
    Type: AWS::S3::Bucket
    Properties:
      BucketName: my-serverless-bucket-unique-name # Replace with a globally unique name
      VersioningConfiguration:
        Status: Enabled
```

This YAML configuration defines a DynamoDB table named `my-serverless-table` with a string partition key `id` and a provisioned throughput of 5 read and 5 write capacity units. It also defines an S3 bucket named `my-serverless-bucket-unique-name` (remember to replace this with a globally unique name) with versioning enabled. The `Resources` section describes the infrastructure components needed by the application. SAM translates this configuration into a CloudFormation template, which is then used to provision the resources in the AWS cloud.

Deploying a Serverless Application with Stateful Components Using SAM

Deploying the application involves several steps, typically automated using the AWS CLI and the SAM CLI.

1. Packaging the application: The `sam package` command packages the application code and any dependent libraries, and uploads them to an S3 bucket. This creates a deployment package.

2. Deploying the application: The `sam deploy` command deploys the packaged application to AWS. It uses the CloudFormation template generated from the SAM template to create or update the resources. The command automatically creates or updates the defined DynamoDB table and S3 bucket based on the `template.yaml` file shown previously.

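```bash
sam package --output-template-file packaged.yaml --s3-bucket <deployment-bucket>
sam deploy --template-file packaged.yaml --stack-name my-serverless-app --capabilities CAPABILITY_IAM
```

Replace `<deployment-bucket>` with the name of an existing S3 bucket in which SAM can store the deployment package.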

This process automates the creation and management of stateful resources, enabling developers to focus on application logic rather than infrastructure configuration. This is especially useful for managing databases, where schemas, indexes, and capacity must be configured, and object storage buckets, which require naming, versioning, and access control.

Eventual Consistency and Serverless

Eventual consistency is a critical concept in distributed systems, particularly relevant to serverless architectures. Serverless applications often rely on geographically distributed data stores and asynchronous communication patterns, making eventual consistency a common characteristic. Understanding and effectively managing eventual consistency is crucial for building robust and reliable serverless applications.

Understanding Eventual Consistency

Eventual consistency defines a data consistency model where, given sufficient time and no further updates, all replicas of a piece of data will eventually converge to the same value. This differs from strong consistency, where all reads immediately reflect the most recent write. Eventual consistency prioritizes availability and performance over strict consistency, allowing systems to remain operational even during network partitions or temporary outages.

Eventual consistency can be better understood by considering the following points:

- Data Replication: Data is often replicated across multiple nodes or regions for redundancy and performance.

- Asynchronous Updates: Writes to one replica are propagated to others asynchronously, meaning updates may not be immediately reflected everywhere.

- Convergence: Eventually, all replicas will reflect the same state, assuming no further updates occur.

- Read-After-Write Consistency: In some implementations, mechanisms are provided to ensure that a user’s subsequent reads see the results of their own writes.

Strategies for Handling Eventual Consistency

Managing eventual consistency requires careful consideration of data access patterns and application logic. Several strategies can be employed to mitigate the challenges it presents.

- Idempotent Operations: Designing functions to be idempotent is crucial. This ensures that multiple executions of the same operation, even if triggered by eventual consistency issues, do not lead to unintended side effects.

- Optimistic Locking: Implement optimistic locking using version numbers or timestamps. When updating a record, check if the version has changed since the read operation. If it has, the update is rejected, and the operation must be retried. This helps to prevent conflicting updates.

- Conflict Resolution Strategies: Develop strategies to handle conflicting updates that may occur due to eventual consistency. This can involve:

  - Last-Write-Wins: The most recent write overwrites older writes. This is simple, but every concurrent update except the last is silently discarded.

  - Custom Merge Functions: Implement custom logic to merge conflicting updates based on application-specific rules.

  - Timestamp-Based Resolution: Utilize timestamps to determine the order of updates.

- Eventual Consistency Awareness in Data Access: Be aware of the potential for stale data when reading from a data store. Consider using techniques like:

  - Read Repair: When reading, check multiple replicas and reconcile any inconsistencies.

  - Vector Clocks: Use vector clocks to track the causality of updates and detect conflicts.

- Queues and Message Brokers: Employ message queues (e.g., Amazon SQS, Azure Service Bus, Google Cloud Pub/Sub) to decouple operations and handle asynchronous updates. This allows for retries and conflict resolution mechanisms.

- Monitoring and Alerting: Implement monitoring and alerting to detect and respond to eventual consistency-related issues. Monitor for data inconsistencies, failed operations, and long propagation times.
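Vector clocks and custom merge functions work well together: the clocks tell whether one update causally follows another, and the merge function is applied only when two updates are truly concurrent. The sketch below is illustrative; the shopping-cart shape `{ items, clock }` and the union merge rule are assumptions chosen for the example.

```javascript
// Compares two vector clocks (objects mapping node ID -> counter).
// Returns 'before', 'after', 'equal', or 'concurrent' for a relative to b.
function compareClocks(a, b) {
  const nodes = new Set([...Object.keys(a), ...Object.keys(b)]);
  let aLess = false;
  let bLess = false;
  for (const node of nodes) {
    const x = a[node] || 0;
    const y = b[node] || 0;
    if (x < y) aLess = true;
    if (y < x) bLess = true;
  }
  if (aLess && bLess) return 'concurrent';
  if (aLess) return 'before';
  if (bLess) return 'after';
  return 'equal';
}

// Element-wise maximum: the clock of a merged version.
function mergeClocks(a, b) {
  const merged = { ...a };
  for (const [node, count] of Object.entries(b)) {
    merged[node] = Math.max(merged[node] || 0, count);
  }
  return merged;
}

// Resolves two versions of a hypothetical shopping cart. If one version
// causally follows the other, keep the newer one; if they are concurrent,
// apply an application-specific merge (here, the union of the items).
function resolveCart(v1, v2) {
  switch (compareClocks(v1.clock, v2.clock)) {
    case 'after':
    case 'equal':
      return v1;
    case 'before':
      return v2;
    default: {
      const items = [...new Set([...v1.items, ...v2.items])].sort();
      return { items, clock: mergeClocks(v1.clock, v2.clock) };
    }
  }
}
```

A union merge never loses an added item, but it can resurrect an item that one replica removed; applications that need deletions to stick typically record them explicitly (for example, as tombstones).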

Scenarios and Mitigation of Challenges

Eventual consistency can introduce challenges in several scenarios. Understanding these scenarios and employing appropriate mitigation strategies is vital.

- Data Synchronization Issues: In a distributed system, it can take time for data to propagate across all nodes. This can result in a user reading stale data.

Mitigation: Implement read-after-write consistency for critical operations. Use mechanisms like optimistic locking or versioning to detect and resolve conflicts. Consider using a cache with a short TTL (Time-To-Live) for frequently accessed data.

- Conflicting Updates: Concurrent updates to the same data can lead to conflicts.

Mitigation: Employ optimistic locking, conflict resolution strategies (last-write-wins, custom merge functions), and idempotent operations. Use transactions where appropriate.

- Reporting and Analytics: Generating accurate reports from data that is eventually consistent can be challenging.

Mitigation: Run reports against a snapshot or a known cutoff time rather than against live replicas. Consider replicating data into an analytical data warehouse (e.g., Amazon Redshift, Google BigQuery) through ETL (Extract, Transform, Load) processes that reconcile records before they are reported on.

- User Experience Issues: Users might experience inconsistent data, leading to confusion and frustration.

Mitigation: Design the user interface to handle eventual consistency. Provide visual cues or notifications to indicate when data is being updated. Implement optimistic updates to provide a more responsive user experience. For example, if a user updates a profile picture, immediately display the new picture locally while the update propagates to the backend; the application can display a loading indicator until the change is confirmed.

- Complex Transactions: Transactions that span multiple data stores or services can be difficult to manage with eventual consistency.

Mitigation: Avoid distributed transactions whenever possible. Break down large transactions into smaller, idempotent operations. Use sagas or compensating transactions to handle failures and rollbacks. Consider using event-driven architectures to coordinate operations.
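A saga replaces one distributed transaction with a sequence of local steps, each paired with a compensating action that undoes it. The orchestration loop below is a minimal in-process sketch; the `{ name, action, compensate }` step shape and the order workflow are hypothetical, and a production saga would typically persist its progress (for example, with a workflow orchestrator such as AWS Step Functions) so that it can resume after a function times out.

```javascript
// Runs saga steps in order. If a step fails, the compensations of the steps
// that already succeeded run in reverse order.
async function runSaga(steps, context) {
  const completed = [];
  for (const step of steps) {
    try {
      await step.action(context);
      completed.push(step);
    } catch (err) {
      for (const done of completed.reverse()) {
        // Compensations should be idempotent, since they may be retried too.
        await done.compensate(context);
      }
      return { ok: false, failedStep: step.name, error: err.message };
    }
  }
  return { ok: true };
}

// Hypothetical order workflow whose last step fails.
const log = [];
const orderSteps = [
  {
    name: 'reserveStock',
    action: async () => log.push('reserve stock'),
    compensate: async () => log.push('release stock'),
  },
  {
    name: 'chargeCard',
    action: async () => log.push('charge card'),
    compensate: async () => log.push('refund card'),
  },
  {
    name: 'createShipment',
    action: async () => {
      throw new Error('carrier unavailable');
    },
    compensate: async () => log.push('cancel shipment'),
  },
];
```

Running `await runSaga(orderSteps, {})` leaves `log` as reserve stock, charge card, refund card, release stock, and reports `createShipment` as the failed step; the failed step itself is not compensated because it never completed.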

State Synchronization and Coordination

Serverless applications, by their nature, often involve multiple function instances operating concurrently. This concurrency necessitates robust mechanisms for synchronizing state across these instances and coordinating updates to maintain data consistency and prevent conflicts. Effective state synchronization and coordination are critical for building reliable and scalable serverless systems.

Methods for Synchronizing State

Several methods are employed to synchronize state across multiple serverless functions or instances, each with its own trade-offs regarding complexity, performance, and consistency guarantees.

- Shared Storage: Utilizing a shared data store, such as a database (e.g., Amazon DynamoDB, Google Cloud Datastore) or object storage (e.g., Amazon S3, Google Cloud Storage), is a fundamental approach. Functions read and write state to this central repository. Synchronization is achieved implicitly through the data store’s built-in consistency mechanisms (e.g., strong consistency, eventual consistency).

- Distributed Locking: Implementing distributed locks allows functions to acquire exclusive access to a specific resource or state element. This prevents concurrent modifications and ensures data integrity. Distributed locks can be implemented using services like Amazon ElastiCache for Redis or dedicated locking libraries within the serverless functions.

- Eventual Consistency with Eventual Coordination: Leveraging event-driven architectures and message queues (e.g., Amazon SQS, Google Cloud Pub/Sub) allows functions to publish state changes as events. Other functions subscribe to these events and update their own data stores or derived views accordingly. This approach often results in eventual consistency, where state changes are propagated asynchronously. Coordination mechanisms are then needed to handle potential conflicts or ordering issues.

- Gossip Protocols: In certain scenarios, particularly for metadata management or service discovery, gossip protocols can be used to disseminate state information among nodes. Each node periodically exchanges state updates with its peers, eventually converging on a consistent view. Because function instances are short-lived, this technique is found more often in the long-running services that serverless functions depend on than in the functions themselves. It is well suited to scenarios where strict consistency is not critical.

- Optimistic Locking with Versioning: This technique assumes that conflicts are rare. When a function reads a state, it also reads a version number. Before writing, it checks if the version number in the database matches the one it read. If they match, the write proceeds; otherwise, a conflict is detected, and the function must retry or merge the changes.
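In a serverless setting, a distributed lock is usually a lease: it expires automatically so that a function that crashes or times out cannot hold it forever. The sketch below is a hypothetical in-memory `LeaseLockStore`, not a client for any real service; its comments indicate how the same two operations are commonly expressed with Redis.

```javascript
const crypto = require('crypto');

// Hypothetical in-memory lease store, for illustration only. With Redis,
// acquire() would correspond to `SET key token NX PX ttlMs`, and release() to an
// atomic "delete only if the value still equals my token" (often a Lua script).
class LeaseLockStore {
  constructor(now = () => Date.now()) {
    this.now = now; // Injectable clock, which makes expiry easy to test
    this.locks = new Map(); // key -> { token, expiresAt }
  }

  // Returns a random owner token on success, or null if an unexpired lease exists.
  acquire(key, ttlMs) {
    const current = this.locks.get(key);
    if (current && current.expiresAt > this.now()) return null;
    const token = crypto.randomBytes(16).toString('hex');
    this.locks.set(key, { token, expiresAt: this.now() + ttlMs });
    return token;
  }

  // Releases only if the caller still owns the lease, so a function whose lease
  // expired cannot delete a lock that another instance has since acquired.
  release(key, token) {
    const current = this.locks.get(key);
    if (!current || current.token !== token) return false;
    this.locks.delete(key);
    return true;
  }
}
```

The TTL should comfortably exceed the time the protected work needs. A lease alone does not stop a slow holder from writing after its lease has expired; where that matters, the data store should also reject stale writers, for example with version checks.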

Strategies for Coordinating State Updates

Coordinating state updates is essential to prevent data corruption and ensure that concurrent operations do not interfere with each other. Various strategies are employed to manage these updates.

- Optimistic Locking: Optimistic locking assumes that conflicts are infrequent. Functions read the state and a version identifier. Before writing, they check if the version identifier has changed since the read operation. If it has, a conflict is detected, and the update is rejected. This strategy minimizes locking overhead but requires mechanisms to handle conflicts (e.g., retries, merging changes).

Example: Consider a shopping cart system. A function reads the cart’s version number and the items in the cart. If another function updates the cart in the meantime, the version number will change. When the first function attempts to save its changes, the database will detect the version mismatch and reject the update.

- Pessimistic Locking: Pessimistic locking involves acquiring a lock on a resource before modifying it, ensuring exclusive access. This guarantees strong consistency but can introduce performance bottlenecks and increase latency, especially in high-concurrency scenarios. Pessimistic locking is typically implemented using database transactions or distributed locking mechanisms.

Example: In a financial transaction system, a function might acquire a lock on a bank account before debiting it to prevent concurrent withdrawals from exceeding the account balance.

- Atomic Operations: Many data stores provide atomic operations, such as compare-and-swap (CAS) or conditional writes, that perform a check and an update as a single, indivisible step. Because no other writer can interleave between the check and the write, a conflicting update fails its condition instead of silently overwriting data, and the function can then re-read and retry.

Example: DynamoDB’s `UpdateItem` operation accepts a `ConditionExpression`, so an item is updated only if the condition holds; otherwise the request fails with a `ConditionalCheckFailedException` and the item is left unchanged.

- Conflict Resolution Strategies: When conflicts do occur, mechanisms are needed to resolve them. These strategies include:

  - Last-Write-Wins: The most recent update overwrites previous ones.

  - Merging: Combining changes from conflicting updates.

  - Application-Specific Logic: Implementing custom logic to handle conflicts based on the application’s requirements.

- Idempotency: Designing functions to be idempotent ensures that repeated executions with the same input have the same effect as a single execution. This is crucial in scenarios where function invocations might be retried due to failures or network issues.
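The conditional `UpdateItem` approach can be sketched as request parameters for the DynamoDB DocumentClient in the AWS SDK for JavaScript v2, the same SDK version as the S3 example. The table name `carts`, its `cartId` key, and the `items` and `version` attributes are hypothetical; the functions only build parameters and classify errors, so they run without AWS credentials.

```javascript
// Builds DocumentClient update parameters that write new cart items only if the
// stored version still equals the version this function read, and increment the
// version in the same request. Creating the first version of an item needs a
// different condition, such as attribute_not_exists(cartId).
function buildVersionedUpdate(cartId, items, expectedVersion) {
  return {
    TableName: 'carts',
    Key: { cartId },
    UpdateExpression: 'SET #items = :items, #version = :nextVersion',
    ConditionExpression: '#version = :expectedVersion',
    ExpressionAttributeNames: { '#items': 'items', '#version': 'version' },
    ExpressionAttributeValues: {
      ':items': items,
      ':expectedVersion': expectedVersion,
      ':nextVersion': expectedVersion + 1,
    },
  };
}

// With SDK v2 the call would look like:
//   const docClient = new AWS.DynamoDB.DocumentClient();
//   await docClient.update(buildVersionedUpdate(id, items, version)).promise();
// A failed condition rejects with err.code === 'ConditionalCheckFailedException',
// which signals a concurrent update: re-read the item, then retry or merge.
function isVersionConflict(err) {
  return Boolean(err) && err.code === 'ConditionalCheckFailedException';
}
```

Checking the version and incrementing it in one conditional request is what makes the read-check-write sequence safe under concurrency; the `#` placeholders also avoid clashes with DynamoDB reserved words.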

State Synchronization Process Flow Chart

The following flow chart illustrates a typical state synchronization process using optimistic locking and a shared database.

```text
Function Instance A         Shared Database              Function Instance B
-------------------         ---------------              -------------------
1. Read state &     ---->   2. Store state &             6. Read state &
   version (v1)                version (v1)                 version (v1)
        |                                                        |
   Modify state                                             Modify state
        |                                                        |
        v                                                        v
3. Write state &    ---->   4. Check version     <----   7. Write state &
   version (v2)                (v1 == v1?)                  version (v2)
        |                      Yes: write                        |
        |                      No: conflict                      |
        v                                                        v
 Successful update                                       Conflict detected
        |                                                        |
        v                                                        v
5. Operation complete                                    8. Retry/resolve
```

The process begins with two function instances (A and B) reading the state and its associated version (v1) from a shared database. Each function then modifies the state locally. Instance A writes first, sending its modified state with an updated version (v2). The database checks whether the version A originally read (v1) matches the version currently stored (v1); because they match, the write succeeds and the stored version becomes v2. When instance B then attempts to write its own changes, it still expects v1, but the stored version is now v2, so the database reports a version conflict and B must retry (re-read and reapply its change) or resolve the conflict.
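The flow chart can be reproduced with a small in-memory simulation. `VersionedStore` is a hypothetical stand-in for the shared database; in a real system the version check and the write must happen as one atomic, conditional operation inside the data store, such as DynamoDB’s conditional `UpdateItem`.

```javascript
// Hypothetical in-memory stand-in for the shared database. Each row holds
// { state, version }; write() succeeds only if the caller's expected version
// still matches the stored one, and then increments the version.
class VersionedStore {
  constructor() {
    this.rows = new Map();
  }

  read(key) {
    const { state, version } = this.rows.get(key);
    return { state, version };
  }

  write(key, newState, expectedVersion) {
    const row = this.rows.get(key);
    if (row.version !== expectedVersion) return false; // Version conflict
    this.rows.set(key, { state: newState, version: expectedVersion + 1 });
    return true;
  }
}

// Read-modify-write with retries: on conflict, re-read and reapply the change.
// Returns the number of attempts it took.
function updateWithRetry(store, key, modify, maxAttempts = 3) {
  for (let attempt = 1; attempt <= maxAttempts; attempt += 1) {
    const { state, version } = store.read(key);
    if (store.write(key, modify(state), version)) return attempt;
  }
  throw new Error(`Gave up after ${maxAttempts} conflicting attempts`);
}

// Walkthrough of the flow chart: both instances read v1, then A writes first.
const store = new VersionedStore();
store.rows.set('cart', { state: ['book'], version: 1 });
const a = store.read('cart'); // Instance A reads v1
const b = store.read('cart'); // Instance B reads v1
const aWrote = store.write('cart', [...a.state, 'pen'], a.version); // true: stored version becomes 2
const bWrote = store.write('cart', [...b.state, 'lamp'], b.version); // false: B expected v1
const bAttempts = updateWithRetry(store, 'cart', (s) => [...s, 'lamp']); // 1: B re-reads v2 and succeeds
```

After B's retry the cart holds `['book', 'pen', 'lamp']` at version 3, so neither instance's change is lost.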

Security Considerations for Serverless State

Serverless architectures, while offering scalability and agility, introduce unique security challenges related to state management. Protecting sensitive data stored within serverless applications requires a proactive and multi-layered approach. This involves securing access to data storage, implementing encryption, and adhering to security best practices throughout the application lifecycle.

Security Best Practices for Protecting Sensitive Data

Implementing robust security measures is critical to safeguard sensitive data in serverless environments. These practices minimize the attack surface and protect against data breaches.

- Principle of Least Privilege: Grant serverless functions only the minimum necessary permissions to access data storage resources. Avoid broad, overly permissive access policies. This principle limits the potential damage from compromised functions.

- Input Validation and Sanitization: Validate and sanitize all inputs received by serverless functions. This helps prevent injection attacks, such as SQL injection or cross-site scripting (XSS).

- Regular Security Audits and Penetration Testing: Conduct regular security audits and penetration testing to identify and address vulnerabilities in the application code, infrastructure, and configuration. This proactive approach helps uncover potential weaknesses before they can be exploited.

- Secrets Management: Securely store and manage sensitive information like API keys, database credentials, and other secrets. Utilize dedicated secrets management services provided by cloud providers (e.g., AWS Secrets Manager, Azure Key Vault, Google Cloud Secret Manager).

- Monitoring and Logging: Implement comprehensive monitoring and logging to track function invocations, data access, and security events. Analyze logs for suspicious activity and potential security threats. Centralized logging allows for faster detection and response to security incidents.

- Network Security: Employ network security best practices, such as restricting access to data storage resources to only trusted networks and using firewalls to control inbound and outbound traffic.

- Regular Updates and Patching: Keep all dependencies, libraries, and the serverless platform itself up-to-date with the latest security patches. This mitigates known vulnerabilities that could be exploited.

- Data Minimization: Only store the minimum amount of sensitive data required. This reduces the potential impact of a data breach. Consider techniques like data masking or tokenization.

- Security Information and Event Management (SIEM): Integrate the serverless application with a SIEM system to collect, analyze, and correlate security events from various sources. This provides a centralized view of security posture and facilitates threat detection.
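Secrets management intersects with state in one practical way: fetching a secret on every invocation adds latency and cost, so functions often cache it in memory for a limited time. A minimal sketch, assuming a JSON-formatted secret and a client with the AWS SDK v2 `getSecretValue({ SecretId }).promise()` shape; the five-minute TTL is an arbitrary choice that bounds how long a rotated secret can remain stale.

```javascript
// Caches a secret in module scope so warm invocations skip the Secrets Manager
// call, while the TTL ensures rotated secrets are eventually picked up.
// `client` is expected to behave like `new AWS.SecretsManager()` from SDK v2.
function createSecretLoader(client, secretId, ttlMs = 5 * 60 * 1000, now = () => Date.now()) {
  let cached = null;
  let expiresAt = 0;
  return async function getSecret() {
    if (cached !== null && now() < expiresAt) return cached;
    const response = await client.getSecretValue({ SecretId: secretId }).promise();
    cached = JSON.parse(response.SecretString); // Assumes a JSON-formatted secret
    expiresAt = now() + ttlMs;
    return cached; // Never log this value
  };
}
```

A typical use creates the loader once at module scope, for example `const getDbSecret = createSecretLoader(new AWS.SecretsManager(), 'prod/db');` with a hypothetical secret name, and calls `await getDbSecret()` inside the handler.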

Securing Access to Data Storage Solutions

Securing access to data storage is crucial for protecting sensitive information from unauthorized access. This involves using appropriate authentication and authorization mechanisms.

- IAM Roles (AWS): AWS Identity and Access Management (IAM) roles provide a secure way for serverless functions (e.g., Lambda functions) to access other AWS resources. Functions assume an IAM role that grants them specific permissions. This eliminates the need to embed credentials directly in the function code.

- Managed Identities (Azure): Azure Managed Identities offer a similar functionality to IAM roles, allowing Azure Functions to access other Azure resources securely without requiring credentials in the code.

- Service Accounts (GCP): Google Cloud Platform (GCP) uses service accounts to provide access to resources. Serverless functions (e.g., Cloud Functions) can be configured to run as a specific service account with defined permissions.

- API Keys: API keys can be used for authentication, but they should be used with caution. Never hardcode API keys directly into the function code. Store them securely using secrets management services. Implement rate limiting and other security measures to protect against misuse.

- Network Access Control: Configure network access controls to restrict access to data storage resources to only authorized networks or IP addresses. This can prevent unauthorized access from external sources.

- Authentication and Authorization within the Application: Implement authentication and authorization mechanisms within the serverless application itself to control access to specific data and functionality. This could involve using tokens, API gateways, or other authentication providers.
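The API-key guidance above can be sketched in Python in three parts:

- The key is read at runtime from an environment variable. The name `SERVICE_API_KEY` is hypothetical, and the deployment is assumed to populate it from a secrets management service.
- The presented key is compared in constant time with `hmac.compare_digest`.
- Requests are throttled with a simple token bucket.

This is an illustrative sketch, not a complete authentication layer. The bucket lives in one function instance's memory, so each concurrent instance enforces its own limit. A global limit needs a shared store or the API gateway's built-in throttling.

```python
import hmac
import os
import time
from typing import Callable


def load_api_key(var_name: str = "SERVICE_API_KEY") -> str:
    # Hypothetical variable name; assumed to be injected from a secrets
    # manager at deploy time, never hardcoded in the function source.
    key = os.environ.get(var_name)
    if not key:
        raise RuntimeError(f"{var_name} is not set")
    return key


def is_valid_api_key(presented: str, expected: str) -> bool:
    # Constant-time comparison does not reveal how many leading characters matched.
    return hmac.compare_digest(presented.encode("utf-8"), expected.encode("utf-8"))


class TokenBucket:
    """Allows bursts of up to `capacity` requests, refilling at `rate` tokens per second.

    State is per function instance only (see the note above).
    """

    def __init__(self, capacity: float, rate: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.capacity = capacity
        self.rate = rate
        self.clock = clock
        self.tokens = capacity
        self.updated = clock()

    def allow(self) -> bool:
        now = self.clock()
        # Refill in proportion to elapsed time, never above capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.updated) * self.rate)
        self.updated = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False
```

A handler would reject the request when `is_valid_api_key` returns `False`, and it would answer with a "too many requests" response when `allow()` returns `False`.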

Implementing Encryption at Rest and In Transit

Encryption is a fundamental measure for protecting sensitive data. It renders data unreadable to anyone without the key, both when stored (at rest) and when transmitted (in transit).

Encryption is implemented at two primary stages:

- Encryption at Rest: This protects data stored in data storage solutions, such as databases or object storage. The data is encrypted using a key, and the key is managed securely.

- Encryption in Transit: This protects data while it is being transmitted, either between the serverless function and the data storage solution or between the user and the serverless application. This is achieved using Transport Layer Security (TLS). Its predecessor, Secure Sockets Layer (SSL), is deprecated and should not be used.

Here’s a breakdown of how to implement encryption:

- Encryption at Rest:

- Database Encryption: Many database services offer built-in encryption capabilities. Enable encryption at the database level. This protects the data stored in the database even if the underlying infrastructure is compromised. For example, AWS RDS offers encryption at rest using KMS keys, and Azure SQL Database provides Transparent Data Encryption (TDE).

- Object Storage Encryption: Object storage services (e.g., AWS S3, Azure Blob Storage, Google Cloud Storage) provide encryption options for data stored in the object storage. Choose server-side encryption (SSE) or client-side encryption (CSE). Server-side encryption is typically managed by the storage service provider, while client-side encryption requires the client to encrypt the data before uploading it.

- Key Management: Securely manage encryption keys using a key management service (KMS). KMS services provide a centralized and secure way to generate, store, and manage encryption keys. AWS KMS, Azure Key Vault, and Google Cloud KMS are examples of KMS services.

- Encryption in Transit:

- TLS: Use TLS (version 1.2 or later) to encrypt all communication between the serverless function and the data storage solution. This protects data from eavesdropping during transmission. Ensure that both the function's client library and the data storage endpoint support TLS, and that certificate verification is enabled.

- HTTPS: Use HTTPS for all communication between the user and the serverless application. This encrypts the data transmitted between the user’s browser and the server.

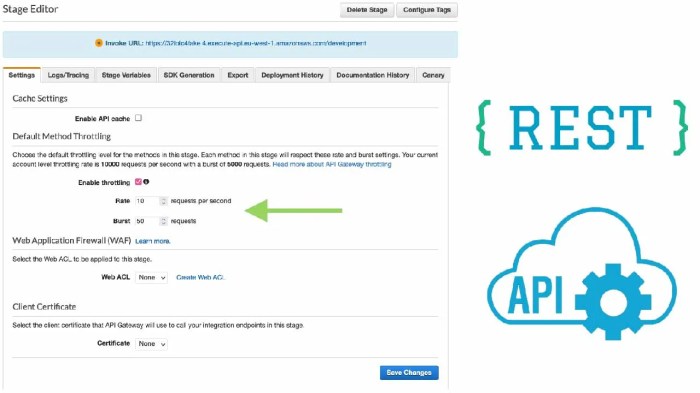

- API Gateway: Use an API gateway (e.g., AWS API Gateway, Azure API Management, Google Cloud API Gateway) to manage API traffic and provide TLS termination.
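For the encryption-in-transit items above, here is a minimal Python sketch of a client-side TLS configuration. It uses only the standard `ssl` and `http.client` modules. `ssl.create_default_context()` enables certificate verification and hostname checking, and the sketch additionally refuses protocol versions older than TLS 1.2. The helper names are hypothetical. Database drivers typically expose their own TLS settings, so the driver's documentation is the place to look for the equivalent options.

```python
import http.client
import ssl


def make_client_tls_context() -> ssl.SSLContext:
    # Loads the system's trusted CA certificates and turns on certificate
    # verification (CERT_REQUIRED) and hostname checking.
    context = ssl.create_default_context(ssl.Purpose.SERVER_AUTH)
    # Reject SSL 3.0, TLS 1.0 and TLS 1.1 handshakes.
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    return context


def open_https_connection(host: str, port: int = 443) -> http.client.HTTPSConnection:
    # No network traffic happens here; the TLS handshake runs on the first request.
    return http.client.HTTPSConnection(
        host, port, timeout=5, context=make_client_tls_context()
    )
```

A caller would run `conn = open_https_connection("api.example.com")` followed by `conn.request("GET", "/health")`. If the server presents an untrusted certificate or offers only an older protocol, the request raises `ssl.SSLError` instead of proceeding.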

Example (AWS S3 with SSE-KMS):

To encrypt data stored in an AWS S3 bucket using SSE-KMS:

- Create an AWS KMS key.

- Configure the S3 bucket to use the KMS key for server-side encryption.

- When uploading an object, S3 applies the bucket's default encryption if no encryption headers are sent. To request SSE-KMS explicitly for an individual object, set the `x-amz-server-side-encryption` header to `aws:kms` and the `x-amz-server-side-encryption-aws-kms-key-id` header to the ARN of the KMS key.
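The S3 steps above can be sketched in Python for use with boto3. The sketch assumes the bucket and KMS key already exist, and that the function's IAM role allows `s3:PutObject` and `kms:GenerateDataKey` on that key. boto3's `put_object` sends its `ServerSideEncryption` and `SSEKMSKeyId` parameters as the two headers listed above. The helper names, bucket, and key ARN are hypothetical. The client is passed in as a parameter so the logic can be exercised without AWS credentials.

```python
from typing import Any, Dict


def build_sse_kms_put_args(bucket: str, key: str, body: bytes, kms_key_arn: str) -> Dict[str, Any]:
    """Keyword arguments for an S3 put_object call that requests SSE-KMS."""
    return {
        "Bucket": bucket,
        "Key": key,
        "Body": body,
        # Sent as the x-amz-server-side-encryption header.
        "ServerSideEncryption": "aws:kms",
        # Sent as the x-amz-server-side-encryption-aws-kms-key-id header.
        "SSEKMSKeyId": kms_key_arn,
    }


def upload_encrypted(s3_client: Any, bucket: str, key: str, body: bytes, kms_key_arn: str) -> str:
    """Upload one object with SSE-KMS and return the encryption mode S3 reports.

    s3_client is expected to behave like boto3.client("s3").
    """
    response = s3_client.put_object(**build_sse_kms_put_args(bucket, key, body, kms_key_arn))
    # S3 echoes the applied server-side encryption in the response.
    return response["ServerSideEncryption"]
```

In a Lambda function this might be called as `upload_encrypted(boto3.client("s3"), "example-state-bucket", "orders/42.json", payload, KMS_KEY_ARN)`. Here `KMS_KEY_ARN` is a hypothetical configuration value.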

Example (HTTPS for API Gateway):

To enable HTTPS for an API Gateway endpoint:

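- API Gateway does not support unencrypted (HTTP) endpoints, so its default endpoint is already served over HTTPS. To serve the API from your own domain, configure a custom domain name with a TLS certificate (for example, one issued through AWS Certificate Manager).

- Ensure that clients call the endpoint using `https://` URLs.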

Implementing encryption at rest and in transit adds a significant layer of protection to sensitive data in serverless applications, mitigating the risk of data breaches and unauthorized access.

Monitoring and Debugging State Management

Effective monitoring and debugging are critical for maintaining the reliability, performance, and security of serverless applications, especially when they manage state. Requests in a serverless application fan out across short-lived functions and managed services. That makes tracing the flow of data and finding the root cause of an issue harder than in a single long-running process.

Without good visibility into stateful components, issues take longer to diagnose, which prolongs downtime and degrades the user experience.

Importance of Monitoring and Debugging

Monitoring and debugging are essential for serverless state management, primarily due to the distributed and ephemeral nature of serverless functions. They provide visibility into the health and performance of stateful components, enabling proactive issue detection and resolution.

- Proactive Issue Detection: Monitoring systems continuously collect data on key performance indicators (KPIs) such as latency, error rates, and resource utilization. This allows for the early identification of anomalies and potential problems before they impact users.

- Rapid Root Cause Analysis: When issues arise, debugging tools provide detailed insights into the execution of serverless functions, including logs, traces, and performance metrics. This information helps developers quickly pinpoint the root cause of problems, reducing mean time to resolution (MTTR).

- Performance Optimization: Monitoring provides valuable data for optimizing the performance of stateful components. By analyzing metrics such as database query times and cache hit ratios, developers can identify bottlenecks and improve the efficiency of their applications.

- Security Auditing: Monitoring and logging play a crucial role in security auditing. They provide an audit trail of actions performed on stateful resources, which makes it possible to detect suspicious activity and identify security vulnerabilities.

- Cost Management: Monitoring resource utilization helps identify opportunities to optimize costs. By tracking metrics like database storage and network bandwidth, developers can make informed decisions about resource allocation and cost optimization.

Strategies for Monitoring Stateful Component Performance and Health

Implementing effective monitoring strategies involves collecting and analyzing data from various sources to gain a comprehensive understanding of the performance and health of stateful components. This includes metrics, logs, and tracing data.

- Define Key Performance Indicators (KPIs): Identify the critical metrics that reflect the performance and health of stateful components. These may include:

- Latency: The time it takes to complete a request or operation.

- Error Rates: The percentage of requests that result in errors.

- Throughput: The number of requests processed per unit of time.

- Resource Utilization: Metrics such as CPU usage, memory consumption, and disk I/O.

- Cache Hit/Miss Ratio: The percentage of requests served from the cache (hit ratio) versus those that fall through to the underlying data store (miss ratio). The two sum to 100%.

- Database Query Times: The time it takes to execute database queries.

- Implement Monitoring Tools: Use specialized monitoring tools to collect, aggregate, and visualize data from stateful components. Popular choices include:

- CloudWatch (AWS): Provides comprehensive monitoring capabilities for AWS services, including Lambda functions, databases, and object storage.

- Cloud Monitoring (GCP): Offers similar monitoring features for Google Cloud services.

- Azure Monitor (Azure): Provides monitoring and diagnostic capabilities for Azure resources.

- Prometheus and Grafana: Open-source tools for collecting, storing, and visualizing metrics.

- New Relic, Datadog, and Dynatrace: Third-party monitoring platforms offering advanced features and integrations.

- Establish Alerts and Notifications: Configure alerts based on predefined thresholds for KPIs. When a metric crosses a threshold, the monitoring system should notify the relevant teams.

- Monitor Database Performance: Monitor database query times, connection pool usage, and storage capacity. Optimize database queries and schema design to improve performance. Implement database replication and backups for high availability and disaster recovery.

- Monitor Object Storage Performance: Track object storage access times, data transfer rates, and storage capacity. Optimize object storage configurations for cost and performance. Consider using Content Delivery Networks (CDNs) to improve content delivery speeds.

- Monitor Caching Mechanisms: Track cache hit/miss ratios, cache size, and cache eviction rates. Adjust cache configurations to optimize performance. Implement cache invalidation strategies to ensure data consistency.
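Managed tools such as CloudWatch or Cloud Monitoring compute these KPIs for you. Still, the arithmetic behind them and behind threshold alerts is simple enough to sketch. The Python below uses only the standard library. It derives p95 latency, error rate, throughput, and cache hit ratio from a window of hypothetical request records, then reports which KPIs breach their thresholds. The record fields, KPI names, and threshold format are assumptions for illustration.

```python
import math
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class RequestRecord:
    latency_ms: float
    error: bool
    cache_hit: bool


def percentile(values: List[float], pct: float) -> float:
    """Nearest-rank percentile: the smallest value with at least pct% of samples at or below it."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def compute_kpis(records: List[RequestRecord], window_seconds: float) -> Dict[str, float]:
    if not records:
        raise ValueError("no records in window")
    n = len(records)
    return {
        "p95_latency_ms": percentile([r.latency_ms for r in records], 95),
        "error_rate": sum(r.error for r in records) / n,
        "throughput_rps": n / window_seconds,
        "cache_hit_ratio": sum(r.cache_hit for r in records) / n,
    }


def breached(kpis: Dict[str, float], thresholds: Dict[str, Tuple[str, float]]) -> List[str]:
    """thresholds maps a KPI name to ("max", limit) or ("min", limit)."""
    alerts = []
    for name, (kind, limit) in thresholds.items():
        value = kpis[name]
        if (kind == "max" and value > limit) or (kind == "min" and value < limit):
            alerts.append(name)
    return alerts
```

The sketch tracks a high percentile rather than the average on purpose. A small share of slow state lookups, such as cache misses that fall through to the database, can hide inside a healthy-looking mean.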

Using Logging and Tracing Tools for Debugging State-Related Issues

Logging and tracing are essential tools for debugging state-related issues in serverless applications. They provide detailed insights into the execution of serverless functions and the interactions between different components.

- Implement Comprehensive Logging: Log relevant information at various points in the code, including:

- Function Invocations: Log the start and end of each function invocation, along with input parameters and return values, redacting or masking any sensitive fields.

- State Changes: Log any changes to the state of the application, such as updates to databases, object storage, or caches.

- Error Handling: Log any errors or exceptions that occur, including the error message, stack trace, and relevant context.

- Business Logic: Log key business logic operations to provide insights into the application’s behavior.

- Utilize Structured Logging: Use structured logging formats, such as JSON, to facilitate parsing and analysis of logs. This allows for easier searching, filtering, and aggregation of log data.

- Implement Distributed Tracing: Use distributed tracing tools to track the flow of requests across multiple serverless functions and services. Popular tracing tools include:

- AWS X-Ray: Provides distributed tracing for AWS services.

- Cloud Trace (GCP): Provides distributed tracing for Google Cloud services.

- Azure Application Insights: Provides distributed tracing for Azure services.

- Jaeger and Zipkin: Open-source distributed tracing systems.

- Correlate Logs and Traces: Correlate logs and traces using unique request IDs or trace IDs. This allows developers to easily trace a request through the entire system and identify the root cause of issues.

- Use Debugging Tools: Employ debugging tools, such as debuggers and profilers, to analyze the performance of serverless functions and identify bottlenecks.

- Local Debugging: Utilize local debugging tools to test and debug serverless functions locally before deploying them to the cloud.

- Remote Debugging: Use remote debugging tools to debug functions running in the cloud.

- Analyze Log and Trace Data: Analyze log and trace data to identify performance bottlenecks, errors, and other issues. Use log analysis tools to search, filter, and aggregate log data.

- Example: Debugging a Database Connection Issue: Suppose a serverless function fails to connect to a database.

- Logging: The function’s logs would reveal an error message, such as “Connection refused” or “Timeout”. The logs would also include the database connection details, such as the hostname and port.

- Tracing: A distributed trace would show the function’s interaction with the database, including the time spent on each database operation.

- Analysis: Analyzing the logs and traces would help identify the root cause of the issue, such as a misconfigured database connection string, a network connectivity problem, or a database server outage.
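The logging and correlation practices above can be sketched with Python's standard `logging` module. A formatter emits one JSON object per line, every entry carries a request ID, and extra key-value fields are merged in. The field names and helper functions are hypothetical. In AWS Lambda, `context.aws_request_id` is one natural source for the request ID; a caller-supplied correlation ID works equally well.

```python
import json
import logging
import sys
from typing import Any, Optional, TextIO


class JsonFormatter(logging.Formatter):
    """Render each log record as a single JSON line."""

    def format(self, record: logging.LogRecord) -> str:
        entry = {
            "timestamp": self.formatTime(record),
            "level": record.levelname,
            "message": record.getMessage(),
            # Correlation ID attached by log_event() below.
            "request_id": getattr(record, "request_id", None),
        }
        # Structured key-value fields attached by log_event() below.
        entry.update(getattr(record, "fields", {}))
        if record.exc_info:
            entry["exception"] = self.formatException(record.exc_info)
        # default=str keeps non-JSON values (datetimes, Decimals) from raising.
        return json.dumps(entry, default=str)


def make_logger(name: str, stream: Optional[TextIO] = None) -> logging.Logger:
    logger = logging.getLogger(name)
    if not logger.handlers:  # warm invocations reuse the logger; avoid duplicate handlers
        handler = logging.StreamHandler(stream or sys.stdout)
        handler.setFormatter(JsonFormatter())
        logger.addHandler(handler)
        logger.setLevel(logging.INFO)
        logger.propagate = False
    return logger


def log_event(logger: logging.Logger, level: int, message: str, request_id: str,
              exc_info: bool = False, **fields: Any) -> None:
    logger.log(level, message, exc_info=exc_info,
               extra={"request_id": request_id, "fields": fields})
```

Consider the database example above. Inside the `except` block, the failing call might be logged as `log_event(logger, logging.ERROR, "database connection failed", request_id, exc_info=True, host=db_host, port=db_port)`. That produces a single JSON line with the stack trace, host, port, and request ID, which can be filtered alongside the matching trace.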

Closing Notes

In conclusion, mastering strategies for handling state in serverless applications is crucial for developers seeking to leverage the benefits of this architecture. From selecting the right data storage solutions to implementing robust synchronization mechanisms and security protocols, the techniques explored provide a comprehensive roadmap for building scalable and resilient serverless systems. By understanding the nuances of state management and adopting best practices, developers can overcome the challenges of serverless computing and create applications that are both efficient and highly performant.

The adoption of these strategies is essential for unlocking the full potential of serverless and driving innovation in the modern software landscape.

Q&A

What are the key differences between stateless and stateful serverless applications?

Stateless serverless applications treat each function invocation independently, without preserving state between executions. Stateful applications, on the other hand, require mechanisms to persist and manage data across multiple invocations, often using external storage solutions like databases or object storage.

How does caching improve performance in serverless applications?

Caching reduces the latency of retrieving frequently accessed data by storing it in a fast-access location, such as the function's own memory or a distributed cache. This minimizes the need to repeatedly access slower data sources, such as databases or object storage, resulting in faster response times and reduced costs.
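As a sketch of the in-memory option, the Python below implements a cache-aside lookup with a time-to-live. The cache object would be created at module scope, so it is assumed to survive across warm invocations of the same function instance. It is lost on a cold start, however, and it is not shared between concurrent instances; a distributed cache such as Redis would be needed for that. The class and parameter names are hypothetical.

```python
import time
from typing import Any, Callable, Dict, Tuple


class TTLCache:
    """Per-instance cache-aside helper with a fixed time-to-live."""

    def __init__(self, ttl_seconds: float, clock: Callable[[], float] = time.monotonic) -> None:
        self.ttl = ttl_seconds
        self.clock = clock
        self._entries: Dict[str, Tuple[float, Any]] = {}  # key -> (expires_at, value)
        self.hits = 0
        self.misses = 0

    def get_or_load(self, key: str, loader: Callable[[str], Any]) -> Any:
        now = self.clock()
        entry = self._entries.get(key)
        if entry is not None and entry[0] > now:
            self.hits += 1
            return entry[1]
        # Miss or expired: read from the slower source and repopulate the cache.
        self.misses += 1
        value = loader(key)
        self._entries[key] = (now + self.ttl, value)
        return value

    def invalidate(self, key: str) -> None:
        # Call after writing the key to the underlying store.
        self._entries.pop(key, None)


# Module scope: reused by warm invocations of this function instance.
CACHE = TTLCache(ttl_seconds=60)
```

In a handler, a lookup might read `product = CACHE.get_or_load(product_id, load_product_from_db)`, where `load_product_from_db` is a hypothetical database call. The TTL bounds how stale a cached value can be. Calling `invalidate` after a write shortens that window, but only for the current instance.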

What is idempotency, and why is it important in serverless functions?

Idempotency means that executing a function multiple times with the same input has the same effect as executing it once. This is critical in serverless environments. Invocations can be retried after timeouts, network issues, or other failures, and some event sources may deliver the same event more than once. Idempotent functions prevent duplicate side effects, such as charging a customer twice, and help keep stored state consistent.
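A common way to make a handler idempotent works in two steps. First, record an idempotency key (for example, an order ID or a client-supplied request ID) with a conditional write before doing the work. Then, on retries, return the stored result. The Python sketch below uses an in-memory, lock-protected dictionary as a stand-in for a durable table. In production the claim would typically be a conditional write to a database, such as a DynamoDB put with an `attribute_not_exists` condition. All names are hypothetical.

```python
import threading
from typing import Any, Callable, Dict, Optional

IN_PROGRESS = "IN_PROGRESS"


class IdempotencyStore:
    """In-memory stand-in for a durable key-value table with conditional writes."""

    def __init__(self) -> None:
        self._items: Dict[str, Any] = {}
        self._lock = threading.Lock()

    def put_if_absent(self, key: str, value: Any) -> bool:
        # Atomic "create only if missing", like a conditional database write.
        with self._lock:
            if key in self._items:
                return False
            self._items[key] = value
            return True

    def set(self, key: str, value: Any) -> None:
        with self._lock:
            self._items[key] = value

    def delete(self, key: str) -> None:
        with self._lock:
            self._items.pop(key, None)

    def get(self, key: str) -> Optional[Any]:
        with self._lock:
            return self._items.get(key)


def run_once(key: str, store: IdempotencyStore, action: Callable[[], Any]) -> Any:
    """Run action only if no earlier call has claimed key; repeats get the recorded result.

    A duplicate that arrives while the first call is still running receives
    IN_PROGRESS and should retry later.
    """
    if not store.put_if_absent(key, IN_PROGRESS):
        return store.get(key)
    try:
        result = action()
    except Exception:
        # Release the claim so a later retry can attempt the work again.
        store.delete(key)
        raise
    store.set(key, result)
    return result
```

Suppose `run_once("order-42", store, charge_card)` is retried after a timeout, where `charge_card` is a hypothetical payment call. The retry returns the first charge's result instead of charging twice. Real stores usually also expire keys after a retention period, which this sketch omits.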

How do serverless frameworks simplify state management?