Modern application architectures sit at the intersection of innovation and security. Microservices have changed how we build and deploy applications, but they have also introduced new challenges in securing these distributed environments. This guide examines the role service meshes play in enforcing security policies.

A service mesh adds a dedicated infrastructure layer that provides mechanisms for authentication, authorization, encryption, and network policy enforcement. We will examine the core concepts, practical implementation, and advanced features, so that you can safeguard your applications effectively. We will also cover the benefits of centralized policy management and how to integrate a service mesh with existing security tools to achieve a comprehensive security posture.

Introduction to Service Mesh Security

Modern application architectures, particularly those built with microservices, present unique security challenges. A service mesh emerges as a critical component in addressing these complexities, providing a dedicated infrastructure layer for managing service-to-service communication and enforcing security policies. This introduction explores the core concepts of service meshes, highlights common security vulnerabilities in microservices, and demonstrates how service meshes enhance security compared to traditional approaches.

Fundamental Concepts of a Service Mesh

A service mesh is an infrastructure layer that handles service-to-service communication. It is designed to manage and control traffic between microservices, providing features such as traffic management, observability, and security. The core component of a service mesh is the sidecar proxy, which is deployed alongside each service instance. This proxy intercepts all network traffic to and from the service, allowing the service mesh to control and monitor the communication. Together, these proxies form the data plane, while a separate control plane configures them and distributes policies and certificates.
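The interception idea can be modeled in a few lines of Python. This is a toy, in-process sketch, not how Envoy or linkerd2-proxy are built: real sidecars sit on the network path (typically via iptables redirection) rather than wrapping a function. `Sidecar` and `orders_app` are hypothetical names.

```python
import time
from collections import defaultdict
from typing import Callable, Dict, List


class Sidecar:
    """Toy sidecar: every call to the wrapped service goes through handle(),
    so the proxy can observe traffic without the service's code changing."""

    def __init__(self, service_name: str, app: Callable[[str, str], int]):
        self.service_name = service_name
        self.app = app  # the unmodified application handler
        self.request_counts: Dict[str, int] = defaultdict(int)
        self.latencies_ms: List[float] = []

    def handle(self, caller: str, path: str) -> int:
        # Intercept: record who is calling before forwarding to the app.
        self.request_counts[caller] += 1
        start = time.perf_counter()
        status = self.app(caller, path)  # forward to the local service
        self.latencies_ms.append((time.perf_counter() - start) * 1000)
        return status


def orders_app(caller: str, path: str) -> int:
    # The application knows nothing about the proxy in front of it.
    return 200 if path.startswith("/orders") else 404


proxy = Sidecar("orders", orders_app)
print(proxy.handle("frontend", "/orders/42"))  # 200
print(proxy.handle("frontend", "/admin"))      # 404
print(dict(proxy.request_counts))              # {'frontend': 2}
```

Because the proxy owns the call path, the same hook is where a real mesh adds encryption, identity checks, and policy enforcement.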

Common Security Challenges in Microservices Environments

Microservices architectures introduce several security challenges that traditional security approaches struggle to address. These challenges stem from the distributed nature of microservices and the increased complexity of managing a large number of independent services. The following are some of the key security concerns:

- Increased Attack Surface: With numerous microservices, each with its own set of dependencies and potential vulnerabilities, the attack surface expands significantly.

- Service-to-Service Communication Security: Securing communication between services is critical. Without proper measures, sensitive data can be exposed during transit.

- Authentication and Authorization Complexity: Managing authentication and authorization across a distributed environment becomes challenging. Ensuring that services only access authorized resources requires robust mechanisms.

- Observability and Monitoring: Gaining visibility into service interactions is crucial for detecting and responding to security incidents. Traditional monitoring tools may not be sufficient in a microservices environment.

- Compliance and Policy Enforcement: Adhering to security policies and compliance requirements can be complex in a dynamic microservices environment.

How a Service Mesh Enhances Security Compared to Traditional Methods

Service meshes provide several advantages over traditional security approaches in microservices environments. They offer a centralized and consistent way to enforce security policies, manage traffic, and monitor service interactions. Here are some of the key security enhancements:

- Mutual TLS (mTLS) Encryption: Service meshes can automatically encrypt all service-to-service communication using mTLS. This ensures that all traffic is encrypted in transit, protecting against eavesdropping and man-in-the-middle attacks.

- Identity-Based Authentication and Authorization: Service meshes can manage service identities and enforce fine-grained access control policies. This allows organizations to define which services can access which resources, reducing the risk of unauthorized access.

- Centralized Policy Enforcement: Security policies can be defined and enforced centrally, ensuring consistency across all services. This simplifies security management and reduces the risk of misconfiguration.

- Improved Observability and Monitoring: Service meshes provide detailed visibility into service interactions, including traffic patterns, latency, and error rates. This enables organizations to detect and respond to security incidents more quickly.

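- Simplified Security Updates: The mesh’s own components (the proxies and the control plane) can be upgraded independently of the services they manage. Fixes to the proxy or its TLS stack can therefore be rolled out without changing application code, which shortens the window in which known vulnerabilities remain exploitable.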

Understanding Security Policies in a Service Mesh

Service meshes significantly enhance the security posture of microservices architectures by providing a dedicated infrastructure layer for managing and enforcing security policies. This centralized approach allows for consistent security across all services, simplifying operations and reducing the attack surface. Understanding the types of security policies and how they are implemented is crucial for leveraging the full security benefits of a service mesh.

Types of Security Policies

Service meshes offer a range of security policies to protect inter-service communication and overall application security. These policies address various aspects of security, from verifying the identity of services to ensuring data confidentiality.

- Authentication: This policy verifies the identity of services attempting to communicate, so that later checks can decide what each caller is allowed to do. Authentication is typically achieved through mutual Transport Layer Security (mTLS), where both the client and server present certificates to each other to verify their identity.

- Authorization: Once a service is authenticated, authorization policies determine what resources and actions the service is permitted to access. This often involves defining roles and permissions to restrict access based on the principle of least privilege. Policies can be based on attributes like the requesting service, the target service, or the specific path being accessed.

- Traffic Encryption: Encryption policies ensure that all communication between services is encrypted in transit. This protects sensitive data from eavesdropping. mTLS is commonly used to encrypt traffic, creating a secure channel between services.

- Rate Limiting: Rate limiting policies control the number of requests a service will accept within a specific time period. This helps mitigate denial-of-service (DoS) attacks and protects services from being overwhelmed by excessive traffic.

- Access Control Lists (ACLs): ACLs define which services are allowed to communicate with each other. This provides fine-grained control over network traffic and helps to isolate services, limiting the impact of a security breach.

- Identity Management: This encompasses the creation, management, and revocation of service identities. It ensures that each service has a unique and verifiable identity, crucial for authentication and authorization.
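Of these policies, rate limiting is the easiest to show as an algorithm. Proxies commonly implement it with a token bucket (Envoy's local rate limit filter, for instance, is configured with one). The following is a minimal Python sketch of the technique, not any proxy's actual code; `TokenBucket` and its parameters are hypothetical.

```python
import time
from typing import Callable


class TokenBucket:
    """Token bucket: holds up to `capacity` tokens, refilled at `rate`
    tokens per second; each admitted request spends one token."""

    def __init__(self, capacity: int, rate: float,
                 clock: Callable[[], float] = time.monotonic):
        self.capacity = capacity
        self.rate = rate
        self.clock = clock
        self.tokens = float(capacity)  # start full so short bursts pass
        self.last = clock()

    def allow(self) -> bool:
        now = self.clock()
        # Refill in proportion to elapsed time, never above capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False  # a proxy would typically answer HTTP 429 here


# Usage with a fake clock so the behaviour is deterministic.
fake_now = [0.0]
bucket = TokenBucket(capacity=3, rate=1.0, clock=lambda: fake_now[0])
print([bucket.allow() for _ in range(4)])  # [True, True, True, False]
fake_now[0] = 1.0                          # one second later: one token refilled
print(bucket.allow())                      # True
```

The capacity bounds the burst a caller can send at once, while the refill rate bounds its sustained throughput.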

Service-to-Service Policy Enforcement

Service meshes enforce security policies at the service-to-service level, transparently intercepting and managing all network traffic. This enforcement mechanism operates independently of the application code, ensuring consistent security regardless of the programming language or framework used by individual services.

Here’s how these policies are enforced:

- Sidecar Proxies: Each service instance is deployed alongside a sidecar proxy (e.g., Envoy in Istio, or linkerd2-proxy in Linkerd). These proxies intercept all inbound and outbound traffic for the service.

- Policy Configuration: Security policies are defined and configured centrally within the service mesh control plane. This configuration is then propagated to the sidecar proxies.

- Traffic Interception and Enforcement: When a service attempts to communicate with another, the sidecar proxies on both ends intercept the traffic. The proxies authenticate each other, and the destination (server-side) proxy typically evaluates the authorization policies for the incoming request. If the checks pass, the traffic is allowed to proceed; otherwise, it is rejected.

- mTLS Implementation: For mTLS, the sidecar proxies automatically handle the encryption and decryption of traffic, as well as the certificate exchange. This ensures that all communication is encrypted by default.
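The propagation step can be pictured as a versioned push from the control plane to its proxies. Real meshes use their own protocols for this (Istio's istiod, for example, serves Envoy configuration over xDS); the Python classes below are hypothetical and only sketch the shape: policy is written once, pushed out, and enforced by the destination proxy.

```python
from dataclasses import dataclass, field
from typing import Dict, FrozenSet, List


@dataclass
class Proxy:
    """A sidecar holding the latest policy snapshot it has received."""
    workload: str
    version: int = 0
    allowed_callers: FrozenSet[str] = frozenset()

    def receive(self, version: int, allowed: FrozenSet[str]) -> None:
        # Ignore stale pushes so an older snapshot never overwrites a newer one.
        if version > self.version:
            self.version, self.allowed_callers = version, allowed

    def admit(self, caller_identity: str) -> bool:
        # Enforced by the destination proxy using the verified peer identity.
        return caller_identity in self.allowed_callers


@dataclass
class ControlPlane:
    """The single place where policy is written; every change is pushed out."""
    policies: Dict[str, FrozenSet[str]] = field(default_factory=dict)
    proxies: List[Proxy] = field(default_factory=list)
    version: int = 0

    def set_policy(self, workload: str, allowed: set) -> None:
        self.policies[workload] = frozenset(allowed)
        self.version += 1
        for p in self.proxies:  # push the new snapshot to each affected proxy
            if p.workload == workload:
                p.receive(self.version, self.policies[workload])


cp = ControlPlane()
backend = Proxy("backend")
cp.proxies.append(backend)
cp.set_policy("backend", {"frontend"})
print(backend.version, backend.admit("frontend"), backend.admit("batch"))  # 1 True False
```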

Advantages of Centralized Policy Management

A centralized policy management system within a service mesh offers several advantages over decentralized approaches. This centralization streamlines security operations, improves consistency, and reduces the risk of misconfiguration.

- Consistency: Centralized policy management ensures that security policies are consistently applied across all services. This eliminates inconsistencies that can arise when policies are managed individually by each service.

- Simplified Operations: Managing security policies centrally simplifies operations and reduces the overhead associated with security administration. Updates and changes to policies can be made in one place and automatically propagated to all services.

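- Reduced Attack Surface: Centralized policy management narrows the gaps that per-service configuration leaves behind, because every service receives the same vetted rules from a single point of control. This also makes it easier to detect and respond to security threats, although the control plane itself becomes a high-value target that must be protected.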

- Auditability: Centralized policy management provides a clear audit trail of security policies and their enforcement. This simplifies compliance efforts and provides valuable insights into security posture.

- Scalability: Service meshes are designed to scale with the application. Centralized policy management allows the security policies to scale with the application, providing consistent security as the application grows.

Authentication and Authorization with Service Mesh

Authentication and authorization are fundamental to securing any distributed system. A service mesh provides a powerful platform for implementing these security controls, ensuring that only authorized services can communicate with each other and that all communication is authenticated. This section explores how service meshes enable robust authentication and authorization mechanisms.

Implementing Mutual TLS (mTLS) for Secure Communication

Mutual TLS (mTLS) is a critical security mechanism that provides strong authentication and encryption for communication between services. It ensures that both the client and the server verify each other’s identities using digital certificates, preventing man-in-the-middle attacks and eavesdropping. To implement mTLS within a service mesh, consider these steps:

- Certificate Authority (CA) Setup: A trusted CA is essential for issuing and managing the digital certificates used for mTLS. The service mesh typically includes its own built-in CA, or you can integrate with an existing CA. The CA’s root certificate is distributed to all services within the mesh, allowing them to verify the authenticity of other service certificates.

- Service Certificate Issuance: Each service within the mesh receives a digital certificate from the CA. This certificate contains the service’s identity and public key. The service mesh automatically handles certificate issuance and renewal, simplifying the management overhead.

- mTLS Configuration: The service mesh’s configuration defines how mTLS is enforced. This involves specifying which services require mTLS for inbound and outbound traffic. The mesh intercepts all service-to-service communication and enforces mTLS policies.

- Traffic Encryption: When two services communicate, their sidecar proxies automatically establish an encrypted TLS connection on their behalf. The server-side proxy presents its certificate, which the client-side proxy verifies against the CA’s root certificate; the client-side proxy then presents its own certificate, which the server verifies the same way.

- Policy Enforcement: The service mesh can enforce policies that mandate mTLS for all communication, or it can allow exceptions based on specific service configurations or traffic patterns. For instance, you might require mTLS for sensitive data transfers while allowing plain text communication for less critical services. Istio calls this mixed behavior permissive mode and intends it mainly for migrating workloads onto the mesh.

The exact configuration depends on the mesh. Linkerd, for example, enables mTLS automatically for traffic between meshed pods, while Istio enforces it through a `PeerAuthentication` resource whose `mtls.mode` is set to `STRICT`. In either case, the service mesh handles the complexities of certificate management, key exchange, and encryption, allowing developers to focus on application logic.
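Under the hood, each sidecar configures TLS roughly as in the following Python sketch, which uses the standard `ssl` module: the server side requires a client certificate, and both sides trust only the mesh CA rather than the system trust store. The function names and file paths are hypothetical placeholders; in a real mesh the proxy receives its certificate and key from the control plane and the application never handles them.

```python
import ssl
from typing import Optional


def server_context(ca_file: Optional[str] = None, cert_file: Optional[str] = None,
                   key_file: Optional[str] = None) -> ssl.SSLContext:
    """Server side of mTLS: present our certificate AND require one from the peer."""
    ctx = ssl.SSLContext(ssl.PROTOCOL_TLS_SERVER)  # loads no CAs by default
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    ctx.verify_mode = ssl.CERT_REQUIRED  # reject clients without a valid cert
    if ca_file:
        ctx.load_verify_locations(cafile=ca_file)  # trust only the mesh CA
    if cert_file and key_file:
        ctx.load_cert_chain(certfile=cert_file, keyfile=key_file)
    return ctx


def client_context(ca_file: Optional[str] = None, cert_file: Optional[str] = None,
                   key_file: Optional[str] = None) -> ssl.SSLContext:
    """Client side of mTLS: verify the server and present our own certificate."""
    ctx = ssl.SSLContext(ssl.PROTOCOL_TLS_CLIENT)  # CERT_REQUIRED by default
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    # Mesh identities are usually URI SANs (e.g. SPIFFE IDs), not DNS names, so
    # hostname matching is disabled; the peer identity must be checked separately
    # (not shown here). Certificate chain verification stays on.
    ctx.check_hostname = False
    if ca_file:
        ctx.load_verify_locations(cafile=ca_file)
    if cert_file and key_file:
        ctx.load_cert_chain(certfile=cert_file, keyfile=key_file)
    return ctx
```

With real files, `server_context("ca.pem", "svc.pem", "svc-key.pem").wrap_socket(sock, server_side=True)` would refuse any client that cannot present a certificate signed by that CA.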

Configuring Identity Verification within a Service Mesh

Identity verification is the process of confirming the identity of a service before allowing it to access resources. A service mesh leverages service accounts and cryptographic identities to provide robust identity verification. The process involves these steps:

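- Service Account Creation: Each service is assigned a unique service account. This account represents the service’s identity within the mesh. The service account is associated with a digital identity, typically a certificate and private key. In Istio, for example, the certificate carries this identity as a SPIFFE ID of the form `spiffe://<trust-domain>/ns/<namespace>/sa/<service-account>`.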

- Identity Propagation: When a service makes a request to another service, its sidecar presents the service’s certificate during the mTLS handshake, so the caller’s identity travels with the connection. This allows the receiving side to verify the sender’s identity.

- Identity Verification: The receiving service’s proxy verifies the sender’s identity. This typically involves checking the certificate against the CA and validating the identity against the service account.

- Access Control Decisions: Based on the verified identity, the service mesh can enforce access control policies. For example, only services with a specific identity might be allowed to access certain resources.

- Auditing and Logging: The service mesh logs all identity verification events, providing valuable information for auditing and security analysis. This allows you to track which services are accessing which resources and to detect any suspicious activity.

For example, consider a scenario where a “payments” service needs to access a “database” service. The service mesh would:

- Verify the “payments” service’s identity (e.g., by checking its certificate).

- Based on the verified identity, determine whether the “payments” service is authorized to access the “database” service.

- If authorized, allow the request to proceed; otherwise, deny access.

This approach ensures that only authorized services can access sensitive resources, mitigating the risk of unauthorized access and data breaches.
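The payments/database decision can be sketched in Python. The sketch assumes Istio-style SPIFFE identities (`spiffe://<trust-domain>/ns/<namespace>/sa/<service-account>`, with Istio's default trust domain `cluster.local`) and assumes the peer certificate was already verified during the mTLS handshake; `parse_spiffe_id`, `authorize`, and the `ALLOWED_CALLERS` table are hypothetical.

```python
from typing import Dict, NamedTuple, Optional, Set, Tuple


class Identity(NamedTuple):
    trust_domain: str
    namespace: str
    service_account: str


def parse_spiffe_id(uri: str) -> Optional[Identity]:
    """Parse spiffe://<trust-domain>/ns/<namespace>/sa/<service-account>."""
    prefix = "spiffe://"
    if not uri.startswith(prefix):
        return None
    parts = uri[len(prefix):].split("/")
    # Expect exactly: domain, "ns", namespace, "sa", account (none empty).
    if len(parts) != 5 or parts[1] != "ns" or parts[3] != "sa" or not all(parts):
        return None
    return Identity(parts[0], parts[2], parts[4])


# Hypothetical policy: (namespace, service account) pairs allowed per destination.
ALLOWED_CALLERS: Dict[str, Set[Tuple[str, str]]] = {
    "database": {("default", "payments")},
}


def authorize(peer_uri: str, destination: str, trust_domain: str = "cluster.local") -> bool:
    ident = parse_spiffe_id(peer_uri)
    if ident is None or ident.trust_domain != trust_domain:
        return False  # malformed or foreign identity: deny
    caller = (ident.namespace, ident.service_account)
    return caller in ALLOWED_CALLERS.get(destination, set())


print(authorize("spiffe://cluster.local/ns/default/sa/payments", "database"))  # True
print(authorize("spiffe://cluster.local/ns/default/sa/frontend", "database"))  # False
print(authorize("spiffe://evil.example/ns/default/sa/payments", "database"))   # False
```

Keying the allowlist on namespace as well as service account prevents a same-named account in another namespace from inheriting access.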

Designing a Procedure for Implementing Role-Based Access Control (RBAC) using a Service Mesh

Role-Based Access Control (RBAC) allows you to define and enforce access control policies based on the roles of services. This is a powerful way to manage permissions and ensure that services only have the access they need to perform their tasks. To implement RBAC using a service mesh, follow this procedure:

- Define Roles: Identify the different roles within your application (e.g., “admin,” “read-only,” “payments-processor”). Each role represents a set of permissions.

- Assign Permissions to Roles: Define the specific permissions associated with each role. For example, the “admin” role might have permission to create, read, update, and delete resources, while the “read-only” role might only have read permission.

- Map Services to Roles: Assign each service to one or more roles. This determines the level of access that the service has. For instance, the “payments” service might be assigned the “payments-processor” role.

- Configure RBAC Policies in the Service Mesh: Configure the service mesh to enforce RBAC policies. This involves defining rules that specify which roles have access to which resources. The service mesh intercepts requests and uses the service’s identity and role to determine whether to grant or deny access.

- Test and Validate: Thoroughly test the RBAC policies to ensure they are working as expected. Verify that services can only access the resources they are authorized to access.

- Regular Auditing and Review: Regularly audit and review the RBAC policies to ensure they remain effective and aligned with your security requirements.

For instance, consider a scenario with a “users” service and a “payments” service. You could define the following RBAC rules:

- The “admin” role can access both the “users” and “payments” services (create, read, update, delete).

- The “payments-processor” role can only access the “payments” service (create, read, update).

- The “users” service, mapped to the “read-only” role, can only read user data.

This approach ensures that services have the appropriate level of access based on their roles, enhancing the security posture of the application. Implementing RBAC using a service mesh allows for centralized access control management, making it easier to manage and enforce security policies across a distributed environment.
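The decision logic behind these rules can be sketched in a few lines of Python. In Istio this would be expressed with `AuthorizationPolicy` resources; the sketch below models only the evaluation, and the `admin-console` service plus the table and function names are hypothetical.

```python
from typing import Dict, Set

# Roles and their permissions per resource, following the example rules.
ROLE_PERMISSIONS: Dict[str, Dict[str, Set[str]]] = {
    "admin": {"users": {"create", "read", "update", "delete"},
              "payments": {"create", "read", "update", "delete"}},
    "payments-processor": {"payments": {"create", "read", "update"}},
    "read-only": {"users": {"read"}},
}

# Hypothetical mapping of service identities to roles.
SERVICE_ROLES: Dict[str, Set[str]] = {
    "admin-console": {"admin"},
    "payments": {"payments-processor"},
    "users": {"read-only"},
}


def is_allowed(service: str, resource: str, action: str) -> bool:
    """Grant if ANY of the caller's roles permits the action; otherwise deny."""
    for role in SERVICE_ROLES.get(service, set()):
        if action in ROLE_PERMISSIONS.get(role, {}).get(resource, set()):
            return True
    return False  # unknown services and unlisted actions are denied by default


print(is_allowed("payments", "payments", "update"))  # True
print(is_allowed("payments", "payments", "delete"))  # False
print(is_allowed("users", "users", "update"))        # False
```

Separating the role table from the service-to-role mapping means a new service gains access by being assigned an existing role, rather than by editing permissions.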

Traffic Encryption and Data Protection

Securing data in transit is a fundamental aspect of service mesh security, ensuring that communication between services remains confidential and protected from eavesdropping. Implementing encryption provides a critical layer of defense, safeguarding sensitive information as it traverses the network. This section explores how to configure end-to-end encryption within a service mesh, provides a checklist for data confidentiality, and compares different encryption methods.

Configuring End-to-End Encryption

Service meshes simplify the process of securing traffic by automating the configuration and management of encryption. In a sidecar-based mesh, end-to-end encryption means that traffic is encrypted from the source service’s proxy to the destination service’s proxy, so it is protected for its entire trip across the network; only the local hop between each application and its own proxy, over the loopback interface, remains unencrypted. This is typically achieved using mutual Transport Layer Security (mTLS). To configure end-to-end encryption, the service mesh usually handles the following:

- Automatic Certificate Management: The service mesh automatically generates and distributes TLS certificates to each service. This eliminates the need for manual certificate management, reducing operational overhead and the potential for human error.

- mTLS Enforcement: The service mesh enforces mTLS, meaning that all communication between services is encrypted and authenticated. Services are configured to require mTLS connections, ensuring that only authorized services can communicate with each other.

- Policy-Based Configuration: Encryption policies are typically defined at the service mesh level, allowing administrators to control which services communicate securely. These policies can be granular, allowing for different encryption configurations based on service identity or other criteria.

The process generally involves the following steps:

- Enable mTLS: Enable mTLS globally or for specific services within the service mesh configuration. This is often a simple setting within the service mesh control plane.

- Certificate Authority (CA) Setup: The service mesh uses a built-in CA or integrates with an existing CA to issue and manage certificates. Ensure the CA is properly configured and trusted.

- Service Deployment: Deploy services into the service mesh. When sidecar injection is enabled for the namespace or workload, the service mesh automatically injects a sidecar proxy into each service pod, and that proxy handles encryption and decryption.

- Policy Enforcement: Configure policies to enforce mTLS between services. This might involve setting the “strict” mode, which only allows mTLS connections.

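For example, Istio enables automatic mTLS by default but, out of the box, still accepts plaintext traffic (permissive mode). To enforce mTLS, you apply a `PeerAuthentication` resource with `mtls.mode` set to `STRICT`, either mesh-wide in the Istio root namespace (`istio-system` by default) or scoped to a single namespace or workload. Once strict mode is in place, sidecars reject plaintext connections, so all traffic between meshed services is encrypted.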
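Such a resource is normally written as YAML. To stay in one language, the sketch below builds the same manifest as a Python dict and prints it as JSON, which `kubectl apply -f` also accepts. The function name and the `make_policy.py` filename are hypothetical; the `security.istio.io/v1beta1` API version is used here, and newer Istio releases also serve `security.istio.io/v1`.

```python
import json


def strict_mtls_policy(namespace: str = "istio-system") -> dict:
    """PeerAuthentication that only accepts mTLS traffic.

    In Istio's root namespace (istio-system by default) it applies mesh-wide;
    in any other namespace it applies only to that namespace.
    """
    return {
        "apiVersion": "security.istio.io/v1beta1",
        "kind": "PeerAuthentication",
        "metadata": {"name": "default", "namespace": namespace},
        "spec": {"mtls": {"mode": "STRICT"}},
    }


# Example workflow (hypothetical file names):
#   python make_policy.py > strict.json && kubectl apply -f strict.json
print(json.dumps(strict_mtls_policy(), indent=2))
```

Generating manifests from code like this also fits the Infrastructure as Code approach recommended for network policies.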

Ensuring Data Confidentiality in Transit: A Checklist

Data confidentiality is critical for protecting sensitive information. The following checklist provides a structured approach to ensure data confidentiality in transit within a service mesh.

- Enable mTLS: Ensure that mTLS is enabled and enforced for all service-to-service communication. This is the foundation of secure communication.

- Use Strong Cipher Suites: Configure the service mesh to use strong and modern TLS cipher suites. This helps protect against known vulnerabilities. Avoid using weak or deprecated ciphers.

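- Regular Certificate Rotation: Implement automated certificate rotation to minimize the risk of compromised certificates. Service meshes typically issue short-lived workload certificates (Istio and Linkerd both default to 24-hour lifetimes) and renew them automatically. Keep these lifetimes short: a leaked short-lived certificate is useful to an attacker only briefly.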

- Monitor Certificate Expiration: Monitor certificate expiration dates to prevent service disruptions. Implement alerts to notify administrators before certificates expire.

- Restrict Traffic Access: Use network policies to restrict traffic access to only authorized services. This limits the potential attack surface.

- Implement Data Encryption at Rest: While the focus is on data in transit, consider encrypting data at rest within your services and databases to provide an additional layer of security.

- Regular Security Audits: Conduct regular security audits to identify and address any vulnerabilities in the encryption configuration.

- Compliance with Regulations: Ensure that your encryption configuration complies with relevant industry regulations and standards (e.g., GDPR, HIPAA).

- Use of Service Mesh Features: Leverage features of your service mesh, such as request tracing and access logging, to monitor encrypted traffic and detect anomalies.

- Least Privilege Principle: Apply the principle of least privilege to service identities, ensuring that services only have access to the resources they need.
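Two items in this checklist, certificate rotation and expiry monitoring, come down to comparing a certificate’s `notAfter` time with the current time. A minimal Python sketch using the standard library’s `ssl.cert_time_to_seconds`; the function names and the warning threshold are hypothetical, and for mesh certificates that live about a day the threshold would be measured in hours rather than days.

```python
import ssl

DAY = 86_400  # seconds


def seconds_left(not_after: str, now: float) -> float:
    """Time remaining on a certificate, given its notAfter field in the format
    that ssl.SSLSocket.getpeercert() reports, e.g. 'Jan 31 00:00:00 2030 GMT'."""
    return ssl.cert_time_to_seconds(not_after) - now


def needs_alert(not_after: str, now: float, warn_seconds: float = 7 * DAY) -> bool:
    # Hypothetical policy: alert when less than `warn_seconds` of validity remain.
    return seconds_left(not_after, now) < warn_seconds


# In production `now` would be time.time(); a fixed value keeps this repeatable.
now = ssl.cert_time_to_seconds("Jan 21 00:00:00 2030 GMT")
print(seconds_left("Jan 31 00:00:00 2030 GMT", now) / DAY)  # 10.0
print(needs_alert("Jan 31 00:00:00 2030 GMT", now))          # False
print(needs_alert("Jan 25 00:00:00 2030 GMT", now))          # True
```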

Comparing Encryption Methods

Service meshes support various encryption methods, each with its own characteristics and trade-offs. Understanding these differences is crucial for selecting the most appropriate method for a given use case. The following table compares common encryption methods supported by service meshes.

| Encryption Method | Description | Advantages | Disadvantages |
|---|---|---|---|
| mTLS (Mutual TLS) | Uses TLS to encrypt traffic between services and authenticates both client and server. | Provides end-to-end encryption, strong authentication, and is generally easy to configure within a service mesh. | Requires certificate management and can introduce slight performance overhead. |
| TLS with Custom Certificates | Uses TLS with certificates managed outside the service mesh. | Allows for integration with existing certificate infrastructure and potentially offers greater control over certificate management. | Requires manual configuration and management of certificates, increasing operational complexity. |
| TLS with Sidecar Injection | Uses TLS and sidecar proxies to handle encryption and decryption. | Simplifies TLS configuration and management for applications, making it easier to secure traffic. | Can introduce latency and require additional resources for the sidecar proxies. |
| WireGuard (or other VPN) | Uses a VPN technology to encrypt all traffic between services. | Provides strong encryption and is suitable for protecting traffic across different networks or environments. | Can be more complex to configure and manage than mTLS, and may introduce additional latency. |

Implementing Network Policies

Network policies are a crucial component of service mesh security, enabling granular control over service-to-service communication. They act as a firewall for your microservices, defining which services can communicate with each other and how. This level of control is essential for minimizing the attack surface and preventing lateral movement in the event of a security breach.

Creating Network Policies to Restrict Service-to-Service Communication

Network policies are defined to control the flow of traffic between services within the service mesh. These policies specify the allowed communication patterns, restricting access to only necessary services. They typically operate at Layer 3 and Layer 4 of the OSI model, using information like IP addresses, ports, and protocols to enforce rules. Here’s how network policies are generally implemented:

- Policy Definition: Policies are defined using declarative configuration files, often in YAML format, specifying the allowed ingress and egress traffic for each service.

- Policy Enforcement: The service mesh control plane (for example, istiod in Istio, or the Linkerd control plane) uses these policy definitions to configure the sidecar proxies deployed alongside each service. These proxies intercept traffic and enforce the defined rules.

- Rule Specification: Rules typically specify the source and destination services, the allowed ports, and the protocols. More advanced policies might include matching on HTTP headers or other application-level data.

- Policy Application: Once defined, the policies are applied to the service mesh, and the sidecar proxies begin enforcing the rules. Any traffic that doesn’t match the defined policies is typically dropped.

For example, consider a scenario with three services: `frontend`, `backend`, and `database`. A network policy could be defined to allow the `frontend` service to communicate with the `backend` service on port 8080, and the `backend` service to communicate with the `database` service on port 5432. Any other communication attempts would be blocked. This approach drastically reduces the risk of unauthorized access and data breaches.
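The matching logic of this example can be sketched in Python as a default-deny allowlist. In Kubernetes the rules themselves would be written as `NetworkPolicy` objects or mesh-specific authorization resources; this sketch only models how a connection is checked against them, and `Rule`, `RULES`, and `permitted` are hypothetical names.

```python
from typing import NamedTuple, Set


class Rule(NamedTuple):
    source: str
    destination: str
    port: int
    protocol: str = "TCP"


# Allowlist from the example; anything not listed is denied.
RULES: Set[Rule] = {
    Rule("frontend", "backend", 8080),
    Rule("backend", "database", 5432),
}


def permitted(source: str, destination: str, port: int, protocol: str = "TCP") -> bool:
    # Default deny: a connection passes only if an exact rule matches it.
    return Rule(source, destination, port, protocol) in RULES


print(permitted("frontend", "backend", 8080))   # True
print(permitted("frontend", "database", 5432))  # False: frontend must go via backend
print(permitted("backend", "database", 22))     # False: wrong port
```

Rules are directional: allowing `backend` to reach `database` does not let `database` open connections back to `backend`.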

Zero-Trust Network Architecture within a Service Mesh Context

A zero-trust network architecture operates under the principle of “never trust, always verify.” This means that no user or service is inherently trusted, regardless of their location inside or outside the network perimeter. In a service mesh, this principle is implemented through a combination of authentication, authorization, and network policies. The service mesh enforces zero-trust by:

- Authentication: Verifying the identity of every service requesting access to another. This is often achieved using mutual TLS (mTLS), where both services authenticate each other.

- Authorization: Determining whether a service is allowed to access a particular resource or service based on its identity, its role, and the requested action.

- Network Policies: Restricting communication to only authorized services and defining the allowed traffic patterns. This prevents unauthorized access and limits the impact of a compromised service.

- Continuous Monitoring: Regularly monitoring and auditing all service interactions to detect and respond to security threats.

In essence, the service mesh acts as the enforcement point for the zero-trust model. Every request is authenticated, authorized, and subject to network policies before it is allowed to proceed. This drastically reduces the attack surface and enhances overall security.
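A minimal Python sketch of that per-request gate follows. It chains the three checks and fails closed at the first failure; note that the source IP is never consulted, so being "inside" the network grants nothing. The trust domain, the `AUTHZ` and `PORTS` tables, and the `Request` fields are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

TRUSTED_DOMAIN = "cluster.local"  # hypothetical mesh trust domain


@dataclass
class Request:
    source_ip: str
    peer_identity: Optional[str]  # from the verified mTLS certificate, if any
    destination: str
    port: int
    method: str


# Hypothetical policies: allowed methods per (caller, destination); allowed ports.
AUTHZ = {("spiffe://cluster.local/ns/default/sa/frontend", "backend"): {"GET", "POST"}}
PORTS = {"backend": {8080}}


def evaluate(req: Request) -> Tuple[bool, str]:
    """Run every check in sequence; the first failure denies (fail closed).
    source_ip is deliberately ignored: network location confers no trust."""
    if not req.peer_identity or not req.peer_identity.startswith(f"spiffe://{TRUSTED_DOMAIN}/"):
        return False, "unauthenticated"
    if req.port not in PORTS.get(req.destination, set()):
        return False, "blocked by network policy"
    if req.method not in AUTHZ.get((req.peer_identity, req.destination), set()):
        return False, "not authorized"
    return True, "allowed"


frontend = "spiffe://cluster.local/ns/default/sa/frontend"
print(evaluate(Request("10.0.0.5", None, "backend", 8080, "GET")))         # (False, 'unauthenticated')
print(evaluate(Request("10.0.0.5", frontend, "backend", 8080, "DELETE")))  # (False, 'not authorized')
print(evaluate(Request("10.0.0.5", frontend, "backend", 8080, "GET")))     # (True, 'allowed')
```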

Best Practices for Defining and Enforcing Network Policies

Defining and enforcing effective network policies requires careful planning and consideration. Adhering to best practices is essential for maximizing security benefits. Here are some key best practices:

- Start with a “Deny All” Policy: Begin by creating a default network policy that denies all traffic. This ensures that no communication is allowed until explicitly permitted.

- Define Granular Policies: Create policies that are specific and tailored to the needs of each service. Avoid overly broad policies that allow unnecessary communication.

- Use Labels and Selectors: Leverage labels and selectors to group services and apply policies to multiple services simultaneously. This simplifies policy management and reduces the risk of errors.

- Regularly Review and Update Policies: Network policies should be reviewed and updated regularly to reflect changes in the application architecture and security requirements.

- Implement Least Privilege: Grant services only the minimum necessary permissions. This reduces the potential impact of a security breach.

- Monitor Policy Enforcement: Monitor the enforcement of network policies to ensure that they are working as expected and to identify any potential issues. Use logging and monitoring tools to track policy violations.

- Test Policies Thoroughly: Before deploying policies to production, test them in a staging environment to ensure that they are working correctly and do not disrupt application functionality.

- Automate Policy Management: Automate the process of defining, deploying, and managing network policies to reduce the risk of human error and improve efficiency. Use Infrastructure as Code (IaC) tools to manage policies.

By following these best practices, organizations can create and maintain a robust network policy framework that effectively protects their microservices and supports a zero-trust security posture.
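The “Use Labels and Selectors” practice relies on label matching. The Python sketch below mirrors Kubernetes `matchLabels` semantics (every key/value pair in the selector must be present on the workload, and an empty selector matches everything); the workload names and labels are hypothetical.

```python
from typing import Dict, List

Labels = Dict[str, str]


def matches(selector: Labels, labels: Labels) -> bool:
    """matchLabels semantics: every key/value in the selector must be present.
    An empty selector matches every workload."""
    return all(labels.get(k) == v for k, v in selector.items())


def select(selector: Labels, workloads: Dict[str, Labels]) -> List[str]:
    return sorted(name for name, labels in workloads.items() if matches(selector, labels))


workloads = {
    "payments-v1": {"app": "payments", "tier": "backend"},
    "payments-v2": {"app": "payments", "tier": "backend"},
    "web": {"app": "web", "tier": "frontend"},
}
# One policy selecting {"tier": "backend"} covers both payments versions,
# and any new replica carrying the same labels is covered automatically.
print(select({"tier": "backend"}, workloads))  # ['payments-v1', 'payments-v2']
```

Because policies follow labels rather than individual names, a mislabeled workload silently falls outside them; this is one reason the testing and monitoring practices above matter.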

Observability and Monitoring for Security

Effective security in a service mesh hinges on robust observability and monitoring capabilities. These tools provide critical insights into the behavior of your services, enabling you to detect and respond to security incidents proactively. Without proper monitoring, you’re essentially flying blind, unable to identify and mitigate potential threats. This section covers how service mesh monitoring tools work, which security metrics to track, and how to set up alerts for security anomalies.

Detecting and Responding to Security Incidents

Service mesh monitoring tools act as a central nervous system for your application, providing real-time visibility into network traffic, service interactions, and security events. These tools collect and analyze data from various sources, including service mesh proxies, logs, and metrics, to provide a comprehensive view of your system’s security posture. This visibility allows security teams to quickly identify and respond to incidents. The process typically involves:

- Data Collection: Service mesh proxies, such as Envoy (used by Istio) or Linkerd’s linkerd2-proxy, intercept all traffic flowing between services and emit metrics, access logs, and trace spans describing it. This telemetry is then aggregated and sent to monitoring and logging systems.

- Data Analysis: Monitoring tools analyze the collected data to identify patterns, anomalies, and potential security threats. Approaches range from simple threshold rules to statistical or machine-learning models that flag unusual behavior.

- Alerting: When a potential security incident is detected, the monitoring system triggers alerts, notifying security teams of the issue. These alerts can be sent via email, Slack, or other communication channels.

- Incident Response: Security teams use the information provided by the monitoring tools to investigate the incident, identify the root cause, and take appropriate action to mitigate the threat. This might involve blocking malicious traffic, isolating compromised services, or patching vulnerabilities.

For example, a sudden spike in traffic to a specific service, accompanied by a rise in 403 Forbidden responses, could indicate a denial-of-service attempt or repeated unauthorized access attempts. The monitoring system would alert the security team, who could then investigate and implement mitigation strategies.
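That heuristic can be sketched as a rule over one observation window. In practice the counts would come from proxy metrics (Istio, for instance, exports `istio_requests_total` with a `response_code` label); here they are plain numbers, and the spike factor and 403 ratio are hypothetical thresholds to tune against your own baseline.

```python
from dataclasses import dataclass


@dataclass
class Window:
    requests: int   # total requests observed in the window
    forbidden: int  # responses with HTTP 403


def suspicious(current: Window, baseline_rps: float, window_s: float,
               spike_factor: float = 3.0, max_403_ratio: float = 0.2) -> bool:
    """Flag a window when traffic is well above baseline AND a large share of
    requests are being refused with 403. Thresholds are hypothetical."""
    rps = current.requests / window_s
    ratio_403 = current.forbidden / current.requests if current.requests else 0.0
    return rps > spike_factor * baseline_rps and ratio_403 > max_403_ratio


print(suspicious(Window(requests=6000, forbidden=2400), baseline_rps=20, window_s=60))  # True
print(suspicious(Window(requests=6000, forbidden=30), baseline_rps=20, window_s=60))    # False
```

Requiring both signals reduces false alarms: a legitimate traffic surge rarely comes with a high share of 403s, and a few denied requests at normal volume are often just misconfiguration.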

Security-Related Metrics to Monitor

Monitoring the right metrics is crucial for effective security. These metrics provide insights into various aspects of your service mesh’s security posture, enabling you to identify and respond to potential threats. Here are some key security-related metrics to monitor:

- Authentication and Authorization Failures: Track the number of failed authentication and authorization attempts. A high number of failures could indicate an attempt to brute-force credentials or unauthorized access attempts.

- Traffic Volume and Patterns: Monitor traffic volume and patterns to detect anomalies, such as sudden spikes in traffic or unusual communication between services.

- Error Rates: Monitor the rate of HTTP errors (4xx and 5xx responses). An increase in errors can indicate issues like misconfigured services, malicious requests, or denial-of-service attempts.

- Latency: Monitor the latency of service-to-service communication. Increased latency can indicate performance bottlenecks, network issues, or potential denial-of-service attacks.

- Policy Violations: Track the number of policy violations, such as attempts to access restricted resources or traffic that does not conform to defined policies.

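- TLS/SSL Certificate Expiry: Monitor the expiration dates of TLS/SSL certificates to ensure that services are always using valid and secure certificates. Meshes usually rotate short-lived workload certificates automatically, so also track root and intermediate CA certificates, ingress gateway certificates, and any workload certificate that nears expiry without being renewed (a sign that rotation is failing).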

- Access Logs: Review access logs for suspicious activity, such as unauthorized access attempts, unusual user behavior, and potential data breaches.
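Several of these metrics can be derived directly from proxy access logs. This is a minimal sketch, assuming each log entry has already been parsed into a hypothetical `LogRecord` with a source, an HTTP status, and a duration; real Envoy and Istio field names depend on the access-log format you configure. It counts 401/403 responses per source, computes 4xx and 5xx rates, and reports a nearest-rank 95th-percentile latency.

```python
import math
from collections import Counter
from dataclasses import dataclass


@dataclass
class LogRecord:
    # Hypothetical, simplified access-log entry; map your real log fields onto it.
    source: str         # calling workload identity or client IP
    status: int         # HTTP response code
    duration_ms: float  # request latency


def security_metrics(records: list[LogRecord]) -> dict:
    """Summarize authentication failures, error rates, and tail latency."""
    total = len(records)
    if total == 0:
        return {"total": 0}
    auth_failures = Counter(r.source for r in records if r.status in (401, 403))
    client_errors = sum(1 for r in records if 400 <= r.status < 500)
    server_errors = sum(1 for r in records if 500 <= r.status < 600)
    latencies = sorted(r.duration_ms for r in records)
    p95 = latencies[math.ceil(0.95 * total) - 1]  # nearest-rank percentile
    return {
        "total": total,
        "auth_failures_by_source": dict(auth_failures),
        "client_error_rate": client_errors / total,
        "server_error_rate": server_errors / total,
        "p95_latency_ms": p95,
    }
```

Grouping authentication failures by source is what makes the brute-force scenario below detectable: a single source accumulating 401/403 responses stands out even when the overall error rate barely moves.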

Consider a scenario where a monitoring system detects a sudden increase in failed authentication attempts against a sensitive service. This metric, combined with other indicators like increased error rates, would trigger an alert, prompting the security team to investigate a possible brute-force attack or credential stuffing attempt.

Setting Up Alerts for Security Anomalies

Setting up effective alerts is critical for proactively responding to security incidents. Alerts should be configured to notify the appropriate teams when critical security events occur. These alerts should be specific, actionable, and provide sufficient context for the recipient to understand the issue and take appropriate action. The following steps are crucial for setting up alerts:

- Define Alert Thresholds: Establish clear thresholds for each security metric. These thresholds should be based on your organization’s security policies, risk tolerance, and the baseline behavior of your services. For example, you might set an alert threshold for authentication failures at 10 attempts within a 5-minute window.

- Choose Alert Severity Levels: Assign severity levels (e.g., critical, high, medium, low) to each alert based on the potential impact of the security event. Critical alerts should trigger immediate action, while lower-severity alerts might require less urgent investigation.

- Configure Alert Notifications: Configure alert notifications to be sent to the appropriate teams via the preferred communication channels (e.g., email, Slack, PagerDuty). Ensure that the notifications include relevant information, such as the metric that triggered the alert, the affected service(s), and the time of the event.

- Test Alerting System: Regularly test your alerting system to ensure that alerts are being triggered correctly and that the notification process is working as expected.

- Automate Remediation: Consider automating remediation steps for certain types of alerts. For example, you could configure an alert to automatically block suspicious IP addresses or scale up resources to mitigate a denial-of-service attack.
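The threshold from the first step (10 authentication failures within 5 minutes) can be expressed as a sliding-window counter. This sketch keeps one window per (service, source) pair and escalates from high to critical severity when the count reaches twice the threshold; the class name, the severity rule, and the `Alert` fields are hypothetical choices for illustration, and timestamps are assumed to arrive in non-decreasing order.

```python
from collections import defaultdict, deque
from dataclasses import dataclass
from typing import Optional


@dataclass
class Alert:
    severity: str   # "high" or "critical" in this sketch
    metric: str
    service: str
    source: str
    count: int
    timestamp: float


class AuthFailureAlerter:
    """Alert when one source causes `threshold` or more authentication failures
    against a service within a sliding window of `window_s` seconds."""

    def __init__(self, threshold: int = 10, window_s: float = 300.0):
        self.threshold = threshold
        self.window_s = window_s
        self._events: defaultdict[tuple[str, str], deque] = defaultdict(deque)

    def record_failure(self, service: str, source: str, ts: float) -> Optional[Alert]:
        window = self._events[(service, source)]
        window.append(ts)
        # Discard failures that have slid out of the window.
        while ts - window[0] > self.window_s:
            window.popleft()
        count = len(window)
        if count < self.threshold:
            return None
        # Hypothetical severity rule: twice the threshold escalates to critical.
        severity = "critical" if count >= 2 * self.threshold else "high"
        return Alert(severity, "auth_failures", service, source, count, ts)
```

The returned `Alert` carries the metric, affected service, source, and time, which is the context the notification step asks for. A production alerter would also de-duplicate, since this sketch emits an alert for every failure while the count stays above the threshold.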

For instance, if the system detects an unusual spike in traffic to a critical service, exceeding a predefined threshold, a high-severity alert is generated. This alert is then sent to the security operations team via their preferred channels, accompanied by relevant contextual information, such as the source IP addresses and the service affected. This information enables the team to quickly assess the situation and take appropriate action, potentially blocking the traffic or investigating the source.

Advanced Security Features

Service meshes offer a robust platform for enhancing application security beyond basic authentication, authorization, and encryption. They provide the flexibility to integrate advanced security features, bolstering defenses against sophisticated threats. This section explores some of these capabilities, including Web Application Firewall (WAF) integration, rate limiting, and vulnerability scanning.

Web Application Firewall (WAF) Integration

Integrating a Web Application Firewall (WAF) within a service mesh provides an additional layer of protection for web applications. This integration helps to filter malicious traffic and protect against common web application vulnerabilities. A WAF, when deployed in a service mesh, acts as a central point of control for inspecting incoming HTTP/S traffic. This allows for:

- Protection against OWASP Top 10 vulnerabilities: WAFs can be configured with rulesets to detect and block attacks like SQL injection, cross-site scripting (XSS), and cross-site request forgery (CSRF).

- Centralized Policy Enforcement: Security policies can be defined and enforced consistently across all services within the mesh. This reduces the likelihood of misconfigurations and ensures uniform security.

- Improved Visibility and Logging: WAFs provide detailed logs of traffic, including both allowed and blocked requests. This information is invaluable for security monitoring, incident response, and identifying potential vulnerabilities.

For example, consider a microservices application deployed within a service mesh using Istio. A ModSecurity-compatible WAF engine, such as OWASP Coraza loaded into Envoy as a WebAssembly filter, can run at the ingress gateway or in each service’s sidecar proxy. This setup allows the WAF to inspect incoming requests before they reach the application services. If a request contains a malicious payload, the WAF can block it, preventing the attack from reaching the vulnerable service.

The WAF logs can then be analyzed to identify the source of the attack and refine the security policies.
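To make the idea of request inspection concrete, here is a deliberately tiny signature matcher. It URL-decodes the path, query string, and body, then checks them against two hypothetical regular expressions for SQL injection and XSS. This is a toy for illustration only: production WAFs rely on curated rulesets such as the OWASP Core Rule Set, with far more thorough normalization and anomaly scoring, and naive patterns like these are easy to evade.

```python
import re
from urllib.parse import unquote_plus

# Toy signatures for illustration only; real WAFs use curated rulesets
# (for example, the OWASP Core Rule Set) and much richer normalization.
SIGNATURES = {
    "sql_injection": re.compile(
        r"['\"]\s*(or|and)\s+\S+\s*=\s*\S+|union\s+select|;\s*drop\s+table", re.IGNORECASE
    ),
    "xss": re.compile(r"<\s*script\b|javascript:|\bon(error|load)\s*=", re.IGNORECASE),
}


def inspect_request(path: str, query: str, body: str = "") -> list[str]:
    """Return the names of the signatures matched by the URL-decoded request parts."""
    payload = " ".join(unquote_plus(part) for part in (path, query, body))
    return [name for name, pattern in SIGNATURES.items() if pattern.search(payload)]


def waf_decision(path: str, query: str, body: str = "") -> tuple[str, list[str]]:
    """Block the request if any signature matches; otherwise forward it."""
    matches = inspect_request(path, query, body)
    return ("block", matches) if matches else ("allow", [])
```

The list of matched signature names is what a real WAF would write to its log, which is the information the analysis step above depends on.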

Rate Limiting

Rate limiting is a crucial technique for mitigating denial-of-service (DoS) attacks and protecting application resources. A service mesh facilitates the implementation of rate limiting policies across all services.

Rate limiting works by controlling the number of requests a client can make within a specific time period. This prevents a single client from overwhelming a service with excessive requests. Here’s how rate limiting can be applied in a service mesh:

- Preventing Resource Exhaustion: Rate limiting protects against attacks that aim to consume all available resources of a service, such as CPU, memory, or network bandwidth.

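- Mitigating DoS Attacks: By limiting the number of requests from a single source, rate limiting can mitigate DoS attacks and blunt some distributed denial-of-service (DDoS) attacks. Because a DDoS attack spreads traffic across many sources, each of which may stay under a per-source limit, it usually also calls for aggregate limits and protections in front of the mesh.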

- Enforcing Service Level Agreements (SLAs): Rate limiting can be used to ensure that services meet their SLAs by preventing overuse by specific clients.

For instance, in Istio you can apply rate limits at the service level, typically by configuring Envoy’s local rate limit filter on a workload’s sidecar or by connecting the proxies to a global rate limit service. These policies can limit the number of requests per second or per minute from a specific source IP address or based on other criteria, such as user identity or API key. If a client exceeds the defined rate limit, the proxy returns an HTTP 429 Too Many Requests response, preventing the client from consuming further resources.

Consider a scenario where an e-commerce application is under a DDoS attack. Without rate limiting, the attackers could flood the application with requests, causing it to become unavailable to legitimate users. By implementing rate limiting, the service mesh can automatically limit the number of requests from each attacking IP address, allowing the application to continue serving legitimate users.
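Envoy’s local rate limit filter is based on a token bucket. The sketch below models that algorithm with one bucket per client key (for example, a source IP address): each bucket holds up to `capacity` tokens, refills at `rate` tokens per second, and a request that finds its bucket empty gets a 429. The class names and parameters are illustrative, not Envoy’s or Istio’s configuration schema, and the clock is injectable so the behavior can be tested without sleeping.

```python
import time
from typing import Callable


class TokenBucket:
    """Allows bursts of up to `capacity` requests, refilled at `rate` tokens per second."""

    def __init__(self, capacity: float, rate: float, clock: Callable[[], float] = time.monotonic):
        self.capacity = capacity
        self.rate = rate
        self.clock = clock
        self.tokens = capacity
        self.updated = clock()

    def allow(self) -> bool:
        now = self.clock()
        # Refill in proportion to elapsed time, capped at the bucket's capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.updated) * self.rate)
        self.updated = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


class PerClientRateLimiter:
    """One token bucket per client key, such as a source IP address or API key."""

    def __init__(self, capacity: float, rate: float, clock: Callable[[], float] = time.monotonic):
        self.capacity = capacity
        self.rate = rate
        self.clock = clock
        self.buckets: dict[str, TokenBucket] = {}

    def handle(self, client: str) -> int:
        """Return 200 if the request may be forwarded, or 429 Too Many Requests."""
        if client not in self.buckets:
            self.buckets[client] = TokenBucket(self.capacity, self.rate, self.clock)
        return 200 if self.buckets[client].allow() else 429
```

The bucket size controls how large a burst a client may send, while the refill rate sets its sustained throughput. A real deployment would also evict idle buckets so that a flood of distinct client keys cannot grow the table without bound.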

Vulnerability Scanning

Service meshes can be used alongside vulnerability scanning to help identify and address security flaws within the application and its dependencies. Vulnerability scanning involves identifying known vulnerabilities in software components, libraries, and configurations. The mesh does not scan images itself, but pairing scanners with the pipeline that deploys meshed services allows for automated and continuous vulnerability assessments. Here’s how the two fit together:

- Automated Scanning: Vulnerability scanning tools can automatically scan the container images that run in the mesh, along with service and mesh configurations.

- Early Detection: By scanning images before deployment, vulnerabilities can be detected and remediated early in the development lifecycle.

- Continuous Monitoring: Vulnerability scanning can be integrated into CI/CD pipelines, providing continuous monitoring and alerting for newly discovered vulnerabilities.

For example, you could integrate a vulnerability scanning tool like Trivy or Clair into your CI/CD pipeline. When a new container image is built, the scanning tool can automatically scan the image for known vulnerabilities. If any vulnerabilities are found, the build can be blocked, or alerts can be generated to notify the development team. Deployment of images with known vulnerabilities can also be blocked at admission time, for example with a Kubernetes admission policy; this check belongs to the cluster rather than to the service mesh, which controls traffic between workloads rather than which images are deployed.

This helps ensure that only images that have passed scanning reach the production environment, reducing the risk of exploitation.
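The build-blocking step can be a small gate that reads a scanner’s report and fails the job on serious findings. This sketch assumes a Trivy JSON report (produced with something like `trivy image --format json --output report.json <image>`), in which findings sit under `Results[].Vulnerabilities[]` with `VulnerabilityID` and `Severity` fields; treating HIGH and CRITICAL as blocking is an example policy, not a recommendation. Trivy can also fail a build on its own with `--exit-code 1 --severity HIGH,CRITICAL`; a script like this is useful when you want custom reporting or exceptions.

```python
import json
import sys

BLOCKING_SEVERITIES = {"HIGH", "CRITICAL"}  # example policy


def blocking_findings(report: dict, blocking: set[str] = BLOCKING_SEVERITIES) -> list[tuple[str, str, str]]:
    """Return (target, vulnerability ID, severity) for each finding at a blocking severity."""
    findings = []
    # "Results" or "Vulnerabilities" may be missing or null when nothing was found.
    for result in report.get("Results") or []:
        for vuln in result.get("Vulnerabilities") or []:
            severity = vuln.get("Severity", "UNKNOWN")
            if severity in blocking:
                findings.append((result.get("Target", "?"), vuln.get("VulnerabilityID", "?"), severity))
    return findings


def main(report_path: str) -> int:
    with open(report_path, encoding="utf-8") as f:
        report = json.load(f)
    findings = blocking_findings(report)
    for target, vuln_id, severity in findings:
        print(f"{severity}: {vuln_id} in {target}")
    # A non-zero exit status fails the CI job, which in a typical pipeline
    # stops the image from being pushed or deployed.
    return 1 if findings else 0


if __name__ == "__main__" and len(sys.argv) > 1:
    # Usage: python trivy_gate.py report.json
    sys.exit(main(sys.argv[1]))
```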

Choosing the Right Service Mesh for Security

Selecting the appropriate service mesh is a crucial decision for organizations aiming to bolster their application security posture. The choice significantly impacts the effectiveness and ease of enforcing security policies across a microservices architecture. This section will delve into the critical factors to consider when choosing a service mesh, focusing on comparing popular implementations to aid in making an informed decision.

Comparing Security Features of Popular Service Mesh Implementations

Different service mesh implementations offer varying degrees of security capabilities. Understanding these differences is essential for aligning the chosen mesh with specific security requirements.

Here’s a comparison of some key security features across Istio, Linkerd, and Consul Connect:

| Feature | Istio | Linkerd | Consul Connect |
|---|---|---|---|
| Authentication | Supports multiple authentication methods including JWT, mutual TLS (mTLS), and custom authentication. Offers flexible integration with identity providers. | Primarily relies on mTLS for service-to-service authentication, ensuring strong identity verification. Provides a simplified authentication setup. | Employs mTLS by default for secure communication. Supports integration with external identity providers for more advanced authentication scenarios. |
| Authorization | Offers robust authorization capabilities through its policy engine (e.g., using authorization policies based on request attributes). Provides fine-grained access control based on service identity, path, and other criteria. | Provides authorization policies based on workload identity (derived from Kubernetes service accounts), supporting a zero-trust model. Simplified configuration compared to Istio. | Offers policy-based authorization through intentions, which allow or deny traffic based on service identity and, for HTTP services, request attributes such as path, method, and headers. |
| Traffic Encryption | Automatically uses mTLS between sidecars, but the default PERMISSIVE mode also accepts plaintext; enabling STRICT mode through a PeerAuthentication policy enforces encryption for all service-to-service traffic. Supports TLS for traffic between services and external clients via gateways. Provides flexible configuration options for TLS certificates. | Enables mTLS automatically for all TCP traffic between meshed pods. Simplified certificate management. | Uses mTLS to encrypt all traffic by default, securing communications within the service mesh. |
| Network Policies | Provides advanced network policy capabilities through its configuration resources. Allows for granular control over traffic flow, including ingress and egress rules. | Uses its authorization policies to control which clients can reach each service, enabling segmentation. Simpler configuration than Istio. | Uses intentions to define which services can communicate with each other. Provides a simplified approach to network segmentation. |
| Observability | Integrates with popular observability tools like Prometheus, Grafana, and Jaeger. Provides detailed metrics, logs, and traces for security monitoring. | Offers built-in metrics and dashboards for monitoring security-related events. Simplified observability setup. | Integrates with standard observability tools for monitoring traffic and security events. |
| Certificate Management | Provides built-in certificate authority (CA) for managing mTLS certificates. Supports integration with external CA solutions. | Includes a built-in CA for certificate management, simplifying the mTLS setup process. | Provides a built-in CA and supports integration with external CA solutions. |

Key Factors to Consider When Selecting a Service Mesh for Security Enforcement

Several factors should guide the selection process to ensure the chosen service mesh aligns with the organization’s security objectives.

Here are key considerations:

- Security Features: Evaluate the available security features, including authentication, authorization, encryption, and network policies.

- Ease of Use: Consider the complexity of configuration and management. Some service meshes offer a more user-friendly experience.

- Performance: Assess the performance overhead introduced by the service mesh, especially in latency-sensitive environments.

- Integration: Ensure the service mesh integrates with existing infrastructure, including identity providers, monitoring tools, and CI/CD pipelines.

- Community and Support: Evaluate the size and activity of the community, as well as the availability of commercial support.

- Compliance Requirements: Determine if the service mesh supports the security standards and compliance regulations relevant to your industry. For example, in the financial sector, compliance with PCI DSS might influence the choice of a service mesh that offers robust encryption and access control features.

- Scalability: Consider the ability of the service mesh to scale to meet the demands of a growing microservices architecture.

Deployment and Configuration Best Practices

Implementing a service mesh securely requires careful planning and execution. This section outlines best practices to ensure your service mesh deployment is robust, resilient, and protected against potential vulnerabilities. Following these guidelines will help you establish a strong security posture for your microservices architecture.

Secure Key Management

Secure key management is crucial for the overall security of your service mesh. Compromised keys can lead to unauthorized access, data breaches, and significant operational disruptions. It’s vital to adopt a robust key management strategy from the outset.

- Use Hardware Security Modules (HSMs): HSMs provide a secure, tamper-resistant environment for storing and managing cryptographic keys. They offer strong protection against key compromise and are often required for compliance with industry regulations. HSMs generate, store, and protect cryptographic keys used for TLS encryption, mutual TLS (mTLS) authentication, and other security functions within the service mesh. This approach enhances security by isolating the keys from the application environment.

For example, in a Kubernetes environment, you can configure the service mesh to use an external certificate authority whose signing keys are held in an HSM, instead of relying on the mesh’s built-in CA.

- Automated Key Rotation: Implement automated key rotation to minimize the impact of a potential key compromise. Regularly rotating keys limits the time a compromised key can be used to access sensitive data. Key rotation frequency depends on your security requirements and compliance standards; a common practice is to rotate keys every 90 days or less. Mesh workload certificates are usually far shorter-lived and are rotated automatically by the control plane (Istio’s default workload certificate lifetime is 24 hours). Automating this process reduces the risk of human error and ensures consistent key management.

- Centralized Key Management System (KMS): Employ a centralized KMS to manage keys across your entire infrastructure. A KMS provides a single point of control for key lifecycle management, including generation, storage, rotation, and revocation. This centralized approach simplifies key management, enhances security, and improves compliance. Services like HashiCorp Vault, AWS KMS, and Azure Key Vault are popular choices for managing keys in a service mesh environment.

- Principle of Least Privilege: Grant only the necessary permissions to service mesh components for key access. Avoid providing overly permissive access, which could increase the risk of a key compromise. Implement role-based access control (RBAC) to define granular access policies and limit the impact of potential breaches.

- Regular Auditing and Monitoring: Implement regular auditing and monitoring of key usage to detect any suspicious activity. Monitor key access, rotation, and other key-related events to identify and respond to potential security incidents. Log all key management operations to provide an audit trail for compliance and incident investigation.
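Rotation policies are easier to audit when expressed as code. The sketch below takes a certificate’s `notBefore` and `notAfter` timestamps in the format returned by Python’s `ssl.SSLSocket.getpeercert()` and reports whether the certificate has expired, how many hours remain, and whether it has passed a rotation point expressed as a fraction of its lifetime. The 50% rotation fraction and the function name are hypothetical choices for illustration; set the fraction according to your own policy.

```python
import ssl
import time
from typing import Optional


def rotation_status(
    not_before: str,
    not_after: str,
    now: Optional[float] = None,
    rotate_at_fraction: float = 0.5,  # hypothetical policy: rotate at half-life
) -> dict:
    """Classify a certificate by its validity window.

    `not_before` / `not_after` use the format returned by
    ssl.SSLSocket.getpeercert(), e.g. "Jan  1 00:00:00 2024 GMT".
    """
    start = ssl.cert_time_to_seconds(not_before)
    end = ssl.cert_time_to_seconds(not_after)
    if end <= start:
        raise ValueError("notAfter must be later than notBefore")
    now = time.time() if now is None else now
    elapsed_fraction = (now - start) / (end - start)
    return {
        "expired": now >= end,
        "rotate": elapsed_fraction >= rotate_at_fraction,
        "hours_remaining": max(0.0, (end - now) / 3600),
    }
```

Running this check against the certificates your monitoring collects turns the rotation and auditing practices above into an alert condition: a workload certificate that is past its rotation point but still in use suggests that automatic rotation is failing.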

Common Configuration Mistakes and How to Avoid Them

Configuration errors can introduce vulnerabilities into your service mesh, potentially leading to security breaches and operational disruptions. Understanding common mistakes and implementing preventative measures is crucial for a secure deployment.

- Default Configuration Settings: Avoid relying on default configuration settings, as they often lack proper security hardening and might expose your services to vulnerabilities. Always customize the configuration to meet your specific security requirements. For example, a mesh may not enforce mTLS by default: Istio’s default PERMISSIVE mode accepts both mTLS and plaintext traffic. Ensure that mTLS is enforced (STRICT mode in Istio) and that appropriate certificates are configured.

- Insecure Certificates: Using self-signed certificates in production environments is highly discouraged. This includes the self-signed root CA that some meshes generate at install time for convenience (Istio does this by default); for production, plug in a root or intermediate CA from your organization’s PKI so that service certificates chain to a CA you control and trust. Configure certificate validation within the service mesh.

- Excessive Permissions: Granting excessive permissions to service mesh components can lead to unauthorized access and data breaches. Apply the principle of least privilege by assigning only the necessary permissions to each component. Carefully review and restrict access to sensitive resources.

- Lack of Network Segmentation: Failure to segment your network can allow attackers to move laterally within your environment. Implement network policies to restrict communication between services and enforce zero-trust principles. Network segmentation limits the blast radius of a security breach.

- Ignoring Security Updates: Failing to apply security updates promptly can expose your service mesh to known vulnerabilities. Regularly update your service mesh control plane and data plane components to patch security flaws and benefit from the latest security enhancements. Subscribe to security alerts and notifications from your service mesh vendor.

- Insufficient Logging and Monitoring: Without proper logging and monitoring, it is difficult to detect and respond to security incidents. Implement comprehensive logging and monitoring to capture security-related events, such as authentication failures, unauthorized access attempts, and policy violations. Use a centralized logging and monitoring system to aggregate and analyze security data.

- Example of a Configuration Mistake: Consider a scenario where a developer, for convenience, disables mTLS between services in the service mesh. This action simplifies initial deployment but significantly increases the risk of eavesdropping and man-in-the-middle attacks. To avoid this, enforce mTLS by default and use a configuration management tool to ensure that all services adhere to the security policies.

- Example of Avoiding a Configuration Mistake: Instead of using a default service account with broad permissions, create a custom service account for the service mesh control plane with only the required permissions to access resources. This approach follows the principle of least privilege, reducing the attack surface.
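Checks like the mTLS example above can be automated in CI. This sketch scans Kubernetes manifests that have already been parsed into dicts (for instance, loaded from YAML) and reports Istio `PeerAuthentication` resources whose `spec.mtls.mode` or `portLevelMtls` entries are set to `DISABLE` or `PERMISSIVE`. It only inspects explicit resources; it does not detect the absence of a mesh-wide STRICT policy in the root namespace (`istio-system` by default), which a fuller check would also need to verify. The function name is hypothetical.

```python
from typing import Iterable

WEAK_MODES = {"DISABLE", "PERMISSIVE"}


def find_weak_mtls(manifests: Iterable[dict]) -> list[str]:
    """Report PeerAuthentication resources that explicitly weaken mTLS.

    Mode UNSET inherits from the parent policy, so it is not flagged here.
    """
    problems = []
    for manifest in manifests:
        if manifest.get("kind") != "PeerAuthentication":
            continue
        meta = manifest.get("metadata") or {}
        name = f"{meta.get('namespace', '<unset>')}/{meta.get('name', '<unnamed>')}"
        spec = manifest.get("spec") or {}
        mode = (spec.get("mtls") or {}).get("mode", "UNSET")
        if mode in WEAK_MODES:
            problems.append(f"{name}: mtls.mode={mode}")
        # Per-port overrides can weaken an otherwise STRICT policy.
        for port, setting in (spec.get("portLevelMtls") or {}).items():
            port_mode = (setting or {}).get("mode", "UNSET")
            if port_mode in WEAK_MODES:
                problems.append(f"{name}: port {port} mode={port_mode}")
    return problems
```

Failing the pipeline whenever `find_weak_mtls` returns a non-empty list enforces the "mTLS by default" rule without relying on reviewers to spot a weakened policy.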

Integrating with Existing Security Tools

Integrating a service mesh with existing security tools is crucial for maintaining a comprehensive security posture. This integration allows organizations to leverage their existing investments in security infrastructure while gaining the benefits of a service mesh. It enables centralized logging, monitoring, and analysis of security events across the service mesh environment.

Forwarding Service Mesh Logs for Security Analysis

Effective security analysis relies on the ability to collect and analyze logs from various sources. Service meshes generate a wealth of information, including access logs, traffic metrics, and security events. Forwarding these logs to existing security tools allows for comprehensive security monitoring and incident response. There are several methods to forward service mesh logs:

- Log Aggregation and Forwarding: Service mesh components often provide mechanisms to export logs in standard formats like JSON or plain text. These logs can then be aggregated using a log management system (e.g., Splunk, ELK stack) and forwarded to a Security Information and Event Management (SIEM) system for analysis.

- Direct Integration with SIEM: Some service meshes offer direct integrations with popular SIEM solutions. This simplifies the process of forwarding logs and allows for pre-built dashboards and alerts tailored to service mesh events.

- Custom Log Pipelines: For more complex scenarios, organizations can create custom log pipelines using tools like Fluentd or Fluent Bit. These pipelines can transform, filter, and forward logs to various destinations, including SIEM systems, data lakes, and other security tools.

Example Integration Code Snippets

Here is an example of integrating a service mesh with a SIEM system using Fluentd. The following configuration collects Envoy access logs and forwards them to a Splunk instance:

```
<source>
  @type tail
  path /var/log/envoy/access.log
  pos_file /var/log/envoy/access.log.pos
  tag envoy.access
  <parse>
    @type json
  </parse>
</source>

<match envoy.access>
  # Requires the fluent-plugin-splunk-hec output plugin.
  @type splunk_hec
  hec_host your_splunk_host
  hec_port 8088
  hec_token your_splunk_token
  index your_splunk_index
  <format>
    @type json
  </format>
</match>
```

This configuration reads access logs from `/var/log/envoy/access.log`, parses them as JSON, and forwards them to a Splunk instance using the HTTP Event Collector (HEC). It assumes Envoy is configured to write JSON-formatted access logs to that file; Istio sidecars log to standard output by default, in which case you would tail the container log files instead. Replace `your_splunk_host`, `your_splunk_token`, and `your_splunk_index` with your Splunk environment’s details. This configuration provides a starting point; the specifics will vary based on the service mesh, log format, and SIEM system. The goal is to ensure that all relevant service mesh logs are ingested into the SIEM for security analysis and incident response.
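If you build a custom pipeline instead, the transform-and-filter step looks roughly like the sketch below: it parses one JSON access-log line, optionally drops requests that completed without an error, keeps a short list of fields, and wraps the result in a Splunk HEC event envelope (`host`, `index`, `source`, `sourcetype`, `event`). The field names in `KEEP_FIELDS`, the `envoy:access` source label, and the choice to forward only 4xx and 5xx responses are assumptions; adapt them to your log format. Sending is not shown: a batch of events would be POSTed to the HEC endpoint (`/services/collector/event`) with an `Authorization: Splunk <token>` header.

```python
import json
from typing import Optional

# Hypothetical field names; match them to your Envoy JSON access-log format.
KEEP_FIELDS = ("start_time", "method", "path", "response_code", "upstream_cluster", "x_forwarded_for")


def to_hec_event(line: str, index: str, host: str, errors_only: bool = True) -> Optional[dict]:
    """Convert one JSON access-log line into a Splunk HEC event envelope.

    Returns None for unparseable lines and, when `errors_only` is set,
    for requests that completed with a status below 400.
    """
    try:
        record = json.loads(line)
    except json.JSONDecodeError:
        return None
    if not isinstance(record, dict):
        return None
    try:
        status = int(record.get("response_code"))
    except (TypeError, ValueError):
        status = None  # missing or non-numeric (e.g. "-"): forward it for review
    if errors_only and status is not None and status < 400:
        return None
    return {
        "host": host,
        "index": index,
        "source": "envoy:access",
        "sourcetype": "_json",
        "event": {k: record[k] for k in KEEP_FIELDS if k in record},
    }


def hec_batch(events: list[dict]) -> str:
    """HEC accepts several JSON event objects concatenated in one request body."""
    return "\n".join(json.dumps(e) for e in events)
```

Filtering before forwarding keeps SIEM ingestion costs down, at the price of losing successful requests that might matter during an investigation; many teams therefore archive the full logs elsewhere and forward only the security-relevant subset.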

Case Studies: Security in Action

Service mesh security implementations are not theoretical exercises; they are tangible solutions adopted by organizations to address real-world challenges. Examining these case studies provides invaluable insights into the practical application of service mesh security principles, the architectures employed, and the lessons learned during deployment and operation. These examples highlight the benefits of a service mesh approach, including improved security posture, enhanced observability, and streamlined policy enforcement.

Real-World Application of Service Mesh Security

Numerous organizations have successfully leveraged service meshes to fortify their security posture. These deployments showcase the adaptability of service meshes across various industries and application architectures. They provide concrete examples of how security policies are enforced, how threats are mitigated, and how operational efficiency is improved.

- Financial Services: A large financial institution implemented a service mesh to secure its microservices-based trading platform. The primary goal was to enhance security and ensure compliance with stringent regulatory requirements. They employed mutual TLS (mTLS) for all inter-service communication, enforced fine-grained access control policies, and integrated with existing security information and event management (SIEM) systems. This led to a significant reduction in security incidents and improved auditability.

The organization also observed a measurable decrease in the time required to onboard new services due to the automated security configurations provided by the service mesh.

- E-commerce Platform: An e-commerce company used a service mesh to protect its online shopping application from distributed denial-of-service (DDoS) attacks and other malicious activities. They implemented rate limiting, traffic shaping, and Web Application Firewall (WAF) policies at the service mesh level. The service mesh allowed them to dynamically adjust these policies based on real-time traffic patterns, effectively mitigating attacks and ensuring service availability.

The adoption resulted in improved application resilience and enhanced customer experience during peak shopping seasons.

- Healthcare Provider: A healthcare provider utilized a service mesh to secure its patient data and comply with HIPAA regulations. They enforced strict access controls, encrypted sensitive data in transit, and implemented robust auditing capabilities. The service mesh facilitated the segmentation of the application, isolating sensitive components and minimizing the attack surface. This resulted in improved data security and facilitated compliance with regulatory mandates.

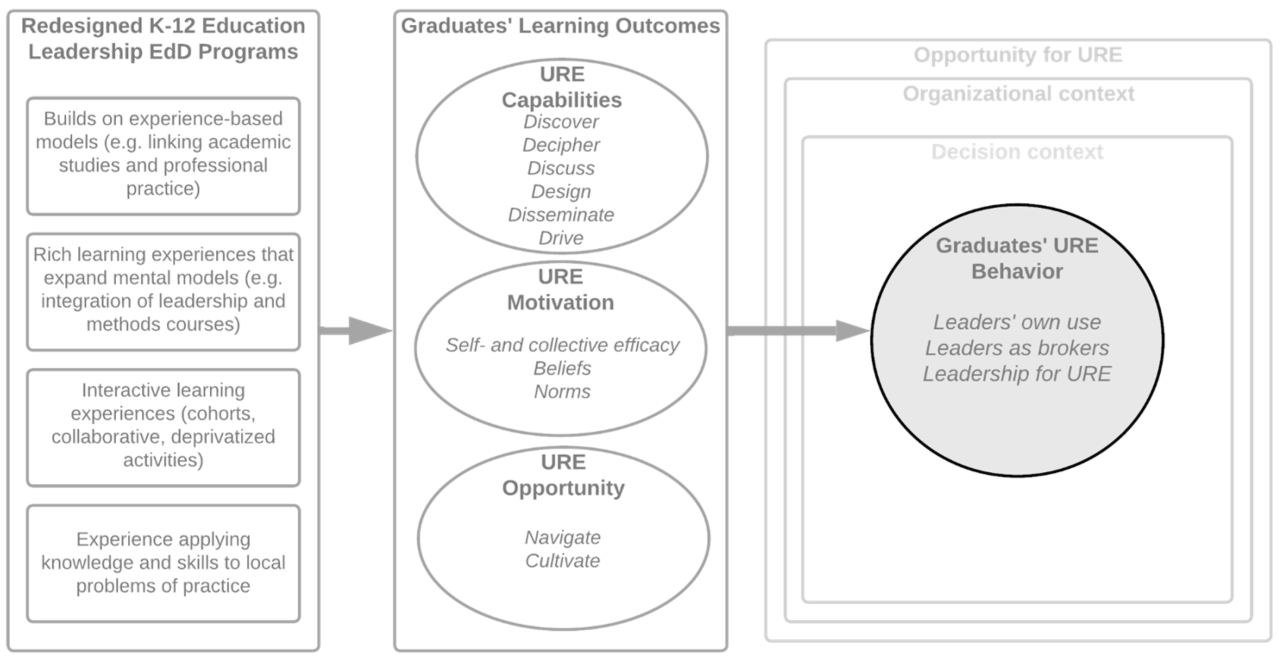

Illustrative Service Mesh Architecture Diagram: Security Components

The following illustrates a simplified service mesh architecture, focusing on security components.

Imagine a diagram representing a service mesh architecture with a focus on security. The diagram depicts a collection of microservices, each represented by a distinct rectangular box. These microservices are interconnected, illustrating the flow of traffic between them.

The diagram highlights several key security components:

- Service Mesh Control Plane: A central component responsible for managing the service mesh configuration and security policies. This is depicted as a large, central box, connected to all the microservices. Inside this box are sub-components like the Certificate Authority (CA), policy engine, and metrics aggregation.

- Sidecar Proxies (Envoy): Each microservice has a sidecar proxy, represented by a smaller box next to the microservice. These proxies intercept all inbound and outbound traffic for the associated microservice. They are responsible for enforcing security policies, such as mTLS encryption, authentication, and authorization.

- Mutual TLS (mTLS): This is visually represented by a lock icon on the lines connecting the microservices. mTLS ensures that all communication between services is encrypted and authenticated.

- Authentication and Authorization (AuthN/AuthZ): The diagram shows components for authentication and authorization, often integrated with an identity provider (IdP). These components are responsible for verifying the identity of service users and granting access based on defined policies.

- Network Policies: These are visualized as firewall rules or access control lists (ACLs) applied to the sidecar proxies. They restrict the flow of traffic between services based on various criteria, such as source, destination, and port.

- Web Application Firewall (WAF): A WAF component, deployed at the ingress gateway or within the sidecar proxies, is shown protecting the application from common web-based attacks.

- Observability and Monitoring: The diagram also includes components for observability and monitoring, such as logging and metrics dashboards. These components collect data on service traffic, security events, and performance metrics, providing insights into the security posture of the application.

This architectural overview clearly demonstrates the core elements that work together to create a secure and resilient microservices environment.

Challenges and Lessons Learned from Service Mesh Security Implementations

Implementing service mesh security, while offering significant advantages, is not without its challenges. Understanding these hurdles and the lessons learned is critical for a successful deployment.

- Complexity: Service meshes introduce a layer of complexity to the infrastructure. This can be particularly challenging for teams new to service mesh concepts.

- Lesson Learned: Start with a well-defined scope and gradually expand the service mesh coverage. Implement security policies in phases to avoid overwhelming the team. Invest in training and documentation.

- Operational Overhead: Managing and monitoring a service mesh requires dedicated resources and expertise.

- Lesson Learned: Automate as much as possible, using tools like Infrastructure as Code (IaC) to manage configurations. Implement robust monitoring and alerting to proactively identify and address issues.

- Performance Impact: The introduction of sidecar proxies and policy enforcement can impact service performance.

- Lesson Learned: Conduct thorough performance testing before and after deployment. Optimize proxy configurations and select appropriate resource allocations. Consider using performance profiling tools to identify and address bottlenecks.

- Integration with Existing Tools: Integrating the service mesh with existing security tools and processes can be complex.

- Lesson Learned: Plan the integration strategy carefully. Choose a service mesh that offers good integration capabilities. Test the integration thoroughly to ensure compatibility and proper functionality.

- Policy Management: Defining and managing security policies can be challenging, especially in complex environments.

- Lesson Learned: Use a declarative approach to policy definition. Implement a robust change management process. Use version control and automated testing to ensure policy accuracy and consistency.
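Automated testing of policies is easier when their evaluation order is explicit. This sketch is a simplified model of how Istio combines `AuthorizationPolicy` actions (CUSTOM policies are omitted): a matching DENY rule rejects the request, a workload with no ALLOW rules accepts it, and otherwise at least one ALLOW rule must match. The `Rule` type, exact-match paths, and principal strings are illustrative, not Istio’s schema; the point is that policy intent can be captured as checks that run on every change.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    action: str                               # "ALLOW" or "DENY"
    principals: frozenset[str] = frozenset()  # empty set = any caller
    paths: frozenset[str] = frozenset()       # empty set = any path (exact match)

    def matches(self, principal: str, path: str) -> bool:
        return (not self.principals or principal in self.principals) and (
            not self.paths or path in self.paths
        )


def is_allowed(rules: list[Rule], principal: str, path: str) -> bool:
    """Simplified Istio-style evaluation (CUSTOM actions omitted)."""
    if any(r.action == "DENY" and r.matches(principal, path) for r in rules):
        return False  # a matching DENY always wins
    allow_rules = [r for r in rules if r.action == "ALLOW"]
    if not allow_rules:
        return True  # no ALLOW rules: the workload accepts the request
    return any(r.matches(principal, path) for r in allow_rules)


FRONTEND = "cluster.local/ns/shop/sa/frontend"
RULES = [
    Rule("ALLOW", principals=frozenset({FRONTEND}), paths=frozenset({"/checkout"})),
    Rule("DENY", paths=frozenset({"/admin"})),
]

# Policy intent expressed as executable checks.
assert is_allowed(RULES, FRONTEND, "/checkout")
assert not is_allowed(RULES, "cluster.local/ns/shop/sa/other", "/checkout")
assert not is_allowed(RULES, FRONTEND, "/admin")
```

Kept in version control next to the policies themselves, checks like these catch a change that accidentally opens `/admin` or removes the frontend’s access before it reaches the cluster.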

Closing Notes

In conclusion, utilizing service meshes for security policy enforcement is no longer a luxury but a necessity in today’s dynamic application landscape. By understanding the fundamentals, implementing best practices, and leveraging advanced features, organizations can significantly enhance their security posture. Embrace the power of service meshes to build secure, resilient, and scalable applications. Remember to continuously monitor and adapt your security strategies to meet the ever-evolving threat landscape.

FAQ Resource

What is a service mesh, and why is it important for security?

A service mesh is a dedicated infrastructure layer that handles service-to-service communication. It’s important for security because it provides a centralized place to enforce security policies like authentication, authorization, and encryption, making microservices environments more secure.

How does a service mesh implement mTLS?

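A service mesh uses mTLS (mutual TLS) to encrypt communication between services and to authenticate both sides of each connection: every sidecar proxy presents a certificate that encodes its workload’s identity, and the control plane typically issues and rotates these certificates automatically. Authorization policies can then use those verified identities to decide which services may communicate.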

Can a service mesh help with compliance requirements?

Yes, service meshes can help with compliance by providing tools to enforce security policies, log and monitor traffic, and meet regulatory requirements such as those related to data encryption and access control.

What are the key considerations when choosing a service mesh for security?

When choosing a service mesh for security, consider its features (mTLS support, policy enforcement), integration with existing tools, community support, and performance impact on your applications.