The digital landscape has witnessed an exponential rise in cloud storage adoption, making the security of these repositories paramount. Cloud storage buckets, the fundamental building blocks of modern data management, offer unparalleled scalability and accessibility. However, their very nature presents unique security challenges. Misconfigurations, from open access permissions to inadequate encryption, can expose sensitive data to unauthorized access and lead to data breaches and significant financial and reputational damage.

This guide provides a thorough exploration of the strategies and best practices required to fortify your cloud storage buckets against vulnerabilities. We’ll delve into the core principles of secure configuration, covering everything from access control and encryption to monitoring and compliance. Our goal is to equip you with the knowledge and tools needed to build a robust security posture for your cloud storage infrastructure, ensuring the confidentiality, integrity, and availability of your valuable data.

Understanding Cloud Storage Bucket Basics

Cloud storage buckets are fundamental components of cloud computing, providing a scalable and cost-effective way to store and manage large amounts of data. They serve as containers for objects, such as files, images, videos, and backups. Understanding the basics of cloud storage buckets is crucial for anyone looking to secure them effectively.

Fundamental Concepts of Cloud Storage Buckets

Cloud storage buckets operate on the principle of object storage. Unlike traditional file systems, object storage treats data as objects, each with associated metadata. This metadata provides context and allows for flexible data management.

- Object Storage: Data is stored as objects, each consisting of the data itself, metadata (e.g., file name, size, creation date), and a unique identifier.

- Scalability: Cloud storage buckets are designed to scale automatically, allowing you to store petabytes of data without manual intervention.

- Durability: Data is typically replicated across multiple devices and locations to ensure high durability and protect against data loss. Storage classes differ in how widely they replicate data and in their availability guarantees. For example, Amazon S3 Standard is designed for 99.999999999% (11 nines) durability.

- Accessibility: Data stored in buckets can be reached over the internet from anywhere, which enables global access but also makes correct access controls essential.

- Cost-Effectiveness: Cloud storage offers pay-as-you-go pricing, allowing you to pay only for the storage you use. This eliminates the need for upfront hardware investments and reduces operational costs.

Different Cloud Storage Providers

Several major cloud providers offer cloud storage bucket services, each with its own features, pricing, and regional availability. Understanding the differences between these providers is essential for choosing the right solution for your needs.

- Amazon Web Services (AWS) S3: Amazon Simple Storage Service (S3) is one of the most widely used cloud storage services. It offers a wide range of features, including various storage classes, lifecycle policies, and integration with other AWS services. S3 provides high durability and availability, with multiple options for data redundancy and access control.

- Microsoft Azure Blob Storage: Azure Blob Storage is Microsoft’s object storage service, offering scalable and cost-effective storage for unstructured data. It integrates well with other Azure services and provides features like lifecycle management, data encryption, and access control. Azure Blob Storage is a popular choice for storing backups, archives, and large datasets.

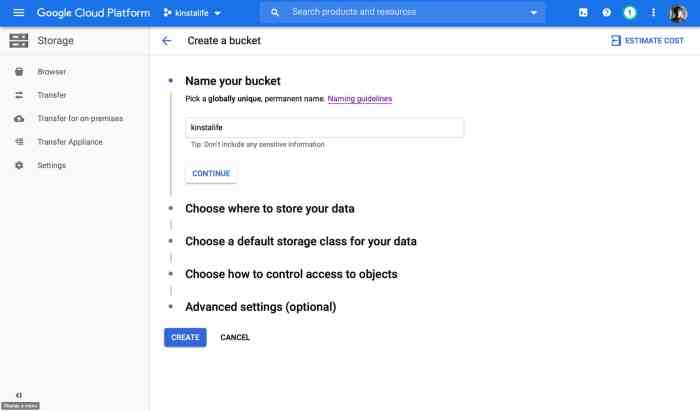

- Google Cloud Storage (GCS): Google Cloud Storage (GCS) is Google’s object storage service, known for its high performance and global reach. It offers the Standard, Nearline, Coldline, and Archive storage classes, which can be placed in regional, dual-region, or multi-region locations to meet different performance and cost requirements. GCS integrates seamlessly with other Google Cloud services and provides robust security features.

Common Use Cases for Cloud Storage Buckets

Cloud storage buckets are versatile and can be used for a wide range of applications. Here are some common use cases:

- Data Backup and Disaster Recovery: Cloud storage buckets are ideal for backing up critical data and creating disaster recovery solutions. Data can be replicated across multiple regions to ensure data availability in case of a disaster. Companies like Netflix use cloud storage to back up their content libraries, ensuring business continuity.

- Content Delivery: Cloud storage buckets can be used to store and serve content to users globally. Content Delivery Networks (CDNs) often use cloud storage buckets as their origin servers, allowing for fast and reliable content delivery. Websites and applications commonly use this approach to host images, videos, and other static assets.

- Data Archiving: Cloud storage provides a cost-effective solution for archiving infrequently accessed data. Storage classes like “cold storage” offer lower storage costs for data that needs to be retained for long periods but doesn’t require frequent access. The National Archives and Records Administration (NARA) uses cloud storage for long-term preservation of digital records.

- Big Data Analytics: Cloud storage buckets are used to store large datasets for big data analytics. Data from various sources can be stored in buckets and then processed using tools like Hadoop or Spark. Companies analyze vast amounts of data stored in buckets to gain insights and make data-driven decisions.

- Application Hosting: Cloud storage buckets can be used to host static websites and application assets. This provides a cost-effective and scalable solution for serving web content. Many small businesses and developers use cloud storage to host their websites.

Identifying Potential Misconfiguration Risks

Understanding the potential risks associated with misconfigured cloud storage buckets is crucial for maintaining data security and preventing unauthorized access. This section will delve into the specific vulnerabilities that arise from common misconfigurations, enabling you to proactively secure your cloud storage environment.

Publicly Accessible Buckets and Their Implications

Making a cloud storage bucket publicly accessible creates significant security vulnerabilities. This means that anyone on the internet can potentially access the data stored within the bucket, leading to data breaches, unauthorized data modification, and compliance violations.

- Data Breaches: Sensitive information, such as customer data, financial records, or intellectual property, can be exposed to malicious actors. For instance, in 2017, a misconfigured Amazon S3 bucket belonging to a consulting firm exposed the personal data of millions of Americans.

- Unauthorized Data Modification: Attackers can potentially upload, delete, or modify data within the bucket, leading to data corruption, service disruption, or the injection of malicious content.

- Compliance Violations: Publicly accessible buckets can violate data privacy regulations such as GDPR, HIPAA, and CCPA, resulting in significant fines and legal repercussions. For example, a healthcare provider that exposes patient data through a public bucket would face severe penalties under HIPAA.

- Malware Distribution: Attackers can use publicly accessible buckets to host and distribute malware, which can then be downloaded by unsuspecting users. This can lead to the spread of viruses and other malicious software.
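To make "publicly accessible" concrete at the policy level, the sketch below scans an AWS-style bucket policy (JSON, like the bucket policy examples in this guide) for Allow statements whose principal is everyone and that carry no Condition. This is a simplified heuristic assumed for illustration, and the function names are hypothetical; provider tooling such as S3 Block Public Access also considers ACLs and which condition keys actually narrow access.

```python
import json


def is_public_principal(principal) -> bool:
    """Return True if a policy Principal element means 'everyone'."""
    if principal == "*":
        return True
    if isinstance(principal, dict):
        aws = principal.get("AWS")
        values = aws if isinstance(aws, list) else [aws]
        return "*" in values
    return False


def find_public_statements(policy_json: str) -> list:
    """Return the Sids (or indexes) of unconditional Allow statements open to everyone.

    Simplification: any Condition block is treated as restricting access,
    which is not always true in practice.
    """
    policy = json.loads(policy_json)
    statements = policy.get("Statement", [])
    if isinstance(statements, dict):  # a single statement may appear as an object
        statements = [statements]
    findings = []
    for index, stmt in enumerate(statements):
        if (
            stmt.get("Effect") == "Allow"
            and is_public_principal(stmt.get("Principal"))
            and not stmt.get("Condition")
        ):
            findings.append(stmt.get("Sid", str(index)))
    return findings


example = json.dumps({
    "Version": "2012-10-17",
    "Statement": [{
        "Sid": "PublicRead",
        "Effect": "Allow",
        "Principal": "*",
        "Action": "s3:GetObject",
        "Resource": "arn:aws:s3:::your-bucket-name/*",
    }],
})
print(find_public_statements(example))  # ['PublicRead']
```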

Risks Associated with Incorrect Access Control Lists (ACLs) and Bucket Policies

Both Access Control Lists (ACLs) and bucket policies are essential mechanisms for controlling access to cloud storage buckets. Incorrectly configured ACLs and bucket policies can create significant security risks, leading to unauthorized access or data leakage.

Access Control Lists (ACLs) and bucket policies serve different purposes but both contribute to overall bucket security. ACLs are older, simpler access control mechanisms that grant permissions to specific users or groups. Bucket policies offer a more flexible and comprehensive way to define access control, allowing for complex rules based on various conditions.

- Incorrect ACL Configuration: ACLs that grant overly permissive access, such as “read” or “write” permissions to “all users,” can lead to unauthorized data access. For example, if an ACL allows “read” access to all users, anyone can potentially view the data stored in the bucket.

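- Conflicting ACLs and Bucket Policies: In Amazon S3, a request is allowed if any applicable ACL or policy grants it, unless an explicit Deny in a policy matches; an explicit Deny always wins. As a result, an overly permissive ACL can expose data even when the bucket policy itself grants nothing, unless the policy explicitly denies that access. Disabling ACLs (the S3 Object Ownership “Bucket owner enforced” setting) removes this class of conflict.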

- Bucket Policies with Weak Conditions: Bucket policies that use weak conditions or do not adequately restrict access based on factors such as source IP addresses or user roles can allow unauthorized access.

- Lack of Least Privilege Principle: Granting users or services more access than they require increases the attack surface. For example, if a service only needs “read” access to a bucket, it should not be granted “write” or “delete” permissions.

- Ignoring Multi-Factor Authentication (MFA) Requirements: Bucket policies that do not require MFA for sensitive operations let anyone holding a stolen password or access key read, modify, or delete data.
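How ACLs and policies combine can be sketched as a simplified model of AWS-style authorization: an explicit Deny from any applicable source wins, otherwise an Allow from any source (IAM policy, bucket policy, or ACL) grants access, and if nothing matches the request is implicitly denied. The function and its "Allow"/"Deny"/None inputs are hypothetical; real evaluation also involves SCPs, permission boundaries, session policies, and cross-account rules.

```python
from typing import Iterable, Optional


def evaluate_access(decisions: Iterable[Optional[str]]) -> str:
    """Combine per-source decisions into a final result.

    Each element is the outcome of one source (an IAM policy, the bucket policy,
    or an ACL) for a single request: "Deny", "Allow", or None if it did not match.
    """
    decisions = list(decisions)
    if "Deny" in decisions:
        return "Deny"          # an explicit deny always takes precedence
    if "Allow" in decisions:
        return "Allow"         # any matching allow is sufficient
    return "ImplicitDeny"      # nothing matched: denied by default


# An ACL grants public read while the bucket policy is silent: access is allowed.
print(evaluate_access([None, None, "Allow"]))    # Allow
# The same ACL grant, but the bucket policy explicitly denies: access is denied.
print(evaluate_access([None, "Deny", "Allow"]))  # Deny
```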

Vulnerabilities Arising from Default Bucket Settings

Default settings for cloud storage buckets can introduce security vulnerabilities if they are not reviewed and adjusted. Providers choose defaults for convenience, and those defaults are not always the most secure option.

- Public Access by Default: Some cloud providers may have default settings that allow public access to newly created buckets. This is a major security risk if not addressed immediately.

- Lack of Encryption: If encryption is not enabled by default, data stored in the bucket may be vulnerable to unauthorized access if the storage provider’s systems are compromised.

- No Versioning Enabled: Without versioning, accidental deletions or data corruption can be irreversible. This can lead to data loss and make it difficult to recover from security incidents.

- Insufficient Logging and Monitoring: Default settings may not include adequate logging and monitoring, making it difficult to detect and respond to security incidents. Without proper logging, it’s challenging to identify who accessed data, when, and how.

- Default ACLs Granting Full Control: Default ACLs that grant full control to the bucket owner can be problematic if the owner’s account is compromised. An attacker with full control can then potentially modify or delete data.
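The default-settings risks above can be checked programmatically. The sketch below takes dictionaries shaped like the responses of the AWS SDK for Python (boto3) S3 calls get_public_access_block, get_bucket_encryption, get_bucket_versioning, and get_bucket_logging, so the logic can be tested without an AWS account. The function name and finding messages are hypothetical.

```python
def audit_default_settings(public_access_block: dict, encryption: dict,
                           versioning: dict, logging_status: dict) -> list:
    """Return human-readable findings for risky bucket defaults.

    Inputs are assumed to mirror boto3 S3 client responses; pass {} when the
    corresponding configuration does not exist.
    """
    findings = []

    pab = public_access_block.get("PublicAccessBlockConfiguration", {})
    flags = ("BlockPublicAcls", "IgnorePublicAcls",
             "BlockPublicPolicy", "RestrictPublicBuckets")
    missing = [flag for flag in flags if not pab.get(flag, False)]
    if missing:
        findings.append("Public access not fully blocked: " + ", ".join(missing))

    rules = encryption.get("ServerSideEncryptionConfiguration", {}).get("Rules", [])
    if not rules:
        findings.append("No default server-side encryption configured")

    # get_bucket_versioning omits "Status" for buckets that never had versioning.
    if versioning.get("Status") != "Enabled":
        findings.append("Versioning is not enabled")

    if "LoggingEnabled" not in logging_status:
        findings.append("Server access logging is disabled")

    return findings


sample_findings = audit_default_settings(
    public_access_block={"PublicAccessBlockConfiguration": {
        "BlockPublicAcls": True, "IgnorePublicAcls": True,
        "BlockPublicPolicy": True, "RestrictPublicBuckets": False}},
    encryption={"ServerSideEncryptionConfiguration": {"Rules": [
        {"ApplyServerSideEncryptionByDefault": {"SSEAlgorithm": "AES256"}}]}},
    versioning={},
    logging_status={},
)
print(sample_findings)
```

In a real audit these dictionaries would come from a boto3 client. Some calls raise an error instead of returning an empty response (for example, get_public_access_block raises a ClientError when no configuration exists), so the caller would translate that case to {}.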

Access Control Best Practices

Implementing robust access control is crucial for securing cloud storage buckets. This involves defining who can access your data, what actions they can perform, and under what conditions. A well-defined access control strategy minimizes the risk of unauthorized data access, modification, or deletion, thereby protecting your valuable information and ensuring compliance with relevant regulations. This section delves into the core principles and practical implementations of access control best practices.

Implementing Least Privilege Access

The principle of least privilege is fundamental to secure cloud storage. It dictates that users and applications should only be granted the minimum level of access necessary to perform their required tasks. This approach significantly reduces the attack surface and limits the potential damage from compromised credentials or misconfigurations.

Implementing least privilege access involves several key steps:

- Identify Roles and Responsibilities: Determine the different roles within your organization and the tasks each role performs related to cloud storage. For example, you might have roles like “Data Scientist,” “Data Engineer,” and “Auditor.”

- Define Access Requirements: For each role, identify the specific data they need to access and the actions they need to perform (e.g., read, write, delete). Consider the specific buckets and objects involved.

- Create IAM Policies: Develop IAM policies that grant only the necessary permissions to each role. Avoid using overly permissive policies like “AdministratorAccess” unless absolutely required.

- Assign Roles to Users and Groups: Assign users and groups to the appropriate roles based on their responsibilities. This simplifies management and ensures consistent access control.

- Regularly Review and Audit Access: Periodically review user access and audit logs to ensure that access permissions are still appropriate and that no unauthorized activity is occurring. Revoke unnecessary permissions promptly.
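As a sketch of the “Create IAM Policies” step, the function below builds an IAM policy document that grants a role only the S3 actions it needs on one bucket prefix. The role-to-action mapping, the role names (adapted from the examples above), the bucket name, and the prefix are hypothetical; adjust the actions to what each role actually does.

```python
import json

# Hypothetical mapping from organizational role to the minimum S3 actions it needs.
ROLE_ACTIONS = {
    "Auditor": ["s3:GetObject"],
    "DataScientist": ["s3:GetObject"],
    "DataEngineer": ["s3:GetObject", "s3:PutObject"],
}


def least_privilege_policy(role: str, bucket: str, prefix: str) -> dict:
    """Build an IAM policy limited to one role's actions on bucket/prefix/*."""
    actions = ROLE_ACTIONS[role]  # unknown roles raise KeyError: nothing is granted
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": f"{role}ObjectAccess",
                "Effect": "Allow",
                "Action": actions,
                "Resource": f"arn:aws:s3:::{bucket}/{prefix}/*",
            },
            {
                # Listing is a bucket-level action, so scope it with a prefix condition.
                "Sid": f"{role}ListPrefix",
                "Effect": "Allow",
                "Action": "s3:ListBucket",
                "Resource": f"arn:aws:s3:::{bucket}",
                "Condition": {"StringLike": {"s3:prefix": [f"{prefix}/*"]}},
            },
        ],
    }


print(json.dumps(least_privilege_policy("Auditor", "your-bucket-name", "reports"), indent=2))
```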

Designing a Robust IAM Strategy for Cloud Storage Buckets

A well-designed IAM strategy provides a framework for managing access to your cloud storage buckets. It should be flexible, scalable, and aligned with your organization’s security policies. This strategy should also consider automation to ensure that it can be easily maintained.

A robust IAM strategy includes the following components:

- Centralized Identity Management: Integrate your cloud provider’s IAM service with your existing identity provider (e.g., Active Directory, Okta) to manage user identities and access centrally. This allows you to reuse existing user accounts and enforce consistent authentication and authorization policies.

- Role-Based Access Control (RBAC): Implement RBAC to define roles and assign permissions to those roles, as described above. This simplifies access management and makes it easier to scale your security policies as your organization grows.

- Principle of Separation of Duties: Ensure that no single individual has excessive privileges. Implement separation of duties by assigning different roles to different users or groups to prevent conflicts of interest and reduce the risk of insider threats.

- Multi-Factor Authentication (MFA): Enforce MFA for all users with access to your cloud storage buckets. MFA adds an extra layer of security by requiring users to verify their identity using multiple factors, such as a password and a one-time code from a mobile device.

- Regular Policy Reviews and Audits: Establish a process for regularly reviewing and auditing your IAM policies. This should include identifying and removing unused or unnecessary permissions, as well as verifying that access controls are aligned with your organization’s security policies and compliance requirements.

- Automated Provisioning and De-provisioning: Automate the process of provisioning and de-provisioning user access to cloud storage buckets. This can be achieved through the use of Infrastructure as Code (IaC) tools or cloud-native services. Automation reduces the risk of human error and ensures that access is granted and revoked promptly.
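Separation of duties can be enforced mechanically by declaring which role combinations must never be held by the same person and checking assignments against that list, for example as a step in automated provisioning. The role names, conflict pairs, and function below are hypothetical.

```python
from itertools import combinations

# Hypothetical role pairs that must not be held by one person.
CONFLICTING_ROLES = {
    frozenset({"BucketAdmin", "Auditor"}),            # admins should not audit themselves
    frozenset({"KeyAdministrator", "DataEngineer"}),  # key custody separate from data access
}


def sod_violations(assignments: dict) -> dict:
    """Map each user to the conflicting role pairs they currently hold."""
    violations = {}
    for user, roles in assignments.items():
        pairs = [
            tuple(sorted(pair))
            for pair in map(frozenset, combinations(sorted(set(roles)), 2))
            if pair in CONFLICTING_ROLES
        ]
        if pairs:
            violations[user] = pairs
    return violations


print(sod_violations({
    "alice": ["DataEngineer", "KeyAdministrator"],
    "bob": ["Auditor"],
}))  # {'alice': [('DataEngineer', 'KeyAdministrator')]}
```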

Examples of Bucket Policies That Enforce Restricted Access

Bucket policies are a powerful tool for controlling access to your cloud storage buckets. They allow you to define who can access your data and under what conditions. The following examples use the Amazon S3 bucket policy language (JSON); other providers use different policy formats, and every example needs adjusting to your own bucket names, accounts, and network ranges before use.

Example 1: Restricting Access to Specific IP Addresses

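This policy denies object reads to any request that does not originate from an approved network: the 192.0.2.0/24 range or the single address 203.0.113.42. Because it uses an explicit Deny, it overrides any Allow granted elsewhere; an Allow statement with "Principal": "*" would instead grant anonymous read access to everyone in those ranges. aws:SourceIp does not reflect the caller’s public address for requests that arrive through a VPC endpoint, so test such a policy carefully before applying it.

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "RestrictAccessByIP",
      "Effect": "Deny",
      "Principal": "*",
      "Action": "s3:GetObject",
      "Resource": "arn:aws:s3:::your-bucket-name/*",
      "Condition": {
        "NotIpAddress": {
          "aws:SourceIp": [
            "192.0.2.0/24",
            "203.0.113.42/32"
          ]
        }
      }
    }
  ]
}
```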

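To see how the aws:SourceIp condition treats the addresses listed in this example (192.0.2.0/24 and 203.0.113.42), the sketch below uses Python’s standard ipaddress module to test whether a request’s source address falls inside any listed range. An IpAddress condition matches when this returns True, and NotIpAddress matches when it returns False. This only mimics the matching logic locally; it is not how AWS evaluates policies internally.

```python
import ipaddress

# The ranges from the example policy; a bare address is treated as a /32.
ALLOWED_SOURCES = ["192.0.2.0/24", "203.0.113.42"]


def source_ip_matches(source_ip: str, cidrs: list) -> bool:
    """Return True if source_ip falls inside any of the CIDR ranges."""
    address = ipaddress.ip_address(source_ip)
    # Membership across IP versions (IPv6 address, IPv4 network) is simply False.
    return any(address in ipaddress.ip_network(cidr, strict=False) for cidr in cidrs)


for ip in ["192.0.2.17", "203.0.113.42", "198.51.100.7"]:
    print(ip, source_ip_matches(ip, ALLOWED_SOURCES))
# 192.0.2.17 True
# 203.0.113.42 True
# 198.51.100.7 False
```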
Example 2: Requiring Encryption at Rest

This policy denies any upload (s3:PutObject) that does not request server-side encryption with Amazon S3 managed keys (SSE-S3, "AES256"). To require SSE-KMS instead, change the value to "aws:kms".

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "RequireEncryption",
      "Effect": "Deny",
      "Principal": "*",
      "Action": "s3:PutObject",
      "Resource": "arn:aws:s3:::your-bucket-name/*",
      "Condition": {
        "StringNotEquals": {
          "s3:x-amz-server-side-encryption": "AES256"
        }
      }
    }
  ]
}
```

Example 3: Limiting Access to Specific Users or Groups

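This policy grants object read and write access to a specific IAM user and to principals that assume a specific IAM role, identified by their ARNs. IAM groups cannot be named as principals in a bucket policy, so grant group-based access through roles or through identity-based policies attached to the group. On its own, this Allow does not remove access that other principals already have through their IAM policies.

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "AllowSpecificUsers",
      "Effect": "Allow",
      "Principal": {
        "AWS": [
          "arn:aws:iam::123456789012:user/username1",
          "arn:aws:iam::123456789012:role/DataScientistRole"
        ]
      },
      "Action": ["s3:GetObject", "s3:PutObject"],
      "Resource": "arn:aws:s3:::your-bucket-name/*"
    }
  ]
}
```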

Example 4: Implementing MFA Authentication

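This policy denies every S3 action on the bucket and its objects unless the request was authenticated with MFA. It uses BoolIfExists rather than Bool because requests signed with long-term access keys do not include the aws:MultiFactorAuthPresent key at all, and a plain Bool condition would not match (and therefore would not deny) them. Applications and services that cannot present MFA are blocked too, so scope the statement to sensitive prefixes or actions where necessary.

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "DenyAllButMFA",
      "Effect": "Deny",
      "Principal": "*",
      "Action": "s3:*",
      "Resource": [
        "arn:aws:s3:::your-bucket-name",
        "arn:aws:s3:::your-bucket-name/*"
      ],
      "Condition": {
        "BoolIfExists": {
          "aws:MultiFactorAuthPresent": "false"
        }
      }
    }
  ]
}
```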

These examples illustrate some common use cases for bucket policies.

Remember to tailor these policies to your specific requirements and regularly review them to ensure they remain effective.

Encryption and Data Protection Measures

Protecting data stored in cloud storage buckets requires robust encryption and data protection measures. Encryption ensures that data is unreadable without the proper decryption key, safeguarding it from unauthorized access even if the storage infrastructure is compromised. Implementing these measures is critical for maintaining data confidentiality, integrity, and compliance with regulatory requirements.

Enabling Server-Side Encryption for Data at Rest

Server-side encryption (SSE) automatically encrypts objects before they are stored in the cloud storage bucket and decrypts them when they are accessed. This process is transparent to the user; the data is encrypted and decrypted by the cloud provider. Enabling SSE is a fundamental step in securing data at rest.

To enable server-side encryption, the following steps are generally involved:

- Select an Encryption Type: Cloud providers offer different server-side encryption options. Common choices include:

- SSE-S3: The cloud provider manages the encryption keys. This is the simplest option.

- SSE-KMS: The cloud provider uses a Key Management Service (KMS) to manage the encryption keys. This provides more control over the keys.

- SSE-C: You provide the encryption keys. This provides the most control, but also requires managing the keys yourself.

- Configure Bucket Settings: In the cloud provider’s console, navigate to the bucket settings. There should be an option to enable encryption.

- Choose Encryption Type: Select the desired server-side encryption type. If using SSE-KMS, specify the KMS key to use.

- Apply to New Objects: The encryption settings are typically applied to new objects uploaded to the bucket after the settings are enabled.

- Consider Existing Objects: If you want to encrypt existing objects, you might need to re-upload them or use a specific tool provided by the cloud provider.

For example, in Amazon S3 you can enable SSE-S3 under the “Default encryption” option in the bucket properties by choosing “Amazon S3 managed keys (SSE-S3).” For SSE-KMS, choose “AWS KMS managed keys (SSE-KMS)” and select or create a KMS key. Google Cloud Storage always encrypts data at rest with Google-managed keys; to use a key you control, set a default Cloud KMS key (a customer-managed encryption key, or CMEK) on the bucket when you create it or later in its configuration.

The default encryption method is often AES-256.
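The same default-encryption setting can be applied with the AWS SDK for Python (boto3) through put_bucket_encryption. The sketch below only builds the ServerSideEncryptionConfiguration argument so that it runs without credentials; the helper name and the KMS key ARN are placeholders, and the commented-out call shows where the real SDK request would go.

```python
from typing import Optional


def default_encryption_config(kms_key_arn: Optional[str] = None) -> dict:
    """Build a ServerSideEncryptionConfiguration for SSE-S3 or SSE-KMS."""
    if kms_key_arn is None:
        default = {"SSEAlgorithm": "AES256"}  # SSE-S3: provider-managed keys
        return {"Rules": [{"ApplyServerSideEncryptionByDefault": default}]}
    default = {"SSEAlgorithm": "aws:kms", "KMSMasterKeyID": kms_key_arn}  # SSE-KMS
    # S3 Bucket Keys reduce the number of requests S3 makes to KMS.
    return {"Rules": [{"ApplyServerSideEncryptionByDefault": default,
                       "BucketKeyEnabled": True}]}


config = default_encryption_config(
    "arn:aws:kms:us-east-1:123456789012:key/your-key-id")  # placeholder ARN
print(config)

# With credentials configured, the real call would be:
# import boto3
# boto3.client("s3").put_bucket_encryption(
#     Bucket="your-bucket-name", ServerSideEncryptionConfiguration=config)
```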

Configuring Client-Side Encryption

Client-side encryption involves encrypting data before it is uploaded to the cloud storage bucket. This means the data is encrypted on the client-side (e.g., a user’s computer or a server) and remains encrypted throughout its lifecycle in the cloud. This offers an additional layer of security, as the cloud provider never has access to the unencrypted data.

To configure client-side encryption, consider these steps:

- Choose an Encryption Library: Select a cryptographic library compatible with your programming language or platform. Popular choices include:

- For Python: cryptography

- For Java: Bouncy Castle or the Java Cryptography Extension (JCE)

- For JavaScript: crypto-js (no longer actively maintained), or the built-in Web Crypto API and the Node.js crypto module

- Generate or Obtain Encryption Keys: You need to generate or obtain encryption keys. It’s crucial to manage these keys securely. Consider using a key management service (KMS).

- Encrypt Data: Use the chosen library and encryption key to encrypt the data before uploading it to the cloud storage bucket. The encryption algorithm is typically AES (Advanced Encryption Standard) with a key size of 256 bits for strong security.

- Upload Encrypted Data: Upload the encrypted data to the cloud storage bucket.

- Decrypt Data: When retrieving the data, download it and use the same encryption key and library to decrypt it.

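For example, with the Python cryptography library, first install the package with pip:

pip install cryptography

Then you can encrypt a file as shown below. Fernet, the recipe used here, combines AES-128 in CBC mode with an HMAC-SHA256 authentication tag, so it is an authenticated scheme but not an AES-256 one.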

```python
from cryptography.fernet import Fernet

# Generate a key; store it securely (e.g. in a KMS), never alongside the data
key = Fernet.generate_key()
f = Fernet(key)

# Read the plaintext file
with open("my_file.txt", "rb") as file:
    original = file.read()

# Encrypt the contents
encrypted = f.encrypt(original)

# Write the encrypted file; this is what gets uploaded to the bucket
with open("my_file.txt.encrypted", "wb") as file:
    file.write(encrypted)

# To decrypt after downloading, use the same key
decrypted = f.decrypt(encrypted)
assert decrypted == original
```

This example demonstrates the fundamental steps. Remember to handle the key securely, for instance, by storing it in a KMS.
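Fernet is convenient, but it uses 128-bit AES keys. If your policy calls for AES-256 as described in the steps above, the same library’s AESGCM primitive provides authenticated AES-256 encryption. The sketch below stores the random nonce in front of the ciphertext, a common convention assumed here rather than a requirement of any storage provider; the helper names are hypothetical.

```python
import os

from cryptography.hazmat.primitives.ciphers.aead import AESGCM

NONCE_SIZE = 12  # 96-bit nonce, the recommended size for AES-GCM


def encrypt_for_upload(key: bytes, plaintext: bytes, object_key: str) -> bytes:
    """Encrypt with AES-GCM; the object key is bound in as associated data."""
    nonce = os.urandom(NONCE_SIZE)  # must never repeat for the same key
    ciphertext = AESGCM(key).encrypt(nonce, plaintext, object_key.encode())
    return nonce + ciphertext


def decrypt_after_download(key: bytes, blob: bytes, object_key: str) -> bytes:
    """Split off the nonce and decrypt; raises InvalidTag if anything was altered."""
    nonce, ciphertext = blob[:NONCE_SIZE], blob[NONCE_SIZE:]
    return AESGCM(key).decrypt(nonce, ciphertext, object_key.encode())


key = AESGCM.generate_key(bit_length=256)  # in practice, obtain or wrap this via a KMS
blob = encrypt_for_upload(key, b"quarterly report", "reports/q1.txt")
print(decrypt_after_download(key, blob, "reports/q1.txt"))  # b'quarterly report'
```

Binding the object key as associated data means a ciphertext copied to a different object name fails to decrypt, which catches swapped objects; drop the associated data if objects are renamed routinely.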

Demonstrating the Use of Key Management Services (KMS) for Encryption Key Protection

Key Management Services (KMS) provide a centralized and secure way to manage encryption keys. KMS solutions are designed to protect the keys themselves, allowing you to encrypt data without directly handling the keys. Using a KMS significantly enhances security compared to managing keys manually.

The key features and steps for using a KMS are:

- Key Generation: The KMS generates, stores, and manages the encryption keys. The keys are typically encrypted at rest and are protected by hardware security modules (HSMs).

- Key Storage: The KMS securely stores the encryption keys. Access to the keys is strictly controlled and audited.

- Key Rotation: KMS allows for automatic key rotation, which involves regularly replacing encryption keys to minimize the impact of a potential key compromise. This is a best practice.

- Access Control: You define access control policies to determine who can use the keys and what operations they can perform (e.g., encrypt, decrypt, generate).

- Integration with Cloud Storage: The KMS integrates with cloud storage services. You configure the cloud storage bucket to use a KMS key for encryption.

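- Encryption and Decryption Operations: Services such as Amazon S3 use envelope encryption. When data is uploaded, the storage service asks the KMS for a data key, encrypts the object with that data key, and stores a copy of the data key encrypted under the KMS key alongside the object. When data is downloaded, the service asks the KMS to decrypt that stored data key and uses it to decrypt the object. The KMS key itself never leaves the KMS.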

Consider the following scenario: A company uses AWS KMS to protect the data stored in an S3 bucket. They create a KMS key and configure the S3 bucket to use this key for server-side encryption (SSE-KMS). The company then defines IAM (Identity and Access Management) policies that grant specific users and roles permission to use the KMS key for encrypting and decrypting objects in the bucket.

When a user uploads a file, S3 requests a data key from KMS, encrypts the file with it, and stores the KMS-encrypted data key with the object. When another user downloads the file, S3 asks KMS to decrypt that data key, which succeeds only if the user’s IAM permissions allow decryption with the KMS key. The KMS keeps the key securely stored and managed, and access is controlled through the IAM policies. With automatic key rotation, KMS generates new key material while retaining the old material for decryption, so existing objects do not need to be re-encrypted, which keeps rotation manageable.
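The envelope-encryption pattern behind SSE-KMS can be sketched locally: a fresh data key encrypts each object, and a master key, which in a real deployment never leaves the KMS, encrypts (wraps) only the data key. Here a locally generated AES key stands in for the KMS key purely for illustration; with AWS KMS, the wrap and unwrap steps correspond to GenerateDataKey and Decrypt requests. The function names are hypothetical.

```python
import os

from cryptography.hazmat.primitives.ciphers.aead import AESGCM


def _seal(key: bytes, data: bytes) -> bytes:
    """AES-GCM encrypt with a random nonce prepended."""
    nonce = os.urandom(12)
    return nonce + AESGCM(key).encrypt(nonce, data, None)


def _open(key: bytes, blob: bytes) -> bytes:
    """Reverse of _seal."""
    return AESGCM(key).decrypt(blob[:12], blob[12:], None)


def envelope_encrypt(master_key: bytes, plaintext: bytes) -> dict:
    """Encrypt data with a new data key and keep only the wrapped data key."""
    data_key = AESGCM.generate_key(bit_length=256)
    return {
        "wrapped_key": _seal(master_key, data_key),  # the KMS performs this step
        "ciphertext": _seal(data_key, plaintext),
    }


def envelope_decrypt(master_key: bytes, record: dict) -> bytes:
    """Unwrap the data key with the master key, then decrypt the data."""
    data_key = _open(master_key, record["wrapped_key"])  # the KMS performs this step
    return _open(data_key, record["ciphertext"])


master_key = AESGCM.generate_key(bit_length=256)  # stand-in for a KMS key
record = envelope_encrypt(master_key, b"customer-data.csv contents")
print(envelope_decrypt(master_key, record))  # b'customer-data.csv contents'
```

Only the small wrapped key depends on the master key, which is why a KMS can rotate key material while keeping older versions available to unwrap existing data keys.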

Monitoring and Auditing Cloud Storage Buckets

Effective monitoring and auditing are crucial for maintaining the security posture of your cloud storage buckets. By continuously tracking activities and configurations, you can promptly identify and address potential vulnerabilities, ensuring data integrity and compliance. This section details procedures for setting up robust monitoring and auditing practices.

Setting Up Logging and Monitoring for Bucket Activities

Implementing comprehensive logging and monitoring is the cornerstone of a proactive security strategy. This involves capturing detailed information about all bucket operations and establishing real-time alerts for suspicious activities.

To effectively set up logging and monitoring, follow these steps:

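- Enable Server Access Logging: Activate server access logging within your cloud storage service. This feature records requests made to your buckets, including details such as the requester’s identity, the time of the request, the operation performed, the source IP address, and the resources accessed. Some services deliver these records on a best-effort basis (Amazon S3 server access logs, for example), so pair them with an audit trail such as AWS CloudTrail data events where completeness matters.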

- Configure Log Storage: Designate a secure and centralized location for storing your logs. This could be another bucket specifically for logging, or a dedicated logging service. Ensure this storage location has appropriate access controls to prevent unauthorized access or modification of log data.

- Define Monitoring Metrics: Identify key performance indicators (KPIs) to monitor. These metrics can include the number of requests, the types of operations performed (e.g., uploads, downloads, deletions), the amount of data transferred, and the frequency of access attempts.

- Set Up Alerting Rules: Establish alerting rules based on the defined KPIs. For instance, create alerts for unusual spikes in download activity, unauthorized access attempts, or suspicious deletion patterns. Integrate these alerts with your incident response system to ensure prompt notification of security events.

- Integrate with Security Information and Event Management (SIEM) Systems: Integrate your cloud storage logs with a SIEM system. This allows for centralized log aggregation, analysis, and correlation with other security data sources, providing a holistic view of your security posture.
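An alerting rule such as “too many downloads in a short window” reduces to a sliding-window count. The sketch below is a minimal in-memory version with a hypothetical threshold and window, and it assumes events arrive in time order; in practice the same logic usually lives in the provider’s monitoring service or the SIEM, fed by the access logs.

```python
from collections import deque
from datetime import datetime, timedelta


class DownloadSpikeAlert:
    """Fire when more than `threshold` downloads occur within `window`."""

    def __init__(self, threshold: int, window: timedelta):
        self.threshold = threshold
        self.window = window
        self.events = deque()  # timestamps of recent downloads, oldest first

    def record(self, timestamp: datetime) -> bool:
        """Record one download (in time order); return True if the alert fires."""
        self.events.append(timestamp)
        # Drop events that have fallen out of the sliding window.
        while self.events and timestamp - self.events[0] > self.window:
            self.events.popleft()
        return len(self.events) > self.threshold


alert = DownloadSpikeAlert(threshold=3, window=timedelta(minutes=1))
start = datetime(2024, 1, 1, 12, 0, 0)
results = [alert.record(start + timedelta(seconds=10 * i)) for i in range(5)]
print(results)  # [False, False, False, True, True]
```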

Analyzing Logs to Detect Suspicious Access Patterns

Analyzing logs is critical for uncovering malicious activities and security breaches. This involves examining log data for unusual patterns, unauthorized access attempts, and other indicators of compromise.

To analyze logs effectively, consider these techniques:

- Identify Anomalies: Utilize log analysis tools to detect anomalies in access patterns. These tools can use machine learning algorithms to establish a baseline of normal activity and flag deviations from this baseline. For example, a sudden increase in data downloads from an unfamiliar IP address could indicate a potential breach.

- Investigate Unauthorized Access Attempts: Scrutinize logs for failed access attempts, particularly those originating from unusual locations or IP addresses. This may signify brute-force attacks or attempts to exploit misconfigured access controls.

- Monitor for Data Exfiltration: Analyze logs for patterns indicative of data exfiltration, such as large-scale downloads or uploads to unfamiliar locations. Correlate these activities with user identities and access permissions to identify potential data breaches.

- Review User Activity: Regularly review user activity logs to identify unusual behavior, such as access to sensitive data by users who do not typically require it. This can help uncover insider threats or compromised accounts.

- Track Configuration Changes: Monitor logs for any changes to bucket configurations, such as permission modifications or access control list (ACL) updates. Unapproved changes may indicate a malicious attempt to compromise the security of your buckets.
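As a starting point for the analysis techniques above, the sketch below parses the leading fields of Amazon S3 server access log records (bucket owner, bucket, time, remote IP, requester, request ID, operation, key, request URI, HTTP status) and counts denied (403) requests per source IP. Only these leading fields are parsed, the threshold is arbitrary, and the sample lines are made-up records in that format; a log analytics service or SIEM is more robust for production use.

```python
import re
from collections import Counter

# Leading fields of an S3 server access log record; remaining fields are ignored.
LOG_PATTERN = re.compile(
    r'^(?P<owner>\S+) (?P<bucket>\S+) \[(?P<time>[^\]]+)\] (?P<remote_ip>\S+) '
    r'(?P<requester>\S+) (?P<request_id>\S+) (?P<operation>\S+) (?P<key>\S+) '
    r'"(?P<request_uri>[^"]*)" (?P<status>\d{3}|-)'
)


def denied_requests_by_ip(lines, threshold: int = 2) -> dict:
    """Return source IPs with at least `threshold` 403 responses."""
    counts = Counter()
    for line in lines:
        match = LOG_PATTERN.match(line)
        if match and match["status"] == "403":
            counts[match["remote_ip"]] += 1
    return {ip: n for ip, n in counts.items() if n >= threshold}


sample = [
    'ownerid your-bucket-name [06/Feb/2024:00:00:38 +0000] 198.51.100.7 - '
    'REQ1 REST.GET.OBJECT secret.csv "GET /secret.csv HTTP/1.1" 403 AccessDenied',
    'ownerid your-bucket-name [06/Feb/2024:00:00:39 +0000] 198.51.100.7 - '
    'REQ2 REST.GET.OBJECT payroll.csv "GET /payroll.csv HTTP/1.1" 403 AccessDenied',
    'ownerid your-bucket-name [06/Feb/2024:00:01:02 +0000] 192.0.2.10 '
    'arn:aws:iam::123456789012:user/alice '
    'REQ3 REST.GET.OBJECT report.pdf "GET /report.pdf HTTP/1.1" 200 -',
]
print(denied_requests_by_ip(sample))  # {'198.51.100.7': 2}
```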

Automating Security Audits of Cloud Storage Configurations

Automating security audits is essential for ensuring consistent and efficient security assessments. This involves using tools and scripts to periodically evaluate your bucket configurations against established security best practices and compliance requirements.

Here’s how to automate security audits:

- Utilize Cloud Provider Security Tools: Leverage the built-in security assessment tools provided by your cloud provider. These tools can automatically scan your buckets for common misconfigurations, such as open access permissions, missing encryption, and inadequate access controls.

- Implement Infrastructure as Code (IaC): Use IaC tools to define your bucket configurations in code. This allows you to version control your configurations, automate the deployment of secure configurations, and easily replicate them across multiple environments.

- Develop Custom Scripts: Create custom scripts or use third-party tools to automate security checks. These scripts can be designed to assess specific security controls, such as encryption settings, access control lists, and data retention policies.

- Schedule Regular Audits: Schedule regular automated audits to ensure that your bucket configurations remain compliant with security policies and best practices. This can be done on a daily, weekly, or monthly basis, depending on your organization’s security requirements.

- Generate Audit Reports: Generate audit reports that document the results of your automated security checks. These reports should include a summary of findings, recommendations for remediation, and evidence of compliance with security standards.
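Combining the IaC and audit-report steps, an automated audit can compare each bucket’s live settings with the baseline declared in code and report any drift. In the sketch below the settings are flattened into simple dictionaries; the keys, bucket names, and report format are hypothetical, and collecting the live values (for example with the provider’s SDK) is left out so the logic runs offline.

```python
import json

# Hypothetical baseline, as it might be declared in an IaC repository.
BASELINE = {
    "block_public_access": True,
    "default_encryption": "aws:kms",
    "versioning": "Enabled",
    "access_logging": True,
}


def drift_report(live_configs: dict, baseline: dict = BASELINE) -> dict:
    """Return {bucket: {setting: {"expected": ..., "actual": ...}}} for drifted settings."""
    report = {}
    for bucket, live in live_configs.items():
        drift = {
            setting: {"expected": expected, "actual": live.get(setting)}
            for setting, expected in baseline.items()
            if live.get(setting) != expected
        }
        if drift:
            report[bucket] = drift
    return report


live = {
    "app-assets": {"block_public_access": True, "default_encryption": "aws:kms",
                   "versioning": "Enabled", "access_logging": True},
    "legacy-exports": {"block_public_access": False, "default_encryption": "AES256",
                       "versioning": "Suspended", "access_logging": True},
}
print(json.dumps(drift_report(live), indent=2))
```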

Implementing Versioning and Data Backup Strategies

Implementing robust versioning and data backup strategies is crucial for ensuring the integrity and availability of data stored in cloud storage buckets. These practices safeguard against data loss due to accidental deletion, corruption, or malicious attacks. A well-defined plan minimizes downtime and facilitates swift recovery in the event of a disaster.

Benefits of Enabling Bucket Versioning

Enabling versioning in cloud storage buckets provides several key advantages in data management and protection. It creates a history of every object stored in the bucket, allowing for easy restoration of previous versions.

- Data Recovery: Versioning facilitates the recovery of data from accidental deletions or overwrites. When an object is deleted, a “delete marker” is created, and the previous versions of the object are preserved. This allows for the restoration of a previous version of the object.

- Protection Against Corruption: Versioning provides a way to revert to a known good version of an object if data corruption occurs. If a file becomes corrupted, you can simply retrieve a previous, uncorrupted version.

- Auditing and Compliance: Versioning supports auditing and compliance requirements by maintaining a complete history of object changes. This provides valuable insights into data modifications and access patterns.

- Rollback Capabilities: Versioning allows for easy rollbacks to previous states of data. This is especially useful in scenarios where a software update or data migration leads to unexpected issues.

- Data Retention Policies: Versioning can be combined with data retention policies to automatically manage the lifecycle of object versions. This ensures that older versions are automatically deleted based on predefined rules, optimizing storage costs.
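The delete-marker behaviour described above can be modelled in a few lines. The class below is a hypothetical in-memory illustration of versioned-bucket semantics, not any provider’s API: every write appends a version, a simple delete appends a delete marker, and removing that marker makes the previous version current again.

```python
import itertools

DELETE_MARKER = object()  # sentinel standing in for a delete marker


class VersionedBucket:
    """Toy in-memory model of a versioned bucket (illustration only)."""

    def __init__(self):
        self._versions = {}             # key -> list of (version_id, content)
        self._ids = itertools.count(1)  # monotonically increasing version IDs

    def put(self, key, content):
        """Every write adds a new version; earlier versions are kept."""
        version_id = next(self._ids)
        self._versions.setdefault(key, []).append((version_id, content))
        return version_id

    def delete(self, key):
        """A simple delete only adds a delete marker on top of the history."""
        return self.put(key, DELETE_MARKER)

    def get(self, key):
        """Return the current version, or raise KeyError if it is a delete marker."""
        versions = self._versions.get(key, [])
        if not versions or versions[-1][1] is DELETE_MARKER:
            raise KeyError(key)
        return versions[-1][1]

    def restore(self, key):
        """Undo a delete by removing the latest delete marker."""
        versions = self._versions.get(key, [])
        if versions and versions[-1][1] is DELETE_MARKER:
            versions.pop()


bucket = VersionedBucket()
bucket.put("config.json", b"v1")
bucket.put("config.json", b"v2")
bucket.delete("config.json")      # the object now appears deleted
bucket.restore("config.json")
print(bucket.get("config.json"))  # b'v2'
```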

Methods for Creating and Managing Data Backups

Creating and managing data backups is a critical component of a comprehensive cloud storage security strategy. Several methods can be employed to ensure data resilience and availability. These methods vary in complexity and cost, depending on the specific requirements of the organization.

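- Replication: Data can be replicated to another bucket, either within the same region or in a different region. This provides redundancy and protects against regional outages. Cross-region replication is typically asynchronous, so the replica can lag behind the source; Amazon S3, for example, requires versioning on both buckets and offers Replication Time Control, which targets replicating most objects within 15 minutes. Replication is not a backup on its own, because accidental overwrites and corrupted writes are copied to the replica as well, so pair it with versioning.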

- Object Lifecycle Management: Object lifecycle management rules can be configured to automatically archive older versions of objects to a lower-cost storage tier. This allows for long-term data retention at a reduced cost while still maintaining access to historical data. For example, an object can be moved to a cold storage tier after a certain period.

- Third-Party Backup Solutions: Various third-party backup solutions offer advanced features such as incremental backups, data deduplication, and encryption. These solutions often integrate seamlessly with cloud storage providers and provide centralized management capabilities.

- Snapshotting: Some services can create point-in-time copies of stored data. Azure Blob Storage, for example, supports blob snapshots, and AWS Backup can create point-in-time backups of Amazon S3 buckets. Such copies support disaster recovery and restoring data to a known moment; whether they cost less than full replication depends on how much data changes between copies and on the provider's pricing.

- Manual Backups: While not recommended as a primary method, manual backups can be performed by downloading data from the bucket and storing it in a separate location. This method is time-consuming and prone to errors and should only be used as a last resort.
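As a sketch of the lifecycle approach, the Python function below builds an Amazon S3 lifecycle configuration that moves noncurrent versions to an archive tier and later expires them. The rule ID, the day counts, and the `GLACIER` storage class are illustrative assumptions. The dictionary follows the shape that boto3's `put_bucket_lifecycle_configuration` accepts, but verify field names and minimum storage durations against the current S3 documentation before applying it.

```python
import json


def noncurrent_version_lifecycle(archive_after_days: int,
                                 expire_after_days: int,
                                 storage_class: str = "GLACIER") -> dict:
    """Build an S3 lifecycle config for old object versions (illustrative values)."""
    # Sanity check of our own: expire versions only after they have been archived.
    if not 0 < archive_after_days < expire_after_days:
        raise ValueError("need 0 < archive_after_days < expire_after_days")
    return {
        "Rules": [
            {
                "ID": "archive-then-expire-noncurrent-versions",  # hypothetical name
                "Filter": {"Prefix": ""},  # empty prefix: every object in the bucket
                "Status": "Enabled",
                # Days are counted from when a version stops being the current one.
                "NoncurrentVersionTransitions": [
                    {"NoncurrentDays": archive_after_days,
                     "StorageClass": storage_class}
                ],
                "NoncurrentVersionExpiration": {"NoncurrentDays": expire_after_days},
            }
        ]
    }


config = noncurrent_version_lifecycle(archive_after_days=30, expire_after_days=365)
print(json.dumps(config, indent=2))

# Applying it requires boto3 and AWS credentials (not run here):
#   import boto3
#   boto3.client("s3").put_bucket_lifecycle_configuration(
#       Bucket="my-secure-bucket", LifecycleConfiguration=config)
```

Building the configuration in code, rather than clicking it together in a console, lets it be reviewed and reapplied like any other change.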

Designing a Disaster Recovery Plan that Incorporates Cloud Storage Buckets

A well-defined disaster recovery (DR) plan is essential for minimizing downtime and data loss in the event of a disaster. When cloud storage buckets are used, the DR plan must incorporate specific considerations related to data availability, recovery time objectives (RTO), and recovery point objectives (RPO).

- Define RTO and RPO: The first step is to define the RTO and RPO for the data stored in the cloud storage buckets. The RTO is the maximum acceptable downtime after a disaster, while the RPO is the maximum acceptable data loss. These objectives will determine the backup and recovery strategies.

- Choose a Recovery Strategy: Several recovery strategies can be employed, including failover to a secondary region, restoring from backups, or a combination of both. The chosen strategy should align with the defined RTO and RPO.

- Implement Data Replication: For critical data, consider replicating data to a secondary region using features like cross-region replication. This ensures that data is available even if the primary region experiences an outage.

- Test the Recovery Plan Regularly: The DR plan should be tested regularly to ensure that it works as expected. This includes simulating disaster scenarios and verifying that data can be recovered within the defined RTO and RPO. Regular testing also helps identify and address any gaps in the plan.

- Automate Recovery Processes: Automate as much of the recovery process as possible. This includes automating data replication, backup and restore operations, and failover procedures. Automation reduces the time required for recovery and minimizes the risk of human error.

- Document the DR Plan: A comprehensive DR plan should be documented, including detailed procedures, contact information, and responsibilities. The documentation should be readily accessible to all relevant personnel.

- Monitor and Alert: Implement monitoring and alerting to detect potential issues that could impact data availability. This includes monitoring the health of cloud storage buckets, replication status, and backup jobs. Set up alerts to notify the appropriate personnel in case of any issues.
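The RPO and RTO arithmetic behind these choices can be made explicit. The Python sketch below estimates the worst-case data-loss window of a periodic backup schedule and filters recovery options against the targets. It assumes each backup captures the bucket as of its start time, so a failure just before a backup finishes leaves only the previous backup, which is then one interval plus one backup duration old. The option names and all numbers are hypothetical; substitute figures measured in your own recovery tests.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class RecoveryOption:
    name: str
    worst_case_loss_min: float  # data written in this window may be lost (compare to RPO)
    recovery_time_min: float    # time to restore or fail over (compare to RTO)


def periodic_backup_loss(interval_min: float, duration_min: float) -> float:
    # Backups start every interval and capture state as of their start time.
    # A failure just before backup N+1 completes leaves only backup N, whose
    # snapshot is interval + duration minutes old at that moment.
    return interval_min + duration_min


def options_meeting(options: List[RecoveryOption],
                    rpo_min: float, rto_min: float) -> List[str]:
    return [o.name for o in options
            if o.worst_case_loss_min <= rpo_min and o.recovery_time_min <= rto_min]


options = [
    # Hypothetical nightly backup that takes 60 minutes and restores in about 4 hours.
    RecoveryOption("nightly backup", periodic_backup_loss(24 * 60, 60), 240),
    # Hypothetical async cross-region replica lagging up to 15 minutes, 30-minute failover.
    RecoveryOption("cross-region replication", 15, 30),
]

print(options_meeting(options, rpo_min=60, rto_min=120))  # ['cross-region replication']
```

With a one-hour RPO, the nightly backup fails on data loss alone (1,500 minutes), which is the kind of gap regular DR testing should surface before an incident does.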

Securing Data Transfer and Network Access

Securing data transfer and network access is crucial for protecting cloud storage buckets from unauthorized access and data breaches. Implementing robust security measures ensures that data is transmitted securely and that only authorized users and networks can interact with the storage buckets. This section details essential strategies for achieving this goal.

Configuring Secure Data Transfer Protocols (HTTPS)

Using HTTPS (Hypertext Transfer Protocol Secure) is fundamental for securing data transfer. It encrypts the communication between the client (e.g., a web browser or application) and the cloud storage service, protecting data in transit from eavesdropping and tampering.

To configure HTTPS for data transfer:

- Enforce HTTPS for Bucket Access: Configure the bucket to reject any request that does not use HTTPS. In Amazon S3, for example, this is done with a bucket policy that denies requests where the `aws:SecureTransport` condition key is `false`.

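- Configure TLS/SSL Certificates: The provider's default endpoints already present certificates issued by a trusted Certificate Authority (CA), so no certificate setup is needed to use them. If you serve bucket content under your own domain, obtain a certificate from a trusted CA and attach it to the CDN or load balancer in front of the bucket (for example, Amazon CloudFront in front of S3). The certificate lets clients verify the server's identity, which helps prevent man-in-the-middle attacks.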

- Use HTTPS Endpoints: When accessing the bucket, always use the HTTPS endpoint (e.g., `https://your-bucket-name.s3.amazonaws.com`) to ensure that data is transmitted securely.

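- Implement Redirects: Where browsers reach bucket content through a CDN, redirect HTTP requests to HTTPS at that layer (CloudFront, for example, offers a “Redirect HTTP to HTTPS” viewer protocol policy). For API and application clients, reject HTTP instead of redirecting: by the time a redirect is returned, the original request has already crossed the network unencrypted.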

- Regularly Update TLS/SSL Configurations: Require TLS 1.2 or later and strong cipher suites wherever you control the configuration (CDN, load balancer, and client libraries), and renew custom-domain certificates before they expire.
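For Amazon S3, HTTPS enforcement can be expressed as a bucket policy. The Python sketch below builds one: the first statement denies any request made without TLS (`aws:SecureTransport` is `false`), and the second denies requests negotiated with a TLS version below a minimum, using the `s3:TlsVersion` condition key. The bucket name is a placeholder. Review the policy against AWS's documentation before applying it, since a Deny with `Principal: "*"` also applies to your own administrators.

```python
import json


def https_only_policy(bucket: str, min_tls: str = "1.2") -> dict:
    """Bucket policy that rejects plain-HTTP and old-TLS requests (sketch)."""
    resources = [f"arn:aws:s3:::{bucket}", f"arn:aws:s3:::{bucket}/*"]
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "DenyInsecureTransport",
                "Effect": "Deny",
                "Principal": "*",
                "Action": "s3:*",
                "Resource": resources,
                # aws:SecureTransport is "false" for plain-HTTP requests.
                "Condition": {"Bool": {"aws:SecureTransport": "false"}},
            },
            {
                "Sid": "DenyOutdatedTls",
                "Effect": "Deny",
                "Principal": "*",
                "Action": "s3:*",
                "Resource": resources,
                # Deny connections negotiated below the minimum TLS version.
                "Condition": {"NumericLessThan": {"s3:TlsVersion": min_tls}},
            },
        ],
    }


policy = https_only_policy("my-secure-bucket")
print(json.dumps(policy, indent=2))

# Applying it requires boto3 and AWS credentials (not run here):
#   import boto3
#   boto3.client("s3").put_bucket_policy(Bucket="my-secure-bucket",
#                                        Policy=json.dumps(policy))
```

The resource list covers both the bucket ARN and `bucket/*`, so bucket-level calls (such as listing) and object-level calls are both subject to the rule.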

By implementing these measures, you can ensure that data is protected during transit, significantly reducing the risk of data breaches.

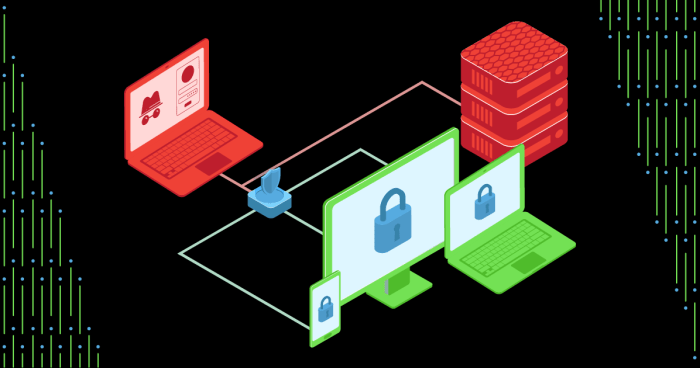

Using Private Endpoints or Virtual Private Clouds (VPCs) for Bucket Access

Private endpoints and Virtual Private Clouds (VPCs) provide an isolated network path for accessing cloud storage buckets. Traffic from resources in the VPC reaches the bucket without crossing the public internet, and, combined with a bucket policy that rejects all other traffic, this reduces the attack surface.

Here’s how to leverage private endpoints and VPCs:

- Create a VPC: Set up a VPC within your cloud provider’s environment. This creates an isolated network that only you control. Within the VPC, you can define subnets, security groups, and other network configurations.

- Configure Private Endpoints (e.g., VPC Endpoints): Most cloud providers offer private endpoints that let resources inside your VPC reach storage buckets without public IP addresses or an internet gateway. This traffic remains within the provider’s network.

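- Restrict Public Access: After setting up private endpoints, enable the provider's public access block and add a bucket policy that denies requests that do not arrive through your endpoint (in Amazon S3, a condition on the `aws:SourceVpce` key). This blocks access from the internet even when the caller holds valid credentials.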

- Use Security Groups: Employ security groups to control inbound and outbound traffic to and from the resources within your VPC. Define rules that allow only authorized traffic to access the storage bucket.

- Monitor Network Traffic: Regularly monitor network traffic within your VPC to detect any unusual activity or potential security threats. Use monitoring tools to identify any unauthorized access attempts or data transfer patterns.
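In Amazon S3, the endpoint-only restriction is commonly implemented with a bucket policy that denies every request not arriving through a specific VPC endpoint. The sketch below builds such a policy in Python; the endpoint ID `vpce-0123456789abcdef0` and the bucket name are hypothetical placeholders.

```python
import json


def vpc_endpoint_only_policy(bucket: str, vpce_id: str) -> dict:
    """Deny all S3 actions on the bucket unless they come through vpce_id (sketch)."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "DenyAccessOutsideVpcEndpoint",
                "Effect": "Deny",
                "Principal": "*",
                "Action": "s3:*",
                "Resource": [f"arn:aws:s3:::{bucket}", f"arn:aws:s3:::{bucket}/*"],
                # aws:SourceVpce holds the ID of the endpoint a request came through.
                # Internet requests carry no such value, so StringNotEquals matches
                # them and the Deny applies.
                "Condition": {"StringNotEquals": {"aws:SourceVpce": vpce_id}},
            }
        ],
    }


policy = vpc_endpoint_only_policy("my-secure-bucket", "vpce-0123456789abcdef0")
print(json.dumps(policy, indent=2))
```

Because the Deny applies to every principal, it also blocks console and administrator access from outside the VPC. Test it on a non-production bucket first, and keep a way to manage the policy from inside the VPC.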

Using private endpoints and VPCs significantly enhances security by isolating your storage buckets within a private network, reducing the risk of external threats.

Providing Examples of Network Access Control Lists (ACLs) to Restrict Access

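Network Access Control Lists (ACLs) act as firewalls at the subnet level, allowing or denying traffic based on predefined rules (in AWS, network ACLs are stateless and evaluated in rule-number order). They add a network-layer check on which IP addresses and CIDR blocks can exchange traffic with the subnets your workloads use to reach the bucket. Because network ACLs filter subnet traffic rather than requests to the bucket itself, restricting which source addresses a bucket accepts is usually done in the bucket policy instead (in Amazon S3, with the `aws:SourceIp` condition key).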

Here are examples of how to use ACLs:

- Allowing Access from Specific IP Addresses: Create an ACL that allows access to the bucket only from a specific IP address (e.g., `192.168.1.100`). This restricts access to a single authorized machine.

- Allowing Access from a CIDR Block: Define an ACL that allows access from a specific CIDR block (e.g., `192.168.1.0/24`). This permits access from a range of IP addresses within a specific subnet.

- Denying Access from Unauthorized IP Addresses: Create an ACL that denies access from specific IP addresses or CIDR blocks that are known to be malicious or unauthorized. This proactively blocks potentially harmful traffic.

- Using ACLs in Combination with Security Groups: Combine ACLs with security groups to create a layered security approach. ACLs can control network-level access, while security groups can control access at the instance level.

- Implementing Least Privilege: Apply the principle of least privilege when configuring ACLs. Only grant the minimum necessary access to each IP address or CIDR block.
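To show why rule order matters in these examples, the Python sketch below models how AWS network ACLs decide: rules are checked in ascending rule-number order, the first rule whose CIDR block contains the source address decides, and traffic that matches no rule is denied. It is a toy model with hypothetical rule numbers and addresses; real network ACLs also match protocol and port ranges and, being stateless, need separate rules for return traffic.

```python
import ipaddress
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class AclRule:
    number: int  # lower numbers are evaluated first
    cidr: str    # a single host is written as /32
    allow: bool


def is_allowed(rules: List[AclRule], source_ip: str) -> bool:
    address = ipaddress.ip_address(source_ip)
    for rule in sorted(rules, key=lambda r: r.number):
        if address in ipaddress.ip_network(rule.cidr):
            return rule.allow  # first matching rule wins
    return False  # implicit deny when no rule matches


rules = [
    AclRule(90, "192.168.1.66/32", allow=False),  # hypothetical known-bad host in the subnet
    AclRule(100, "192.168.1.0/24", allow=True),   # the authorized subnet
]

for ip in ("192.168.1.100", "192.168.1.66", "10.0.0.5"):
    print(ip, is_allowed(rules, ip))
```

Numbering the deny rule below the allow rule is what makes it effective: if the `/24` allow rule had the lower number, it would match `192.168.1.66` first and the deny would never be reached.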

By implementing these ACL examples, you can control and restrict network access to your cloud storage buckets, significantly enhancing security.

Automating Security with Infrastructure as Code (IaC)

Infrastructure as Code (IaC) represents a pivotal shift in cloud security, enabling the automation of infrastructure provisioning and configuration. This approach allows security best practices to be embedded directly into the code that defines your cloud resources, ensuring consistent and repeatable security configurations across your storage buckets. By using IaC, you can significantly reduce the risk of human error and misconfiguration, leading to a more robust and secure cloud environment.

Creating IaC Templates for Secure Bucket Configurations

IaC templates, such as those created with Terraform or CloudFormation, allow you to define the desired state of your cloud storage buckets programmatically. This means you can specify access controls, encryption settings, and other security parameters within code, which can then be automatically applied to your cloud resources.

Here’s how IaC templates help secure your buckets:

- Defining Access Control: IaC templates enable precise control over who can access your buckets and what actions they can perform. You can define IAM policies and bucket policies directly within your code, ensuring that only authorized users and services have access. For example, a Terraform configuration might define an IAM policy that grants read-only access to a specific group of users for a particular bucket.

- Enforcing Encryption: Encryption is a critical aspect of data security. IaC templates can be used to ensure that all data stored in your buckets is encrypted at rest. You can specify the encryption algorithm and, where applicable, the key management service (KMS) key within your template, guaranteeing that encryption is enabled from the outset.

- Configuring Versioning: Versioning allows you to track changes to your objects and recover previous versions if needed. IaC templates can be used to enable versioning for your buckets, providing an additional layer of data protection.

- Setting Up Lifecycle Policies: Lifecycle policies manage the lifecycle of your objects, such as automatically moving older objects to cheaper storage tiers or deleting them after a specified period. IaC templates allow you to define these policies, optimizing storage costs and managing data retention.

Example: Terraform Configuration for an S3 Bucket with Encryption and Versioning:

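```hcl
resource "aws_s3_bucket" "example" {
  bucket = "my-secure-bucket"
}

# Block every form of public access to the bucket and its objects.
resource "aws_s3_bucket_public_access_block" "example" {
  bucket                  = aws_s3_bucket.example.id
  block_public_acls       = true
  block_public_policy     = true
  ignore_public_acls      = true
  restrict_public_buckets = true
}

# Encrypt objects at rest with S3-managed keys (AES256).
resource "aws_s3_bucket_server_side_encryption_configuration" "example" {
  bucket = aws_s3_bucket.example.id
  rule {
    apply_server_side_encryption_by_default {
      sse_algorithm = "AES256"
    }
  }
}

# Keep every version of every object.
resource "aws_s3_bucket_versioning" "example" {
  bucket = aws_s3_bucket.example.id
  versioning_configuration {
    status = "Enabled"
  }
}
```

In this example, the Terraform code creates an S3 bucket, blocks all public access, enables server-side encryption using AES256, and enables versioning. It assumes version 4 or later of the Terraform AWS provider, in which the older inline `acl`, `server_side_encryption_configuration`, and `versioning` arguments of `aws_s3_bucket` are deprecated in favor of the separate resources shown.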

Demonstrating Automated Security Checks and Validation

Automated security checks and validation are crucial for ensuring that your IaC templates adhere to your organization’s security policies and best practices. This can be achieved using tools like linters, static analysis tools, and policy-as-code frameworks.

Here’s how automated checks and validation work:

- Linters and Static Analysis: Linters analyze your IaC code for syntax errors, style issues, and potential security vulnerabilities. For example, a linter might flag a misconfigured IAM policy that grants excessive permissions.

- Policy-as-Code Frameworks: Policy-as-code frameworks allow you to define security policies as code and then validate your IaC templates against those policies. Tools like Open Policy Agent (OPA) can be used to enforce policies such as “all buckets must have encryption enabled” or “public access must be blocked.”

- Automated Testing: Automated testing can be incorporated into your IaC pipeline to verify that your infrastructure is deployed correctly and functions as expected. This can include tests to validate access controls, encryption settings, and other security configurations.
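The kind of rule a policy-as-code framework enforces can be sketched in a few lines. The example below is written in Python rather than OPA's Rego language, and it assumes each bucket's settings have already been extracted into a dictionary (for example, from the JSON that `terraform show -json` produces). That extraction step and the dictionary keys used here are hypothetical.

```python
from typing import Dict, List

PUBLIC_ACCESS_FLAGS = (
    "block_public_acls",
    "block_public_policy",
    "ignore_public_acls",
    "restrict_public_buckets",
)


def bucket_violations(name: str, settings: Dict) -> List[str]:
    """Return policy violations for one bucket; an empty list means it passes."""
    violations = []
    if not settings.get("sse_algorithm"):
        violations.append(f"{name}: encryption at rest is not configured")
    if settings.get("versioning") != "Enabled":
        violations.append(f"{name}: versioning is not enabled")
    block = settings.get("public_access_block", {})
    missing = [flag for flag in PUBLIC_ACCESS_FLAGS if not block.get(flag)]
    if missing:
        violations.append(f"{name}: public access not fully blocked ({', '.join(missing)})")
    return violations


buckets = {
    "my-secure-bucket": {
        "sse_algorithm": "AES256",
        "versioning": "Enabled",
        "public_access_block": {flag: True for flag in PUBLIC_ACCESS_FLAGS},
    },
    "legacy-uploads": {"versioning": "Suspended"},  # hypothetical misconfigured bucket
}

for bucket_name, bucket_settings in buckets.items():
    for violation in bucket_violations(bucket_name, bucket_settings):
        print(violation)
```

In a pipeline, any non-empty result would fail the build, much as an OPA policy returns deny messages that block a deployment.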

Example: Using tfsec (a Terraform security scanner) to check for security vulnerabilities:

```shell
tfsec .
```

This command will scan your Terraform code for security issues and report any findings.

Designing a CI/CD Pipeline to Enforce Security Best Practices During Deployment

A Continuous Integration and Continuous Deployment (CI/CD) pipeline automates the process of building, testing, and deploying your IaC templates. Integrating security checks into your CI/CD pipeline ensures that security best practices are enforced throughout the deployment process.

Here’s how to design a secure CI/CD pipeline:

- Code Repository: Store your IaC templates in a version control system like Git. This allows you to track changes, collaborate with your team, and roll back to previous versions if needed.

- Automated Build and Test: Configure your CI/CD pipeline to automatically build and test your IaC templates whenever changes are pushed to the code repository. This should include running linters, static analysis tools, and policy-as-code checks.

- Security Scanning: Integrate security scanning tools into your pipeline to identify and address vulnerabilities before deployment. This can include vulnerability scanning of container images (if applicable) and checks for misconfigurations.

- Deployment: Once the build and tests pass, the pipeline should automatically deploy your IaC templates to your cloud environment. This can involve using tools like Terraform, CloudFormation, or Ansible.

- Monitoring and Alerting: Implement monitoring and alerting to track the health and security of your deployed infrastructure. This can include monitoring for unauthorized access attempts, suspicious activity, and configuration changes.

Example: A simplified CI/CD pipeline using Jenkins:

- Developer commits changes to the IaC code in Git.

- Jenkins detects the change and triggers a build.

- Jenkins runs static analysis (e.g., tfsec) and policy checks (e.g., OPA), and fails the build if either reports a violation.

- If the checks pass, Jenkins deploys the infrastructure using Terraform.

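- Jenkins runs scheduled drift checks (for example, `terraform plan -detailed-exitcode`, which exits with code 2 when the live infrastructure differs from the code) and sends alerts if any drift or security issue is detected.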
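The step that turns a scanner report into a pass/fail result can be sketched as a small gate function in Python. It assumes tfsec was run with `--format json` and that the report has a top-level `results` list whose entries carry `severity`, `rule_id`, and `description` fields; check these names against the tfsec version you use. The `HIGH` threshold and the sample report are illustrative.

```python
import json
from typing import List

SEVERITY_RANK = {"LOW": 1, "MEDIUM": 2, "HIGH": 3, "CRITICAL": 4}


def blocking_findings(report_json: str, threshold: str = "HIGH") -> List[dict]:
    """Return findings at or above the threshold severity."""
    report = json.loads(report_json)
    results = report.get("results") or []  # treat a missing or null list as "no findings"
    limit = SEVERITY_RANK[threshold]
    return [
        finding for finding in results
        if SEVERITY_RANK.get(str(finding.get("severity", "")).upper(), 0) >= limit
    ]


def gate(report_json: str, threshold: str = "HIGH") -> int:
    """Print blocking findings and return a process exit code (0 = pass)."""
    findings = blocking_findings(report_json, threshold)
    for finding in findings:
        print(f"{finding.get('severity')}: {finding.get('rule_id')} {finding.get('description')}")
    return 1 if findings else 0


# Hypothetical sample report, for illustration only.
sample = json.dumps({"results": [
    {"rule_id": "example-rule-1", "severity": "HIGH", "description": "bucket has no encryption"},
    {"rule_id": "example-rule-2", "severity": "LOW", "description": "bucket has no logging"},
]})
print("exit code:", gate(sample))
```

A Jenkins stage could save the report with `tfsec . --format json > tfsec.json` and exit with the value of `gate(open("tfsec.json").read())`. tfsec normally exits non-zero on its own when it reports problems, so a wrapper like this is mainly useful when you want a custom severity threshold.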

By integrating security into your CI/CD pipeline, you can automate the enforcement of security best practices, reduce the risk of misconfiguration, and ensure that your cloud storage buckets are secure.

Compliance and Regulatory Considerations

Aligning cloud storage bucket security with industry compliance standards is crucial for organizations handling sensitive data. Failure to comply can result in significant financial penalties, legal repercussions, and reputational damage. Understanding and implementing the necessary security controls to meet these requirements is therefore paramount.

Aligning Cloud Storage Bucket Security with Industry Compliance Standards

Organizations must tailor their cloud storage bucket security measures to the specific requirements of the regulations and standards they are subject to. This involves identifying the relevant standards, understanding their security mandates, and implementing appropriate controls within their cloud storage environment. For instance, healthcare providers dealing with Protected Health Information (PHI) must adhere to HIPAA, while organizations processing the personal data of individuals in the EU must comply with GDPR.

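Any organization that stores, processes, or transmits payment card data, including many financial institutions and merchants, must meet PCI DSS, an industry standard set by the major card brands rather than a government regulation.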

Comparing Security Requirements of Different Compliance Frameworks

Different compliance frameworks impose varying security requirements. The degree of rigor and specific controls needed depend on the type of data being stored and the industry regulations governing that data.

- HIPAA (Health Insurance Portability and Accountability Act): Focuses on protecting the privacy and security of PHI. Key requirements include:

  - Data encryption both in transit and at rest (an “addressable” specification under the HIPAA Security Rule: you must implement it or document why an equivalent alternative is appropriate).

  - Access controls to limit who can view or modify PHI.

  - Audit trails to track access and modifications.

  - Regular security risk assessments.

- GDPR (General Data Protection Regulation): Governs the processing of personal data of individuals within the EU. Key requirements include:

  - Data minimization: collecting only the necessary data.

  - Data encryption and pseudonymization.

  - Data access and portability rights for individuals.

  - Notification of personal data breaches to the supervisory authority without undue delay and, where feasible, within 72 hours, which requires a tested incident response plan.

- PCI DSS (Payment Card Industry Data Security Standard): Applies to organizations that handle credit card information. Key requirements include:

  - Secure network configurations and firewalls.

  - Encryption of cardholder data.

  - Access controls to limit data access.

  - Regular vulnerability scanning and penetration testing.

The specific security controls and their implementation will vary based on the chosen cloud provider and the features offered.

Examples of Security Controls Needed to Meet Specific Regulatory Requirements

Meeting regulatory requirements often necessitates a combination of technical, administrative, and physical security controls. The following examples illustrate how these controls are applied in practice.

- HIPAA Compliance Example: A healthcare provider using cloud storage must implement encryption for all PHI stored in buckets. This might involve using server-side encryption provided by the cloud provider, with keys managed either by the provider or by the organization. Access controls must be strictly enforced, utilizing role-based access control (RBAC) to ensure that only authorized personnel can access the data.

Furthermore, the healthcare provider needs to sign a Business Associate Agreement (BAA) with the cloud provider and enable detailed audit logging to track every access attempt, modification, and deletion of PHI. Regular security audits and risk assessments are essential to identify and mitigate potential vulnerabilities.

- GDPR Compliance Example: An e-commerce company storing customer data must implement pseudonymization or anonymization techniques to limit the harm if data is exposed. Encryption should be used to protect data at rest and in transit. The company must provide individuals with the ability to access, rectify, and erase their personal data. This requires implementing data access controls, data retention policies, and incident response plans.

Data processing agreements with cloud providers are also crucial to ensure that the provider complies with GDPR requirements.

- PCI DSS Compliance Example: A payment processor storing cardholder data must segment its network to isolate the storage buckets containing this data. Strong firewalls and intrusion detection systems are essential to prevent unauthorized access. All cardholder data must be encrypted both at rest and in transit, using strong cryptographic algorithms. Access to the data must be tightly controlled, limiting access to only authorized personnel and logging all access attempts.

Regular vulnerability scans and penetration tests must be conducted to identify and address security weaknesses.

In all cases, continuous monitoring and regular reviews of security controls are crucial to maintain compliance and adapt to evolving threats and regulatory changes.

Outcome Summary

In conclusion, securing cloud storage buckets from misconfiguration is not merely a technical necessity; it’s a fundamental aspect of responsible data stewardship in the cloud era. By understanding the inherent risks, implementing best practices, and leveraging automation, you can significantly reduce your exposure to data breaches and ensure the long-term security of your data assets. Remember, a proactive and vigilant approach to cloud storage security is the key to safeguarding your information and maintaining the trust of your users.

Questions and Answers

What are the most common types of misconfigurations that lead to cloud storage bucket vulnerabilities?

Common misconfigurations include publicly accessible buckets, overly permissive access control lists (ACLs) or bucket policies, lack of encryption, and insufficient monitoring and logging.

How often should I review my cloud storage bucket configurations?

Regular reviews are crucial. Aim for at least quarterly reviews, but consider more frequent audits based on the sensitivity of the data stored and the rate of changes to your infrastructure. Automated security checks can also be run continuously.

What are the key differences between server-side and client-side encryption?

Server-side encryption encrypts data at rest on the cloud provider’s servers, while client-side encryption encrypts the data before it’s uploaded to the cloud. Client-side encryption offers greater control over the encryption keys but requires more management on your part.

How can I automate security audits for my cloud storage buckets?

Implement Infrastructure as Code (IaC) tools like Terraform or CloudFormation to define your bucket configurations and integrate automated security checks into your CI/CD pipeline. Tools like Cloud Custodian can also automate compliance checks.

What are the essential steps for creating a disaster recovery plan for cloud storage buckets?

Your plan should include enabling bucket versioning, regularly backing up your data to a separate location (potentially a different region or provider), and defining a clear process for restoring data in the event of a disaster. Regularly test your recovery plan.