In the intricate landscape of modern software development, microservices architecture has emerged as a dominant paradigm. This approach, while offering numerous benefits like scalability and flexibility, introduces the critical challenge of inter-service communication. Effectively managing how these independent services interact is paramount to the overall health and performance of the entire system. This guide delves into the core principles, best practices, and essential tools required to navigate the complexities of inter-service communication successfully.

This exploration will cover a wide array of topics, from choosing the right communication protocols and understanding synchronous versus asynchronous patterns, to implementing robust error handling and ensuring secure communication. We will also examine the crucial roles of service discovery, API gateways, monitoring, and logging in maintaining a resilient and observable microservices environment. The goal is to equip you with the knowledge and insights needed to design and implement a robust inter-service communication strategy that fosters efficiency, scalability, and maintainability.

Introduction to Inter-Service Communication

In a microservices architecture, applications are built as a collection of small, independent services that communicate with each other. This communication, known as inter-service communication (ISC), is a critical aspect of microservices design, enabling the different parts of an application to work together and fulfill user requests. The efficiency, reliability, and scalability of an application heavily depend on how these services interact.

Core Concept of Inter-Service Communication

Inter-service communication facilitates the exchange of data and coordination between independent services. Each service typically handles a specific business function or capability, and they must collaborate to complete complex tasks. For example, an e-commerce application might have separate services for product catalog, user accounts, shopping cart, and order processing. When a user places an order, these services need to communicate to update inventory, deduct funds, and confirm the order.

The chosen communication method impacts performance, fault tolerance, and the overall complexity of the system.

Challenges Associated with Inter-Service Communication

Implementing inter-service communication introduces several challenges. These challenges must be carefully addressed to ensure a robust and maintainable microservices architecture.

- Network Latency: Communication across a network inherently introduces latency. Each service call adds to the overall response time, potentially degrading user experience.

- Service Discovery: Services are often deployed dynamically, with changing IP addresses and port numbers. Services need a mechanism to locate and connect to each other.

- Fault Tolerance: Individual services can fail independently. The communication strategy must handle failures gracefully, preventing cascading failures and ensuring system availability. Techniques like circuit breakers and retries are crucial.

- Data Consistency: Maintaining data consistency across multiple services is complex. Distributed transactions and eventual consistency models need to be considered to handle data updates.

- Security: Secure communication is essential. Services must authenticate and authorize requests to prevent unauthorized access and data breaches.

- Monitoring and Tracing: Monitoring the interactions between services and tracing requests across the system are essential for debugging and performance analysis.
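The fault-tolerance techniques named above (retries and circuit breakers) can be sketched in a few lines. This is a minimal illustration, not a production implementation: the class and function names (`CircuitBreaker`, `CircuitOpenError`, `retry`) and the thresholds are assumptions chosen for the example.

```python
import time


class CircuitOpenError(Exception):
    """Raised when the breaker is open and the call is rejected without being attempted."""


class CircuitBreaker:
    """Minimal breaker: opens after `max_failures` consecutive failures."""

    def __init__(self, max_failures=3, reset_after=30.0, clock=time.monotonic):
        self.max_failures = max_failures
        self.reset_after = reset_after  # seconds to wait before allowing a trial call
        self.clock = clock
        self.failures = 0
        self.opened_at = None

    def call(self, func, *args, **kwargs):
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_after:
                raise CircuitOpenError("circuit open; call rejected")
            # Cool-down elapsed: allow one trial call (half-open state).
            self.opened_at = None
            self.failures = self.max_failures - 1
        try:
            result = func(*args, **kwargs)
        except Exception:
            self.failures += 1
            if self.failures >= self.max_failures:
                self.opened_at = self.clock()
            raise
        self.failures = 0  # a success closes the circuit again
        return result


def retry(func, attempts=3, base_delay=0.1, sleep=time.sleep):
    """Call `func`, retrying with exponential backoff; re-raise the last error."""
    for attempt in range(attempts):
        try:
            return func()
        except CircuitOpenError:
            raise  # do not keep hammering an open circuit
        except Exception:
            if attempt == attempts - 1:
                raise
            sleep(base_delay * (2 ** attempt))
```

A caller would typically combine the two, for example `retry(lambda: breaker.call(fetch_inventory))`, where `fetch_inventory` is a hypothetical function that performs the remote call. Retries absorb brief glitches, while the breaker stops traffic to a service that keeps failing.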

Benefits of Adopting a Well-Defined Inter-Service Communication Strategy

A well-defined inter-service communication strategy provides significant advantages for microservices architectures. It’s crucial to plan the communication methods and patterns to realize these benefits.

- Improved Scalability: Individual services can be scaled independently based on their specific needs. This allows for efficient resource allocation and better handling of traffic spikes.

- Increased Agility: Teams can develop and deploy services independently, leading to faster development cycles and easier adoption of new technologies.

- Enhanced Resilience: The isolation of services reduces the impact of failures. When one service fails, it ideally doesn’t bring down the entire application.

- Better Maintainability: Small, focused services are easier to understand, maintain, and update. This simplifies troubleshooting and reduces the risk of introducing bugs.

- Technology Diversity: Different services can be built using different technologies and programming languages, allowing for the selection of the best tools for the job.

Choosing Communication Protocols

Choosing the right communication protocol is crucial for the success of inter-service communication. The selected protocol directly impacts performance, scalability, and maintainability. Careful consideration of the specific requirements of the services involved, including data volume, latency sensitivity, and complexity tolerance, is essential. This section delves into the advantages and disadvantages of various protocols, guiding the selection process.

HTTP/REST for Inter-Service Communication

HTTP/REST (Representational State Transfer) is a widely adopted architectural style for building web services, making it a popular choice for inter-service communication. Its simplicity and ease of use contribute to its widespread adoption, but it’s essential to understand its limitations in a microservices context.

- Pros of HTTP/REST:

  - Simplicity and Familiarity: HTTP/REST is based on well-understood standards, and most developers are already familiar with it. This reduces the learning curve and accelerates development.

  - Ease of Implementation: Numerous libraries and frameworks are available for building RESTful APIs in various programming languages, simplifying the implementation process.

  - Wide Compatibility: HTTP/REST is universally supported by various platforms and devices, making it highly compatible across different service environments.

  - Human-Readable Data Formats: REST typically uses JSON (JavaScript Object Notation) or XML (Extensible Markup Language) for data exchange, which are human-readable and easy to debug.

  - Caching Capabilities: HTTP supports caching mechanisms, which can improve performance by reducing the load on the server and decreasing latency.

- Cons of HTTP/REST:

  - Performance Overhead: HTTP/REST, especially when using JSON, can introduce overhead due to the verbose nature of the data format.

  - Latency: The text-based nature of HTTP/REST can lead to higher latency compared to binary protocols, especially for high-volume data transfer.

  - Tight Coupling: REST APIs often lead to tight coupling between services, as changes in one service’s API can impact other services that depend on it.

  - Limited Support for Streaming: While HTTP/2 offers improved streaming capabilities, REST’s support for true bidirectional streaming is limited compared to protocols designed for this purpose.

  - Versioning Challenges: Managing API versions in REST can be complex, requiring careful planning and implementation to avoid breaking changes.

Comparing gRPC with HTTP/REST

gRPC (gRPC Remote Procedure Calls) is a high-performance, open-source RPC framework developed by Google. It utilizes Protocol Buffers (Protobuf) for data serialization, offering significant performance advantages over REST, particularly in scenarios requiring high throughput and low latency.

- Performance: gRPC generally outperforms REST in terms of latency and throughput.

  - Data Serialization: gRPC uses Protobuf, a binary serialization format, which is more compact and efficient than JSON or XML used by REST. This results in smaller payloads and faster serialization/deserialization times.

  - HTTP/2: gRPC leverages HTTP/2, which supports multiplexing, allowing multiple requests and responses to be sent over a single connection. This reduces connection overhead and improves efficiency.

- Data Serialization:

  - REST (JSON): REST typically uses JSON for data serialization. JSON is human-readable but can be verbose, leading to larger payloads and slower processing times.

  - gRPC (Protobuf): gRPC uses Protobuf, a binary format that is significantly more compact and efficient. Protobuf is designed for fast serialization and deserialization, making it ideal for high-performance applications.

- Communication Style:

  - REST: REST typically uses a request-response model, where a client sends a request and receives a response.

  - gRPC: gRPC supports various communication patterns, including unary RPC (request-response), server-streaming RPC, client-streaming RPC, and bidirectional-streaming RPC, offering more flexibility.

- Code Generation: gRPC provides code generation tools that automatically generate client and server stubs in various programming languages based on the Protobuf definition. This simplifies development and reduces boilerplate code. REST often requires manual coding for API interactions.

- Complexity: gRPC has a steeper learning curve than REST due to the use of Protobuf and the RPC paradigm. REST is generally easier to understand and implement.

Message Queues as a Preferred Choice

Message queues, such as Kafka and RabbitMQ, excel in asynchronous communication, decoupling services and enhancing resilience. They are particularly well-suited for scenarios where immediate responses are not critical and where scalability and fault tolerance are paramount.

- Use Cases for Message Queues:

  - Asynchronous Communication: When a service needs to perform a task without waiting for an immediate response, such as sending notifications or processing background jobs.

  - Decoupling Services: When services should not be directly dependent on each other, allowing for independent scaling and updates.

  - Event-Driven Architectures: When services need to react to events generated by other services, enabling a more flexible and responsive system.

  - High Throughput and Scalability: When handling a large volume of messages, message queues can distribute the workload across multiple consumers.

  - Fault Tolerance: When services need to be resilient to failures, message queues can store messages and ensure they are delivered even if a service is temporarily unavailable.

- Examples:

  - E-commerce Order Processing: A service that receives an order can publish a message to a queue. Separate services can then consume this message to handle tasks such as inventory updates, payment processing, and shipping notifications.

  - Social Media Feeds: When a user posts a message, a service can publish an event to a queue. Other services can consume this event to update the user’s feed, notify followers, and perform other related actions.

  - Log Aggregation: Services can publish log messages to a queue. A log aggregation service can consume these messages to collect, process, and store logs.
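The order-processing example above can be sketched with Python’s standard-library `queue.Queue` standing in for a real broker such as Kafka or RabbitMQ. This is only an in-process illustration of the producer/consumer decoupling; the function names (`place_order`, `inventory_worker`, `run_demo`) and the message shape are assumptions, and a real broker adds persistence, acknowledgements, and delivery across processes.

```python
import queue
import threading


def place_order(order_queue, order_id):
    """Producer: enqueue the order and return without waiting for processing."""
    order_queue.put({"order_id": order_id})
    return "accepted"


def inventory_worker(order_queue, processed):
    """Consumer: handle messages until a None sentinel signals shutdown."""
    while True:
        message = order_queue.get()
        if message is None:
            break
        # A real consumer would update stock levels here.
        processed.append(message["order_id"])


def run_demo(order_ids):
    """Start one consumer, publish the orders, and return what was processed."""
    order_queue = queue.Queue()
    processed = []
    worker = threading.Thread(target=inventory_worker, args=(order_queue, processed))
    worker.start()
    for order_id in order_ids:
        place_order(order_queue, order_id)
    order_queue.put(None)  # sentinel: no more messages
    worker.join()
    return processed


if __name__ == "__main__":
    print(run_demo([101, 102, 103]))
```

The producer never calls the consumer directly. It only knows about the queue, which is what lets either side be scaled, restarted, or replaced independently.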

Protocol Comparison Chart

The following table provides a comparative analysis of the discussed protocols, focusing on key performance indicators. The values are approximate and can vary based on implementation, network conditions, and hardware.

| Protocol | Latency (ms) | Bandwidth Usage | Complexity |
|---|---|---|---|
| HTTP/REST | 50-200+ | High (due to JSON/XML) | Low |
| gRPC | 1-50 | Low (Protobuf) | Medium |
| Message Queues (Kafka/RabbitMQ) | Variable (depends on queue and configuration) | Medium (depends on message size) | Medium-High |

Synchronous vs. Asynchronous Communication

Understanding the differences between synchronous and asynchronous communication patterns is crucial for designing resilient and efficient inter-service communication. The choice between these two approaches significantly impacts system performance, fault tolerance, and the overall user experience. This section explores these contrasting paradigms, highlighting their respective strengths and weaknesses.

Synchronous Communication Explained

Synchronous communication involves a direct interaction where the calling service (client) waits for a response from the called service (server) before continuing its operation. This is a blocking operation; the client is effectively “frozen” until the server replies. This pattern is akin to a phone call: the caller waits on the line until the recipient answers and the conversation is complete.

The primary advantage of synchronous communication lies in its simplicity and ease of implementation. It’s a straightforward approach, particularly well-suited for scenarios where the calling service needs immediate access to the result of the operation. However, it introduces potential bottlenecks and single points of failure. If the server is unavailable or slow, the client service will be blocked, potentially impacting the performance and availability of the entire system.

Here’s a breakdown of the advantages and disadvantages:

- Advantages:

  - Simplicity: Easy to implement and understand, especially for simple request-response interactions.

  - Real-time Results: Provides immediate results, which is essential for certain types of operations.

  - Transaction Management: Simplifies transaction management as the client waits for the transaction to complete.

- Disadvantages:

  - Blocking Operations: Clients are blocked, waiting for responses, which can lead to performance degradation and reduced throughput if the server is slow or unavailable.

  - Increased Latency: The overall response time is directly affected by the server’s processing time and network latency.

  - Single Point of Failure: The calling service is directly dependent on the availability of the called service; a failure in the called service can bring down the calling service.

Here are some examples of synchronous interactions:

- User Authentication: When a user logs in, the authentication service is called synchronously to verify the credentials before granting access.

- Database Queries: Retrieving data from a database is often performed synchronously, as the calling service needs the data immediately.

- Payment Processing: Processing a credit card payment usually involves synchronous communication with a payment gateway to authorize and capture funds in real-time.

Asynchronous Communication Explained

Asynchronous communication, on the other hand, allows the calling service to send a request to the called service and immediately continue its operations without waiting for a response. The called service processes the request and, at a later time, may send a response or notify the calling service of the outcome. This is a non-blocking operation; the client doesn’t need to wait.

This is analogous to sending an email: the sender doesn’t wait for the recipient to read and respond before continuing with other tasks.

Asynchronous communication excels in scenarios where immediate results aren’t required and where fault tolerance and scalability are critical. It enables services to operate independently and handle failures gracefully. A failure in one service doesn’t necessarily bring down other services, as requests can be queued and retried. However, it introduces complexity in managing message delivery and in handling eventual consistency, where services may temporarily hold inconsistent views of the same data.

Here are some examples of use cases where asynchronous communication is essential:

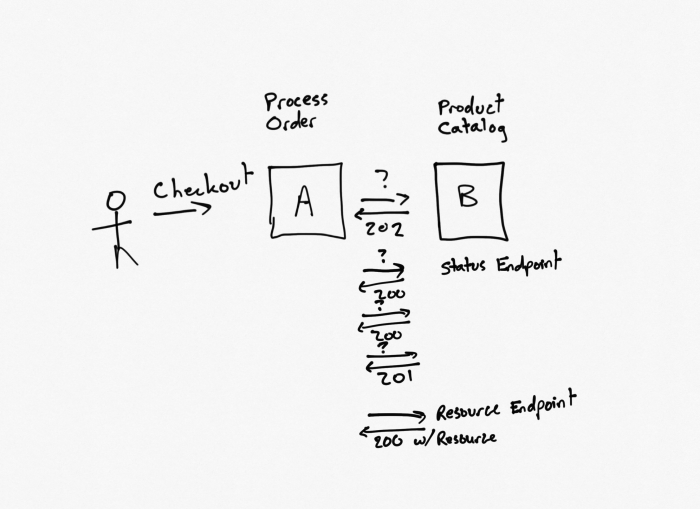

- Order Processing: When a customer places an order, the order service can asynchronously notify other services, such as the inventory service, shipping service, and payment service, without waiting for their immediate responses. This allows the order service to quickly confirm the order to the customer.

- Background Tasks: Long-running tasks, such as image processing, video encoding, or generating reports, are often handled asynchronously to avoid blocking the main application thread.

- Event-Driven Architectures: In event-driven architectures, services publish events (e.g., “user created,” “order shipped”) that other services can subscribe to and react to asynchronously.

- Non-Critical Inter-Service Calls: Calls between microservices whose results are not needed to complete the current request, so the caller can continue without waiting for them.

Here are some examples of asynchronous interactions:

- Message Queues: Using message queues (e.g., Kafka, RabbitMQ) to decouple services and handle message delivery.

- Event-Driven Systems: Services publish and subscribe to events to communicate asynchronously.

- Background Job Processing: Services offload tasks to background job processors (e.g., Celery, Sidekiq) to handle them asynchronously.
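The non-blocking behaviour described in this section can be sketched with `asyncio`. In this illustration the order service schedules notifications to three downstream services and confirms the order before any of them has finished. The service names, the `notify` and `place_order` functions, and the simulated delay are assumptions made for the example; a real system would usually hand these notifications to a broker rather than to in-process tasks.

```python
import asyncio


async def notify(service, order_id, log, delay=0.01):
    """Simulate a downstream service handling the notification."""
    await asyncio.sleep(delay)  # stands in for network and processing time
    log.append(f"{service} handled order {order_id}")


async def place_order(order_id, log):
    """Schedule notifications without awaiting them, then confirm immediately."""
    tasks = [
        asyncio.create_task(notify(service, order_id, log))
        for service in ("inventory", "shipping", "payment")
    ]
    log.append(f"order {order_id} confirmed")
    return tasks


async def main():
    log = []
    tasks = await place_order(42, log)
    # Demo only: wait for the background work so the program does not exit early.
    await asyncio.gather(*tasks)
    return log


if __name__ == "__main__":
    for line in asyncio.run(main()):
        print(line)
```

The confirmation is logged first because `place_order` never awaits the notification tasks; they run only once control returns to the event loop.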

Service Discovery and Registration

In a microservices architecture, services are often deployed dynamically, with instances scaling up or down based on demand. This dynamism necessitates a mechanism for services to locate and communicate with each other without hardcoded addresses. Service discovery and registration provide this essential functionality, enabling a resilient and scalable microservices environment.

Importance of Service Discovery in a Dynamic Microservices Environment

Service discovery is paramount in microservices for several reasons. Without it, managing the interactions between services becomes overly complex and brittle.

- Dynamic Nature of Microservices: Microservices environments are inherently dynamic. Service instances can be created, scaled, updated, and terminated frequently. Service discovery ensures that services can always find the current available instances, regardless of these changes.

- Scalability and Resilience: Service discovery facilitates horizontal scaling. When a service needs to handle increased load, new instances can be launched and automatically registered with the service discovery mechanism. If an instance fails, the service discovery mechanism detects this and routes traffic to healthy instances, enhancing resilience.

- Loose Coupling: Service discovery promotes loose coupling between services. Services don’t need to know the specific location of other services; they only need to know the service name. The service discovery mechanism handles the actual address resolution.

- Simplified Configuration: By centralizing service location information, service discovery simplifies configuration management. Instead of updating configuration files across multiple services whenever a service’s address changes, only the service discovery registry needs to be updated.

Common Service Discovery Mechanisms

Several tools and techniques are available for service discovery. The choice of mechanism depends on factors such as the cloud provider, the complexity of the environment, and the desired features.

- Consul: Consul is a distributed service discovery and service mesh tool. It provides features such as service discovery, health checking, and key-value storage. Consul agents use a gossip protocol for cluster membership and failure detection, while the Consul servers use the Raft consensus protocol to keep the service catalog consistent across the cluster.

- etcd: etcd is a distributed key-value store that can be used for service discovery. It’s known for its reliability and consistency. Services register themselves with etcd, and other services can query etcd to find the addresses of registered services.

- Kubernetes DNS: Kubernetes, a container orchestration platform, offers built-in service discovery through its DNS service. When a service is created in Kubernetes, it’s assigned a stable DNS name that other pods within the cluster can use to access it. This DNS resolution is managed automatically by Kubernetes.

- ZooKeeper: Apache ZooKeeper, though originally designed for distributed coordination, can also be used for service discovery. It provides a hierarchical namespace where services can register themselves and other services can discover them.

Service Registration Demonstration Using a Service Discovery Tool (Consul Example)

This section demonstrates how service registration works using Consul as an example. The steps involve registering a service with Consul, checking its registration, and then discovering the service from another service.

- Service Registration: A service registers itself with Consul by sending a registration request to the Consul agent. This request typically includes the service name, the service’s IP address, and the port number.

- Health Checks: Consul allows defining health checks for registered services. These checks verify that the service is healthy and responsive. Consul can perform HTTP checks, TCP checks, or script-based checks.

- Service Discovery: Another service, wishing to communicate with the registered service, queries Consul for the service’s information. Consul returns the IP address and port of the healthy instances of the requested service.

Consider a simple Python service that registers itself with Consul:

```python
import socket

import consul  # python-consul client library

# Get the service's IP address
hostname = socket.gethostname()
ip_addr = socket.gethostbyname(hostname)

# Initialize Consul client (talks to the local Consul agent by default)
c = consul.Consul()

# Service details
service_name = "my-python-service"
service_port = 8000
service_id = "my-python-service-1"  # Unique ID for the service instance

# Register the service, including an HTTP health check endpoint
try:
    c.agent.service.register(
        name=service_name,
        service_id=service_id,
        address=ip_addr,
        port=service_port,
        check=consul.Check.http(
            f"http://{ip_addr}:{service_port}/health", interval="10s"
        ),
    )
    print(f"Service '{service_name}' registered successfully with Consul.")
except Exception as e:
    print(f"Error registering service: {e}")
```

This code snippet registers a service named “my-python-service” with Consul. It specifies the service’s IP address, port, and a unique service ID. It also includes a health check, which periodically checks a “/health” endpoint on the service to ensure it’s running correctly. A health check is crucial for removing unhealthy instances from service discovery results.

To discover this service from another service (e.g., another Python service), the following example can be used:

```python
import consul
import requests

# Initialize Consul client
c = consul.Consul()

# Service to discover
service_name = "my-python-service"

# Discover the service
try:
    # passing=True limits the result to instances whose health checks pass
    _, services = c.health.service(service_name, passing=True)
    if services:
        # Use the first healthy instance's address and port
        service_address = services[0]["Service"]["Address"]
        service_port = services[0]["Service"]["Port"]
        print(f"Found service '{service_name}' at {service_address}:{service_port}")

        # Make a request to the service
        try:
            response = requests.get(
                f"http://{service_address}:{service_port}/", timeout=5
            )
            print(f"Response from service: {response.text}")
        except requests.exceptions.RequestException as e:
            print(f"Error connecting to service: {e}")
    else:
        print(f"Service '{service_name}' not found.")
except Exception as e:
    print(f"Error discovering service: {e}")
```

This code queries Consul for the “my-python-service”.

If the service is found and healthy, the code retrieves the service’s address and port, and attempts to make a request to it.

Diagram Illustrating the Service Discovery Process

The typical service discovery process can be pictured as a simplified sequence diagram showing the interaction between three main components: “Service A”, “Service Discovery Mechanism (e.g., Consul)”, and “Service B”.

1. Service A Registration

An arrow points from “Service A” to “Service Discovery Mechanism” labeled “Register Service (Address, Port, Health Check)”. This signifies Service A sending its information (address, port, and health check details) to the service discovery mechanism.

2. Service B Discovery

An arrow points from “Service B” to “Service Discovery Mechanism” labeled “Query for Service A”. This indicates Service B requesting the address and port of Service A from the service discovery mechanism.

3. Service Discovery Response

An arrow points from “Service Discovery Mechanism” to “Service B” labeled “Return Service A Address and Port”. The service discovery mechanism responds to Service B with the address and port of Service A (obtained from the registration).

4. Communication

An arrow points from “Service B” to “Service A” labeled “Communicate with Service A”. Service B then uses the returned address and port to communicate with Service A.

A dashed line connects the “Service Discovery Mechanism” to a “Health Check” component. The diagram shows that the “Service Discovery Mechanism” periodically performs health checks on the registered services to ensure they are healthy.
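The register, query, and respond steps described above can be sketched as a small in-memory registry. This is an illustration under stated assumptions, not how Consul is implemented: the `ServiceRegistry` class and its methods are hypothetical, and it swaps the active health checks from the description for a simpler heartbeat with a time-to-live, so instances that stop sending heartbeats drop out of discovery results.

```python
import time


class ServiceRegistry:
    """In-memory registry: instances expire unless they heartbeat within `ttl` seconds."""

    def __init__(self, ttl=30.0, clock=time.monotonic):
        self.ttl = ttl
        self.clock = clock
        # service name -> {instance id: (address, port, last heartbeat time)}
        self._instances = {}

    def register(self, name, instance_id, address, port):
        """Step 1: a service instance announces its address and port."""
        self._instances.setdefault(name, {})[instance_id] = (address, port, self.clock())

    def heartbeat(self, name, instance_id):
        """Refresh an instance's last-seen time (stand-in for a passing health check)."""
        address, port, _ = self._instances[name][instance_id]
        self._instances[name][instance_id] = (address, port, self.clock())

    def discover(self, name):
        """Steps 2 and 3: return (address, port) for instances seen within the TTL."""
        now = self.clock()
        return [
            (address, port)
            for address, port, seen in self._instances.get(name, {}).values()
            if now - seen <= self.ttl
        ]


if __name__ == "__main__":
    registry = ServiceRegistry(ttl=30.0)
    registry.register("service-a", "a-1", "10.0.0.1", 8000)
    print(registry.discover("service-a"))
```

Service B would call `discover("service-a")`, pick one of the returned addresses, and then contact Service A directly (step 4).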

Data Serialization and Formats

Data serialization plays a crucial role in inter-service communication, defining how data is structured and transmitted between services. The choice of serialization format significantly impacts performance, bandwidth usage, and the ability to evolve services over time. Selecting the right format is critical for building robust and scalable distributed systems.

Common Data Serialization Formats

Several data serialization formats are widely used in inter-service communication. Each has its strengths and weaknesses, making it essential to choose the one that best fits the specific requirements of a system.

- JSON (JavaScript Object Notation): JSON is a human-readable, text-based format that is widely used due to its simplicity and ease of use. It’s a good choice for interoperability, as it’s supported by almost all programming languages and platforms. Its flexibility makes it easy to adapt to changing data structures. However, JSON can be verbose, leading to larger message sizes and potentially impacting performance.

For instance, consider a simple data object representing a user:

    {
      "userId": 123,
      "username": "johndoe",
      "email": "[email protected]"
    }

The above example is straightforward and easy to understand.

- Protocol Buffers (Protobuf): Developed by Google, Protocol Buffers is a binary serialization format designed for efficiency. It offers a compact representation of data, resulting in smaller message sizes and faster serialization/deserialization times. Protobuf requires a schema definition file (.proto) that describes the structure of the data, enabling strong typing and efficient data validation. This format is particularly well-suited for performance-critical applications where bandwidth and processing speed are paramount.

For example, the same user data might be represented in a .proto file like this:

    syntax = "proto3";

    package user;

    message User {
      int32 userId = 1;
      string username = 2;
      string email = 3;
    }

The generated Protobuf code is then used to serialize and deserialize the data efficiently.

- Avro: Avro is another binary serialization format that provides rich data structures and supports schema evolution. Avro data files store the schema alongside the data, making them self-describing and allowing readers to resolve differences between schema versions. Avro is often used in big data environments and with data processing frameworks. It offers both a compact binary format and a JSON representation for easier debugging.

Avro’s schema evolution capabilities are a significant advantage when dealing with evolving data models.

An Avro schema for the user data might look like this (in JSON format):

    {
      "type": "record",
      "name": "User",
      "fields": [
        {"name": "userId", "type": "int"},
        {"name": "username", "type": "string"},
        {"name": "email", "type": "string"}
      ]
    }

Performance Characteristics of Different Serialization Formats

The performance of a serialization format is typically measured in terms of serialization and deserialization speed, and the size of the serialized data. These factors directly impact the overall performance of inter-service communication.

- Serialization/Deserialization Speed: Binary formats like Protobuf and Avro generally offer faster serialization and deserialization speeds compared to text-based formats like JSON. This is because binary formats are designed for efficient processing by the underlying systems, while JSON requires parsing of text. The time required for these operations can become a bottleneck in high-volume communication scenarios.

- Serialized Data Size: Binary formats usually produce smaller serialized data sizes than JSON. This can significantly reduce bandwidth consumption, especially when transmitting large amounts of data. Smaller data sizes also lead to faster transmission times and reduced latency. The compactness of binary formats is a key factor in their performance advantage.

- CPU Usage: The CPU usage during serialization and deserialization varies depending on the format and the complexity of the data. Binary formats, optimized for speed, can often reduce CPU load. The parsing required by text-based formats can be more CPU-intensive.

- Examples and Real-Life Cases: In a microservices architecture, consider a service that frequently communicates with other services to update user profiles. Using Protobuf can result in faster response times and lower bandwidth usage compared to JSON. In contrast, if services are primarily communicating with web browsers, the simplicity of JSON may be preferred despite its performance limitations. For example, in a large-scale e-commerce platform, reducing the size of product data sent between services (e.g., product catalogs, inventory) can lead to significant performance improvements during peak traffic.
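The size difference between text and binary encodings can be illustrated with the standard library alone. The sketch below uses a hand-rolled `struct` layout as a stand-in for a binary format; it is not Protobuf or Avro, and real formats add field tags or schemas on top. The record, the placeholder email address, and the function names are assumptions for the example, and the layout assumes each string is shorter than 256 bytes.

```python
import json
import struct

# Hypothetical record; the email address is a placeholder for illustration.
user = {"userId": 123, "username": "johndoe", "email": "johndoe@example.com"}


def encode_json(record):
    """Text encoding: field names travel with every message."""
    return json.dumps(record, separators=(",", ":")).encode("utf-8")


def encode_binary(record):
    """Binary encoding: int32 id, then each string as a 1-byte length plus its bytes."""
    name = record["username"].encode("utf-8")
    email = record["email"].encode("utf-8")
    return struct.pack(
        f"<iB{len(name)}sB{len(email)}s",
        record["userId"], len(name), name, len(email), email,
    )


def decode_binary(data):
    """Inverse of encode_binary; field order is fixed by the shared layout."""
    user_id, name_len = struct.unpack_from("<iB", data, 0)
    offset = 5  # 4 bytes for the id plus 1 byte for the name length
    username = data[offset:offset + name_len].decode("utf-8")
    offset += name_len
    email_len = data[offset]
    offset += 1
    email = data[offset:offset + email_len].decode("utf-8")
    return {"userId": user_id, "username": username, "email": email}


if __name__ == "__main__":
    print("JSON bytes:  ", len(encode_json(user)))
    print("binary bytes:", len(encode_binary(user)))
```

The binary form is smaller mainly because field names are not repeated in every message; both sides must instead agree on the layout in advance, which is the role a `.proto` file or Avro schema plays.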

Handling Versioning of Data Formats to Ensure Backward Compatibility

As services evolve, data formats must change to accommodate new features or improve existing ones. Maintaining backward compatibility is crucial to avoid breaking existing services that rely on the older versions of the data format. Proper versioning strategies enable seamless updates and prevent downtime.

- Versioning Strategies: Several strategies can be employed for versioning data formats:

  - Schema Evolution: For formats like Protobuf and Avro, schema evolution allows adding new fields or modifying existing ones while maintaining compatibility. Older services can still read data serialized with the newer schema, ignoring fields they don’t recognize (this direction is often called forward compatibility).

  - Version Numbers: Including a version number in the data format itself allows services to identify the data’s version. This enables services to handle different versions appropriately.

  - Backward Compatibility: Design data formats to be backward compatible from the start. This means that newer versions should be able to read data serialized by older versions. This is often achieved by adding new fields with default values or making existing fields optional.

- Impact of Versioning on Communication: Versioning affects how services communicate and process data. Services must be able to handle different versions of the data format, potentially using different code paths to process older and newer data. This can increase the complexity of the service, but it is essential for ensuring interoperability and avoiding service outages.

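- Versioning in Protobuf and Avro: Protobuf and Avro provide built-in mechanisms for schema evolution. In Protobuf, new fields can be added under previously unused field numbers; readers built against the older schema skip them, and readers built against the newer schema see default values when a field is absent. In Avro, schemas can be evolved by adding fields with default values, promoting certain types (for example, int to long), or renaming fields through aliases, while maintaining compatibility. These features make these formats well-suited for evolving systems.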

- Real-World Examples: Consider a service that initially provides only user names. As the system evolves, the service might need to include user email addresses. With proper versioning (e.g., adding a new field for the email address), existing services that do not require the email address can continue to function without modification. Similarly, a social media platform can add new features (e.g., profile pictures) without breaking older clients.
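The user-name and email example above can be sketched as a version-tolerant reader. This is a minimal illustration using JSON payloads; the `User` dataclass, the `parse_user` function, and the `version` key are assumptions made for the example. The reader fills a default for the newer `email` field when it is missing and ignores fields it does not recognize.

```python
import json
from dataclasses import dataclass
from typing import Optional


@dataclass
class User:
    username: str
    email: Optional[str] = None  # added in a later version; absent in older payloads
    version: int = 1


def parse_user(payload: str) -> User:
    """Read a user message from any known or newer version of the format."""
    data = json.loads(payload)
    return User(
        username=data["username"],        # required in every version
        email=data.get("email"),          # default when an older producer omits it
        version=data.get("version", 1),   # payloads without a version count as v1
    )                                     # unknown extra fields are simply ignored


if __name__ == "__main__":
    print(parse_user('{"username": "johndoe"}'))
    print(parse_user('{"version": 2, "username": "johndoe", "email": "j@example.com"}'))
```

Because the reader never fails on a missing optional field or an unexpected extra one, producers and consumers can be upgraded in either order.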

Best Practices for Versioning

Following best practices for versioning data formats is essential for maintaining a stable and scalable system.

- Start with a Versioning Strategy: Plan for versioning from the beginning. Determine how you will manage schema changes and ensure backward compatibility.

- Use Semantic Versioning: Employ semantic versioning (e.g., Major.Minor.Patch) for your data formats. This clearly communicates the nature of the changes (breaking changes, new features, bug fixes).

- Add New Fields with Default Values: When adding new fields, provide default values so that services using the new schema can still process older data that lacks the field, while older services simply ignore the field they do not recognize.

- Make Fields Optional: Make fields optional when they are not essential for all services. This allows for gradual adoption of new features.

- Avoid Breaking Changes: Minimize breaking changes (e.g., removing fields or changing field types) whenever possible. If breaking changes are unavoidable, provide a migration path and communicate the changes clearly.

- Test Thoroughly: Test your services with different versions of the data format to ensure compatibility. Implement automated tests to catch potential issues early.

- Document Changes: Document all changes to your data formats, including version numbers, field additions/modifications, and any compatibility considerations.

- Monitor and Alert: Monitor the usage of different versions of your data formats and set up alerts to detect any compatibility issues.
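One way to make semantic versioning actionable is a small compatibility check performed when a message arrives. The sketch below assumes a policy in which a matching major version means the data can be read; the function names and that policy are illustrative assumptions, and a real system may need stricter rules.

```python
from typing import Tuple


def parse_version(version: str) -> Tuple[int, int, int]:
    """Split a 'Major.Minor.Patch' string into three integers."""
    major, minor, patch = (int(part) for part in version.split("."))
    return major, minor, patch


def can_read(reader_version: str, data_version: str) -> bool:
    """Assumed policy: only a major-version mismatch is a breaking change."""
    return parse_version(reader_version)[0] == parse_version(data_version)[0]


same_major = can_read("1.2.0", "1.5.3")       # minor/patch differences are tolerated
different_major = can_read("1.2.0", "2.0.0")  # major bump signals a breaking change
```

A service could use such a check to route messages with an unsupported major version to a dead-letter queue or a migration path instead of failing mid-processing.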

API Gateways and Reverse Proxies

API gateways and reverse proxies are critical components in a microservices architecture, acting as a central point of entry for client requests and managing the interactions with backend services. They enhance security, improve performance, and simplify the overall architecture by decoupling clients from the complexities of the underlying microservices.

Role of API Gateways in Managing Inter-Service Communication

The primary role of an API gateway is to mediate all client requests to the microservices. It sits in front of the backend services and provides a single entry point for clients, abstracting the internal complexities of the microservices architecture. This central point allows for consistent management and control of requests, security, and traffic.

Functionalities of an API Gateway

API gateways offer a wide range of functionalities that are essential for managing inter-service communication. These features contribute to improved security, performance, and manageability of the microservices.

- Routing: The API gateway routes incoming requests to the appropriate backend service based on the request’s URL, headers, or other criteria. This enables clients to interact with services without needing to know their specific locations or internal structure. For example, a request to `/users` might be routed to the `user-service`, while a request to `/products` goes to the `product-service`.

- Authentication: API gateways can handle authentication, verifying the identity of the client before forwarding the request to the backend services. This can involve verifying API keys, tokens (e.g., JWT), or other credentials. This centralized authentication mechanism simplifies security management and ensures consistent security policies across all services.

- Authorization: After authentication, the API gateway can enforce authorization rules, determining whether the authenticated client has permission to access a specific resource or perform a particular action. This ensures that only authorized users can access sensitive data or functionality.

- Rate Limiting: To protect backend services from overload and ensure fair usage, API gateways implement rate limiting. This restricts the number of requests a client can make within a specific time window. For instance, a gateway might limit a client to 100 requests per minute, preventing a single client from monopolizing resources.

- Request Transformation: API gateways can transform requests before forwarding them to the backend services. This can involve modifying headers, converting data formats, or aggregating data from multiple services. This allows the gateway to adapt requests to the specific requirements of the backend services.

- Response Transformation: Similar to request transformation, API gateways can also transform responses from backend services before sending them back to the client. This might involve formatting data, masking sensitive information, or aggregating data from multiple services into a single response.

- Monitoring and Logging: API gateways often provide comprehensive monitoring and logging capabilities, allowing administrators to track request traffic, identify performance bottlenecks, and detect potential security threats. This data is invaluable for troubleshooting issues and optimizing the performance of the microservices.

- Service Discovery Integration: API gateways can integrate with service discovery mechanisms to dynamically locate and route requests to the appropriate instances of backend services. This ensures that the gateway always routes requests to available and healthy service instances.
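To make the rate-limiting behaviour concrete, here is a minimal fixed-window limiter of the kind a gateway might apply per client. It is a sketch under simplifying assumptions: counters live in memory, old windows are never evicted, and the class name and `clock` parameter are hypothetical. Production gateways typically use shared storage and often smoother algorithms such as token buckets.

```python
import time
from collections import defaultdict


class FixedWindowRateLimiter:
    """Allow at most `limit` requests per client in each fixed time window."""

    def __init__(self, limit, window_seconds, clock=time.monotonic):
        self.limit = limit
        self.window_seconds = window_seconds
        self.clock = clock  # injectable so the limiter can be tested without sleeping
        self._counts = defaultdict(int)  # (client_id, window index) -> request count

    def allow(self, client_id):
        window_index = int(self.clock() // self.window_seconds)
        key = (client_id, window_index)
        if self._counts[key] >= self.limit:
            return False  # the gateway would answer with HTTP 429 here
        self._counts[key] += 1
        return True


# 100 requests per minute, as in the example above.
limiter = FixedWindowRateLimiter(limit=100, window_seconds=60)
first_request_allowed = limiter.allow("client-a")
```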

Configuring an API Gateway for a Sample Microservices Architecture

Consider a sample microservices architecture comprising three services: `user-service`, `product-service`, and `order-service`. The API gateway will handle requests to these services, providing a single point of entry. We will use a simplified configuration example.

Configuration Example (Conceptual – using a hypothetical configuration file format):

```yaml
# API Gateway Configuration
# Routes
routes:
  - path: /users/*
    service: user-service
    methods: [GET, POST, PUT, DELETE]
    authentication: jwt      # Use JWT for authentication
    rate_limit: 100/minute   # Rate limit 100 requests per minute
  - path: /products/*
    service: product-service
    methods: [GET]
  - path: /orders/*
    service: order-service
    methods: [POST]
    authentication: api_key  # Authenticate using API keys
```

Explanation of the Configuration:

- The configuration defines routes, each specifying a path, the backend service to which the request should be routed, the allowed HTTP methods, authentication requirements, and rate-limiting rules.

- For example, requests to `/users/*` are routed to the `user-service`, require JWT authentication, and are rate-limited to 100 requests per minute.

- Requests to `/products/*` are routed to the `product-service` without any authentication or rate limiting in this example.

- Requests to `/orders/*` are routed to the `order-service` and require API key authentication.

Implementation Notes:

- Actual API gateway implementations (e.g., Kong, Apigee, AWS API Gateway, Azure API Management, or similar) use different configuration formats.

- The configuration would typically involve defining endpoints, authentication methods, rate limits, and other features specific to the gateway’s capabilities.

- Service discovery integration is often configured separately, allowing the gateway to dynamically discover and route requests to the correct service instances.
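The routing rules in the conceptual configuration can be mirrored in a few lines of code to show how a gateway might resolve a request. The sketch below is an assumption-laden illustration: the `ROUTES` table and `resolve` function are hypothetical, and the `/users/*` style patterns are approximated with simple prefix matching.

```python
# Route table mirroring the conceptual configuration (prefix match stands in for "/*").
ROUTES = [
    {"prefix": "/users/", "service": "user-service",
     "methods": {"GET", "POST", "PUT", "DELETE"}, "authentication": "jwt"},
    {"prefix": "/products/", "service": "product-service",
     "methods": {"GET"}, "authentication": None},
    {"prefix": "/orders/", "service": "order-service",
     "methods": {"POST"}, "authentication": "api_key"},
]


def resolve(method, path):
    """Return (status, route): 200 with the matching route, else 404 or 405."""
    for route in ROUTES:
        if path.startswith(route["prefix"]):
            if method not in route["methods"]:
                return 405, None  # path is known, but the method is not allowed
            return 200, route
    return 404, None  # no route matches the path


status, route = resolve("GET", "/users/123")
```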

Diagram Illustrating Request Flow Through an API Gateway

The following diagram illustrates the flow of requests through an API gateway in a microservices architecture.

Diagram Description:

The diagram depicts the flow of a client request through an API gateway to a backend microservice. The client initiates a request to the API gateway. The API gateway receives the request and performs several actions.

The diagram can be described as follows:

- Client: Initiates a request (e.g., GET /users/123).

- API Gateway (request path):

  - Receives the request.

  - Authenticates the request (e.g., validates a JWT token).

  - Authorizes the request (e.g., checks if the user has permission to access the resource).

  - Applies rate limiting (e.g., checks if the request exceeds the rate limit).

  - Transforms the request if needed (e.g., converts data formats).

  - Routes the request to the appropriate backend service (e.g., `user-service`).

- Backend Service (e.g., `user-service`): Processes the request.

- API Gateway (response path):

  - Receives the response from the backend service.

  - Transforms the response if needed (e.g., masks sensitive data).

  - Returns the response to the client.

- Database (Optional): The backend service interacts with a database to store and retrieve data.

The arrows show the direction of the request and response. The API gateway acts as a central point, handling all incoming and outgoing traffic.
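The request flow described above can be sketched as a small pipeline of pluggable steps. Everything here is hypothetical scaffolding for illustration: `make_gateway`, the callables it accepts, and the `password_hash` field stand in for whatever a real gateway product provides.

```python
def make_gateway(authenticate, authorize, allow, backend):
    """Compose gateway steps into one handler; each step is a caller-supplied callable."""

    def handle(request):
        identity = authenticate(request)
        if identity is None:
            return {"status": 401}  # authentication failed
        if not authorize(identity, request):
            return {"status": 403}  # authenticated but not permitted
        if not allow(identity):
            return {"status": 429}  # rate limit exceeded
        response = backend(request)  # forward to the backend service
        body = dict(response.get("body", {}))
        body.pop("password_hash", None)  # response transformation: mask sensitive data
        return {"status": response["status"], "body": body}

    return handle
```

Rejecting a request at the earliest failing step keeps unauthenticated or throttled traffic from ever reaching the backend service.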

Error Handling and Resilience

Effective error handling and resilience are crucial for building robust and reliable inter-service communication. In a distributed system, services can fail independently, and these failures can cascade, leading to significant system-wide issues. Implementing strategies to handle errors gracefully and prevent cascading failures is essential to maintain service availability and a positive user experience.

Strategies for Handling Errors

Inter-service communication is inherently prone to errors due to network issues, service unavailability, and data corruption. Several strategies can be employed to handle these errors effectively.

- Retries: Retrying failed requests is a common technique. Implement retry mechanisms to automatically resend requests that initially fail. However, it is crucial to incorporate appropriate backoff strategies to avoid overwhelming the failing service.

- Circuit Breakers: Circuit breakers act as a protective mechanism to prevent cascading failures. When a service repeatedly fails, the circuit breaker “opens,” preventing further requests from being sent to the failing service. This allows the failing service time to recover and prevents the failure from impacting other services.

- Timeouts: Setting timeouts on requests is vital. Timeouts prevent a service from indefinitely waiting for a response from a failing service, which can consume resources and block threads.

- Rate Limiting: Rate limiting can protect services from being overwhelmed by excessive requests, especially during periods of high load or when a dependent service is experiencing issues.

- Error Logging and Monitoring: Comprehensive logging and monitoring are essential for identifying and diagnosing errors. Centralized logging and monitoring systems provide valuable insights into service behavior and facilitate proactive issue resolution.
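As an illustration of the timeout strategy, the following sketch bounds how long a caller waits for a dependency and returns a fallback value if the deadline passes. It is a generic standard-library approach, not a substitute for the timeout options of an HTTP or gRPC client; `call_with_timeout` and `slow_dependency` are hypothetical names.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from concurrent.futures import TimeoutError as FutureTimeout


def call_with_timeout(func, timeout_seconds, fallback=None):
    """Run func in a worker thread and stop waiting after timeout_seconds."""
    pool = ThreadPoolExecutor(max_workers=1)
    future = pool.submit(func)
    try:
        return future.result(timeout=timeout_seconds)
    except FutureTimeout:
        # The worker thread is not cancelled; the caller simply stops waiting for it.
        return fallback
    finally:
        pool.shutdown(wait=False)


def slow_dependency():
    time.sleep(0.3)  # simulates a service that responds too slowly
    return "late response"


result = call_with_timeout(slow_dependency, timeout_seconds=0.05, fallback="cached value")
```

Where the client library supports it, setting a timeout on the request itself is preferable, because it also releases the underlying connection.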

Implementing Circuit Breakers

Circuit breakers are a fundamental pattern for building resilient systems. They monitor the health of a service and prevent cascading failures by isolating failing services.

- States of a Circuit Breaker: A circuit breaker typically has three states:

  - Closed: In the closed state, requests are passed through to the service. The circuit breaker monitors the success and failure rates of requests.

  - Open: When the failure rate exceeds a predefined threshold, the circuit breaker “opens.” In the open state, all requests are immediately rejected without being sent to the service. This prevents further requests from overwhelming the failing service.

  - Half-Open: After a period, the circuit breaker transitions to the half-open state. In this state, a limited number of requests are allowed to pass through to the service. If these requests succeed, the circuit breaker closes. If they fail, the circuit breaker opens again.

- Failure Threshold: Define a failure threshold (e.g., the percentage of failed requests within a specific time window) to determine when the circuit breaker should open.

- Trip Duration: Specify the duration for which the circuit breaker remains in the open state before transitioning to the half-open state.

- Implementation Libraries: Several libraries and frameworks provide circuit breaker implementations (e.g., Hystrix, Resilience4j).
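The three states and their transitions can be sketched in a few dozen lines. This is a simplified illustration rather than how Hystrix or Resilience4j work internally: it counts consecutive failures instead of a failure rate over a time window, allows a single trial call in the half-open state, and is not thread-safe. The class and parameter names are assumptions.

```python
import time


class CircuitOpenError(Exception):
    """Raised when a call is rejected because the circuit is open."""


class CircuitBreaker:
    def __init__(self, failure_threshold=3, trip_duration=30.0, clock=time.monotonic):
        self.failure_threshold = failure_threshold  # consecutive failures before opening
        self.trip_duration = trip_duration          # seconds to stay open
        self.clock = clock                          # injectable for testing
        self.state = "closed"
        self.failures = 0
        self.opened_at = 0.0

    def call(self, func):
        if self.state == "open":
            if self.clock() - self.opened_at < self.trip_duration:
                raise CircuitOpenError("circuit is open; request rejected")
            self.state = "half-open"  # trip duration elapsed: allow a trial request
        try:
            result = func()
        except Exception:
            self._record_failure()
            raise
        self._record_success()
        return result

    def _record_failure(self):
        self.failures += 1
        if self.state == "half-open" or self.failures >= self.failure_threshold:
            self.state = "open"
            self.opened_at = self.clock()

    def _record_success(self):
        self.state = "closed"
        self.failures = 0
```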

Implementing Retry Mechanisms with Exponential Backoff

Retry mechanisms are essential for handling transient errors, such as temporary network glitches or service unavailability. Exponential backoff is a crucial component of a retry strategy.

- Exponential Backoff Formula: Exponential backoff increases the delay between retry attempts exponentially. This helps to avoid overwhelming the failing service and allows it time to recover. The formula for exponential backoff is:

`delay = base * multiplier ^ attempts`

where:

- `base` is the initial delay (e.g., 1 second).

- `multiplier` is the factor by which the delay increases (e.g., 2).

- `attempts` is the number of retry attempts.

- Example Implementation:

```python
import random
import time


def retry_with_exponential_backoff(func, retries=3, base_delay=1, multiplier=2, max_delay=10):
    """Call func, retrying failed attempts with exponential backoff and jitter."""
    for attempt in range(retries + 1):
        try:
            return func()
        except Exception:
            if attempt == retries:
                raise  # Re-raise the exception after all retries
            # Exponential backoff, capped at max_delay
            delay = min(base_delay * (multiplier ** attempt), max_delay)
            sleep_time = delay * random.uniform(0.5, 1.5)  # Add some jitter
            print(f"Attempt {attempt + 1} failed. Retrying in {sleep_time:.2f} seconds...")
            time.sleep(sleep_time)
```

This example defines a function `retry_with_exponential_backoff` that takes a function (`func`) to be retried, the maximum number of retries, the base delay, the multiplier, and the maximum delay. It attempts to execute the function. If the call fails, it calculates the delay using exponential backoff, adds jitter to avoid thundering herd problems, and sleeps for the calculated time before retrying.

- Jitter: Add a small amount of randomness (jitter) to the backoff delay to prevent multiple clients from retrying simultaneously, which can overwhelm the service.

- Retry Conditions: Define the conditions under which retries should occur (e.g., specific HTTP status codes, exception types).
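As a worked example of the formula and of retry conditions, the sketch below computes the capped delay schedule (before jitter) and decides whether a failure is worth retrying. The set of retryable status codes and the exception types are assumptions chosen for illustration; each system should pick its own.

```python
# Assumed set of HTTP status codes that usually indicate a transient problem.
RETRYABLE_STATUS = {429, 502, 503, 504}


def backoff_delays(base=1.0, multiplier=2.0, retries=5, max_delay=10.0):
    """Delay before each retry: base * multiplier ** attempt, capped at max_delay."""
    return [min(base * multiplier ** attempt, max_delay) for attempt in range(retries)]


def should_retry(status_code=None, error=None):
    """Retry transient network errors and selected status codes only."""
    if error is not None:
        return isinstance(error, (ConnectionError, TimeoutError))
    return status_code in RETRYABLE_STATUS


schedule = backoff_delays()  # [1.0, 2.0, 4.0, 8.0, 10.0]
```

With a base of 1 second and a multiplier of 2, the fifth delay would be 16 seconds, so the 10-second cap takes effect.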

Best Practices for Implementing Resilience Patterns

Implementing resilience patterns effectively requires careful consideration of various factors.

- Monitor and Alert: Implement robust monitoring and alerting to detect failures and trigger appropriate responses. Set up alerts for circuit breaker state changes, retry failures, and high error rates.

- Test Thoroughly: Rigorously test resilience patterns under various failure scenarios (e.g., service downtime, network latency) to ensure they function as expected. Simulate failures in a controlled environment to validate the effectiveness of retry mechanisms, circuit breakers, and timeouts.

- Limit the Scope of Retries: Avoid retrying requests that have side effects (e.g., creating resources). Retry only idempotent operations (operations that can be executed multiple times without unintended consequences).

- Document Everything: Clearly document the resilience patterns implemented, including the retry strategy, circuit breaker configuration, and timeout values. This documentation should be easily accessible and maintained.

- Choose Appropriate Libraries: Leverage established libraries and frameworks that provide pre-built implementations of resilience patterns. These libraries often offer features like monitoring, metrics, and configuration options. Examples include Resilience4j (Java), Polly (.NET), and Hystrix (Java, though no longer actively developed).

- Consider Idempotency: Design your services to be idempotent, allowing safe retries of requests. Idempotent operations can be executed multiple times without changing the result beyond the initial execution. This is crucial for retry mechanisms to work correctly.

- Prioritize Root Cause Analysis: While resilience patterns help mitigate the impact of failures, it’s crucial to identify and address the root causes of errors. Regularly analyze logs and metrics to understand the underlying issues and prevent them from recurring.
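One common way to make a non-idempotent operation safe to retry is an idempotency key supplied by the caller. The sketch below keeps results in an in-memory dictionary purely for illustration; `PaymentService` and its fields are hypothetical, and a real service would persist the keys in shared storage with an expiry.

```python
class PaymentService:
    """Hypothetical service that deduplicates charges by idempotency key."""

    def __init__(self):
        self._results = {}  # idempotency key -> response already returned
        self.charges_made = 0

    def charge(self, idempotency_key, amount):
        # A retried request with the same key gets the stored response back
        # instead of triggering a second charge.
        if idempotency_key in self._results:
            return self._results[idempotency_key]
        self.charges_made += 1
        result = {"charge_id": self.charges_made, "amount": amount}
        self._results[idempotency_key] = result
        return result


service = PaymentService()
first = service.charge("order-42", 19.99)
retried = service.charge("order-42", 19.99)  # e.g. the client timed out and retried
```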

Security Considerations

Securing inter-service communication is paramount in a microservices architecture. The distributed nature of these systems introduces unique security challenges that must be addressed to protect sensitive data and ensure the overall integrity of the application. Failure to implement robust security measures can expose the system to various threats, including unauthorized access, data breaches, and denial-of-service attacks.

Security Challenges in Inter-Service Communication

Several security challenges arise when services communicate with each other. These challenges stem from the increased attack surface and the complexities of managing security across a distributed environment.

- Authentication and Authorization: Verifying the identity of each service and controlling its access to resources is crucial. Without proper authentication and authorization, malicious actors could impersonate legitimate services or gain unauthorized access to sensitive data.

- Data Encryption: Protecting data in transit is essential to prevent eavesdropping and data breaches. Encryption ensures that even if the communication is intercepted, the data remains unreadable to unauthorized parties.

- Network Security: Services often communicate over a network, which introduces vulnerabilities such as man-in-the-middle attacks and denial-of-service attacks. Implementing network security measures, such as firewalls and intrusion detection systems, is crucial.

- Vulnerability Management: Regularly identifying and addressing vulnerabilities in the services and their dependencies is vital. This includes patching software, updating libraries, and performing security audits.

- Secrets Management: Securely storing and managing sensitive information, such as API keys, passwords, and certificates, is a significant challenge. Hardcoding secrets or storing them insecurely can lead to significant security risks.

Authentication and Authorization Methods for Securing Service-to-Service Communication

Several methods can be used to authenticate and authorize service-to-service communication, ensuring that only authorized services can access protected resources. The choice of method depends on the specific requirements of the application and the communication protocols used.

- API Keys: Services can be authenticated using API keys, which are unique identifiers associated with each service. The calling service includes the API key in the request header, and the receiving service validates the key. While simple to implement, API keys can be vulnerable if not managed securely.

- JSON Web Tokens (JWTs): JWTs are a standard for securely transmitting information between parties as a JSON object. A JWT contains claims, such as the service’s identity and permissions. Services can use JWTs to authenticate and authorize requests, enabling fine-grained access control.

- OAuth 2.0: OAuth 2.0 is a widely used authorization framework that allows a service to access resources on behalf of a user or another service. It provides a secure and standardized way to delegate access without sharing credentials.

- Mutual TLS (mTLS): mTLS is an extension of TLS in which both the client and the server present certificates, so each side authenticates the other. Combined with TLS encryption, this provides a strong form of authentication and makes it difficult for attackers to intercept traffic or impersonate services.
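To show what a signed token looks like in practice, here is a minimal HS256 JWT sketch built only on the standard library. It is meant to illustrate the header.payload.signature structure and the verification steps; the function names are hypothetical, and production code should rely on a maintained JWT library and a properly managed secret rather than this sketch.

```python
import base64
import hashlib
import hmac
import json
import time


def _b64url(data: bytes) -> str:
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def _b64url_decode(text: str) -> bytes:
    return base64.urlsafe_b64decode(text + "=" * (-len(text) % 4))


def sign_token(claims: dict, secret: bytes) -> str:
    """Build header.payload.signature using HMAC-SHA256 (alg HS256)."""
    header = _b64url(json.dumps({"alg": "HS256", "typ": "JWT"}, separators=(",", ":")).encode())
    payload = _b64url(json.dumps(claims, separators=(",", ":")).encode())
    signing_input = f"{header}.{payload}"
    signature = hmac.new(secret, signing_input.encode("ascii"), hashlib.sha256).digest()
    return f"{signing_input}.{_b64url(signature)}"


def verify_token(token: str, secret: bytes, now=None) -> dict:
    """Return the claims if the signature and expiry check out, else raise ValueError."""
    parts = token.split(".")
    if len(parts) != 3:
        raise ValueError("malformed token")
    header_b64, payload_b64, signature_b64 = parts
    if json.loads(_b64url_decode(header_b64)).get("alg") != "HS256":
        raise ValueError("unexpected algorithm")
    expected = hmac.new(secret, f"{header_b64}.{payload_b64}".encode("ascii"), hashlib.sha256).digest()
    if not hmac.compare_digest(expected, _b64url_decode(signature_b64)):
        raise ValueError("bad signature")
    claims = json.loads(_b64url_decode(payload_b64))
    current_time = time.time() if now is None else now
    if claims.get("exp", float("inf")) < current_time:
        raise ValueError("token expired")
    return claims
```

A calling service would send the token in a request header, and the receiving service would call `verify_token` before acting on claims such as the caller's identity.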

Implementing Mutual TLS (mTLS) for Secure Communication

Mutual TLS (mTLS) provides a robust method for securing service-to-service communication by verifying the identity of both the client and the server. Implementing mTLS involves several steps, including generating and managing certificates, configuring services to use TLS, and validating certificates during communication.

- Certificate Generation: Each service requires a digital certificate issued by a trusted Certificate Authority (CA) or a self-signed certificate for testing purposes. The certificate contains the service’s identity and public key.

- Certificate Distribution: The certificates must be securely distributed to the services. This can be done using a secrets management system or by manually configuring each service.

- Server Configuration: The server-side service must be configured to require client certificates for all incoming connections. This ensures that only clients with valid certificates can establish a connection.

- Client Configuration: The client-side service must be configured to present its certificate to the server during the TLS handshake. The client also needs to trust the CA that issued the server’s certificate.

- Communication: When a client service initiates communication with a server service, the following steps occur:

  - The client service sends a TLS handshake request to the server.

  - The server presents its certificate to the client.

  - The client verifies the server’s certificate, ensuring that it is valid and trusted.

  - The client presents its certificate to the server.

  - The server verifies the client’s certificate, ensuring that it is valid and trusted.

  - If both certificates are valid, a secure TLS connection is established.
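The server and client configuration steps above map onto Python's standard `ssl` module roughly as follows. This is a sketch of the context setup only: the helper names are hypothetical, the certificate, key, and CA file paths are supplied by the caller, and wrapping actual sockets is left out.

```python
import ssl


def base_server_context() -> ssl.SSLContext:
    """Server-side context that refuses clients without a valid certificate."""
    ctx = ssl.SSLContext(ssl.PROTOCOL_TLS_SERVER)
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    ctx.verify_mode = ssl.CERT_REQUIRED  # this setting is what makes the TLS mutual
    return ctx


def base_client_context() -> ssl.SSLContext:
    """Client-side context; verifies the server certificate and hostname by default."""
    ctx = ssl.SSLContext(ssl.PROTOCOL_TLS_CLIENT)
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    return ctx


def load_identity(ctx: ssl.SSLContext, cert_file: str, key_file: str, ca_file: str) -> ssl.SSLContext:
    """Attach this service's own certificate and the CA used to verify the peer."""
    ctx.load_cert_chain(certfile=cert_file, keyfile=key_file)
    ctx.load_verify_locations(cafile=ca_file)
    return ctx
```

Both sides call `load_identity` with their own certificate and key plus the CA bundle; the server then wraps its listening socket with its context and the client wraps its outgoing connection.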

Example using Envoy proxy:

In a Kubernetes environment, a service mesh that uses Envoy as its sidecar proxy (for example, Istio) can implement mTLS. Each service has an Envoy sidecar proxy injected into its pod. The mesh control plane issues and rotates the certificates, and the Envoy proxies perform the mTLS handshake on behalf of the services. When service A wants to communicate with service B, service A’s Envoy proxy initiates a TLS connection to service B’s Envoy proxy. The proxies handle the certificate exchange and validation, so the application code does not need to manage TLS itself.

Comparison of Security Measures for Different Communication Protocols

The following table compares the security measures that can be applied to different communication protocols used in inter-service communication. The choice of protocol and security measures depends on the specific needs of the application.

| Protocol | Authentication | Authorization | Encryption |
|---|---|---|---|
| HTTP/HTTPS | API Keys, JWTs, OAuth 2.0, mTLS | Role-Based Access Control (RBAC), Attribute-Based Access Control (ABAC) | TLS/SSL |
| gRPC | mTLS, JWTs | gRPC interceptors, custom authorization logic | TLS |
| Message Queues (e.g., Kafka, RabbitMQ) | Username/Password, mTLS, SASL/SCRAM | Access Control Lists (ACLs), Role-Based Access Control (RBAC) | TLS/SSL |

Monitoring and Logging

Monitoring and logging are crucial for maintaining the health, performance, and security of inter-service communication within a microservices architecture. Without effective monitoring and logging, it becomes incredibly difficult to diagnose issues, optimize performance, and ensure the overall reliability of the system. These practices provide visibility into the interactions between services, allowing for proactive identification and resolution of problems.

Importance of Monitoring and Logging

Monitoring and logging provide critical insights into the behavior of a microservices architecture. This information is vital for several reasons.

- Debugging: Logs provide detailed information about the execution of services, enabling developers to quickly identify and resolve errors. They pinpoint the exact location of issues, including error messages, stack traces, and context-specific data.

- Performance Optimization: Monitoring allows for the identification of performance bottlenecks. Metrics like latency, throughput, and error rates can be tracked to reveal slow services or inefficient communication patterns.

- Proactive Issue Detection: Monitoring tools can detect anomalies and unusual behavior before they impact users. Setting up alerts based on predefined thresholds allows for rapid response to potential problems.

- Security Auditing: Logs record security-related events, such as authentication attempts, access control violations, and suspicious activities. This information is essential for security audits and incident response.

- Capacity Planning: Monitoring resource utilization (CPU, memory, network) helps in capacity planning. Understanding resource consumption patterns allows for efficient scaling and resource allocation.

- Compliance: Logging and monitoring are often required for regulatory compliance in industries like finance and healthcare.

Collecting and Analyzing Logs

Collecting and analyzing logs effectively requires a structured approach to ensure data is easily accessible and interpretable. This includes choosing the right logging framework, centralizing log aggregation, and utilizing appropriate analysis tools.

- Choosing a Logging Framework: Select a logging framework that integrates well with the chosen programming languages and frameworks. Popular options include Log4j 2 and SLF4J (Java) and Winston (Node.js).

- Structured Logging: Implement structured logging. This involves logging data in a consistent, machine-readable format, such as JSON. This allows for easier parsing, filtering, and analysis. For example:

```json
{
  "timestamp": "2024-01-20T10:00:00Z",
  "service": "user-service",
  "level": "ERROR",
  "message": "Failed to retrieve user data",
  "userId": "123",
  "error": "Database connection timeout"
}
```

- Centralized Log Aggregation: Use a centralized log aggregation system to collect logs from all services in a single location. This simplifies searching, filtering, and analysis. Common tools include the ELK stack (Elasticsearch, Logstash, Kibana), Splunk, and Graylog.

- Log Analysis Tools: Employ log analysis tools to search, filter, and visualize log data. These tools help in identifying patterns, trends, and anomalies. Kibana, for instance, allows for the creation of dashboards to monitor key metrics.

- Alerting: Configure alerts to notify teams of critical events or performance issues. These alerts can be triggered based on specific log messages, error rates, or performance thresholds.
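Structured JSON logs like the record shown above can be produced with Python's standard `logging` module and a custom formatter. The sketch below is one possible arrangement: the `JsonFormatter` class and the convention of passing extra fields under a `context` key are assumptions for this example, not a standard API.

```python
import json
import logging
from datetime import datetime, timezone


class JsonFormatter(logging.Formatter):
    """Render each log record as a single JSON object."""

    def __init__(self, service):
        super().__init__()
        self.service = service

    def format(self, record):
        entry = {
            "timestamp": datetime.fromtimestamp(record.created, tz=timezone.utc).isoformat(),
            "service": self.service,
            "level": record.levelname,
            "message": record.getMessage(),
        }
        # Assumed convention: callers pass extra fields as extra={"context": {...}}.
        entry.update(getattr(record, "context", {}))
        return json.dumps(entry)


logger = logging.getLogger("user-service")
handler = logging.StreamHandler()
handler.setFormatter(JsonFormatter("user-service"))
logger.addHandler(handler)

logger.error(
    "Failed to retrieve user data",
    extra={"context": {"userId": "123", "error": "Database connection timeout"}},
)
```

Because every line is a self-contained JSON object, a log aggregator can index fields such as `userId` without custom parsing rules.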

Using Distributed Tracing Tools

Distributed tracing provides a comprehensive view of requests as they traverse multiple services. This is particularly useful for identifying performance bottlenecks and understanding the flow of requests across a microservices architecture. Tools like Jaeger and Zipkin are designed for this purpose.

- Jaeger: Jaeger is a distributed tracing system that allows you to trace requests as they move through a microservices architecture. It visualizes the entire path of a request, including the time spent in each service and the dependencies between services. It is particularly effective for pinpointing latency issues and identifying services that are causing performance problems.

- Zipkin: Zipkin is another popular distributed tracing system. It is inspired by Google’s Dapper and provides similar functionality to Jaeger. It allows you to trace requests across multiple services and visualize the call graphs. Zipkin provides detailed information about each span, including timing information and any relevant tags.

- Implementation Steps:

  - Instrumentation: Instrument the services to generate trace data. This typically involves adding tracing libraries to the code and configuring them to propagate trace context.

  - Trace Propagation: Ensure that the trace context is propagated across service boundaries. This is usually done by passing trace headers in HTTP requests.

  - Data Collection: Configure the services to send trace data to a tracing backend (e.g., Jaeger or Zipkin).

  - Visualization: Use the tracing UI to visualize the traces and analyze the performance of the services.

- Benefits:

  - End-to-end Request Tracking: Tracing provides a complete view of a request’s journey through all services.

  - Performance Analysis: It allows you to identify slow services and bottlenecks.

  - Debugging: It simplifies debugging by showing the sequence of events and the time spent in each service.

  - Dependency Mapping: It helps you understand the dependencies between services.
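Trace propagation is easier to picture with a concrete header. The sketch below builds and forwards a `traceparent` value in the W3C Trace Context format (`version-traceid-parentid-flags`), which is one widely used convention; tracing libraries such as OpenTelemetry normally handle this automatically, and the helper names here are hypothetical.

```python
import re
import secrets

# version "00", 32-hex trace id, 16-hex parent (span) id, 2-hex flags
TRACEPARENT = re.compile(r"^00-([0-9a-f]{32})-([0-9a-f]{16})-([0-9a-f]{2})$")


def new_traceparent() -> str:
    """Start a new trace: fresh trace id and span id, sampled flag set."""
    return f"00-{secrets.token_hex(16)}-{secrets.token_hex(8)}-01"


def child_traceparent(incoming) -> str:
    """Keep the caller's trace id but issue a new span id for the outgoing call."""
    match = TRACEPARENT.match(incoming or "")
    if match is None:
        return new_traceparent()  # missing or invalid header: start a new trace
    trace_id, _parent_id, flags = match.groups()
    return f"00-{trace_id}-{secrets.token_hex(8)}-{flags}"


incoming_header = new_traceparent()                   # e.g. set by the API gateway
outgoing_header = child_traceparent(incoming_header)  # sent to the next service
```

Because every hop reuses the same trace id, the tracing backend can stitch the spans from all services into a single end-to-end trace.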

Diagram of a Typical Monitoring and Logging Setup

The diagram illustrates a typical setup for monitoring and logging in a microservices environment. The components involved are:

Components:

- Microservices: These are the individual services that make up the application. Each service is instrumented to generate logs and trace data.

- Logging Frameworks: Frameworks like Log4j, SLF4J, or Winston are used within each microservice to generate structured logs.

- Distributed Tracing Libraries: Libraries like OpenTelemetry, Jaeger client, or Zipkin client are integrated into the microservices to capture and propagate trace information.

- Log Aggregator: A centralized log aggregation system (e.g., ELK stack, Splunk, Graylog) collects logs from all microservices.

- Tracing Backend: A tracing backend (e.g., Jaeger, Zipkin) receives trace data from the microservices.

- Monitoring Tools: Tools such as Prometheus and Grafana collect and visualize metrics from the microservices and infrastructure.

- Alerting System: An alerting system (e.g., PagerDuty, Alertmanager) monitors the logs, metrics, and traces and sends notifications when issues are detected.

- Dashboard and UI: Dashboards and user interfaces provide a centralized view of logs, metrics, and traces.

Data Flow:

- Logs: Microservices generate logs using logging frameworks. These logs are sent to the log aggregator.

- Traces: Microservices use distributed tracing libraries to generate trace data. This data is sent to the tracing backend.

- Metrics: Microservices expose metrics that are collected by the monitoring tools.

- Analysis and Visualization: The log aggregator, tracing backend, and monitoring tools provide analysis and visualization capabilities.

- Alerting: The alerting system monitors the data and sends alerts when issues are detected.

Diagram Description:

The diagram presents a clear visual representation of how these components interact. Microservices generate logs, metrics, and traces. Logs are collected by a log aggregator. Traces are sent to a tracing backend. Metrics are collected by a monitoring system.

The log aggregator, tracing backend, and monitoring system feed data to dashboards and UIs, enabling comprehensive monitoring. An alerting system monitors all data streams, triggering notifications based on predefined thresholds. The diagram demonstrates a complete end-to-end flow of data, from generation to analysis and alerting, enabling efficient monitoring and logging across a microservices architecture.

Outcome Summary

In conclusion, mastering inter-service communication is not merely a technical necessity but a strategic imperative for anyone embracing microservices. From selecting appropriate protocols and embracing asynchronous patterns to prioritizing security and robust monitoring, each facet contributes to a more resilient, scalable, and maintainable system. By embracing the strategies outlined in this guide, you can confidently navigate the challenges of inter-service communication and unlock the full potential of a microservices architecture, leading to more efficient and adaptable software solutions.

Helpful Answers

What is the main difference between REST and gRPC?

REST typically uses JSON over HTTP and is human-readable, making it easier to debug. gRPC uses Protocol Buffers, offering higher performance and efficiency due to binary data transfer, but with a steeper learning curve.

Why is service discovery important?

Service discovery enables services to dynamically locate each other, especially in environments where service instances are frequently added, removed, or scaled. It eliminates the need for hardcoded service addresses.

What are circuit breakers, and why are they useful?

Circuit breakers prevent cascading failures by monitoring service health and temporarily halting requests to failing services. This allows the failing service to recover without bringing down the entire system.

How do you handle data versioning in inter-service communication?

Data versioning involves strategies like using version numbers in API endpoints, providing backward compatibility, and using schema evolution tools to ensure that changes to data formats don’t break existing services.

What is the role of an API gateway?

An API gateway acts as a central entry point for all client requests, handling routing, authentication, authorization, rate limiting, and other cross-cutting concerns, simplifying the management of microservices.