Cloud computing has revolutionized how applications and services are delivered, yet the crucial element of fault tolerance often gets overlooked. This comprehensive guide delves into the intricacies of designing for fault tolerance in cloud environments, providing a roadmap for building resilient and dependable systems. Understanding the nuances of different deployment models, strategies, and considerations is key to achieving high availability and minimizing downtime.

From the fundamental concepts of fault tolerance to practical implementation strategies, this guide explores the essential steps for creating robust cloud architectures. It examines various aspects, including database design, infrastructure considerations, microservice architecture, security, monitoring, and cost optimization. The discussion is anchored in practical examples and real-world case studies, making the concepts tangible and applicable.

Introduction to Fault Tolerance in Cloud Design

Fault tolerance in cloud computing is the ability of a system or application to continue operating even when some components fail. This resilience is crucial for maintaining service availability and preventing disruptions to users. Modern cloud applications, from online banking to social media platforms, require high levels of uptime and reliability. The absence of fault tolerance can lead to significant financial losses, reputational damage, and even legal repercussions.

The importance of fault tolerance stems from the distributed and dynamic nature of cloud environments. Failures can originate from various sources, including hardware malfunctions, software bugs, network outages, or even human errors. By designing for fault tolerance, cloud providers and developers can minimize the impact of these failures, ensuring consistent service delivery and user experience.

Definition of Fault Tolerance in Cloud Computing

Fault tolerance in cloud computing refers to the capability of a system to maintain its functionality and service availability despite component failures. This involves redundant systems and mechanisms that automatically take over when failures occur. The key aspect is that the system continues to operate without significant interruption or loss of data, even when individual parts or resources experience issues.

This is achieved through strategies like replication, load balancing, and failover mechanisms.

Importance of Fault Tolerance for Cloud Applications and Services

Fault tolerance is paramount for maintaining the reliability and availability of cloud applications and services. Uninterrupted service is critical for users’ trust and satisfaction. In mission-critical applications, downtime can result in significant financial losses or disruptions to operations. For example, e-commerce platforms rely on uninterrupted service to process transactions and maintain customer trust. A fault-tolerant system minimizes downtime and ensures a consistent and reliable user experience.

Key Benefits of Building Fault-Tolerant Cloud Systems

Building fault-tolerant cloud systems offers numerous advantages. High availability and minimal downtime are achieved by mitigating the impact of failures. Operational effort can fall because automated recovery reduces manual troubleshooting and emergency maintenance. Improved user satisfaction and trust follow from a consistent and reliable service. Finally, the resilient nature of fault-tolerant systems strengthens business continuity and disaster recovery capabilities.

Challenges Associated with Achieving Fault Tolerance in Cloud Environments

Implementing fault tolerance in cloud environments presents specific challenges. The dynamic and distributed nature of cloud infrastructures necessitates intricate design and management. Complexity increases with the scale and variety of components. Maintaining consistency and data integrity across redundant systems is critical. Cost optimization can be a challenge when implementing redundant infrastructure.

Fault Tolerance Strategies Comparison

Different strategies are employed to achieve fault tolerance. The choice depends on the specific application needs and resources.

| Strategy | Description | Advantages | Disadvantages |
|---|---|---|---|
| Replication | Creating multiple copies of data or components across different servers or locations. | High availability, data redundancy, improved disaster recovery. | Increased storage costs, complexity in data synchronization. |
| Load Balancing | Distributing incoming requests across multiple servers to prevent overload on any single resource. | Improved performance, reduced response times, enhanced scalability. | Requires sophisticated routing and monitoring systems. |
| Failover | Automatic switching to a backup system or component when the primary one fails. | Minimal downtime, ensures continuous operation. | Requires robust failover mechanisms, potential for data loss if not properly managed. |

Understanding Cloud Deployment Models

Cloud deployment models significantly impact fault tolerance strategies. Choosing the appropriate model depends on factors like the level of control desired, the complexity of applications, and the required resilience to failures. Understanding the specific responsibilities of each model regarding fault tolerance is crucial for successful cloud-based system design.

Differences in Fault Tolerance Responsibility Across Deployment Models

Different cloud deployment models allocate varying degrees of responsibility for fault tolerance to the provider and the user. This allocation directly influences the strategies employed and the level of control achievable. The Infrastructure as a Service (IaaS), Platform as a Service (PaaS), and Software as a Service (SaaS) models each differ in their approach to fault tolerance.

IaaS: Infrastructure as a Service

IaaS provides the most granular level of control, allowing users to manage their infrastructure, including servers, storage, and networks. This level of control necessitates a robust understanding of fault tolerance strategies, as the user is responsible for implementing and maintaining these mechanisms. Users must design and deploy redundant components, configure failover mechanisms, and implement monitoring systems. Implementing a highly available architecture, including load balancers and geographically distributed instances, falls under the user’s purview.

PaaS: Platform as a Service

PaaS abstracts the infrastructure layer, offering a platform for developers to build and deploy applications. Fault tolerance responsibilities are shared. The provider manages the underlying infrastructure, and the user focuses on application logic and deployment. PaaS platforms often provide built-in features for fault tolerance, such as automatic scaling and load balancing. The user, however, may need to configure specific aspects of fault tolerance within the platform’s constraints.

For instance, the user may have options for configuring deployment across multiple regions for high availability.

SaaS: Software as a Service

SaaS provides the most abstracted view, where the provider manages the entire infrastructure and application. The user interacts with the application through a front-end interface. Fault tolerance of the service is the responsibility of the provider. Users benefit from the provider’s experience in operating distributed, high-availability architectures. However, the user typically has minimal control over the specifics of the fault tolerance mechanisms.

Providers often utilize sophisticated techniques like clustering, load balancing, and redundant systems to ensure high availability and reliability.

Comparison of Fault Tolerance Considerations Across Deployment Models

| Deployment Model | Fault Tolerance Responsibility | Fault Tolerance Strategies | Level of Abstraction | Level of Management |
|---|---|---|---|---|
| IaaS | User | Redundancy, failover, monitoring, load balancing, distributed instances | Lowest | Highest |
| PaaS | Shared (Provider & User) | Automatic scaling, load balancing, potentially regional deployments | Medium | Medium |
| SaaS | Provider | Clustering, load balancing, redundant systems, distributed architecture | Highest | Lowest |

Fault Tolerance Strategies for Cloud Applications

Cloud applications must be designed to withstand failures, ensuring continuous service delivery. Robust fault tolerance strategies are crucial for maintaining high availability and minimizing downtime. These strategies encompass various techniques for replicating data, load balancing traffic, and implementing failover mechanisms, all aimed at ensuring that the application remains operational even when components fail.

Redundancy in Cloud Architectures

Redundancy is a fundamental concept in cloud architecture. It involves creating multiple copies of critical resources, such as servers, storage, and applications. This redundancy acts as a safeguard against failures, allowing the system to continue operating even if a component becomes unavailable. Implementing redundant components ensures high availability and business continuity by providing alternative paths for data access and processing.

A common example is having multiple servers hosting the same application; if one fails, others can take over, maintaining service uninterrupted.

Data Redundancy Strategies

Various strategies are employed to achieve data redundancy. Replication involves creating copies of data across multiple storage locations. Backups, on the other hand, create copies of data at a specific point in time, often for disaster recovery purposes. Replication is crucial for high availability, enabling quick failover to a backup instance in case of a primary failure.

Backups are vital for data recovery in case of data loss or corruption, serving as a recovery mechanism.

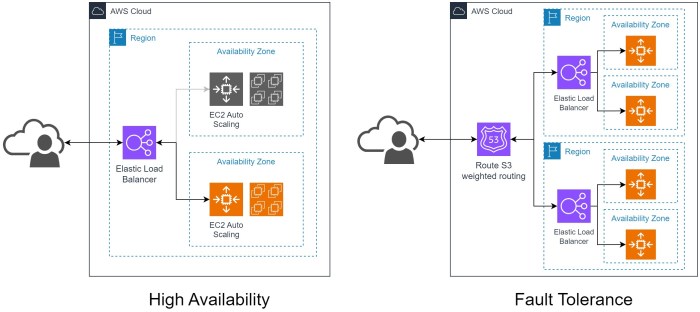

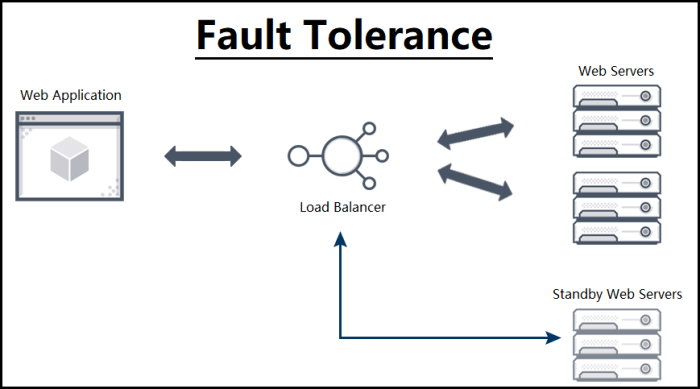

Application Availability through Load Balancing and Failover

Load balancing distributes incoming traffic across multiple servers, preventing any single server from becoming overloaded. This strategy is essential for handling high traffic volumes and ensuring consistent performance. Failover mechanisms automatically switch traffic to a backup server if the primary server fails, ensuring uninterrupted service. This combination of strategies allows cloud applications to handle peak loads and maintain availability during failures.

For example, a web application can have multiple servers; load balancing distributes user requests, and a failover mechanism ensures seamless operation if one server fails.
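The interplay of load balancing and failover can be sketched in a few lines. This is a minimal illustration, not a production balancer: the class `RoundRobinBalancer`, its methods, and the server names are hypothetical, and health status is set by hand rather than by real health checks.

```python
class RoundRobinBalancer:
    """Rotate requests across servers, skipping any marked unhealthy."""

    def __init__(self, servers):
        self.servers = list(servers)
        self.healthy = set(self.servers)
        self._next = 0  # index of the next server in the rotation

    def mark_down(self, server):
        self.healthy.discard(server)

    def mark_up(self, server):
        if server in self.servers:
            self.healthy.add(server)

    def pick(self):
        # Try each server at most once, starting from the rotation pointer.
        for _ in range(len(self.servers)):
            server = self.servers[self._next]
            self._next = (self._next + 1) % len(self.servers)
            if server in self.healthy:
                return server
        raise RuntimeError("no healthy servers available")


balancer = RoundRobinBalancer(["web-1", "web-2", "web-3"])
first_round = [balancer.pick() for _ in range(3)]

balancer.mark_down("web-2")  # simulate a server failure
after_failure = [balancer.pick() for _ in range(4)]
```

After `web-2` is marked down, requests keep flowing to the remaining servers without any change on the caller's side, which is the behavior the failover mechanism is meant to provide.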

Monitoring and Alerting for Failure Detection

Monitoring and alerting systems play a critical role in detecting and responding to failures. These systems track the health and performance of various components, providing early warnings of potential issues. Alerts are triggered when predefined thresholds are exceeded, allowing administrators to address problems promptly. These systems can also provide valuable insights into the root cause of failures, aiding in proactive maintenance and reducing downtime.

For instance, monitoring CPU utilization, memory consumption, and network traffic can identify impending issues before they escalate.

Comparison of Data Replication Strategies

| Replication Strategy | Description | Pros | Cons |
|---|---|---|---|
| Synchronous Replication | A write is acknowledged only after it has been applied to all required replicas, so the copies stay in step. | Strong data consistency; acknowledged writes are not lost on failover; replicas can serve up-to-date reads. | Higher write latency; requires high-bandwidth, low-latency network connections; a slow or unreachable replica can stall writes. |
| Asynchronous Replication | The primary acknowledges a write immediately and ships the change to the replicas afterwards. | Lower write latency; tolerates limited bandwidth and higher network latency. | Replicas can lag, so reads from them may be stale; recent writes can be lost if the primary fails before they are replicated. |

This table illustrates the key differences between synchronous and asynchronous replication strategies. Choosing the appropriate strategy depends on the specific needs of the application, considering factors like data consistency requirements, bandwidth limitations, and acceptable latency.
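The difference between the two modes can be made concrete with a toy in-memory model. This is a sketch under simplifying assumptions: the `Primary` and `Replica` classes are hypothetical, there is no network, and "shipping" a change is just a dictionary update.

```python
from collections import deque


class Replica:
    def __init__(self):
        self.data = {}


class Primary:
    def __init__(self, replicas, synchronous):
        self.data = {}
        self.replicas = replicas
        self.synchronous = synchronous
        self.pending = deque()  # changes not yet shipped (asynchronous mode only)

    def write(self, key, value):
        self.data[key] = value
        if self.synchronous:
            # Synchronous: apply to every replica before acknowledging the write.
            for replica in self.replicas:
                replica.data[key] = value
        else:
            # Asynchronous: acknowledge now, replicate later.
            self.pending.append((key, value))

    def flush(self):
        """Ship queued changes to the replicas (the delayed replication step)."""
        while self.pending:
            key, value = self.pending.popleft()
            for replica in self.replicas:
                replica.data[key] = value


sync_replica, async_replica = Replica(), Replica()
sync_primary = Primary([sync_replica], synchronous=True)
async_primary = Primary([async_replica], synchronous=False)

sync_primary.write("balance", 100)
async_primary.write("balance", 100)

sync_visible = sync_replica.data.get("balance")          # already replicated
async_visible_before = async_replica.data.get("balance")  # still missing
async_primary.flush()
async_visible_after = async_replica.data.get("balance")
```

The window between `write` and `flush` in the asynchronous case is exactly where stale reads and lost writes can occur if the primary fails.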

Designing for Fault Tolerance in Databases

Robust database design is critical for cloud applications, ensuring continuous operation despite potential failures. Data integrity and accessibility are paramount, requiring strategies to maintain availability and consistency even during outages or component failures. This necessitates a deep understanding of distributed database architectures and the specific fault tolerance mechanisms employed.

Effective fault tolerance in databases protects data integrity and availability during unexpected events. This reliability is essential for maintaining user trust and operational continuity. Downtime or data loss can have significant financial and reputational consequences, which is why proactive strategies are necessary.

Importance of Database Fault Tolerance in Cloud Applications

Cloud applications often rely heavily on databases for storing and retrieving information. Unplanned outages or failures in database components can severely impact application performance and user experience. A well-designed database system, with robust fault tolerance mechanisms, ensures minimal disruption to the application and maintains data integrity.

Techniques for Ensuring Data Consistency and Availability in Distributed Databases

Distributed databases, common in cloud deployments, are composed of multiple nodes spread across various locations. Maintaining consistency and availability in such environments requires sophisticated strategies. Replication is a fundamental technique, where data is duplicated across multiple nodes. This redundancy allows for continued operation even if some nodes fail. Quorum-based approaches require a write to be acknowledged by a minimum number of replicas (often a majority) before it counts as committed, so that every read quorum overlaps with the latest write quorum; the remaining replicas catch up afterwards.
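The usual quorum rule of thumb is that, with N replicas, a write quorum W and a read quorum R overlap whenever W + R > N. The following sketch checks that condition and picks the newest value from a set of read responses. The function names and the `(version, value)` response format are assumptions made for illustration, not the API of any particular database.

```python
def majority(n):
    """Smallest number of replicas that forms a majority of n."""
    return n // 2 + 1


def quorums_overlap(n, w, r):
    """True if every read quorum shares at least one replica with every write quorum."""
    return w + r > n


def quorum_read(responses, r):
    """Return the value with the highest version among at least r responses.

    responses is a list of (version, value) pairs from individual replicas.
    """
    if len(responses) < r:
        raise RuntimeError("not enough replicas responded to form a read quorum")
    version, value = max(responses, key=lambda pair: pair[0])
    return value


# Three replicas, majority quorums for both reads and writes.
n = 3
w = r = majority(n)
safe = quorums_overlap(n, w, r)

# One replica still holds the old version; the quorum read returns the newer one.
latest = quorum_read([(7, "shipped"), (6, "packed")], r)
```

With `n = 3` and `w = r = 2`, one replica can be unavailable and both reads and writes still succeed.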

Use of Distributed Transaction Management Systems

Distributed transaction management systems play a vital role in maintaining data consistency across multiple databases or data centers. These systems coordinate and manage transactions that span multiple database nodes. Using two-phase commit protocols or other consensus algorithms, they ensure that all updates are applied correctly, maintaining data integrity even in the presence of network failures or node failures.
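The core idea of two-phase commit can be sketched as follows. This is a deliberately simplified model: the `Participant` class and `two_phase_commit` function are hypothetical, and real implementations also need durable logs, timeouts, and handling for a coordinator that fails between the two phases.

```python
class Participant:
    def __init__(self, name, can_commit=True):
        self.name = name
        self.can_commit = can_commit
        self.state = "init"

    def prepare(self):
        # Phase 1: vote yes only if the local changes can be made durable.
        self.state = "prepared" if self.can_commit else "aborted"
        return self.can_commit

    def commit(self):
        self.state = "committed"

    def rollback(self):
        self.state = "aborted"


def two_phase_commit(participants):
    # Phase 1 (prepare): ask every participant to vote.
    votes = [p.prepare() for p in participants]
    # Phase 2 (commit or abort): commit only on a unanimous yes.
    if all(votes):
        for p in participants:
            p.commit()
        return True
    for p in participants:
        p.rollback()
    return False


all_ready = [Participant("orders"), Participant("payments")]
committed = two_phase_commit(all_ready)

one_refuses = [Participant("orders"), Participant("payments", can_commit=False)]
aborted = not two_phase_commit(one_refuses)
```

A single "no" vote rolls back every participant, which is how the protocol keeps the nodes from ending up with partially applied updates.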

Comparison of Database Systems in Terms of Fault Tolerance

| Database System | Replication Strategy | Consistency Mechanism | Transaction Management | Fault Tolerance Features |
|---|---|---|---|---|
| Relational Database Management Systems (RDBMS) | Primary-replica replication, synchronous or asynchronous; synchronous mode can add write latency | ACID properties, but might require careful configuration for distributed scenarios | Two-phase commit protocol, but can be complex in distributed environments | Good for single-site scenarios, but may need specialized clustering solutions for high availability |
| NoSQL Document Databases | Various replication strategies (e.g., asynchronous, master-slave), offering trade-offs between consistency and performance | Often relaxed consistency models, like eventual consistency | Transaction support varies significantly | Well-suited for highly scalable applications, but requiring careful consideration of consistency requirements |
| NoSQL Key-Value Stores | Often use master-slave replication models | Generally offer eventual consistency | Limited transaction support, often focusing on atomic operations | Excellent for high-volume, read-heavy applications, but less suited for complex transactions |
| NewSQL Databases | Support various replication strategies and high availability models | Offer strong consistency guarantees, often with ACID-like properties | Built-in distributed transaction management | Good balance of performance, scalability, and strong consistency |

Note: The table highlights key aspects of fault tolerance for different database systems. Specific implementation details and performance characteristics can vary depending on the specific database system and its configuration. The choice of database system should be carefully considered in relation to the specific requirements of the application.

Implementing Fault Tolerance at the Infrastructure Level

Robust fault tolerance in cloud deployments hinges on a resilient infrastructure. This involves proactive design choices that minimize the impact of failures, ensuring high availability and minimal service disruption. A well-designed cloud infrastructure can absorb failures at various levels, maintaining service continuity.

Effective cloud infrastructure fault tolerance extends beyond simply replicating components. It necessitates a holistic approach, integrating various technologies and services to achieve high availability and minimize downtime. This includes virtualization, containerization, auto-scaling, load balancing, and carefully planned database replication strategies.

Virtualization and Containerization Technologies

Virtualization and containerization technologies are crucial components of fault-tolerant cloud infrastructure. Virtual machines (VMs) isolate applications from the underlying hardware, allowing for easier failover and faster recovery. If one VM fails, the others remain unaffected. Containerization, through technologies like Docker, provides further isolation and portability, enhancing the resilience of applications by packaging them with their dependencies. This modularity simplifies deployment and recovery, enabling quick failover to redundant instances.

This approach enhances fault isolation and reduces the impact of failures.

Cloud Infrastructure Services

Cloud infrastructure services play a critical role in achieving fault tolerance. Auto-scaling dynamically adjusts the number of resources (e.g., VMs, instances) based on demand and automatically replaces failing instances. This ensures consistent performance and availability, mitigating the effects of unexpected spikes in user load or resource depletion. Load balancing distributes traffic across multiple instances, so that no single instance is overwhelmed or becomes a single point of failure.

This sophisticated approach proactively handles anticipated or sudden surges in user activity.
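A simple way to picture an auto-scaling decision is a proportional rule: scale the instance count by the ratio of observed to target utilization, then clamp it to a configured range. The sketch below assumes average CPU percentage as the metric; the function name, the 60% target, and the minimum and maximum bounds are illustrative assumptions rather than any provider's defaults.

```python
import math


def desired_instances(current, cpu_percent, target_percent=60, minimum=2, maximum=10):
    """Return the instance count that would bring average CPU near the target."""
    if current < 1:
        raise ValueError("current must be at least 1")
    # Scale the fleet in proportion to how far utilization is from the target.
    proposed = math.ceil(current * cpu_percent / target_percent)
    # Clamp: never drop below a redundant minimum, never exceed the budgeted maximum.
    return max(minimum, min(maximum, proposed))


scale_out = desired_instances(current=4, cpu_percent=90)  # load spike
scale_in = desired_instances(current=4, cpu_percent=30)   # quiet period
capped = desired_instances(current=8, cpu_percent=95)     # hits the maximum
```

Keeping `minimum` at two or more preserves redundancy even when demand is low, which ties the scaling policy back to fault tolerance rather than cost alone.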

Designing a Robust Cloud Infrastructure for High Availability

Designing a high-availability cloud infrastructure requires careful planning. Redundancy is key; multiple instances of critical components, such as servers, storage, and networking, are deployed across different zones or regions. Geographic distribution reduces the risk of widespread outages caused by natural disasters or localized failures. This geographically diverse infrastructure ensures continuous operation even if one region experiences an outage.

A critical element is the implementation of robust monitoring and alerting systems to detect potential issues early. This proactive approach enables swift intervention and recovery.

Key Infrastructure Components in Fault Tolerance

| Component | Description | Fault Tolerance Mechanism |
|---|---|---|
| Virtual Machines (VMs) | Virtualized computing environments | Redundant VMs across multiple hosts; automatic failover |
| Containers | Lightweight, portable application packages | Redundant container instances; orchestration tools for failover |
| Auto-Scaling | Dynamic resource provisioning based on demand | Adapts to workload fluctuations; maintains capacity during failures |
| Load Balancing | Distributes traffic across multiple instances | Prevents overload on individual instances; ensures even resource utilization |
| Redundant Storage | Multiple copies of data on different storage devices | Data protection against storage failures; quick recovery in case of failures |
| Geographic Distribution | Deploying resources across different regions | Mitigation of regional outages; ensures global availability |

Implementing Fault Tolerance for Microservices

Designing fault-tolerant microservices is crucial for building robust and reliable cloud applications. Microservices, by their nature, are distributed and independent. This distributed architecture, while offering benefits like scalability and agility, introduces new challenges in ensuring continuous operation in the face of failures. Effective fault tolerance strategies are essential to mitigate these risks and maintain service availability.

Microservice Architecture Design Considerations

Microservices architectures demand careful consideration of dependencies and communication patterns. Designing for fault tolerance requires understanding the potential failure points within each microservice and between them. Decoupling microservices through well-defined APIs is a key strategy for limiting the impact of failures in one service on others. Employing asynchronous communication methods like message queues can further enhance resilience by allowing services to operate independently even if one service is experiencing temporary issues.
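The decoupling effect of a message queue can be shown with an in-process stand-in. In this sketch Python's `queue.Queue` plays the role of a message broker, and the `place_order` and `run_billing_worker` functions are hypothetical services; a real deployment would use a durable, networked broker.

```python
from queue import Queue

order_events = Queue()  # hypothetical stand-in for a message broker


def place_order(order_id):
    """Producer: publish the event and return without waiting for billing."""
    order_events.put({"order_id": order_id})
    return "accepted"


def run_billing_worker():
    """Consumer: process whatever has accumulated in the queue."""
    billed = []
    while not order_events.empty():
        event = order_events.get()
        billed.append(event["order_id"])
        order_events.task_done()
    return billed


# Billing is "down": orders are still accepted and wait in the queue.
statuses = [place_order(order_id) for order_id in (101, 102, 103)]
backlog = order_events.qsize()

# Billing recovers and works through the backlog in arrival order.
billed = run_billing_worker()
```

The ordering service never sees the billing outage; the only visible effect is a temporary backlog.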

Handling Failures Within Individual Microservices

Robust error handling within individual microservices is vital. Implementations should anticipate and gracefully handle various types of failures, including network issues, database failures, and internal service errors. Comprehensive logging and monitoring are crucial for understanding the root cause of failures. Retries, timeouts, and circuit breakers are further strategies for ensuring service availability.
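A retry with exponential backoff is one of the simplest of these strategies. The helper below is a minimal sketch: `call_with_retries` and its parameters are hypothetical names, only `ConnectionError` is treated as transient, and the `sleep` argument is injectable so the example can run without real delays.

```python
import time


def call_with_retries(operation, attempts=3, base_delay=0.1, sleep=time.sleep):
    """Call operation(), retrying transient failures with exponential backoff."""
    for attempt in range(attempts):
        try:
            return operation()
        except ConnectionError:
            if attempt == attempts - 1:
                raise  # out of attempts: surface the error to the caller
            # Wait base_delay, then 2x, 4x, ... before the next attempt.
            sleep(base_delay * 2 ** attempt)


calls = {"count": 0}


def flaky_lookup():
    """Fails twice with a transient error, then succeeds."""
    calls["count"] += 1
    if calls["count"] < 3:
        raise ConnectionError("transient network error")
    return "ok"


delays = []  # record the requested delays instead of actually sleeping
result = call_with_retries(flaky_lookup, attempts=4, base_delay=0.1, sleep=delays.append)
```

In practice retries are usually combined with a per-call timeout and some random jitter on the delay, so that many clients do not retry in lockstep against a recovering service.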

Circuit Breakers and Other Resilience Patterns

Circuit breakers are essential resilience patterns for preventing cascading failures. A circuit breaker acts as a protective mechanism: when calls to a service fail repeatedly, the breaker opens (trips) and rejects further calls to that service for a period, then lets traffic through again once the service has recovered. Other resilience patterns, like fallback mechanisms and bulkheads, provide alternative paths and isolate potential points of failure.
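The state changes of a circuit breaker can be sketched in a small class. This is an illustrative implementation under simple assumptions: the names `CircuitBreaker` and `CircuitOpenError` are hypothetical, failures are counted consecutively, and the clock is injectable so the example does not have to wait for the reset timeout.

```python
import time


class CircuitOpenError(Exception):
    """Raised when a call is rejected because the circuit is open."""


class CircuitBreaker:
    def __init__(self, failure_threshold=3, reset_timeout=30.0, clock=time.monotonic):
        self.failure_threshold = failure_threshold
        self.reset_timeout = reset_timeout
        self.clock = clock
        self.failures = 0
        self.opened_at = None  # None means the circuit is closed

    def call(self, operation):
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_timeout:
                raise CircuitOpenError("circuit is open; call rejected")
            # Timeout elapsed: half-open, let one trial call through.
        try:
            result = operation()
        except Exception:
            self.failures += 1
            if self.failures >= self.failure_threshold:
                self.opened_at = self.clock()  # open (or re-open) the circuit
            raise
        # Success closes the circuit and clears the failure count.
        self.failures = 0
        self.opened_at = None
        return result


now = [0.0]  # fake clock so the example runs instantly
breaker = CircuitBreaker(failure_threshold=2, reset_timeout=10.0, clock=lambda: now[0])


def unavailable():
    raise ConnectionError("inventory service unavailable")


outcomes = []
for _ in range(3):
    try:
        breaker.call(unavailable)
    except CircuitOpenError:
        outcomes.append("rejected")
    except ConnectionError:
        outcomes.append("failed")

now[0] = 11.0  # the reset timeout has passed and the service has recovered
outcomes.append(breaker.call(lambda: "ok"))
```

The third call is rejected without touching the failing service, which is what keeps a struggling dependency from being hammered while it recovers.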

Best Practices for Building Fault-Tolerant Microservices

| Best Practice | Description |
|---|---|
| Dependency Management | Minimize dependencies between microservices. Avoid tight coupling that can lead to cascading failures. Employ well-defined interfaces and contracts to ensure decoupling. |
| Asynchronous Communication | Utilize asynchronous communication patterns (e.g., message queues) to reduce the impact of slow or unresponsive services on other parts of the system. This promotes loose coupling and enhances responsiveness. |
| Robust Error Handling | Implement comprehensive error handling mechanisms to catch and gracefully handle various types of failures within individual microservices. Employ logging to track failures and monitor their frequency. |
| Circuit Breakers | Implement circuit breakers to prevent cascading failures by temporarily blocking calls to a failing service. This helps isolate failures and maintain overall system health. |
| Monitoring and Logging | Establish comprehensive monitoring and logging systems to track the health of microservices. Analyze logs to identify patterns and trends in failures. This allows for proactive issue resolution. |
| Automated Testing | Implement automated testing procedures to validate the fault tolerance of microservices. Ensure that the system can handle failures without significant disruptions. |

Security Considerations in Fault-Tolerant Systems

Robust fault tolerance in cloud systems necessitates a strong security posture. Ignoring security during design can lead to significant vulnerabilities, potentially exposing sensitive data and critical functionalities to malicious actors. This section explores crucial security considerations in fault-tolerant cloud designs, focusing on protecting replicated data and systems against attacks during failures.

Securing fault-tolerant systems involves more than simply replicating components; it requires a holistic approach encompassing data encryption, access controls, and proactive threat modeling. A comprehensive security strategy is essential to maintain data integrity, confidentiality, and availability, even under stress or failure conditions.

Importance of Security in Fault-Tolerant Designs

Fault tolerance, while improving system resilience, can also amplify security risks if not adequately addressed. A failure in one component, especially one responsible for security, can compromise the entire system. Protecting against unauthorized access, data breaches, and malicious attacks during failures is paramount. This necessitates strong authentication mechanisms, secure communication channels, and robust access control policies across all replicated components.

Securing Replicated Data and Systems

Ensuring the security of replicated data and systems is critical. Data encryption throughout the replication process, both in transit and at rest, is fundamental. This safeguards data from unauthorized access, even if a component fails. Additionally, access controls should be granular and rigorously enforced across all replicated instances, preventing unauthorized modifications or deletions. Regular security audits and penetration testing of replicated systems are vital for identifying and patching vulnerabilities.

Protecting Against Malicious Attacks During Failures

Malicious actors may attempt to exploit system vulnerabilities during failures. This includes denial-of-service attacks targeting critical components or attempts to manipulate data replication mechanisms. Implementing intrusion detection systems and employing anomaly detection techniques can identify and respond to suspicious activity during failures. A robust logging and monitoring infrastructure is essential to track events and respond to any deviations from normal behavior, allowing swift remediation.

Common Security Threats and Mitigations

| Security Threat | Description | Mitigation Strategy |
|---|---|---|
| Data breaches during replication | Unauthorized access to replicated data during failures or component outages. | Data encryption at rest and in transit, secure communication protocols, and strict access controls. |
| Denial-of-service attacks on critical components | Malicious attempts to overwhelm system resources, potentially disrupting data replication and causing service outages. | Network traffic filtering, rate limiting, intrusion detection systems, and redundant infrastructure. |
| Unauthorized access to replicated systems | Malicious actors gaining access to replicated instances to manipulate data or gain control. | Strong authentication mechanisms, multi-factor authentication, and granular access control policies. |
| Exploitation of vulnerabilities during failures | Malicious actors exploiting vulnerabilities in components during system failures. | Regular security audits, penetration testing, and vulnerability scanning of all replicated components. |
| Manipulation of replication mechanisms | Attempts to alter data replication processes for malicious purposes. | Auditing and monitoring of replication processes, implementing checksums or other validation methods to detect unauthorized modifications. |
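The checksum-style validation mentioned in the last row can be sketched with a keyed hash. A plain checksum catches accidental corruption, while a keyed one (HMAC) also resists an attacker who can modify the payload but does not hold the key. The function names and the key below are hypothetical; in practice the key would come from a secrets manager rather than source code.

```python
import hashlib
import hmac

REPLICATION_KEY = b"example-key-for-illustration-only"  # assumption: shared secret


def sign(payload: bytes, key: bytes) -> str:
    """Compute an HMAC-SHA256 tag for a replicated payload."""
    return hmac.new(key, payload, hashlib.sha256).hexdigest()


def verify(payload: bytes, key: bytes, expected_tag: str) -> bool:
    """Check a received payload against its tag using a constant-time comparison."""
    return hmac.compare_digest(sign(payload, key), expected_tag)


record = b'{"account": 42, "balance": 100}'
tag = sign(record, REPLICATION_KEY)

intact = verify(record, REPLICATION_KEY, tag)
tampered = verify(b'{"account": 42, "balance": 9999}', REPLICATION_KEY, tag)
```

A replica that receives a payload failing this check can refuse to apply it and raise an alert instead of silently propagating the modification.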

Monitoring and Alerting for Fault Tolerance

Effective fault tolerance in cloud environments relies heavily on robust monitoring and alerting systems. These systems provide the crucial feedback loop needed to identify and respond to issues proactively, minimizing downtime and ensuring application availability. Comprehensive monitoring allows for the detection of subtle anomalies that could escalate into significant problems, enabling timely intervention and restoration.

Monitoring Tools and Techniques

Monitoring cloud environments requires a multifaceted approach, encompassing various tools and techniques tailored to specific needs. This involves tracking performance metrics, resource utilization, and application behavior to identify potential failures. Leveraging logging and metrics aggregation is crucial for gaining comprehensive insights into system health. Centralized logging platforms are vital for collecting and analyzing logs from diverse sources, facilitating efficient troubleshooting.

Alerting for Critical System Failures

Setting up alerts for critical system failures is a critical component of a fault-tolerant system. These alerts must be configured to trigger when specific thresholds are breached, such as exceeding CPU utilization or encountering database connection errors. Alerts should be tailored to the specific applications and services being monitored, providing actionable insights into the nature and severity of the issue.

Furthermore, different levels of alert severity (e.g., informational, warning, critical) help prioritize responses and ensure prompt attention to the most pressing problems.
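Threshold-based alerting with severity levels can be sketched as a small evaluation function. The metric names and threshold values below are illustrative assumptions, not recommendations, and metrics without a configured threshold are simply ignored.

```python
# Hypothetical thresholds: metric name -> (warning level, critical level).
THRESHOLDS = {
    "cpu_percent": (75, 90),
    "db_connection_errors": (1, 5),
}


def evaluate(metrics):
    """Return (severity, metric, value) alerts, most severe first."""
    alerts = []
    for name, value in metrics.items():
        if name not in THRESHOLDS:
            continue  # no alert rule configured for this metric
        warning, critical = THRESHOLDS[name]
        if value >= critical:
            alerts.append(("critical", name, value))
        elif value >= warning:
            alerts.append(("warning", name, value))
    # Stable sort: critical alerts move ahead of warnings.
    alerts.sort(key=lambda alert: 0 if alert[0] == "critical" else 1)
    return alerts


alerts = evaluate({"cpu_percent": 80, "db_connection_errors": 7, "memory_percent": 99})
```

Ordering alerts by severity is one simple way to make sure the most pressing problem is the first thing an operator or an automated handler sees.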

Automated Responses to Detected Issues

Automated responses to detected issues are paramount for achieving true fault tolerance. A critical aspect is the integration of automated recovery mechanisms into the monitoring and alerting systems. When an alert triggers, predefined actions should automatically execute, such as scaling up resources, initiating failover mechanisms, or triggering remediation scripts. The automation of these responses significantly reduces the response time to incidents, limiting potential damage and minimizing downtime.

This minimizes the risk of prolonged outages, thereby improving the overall system reliability.
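One way to wire alerts to automated responses is a lookup table from alert type to remediation action, with escalation to a human as the default. Everything in this sketch is hypothetical: the action functions only return a description of what they would do, where a real system would call the cloud provider's APIs.

```python
def scale_up(alert):
    return f"scale up: {alert['metric']} at {alert['value']}"


def fail_over(alert):
    return f"fail over: {alert['metric']} reported unhealthy"


# Hypothetical runbook: (metric, severity) -> automated action.
RUNBOOK = {
    ("cpu_percent", "critical"): scale_up,
    ("primary_db_health", "critical"): fail_over,
}


def respond(alert):
    """Run the predefined action for an alert, or escalate if none is defined."""
    action = RUNBOOK.get((alert["metric"], alert["severity"]))
    if action is None:
        return "escalate to on-call engineer"
    return action(alert)


cpu_response = respond({"metric": "cpu_percent", "severity": "critical", "value": 95})
db_response = respond({"metric": "primary_db_health", "severity": "critical", "value": 0})
unknown_response = respond({"metric": "disk_latency_ms", "severity": "warning", "value": 40})
```

Keeping an explicit fallback for unmapped alerts avoids the failure mode where an unexpected condition triggers no response at all.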

Monitoring Tools for Cloud Environments

Effective monitoring relies on the appropriate selection of tools tailored to specific needs. The choice of tools depends on factors like the cloud provider, the specific applications, and the desired level of detail. A variety of tools offer different functionalities, and careful evaluation is crucial to ensure the right tools are used.

| Monitoring Tool | Suitable for | Key Features |
|---|---|---|
| CloudWatch (AWS) | AWS environments | Metrics collection, logging, dashboards, alarms, and tracing. |
| Azure Monitor (Azure) | Azure environments | Metrics, logs, traces, and application performance monitoring. |
| Datadog | Multi-cloud environments | Comprehensive monitoring, alerting, and analysis across various applications and services. |
| Prometheus | Open-source solutions | Metrics collection, time series database, and flexible alerting system. |
| Grafana | Data visualization | Visualization and dashboards for monitoring metrics from various sources. |

Cost Optimization in Fault Tolerant Design

Cloud deployments, while offering unparalleled scalability and availability, can come with significant cost implications, particularly when implementing fault tolerance. Balancing the need for robust system resilience with financial constraints is a critical aspect of successful cloud architecture. This section explores the trade-offs between fault tolerance and cost, providing strategies for optimizing costs without sacrificing essential resilience.

Optimizing cloud costs in fault-tolerant designs requires a meticulous approach that considers the specific needs of the application and the potential impact of different strategies. It’s not simply a matter of choosing the cheapest option; rather, it’s about finding the right balance between cost-effectiveness and system reliability.

Trade-offs Between Fault Tolerance and Cost

Fault tolerance often necessitates redundancy, which directly increases operational expenditure. For instance, replicating data across multiple availability zones incurs storage and network costs. Choosing the right level of redundancy, therefore, becomes crucial. Over-engineering fault tolerance can lead to unnecessary expenditure, while insufficient redundancy risks data loss or service disruption, potentially resulting in higher costs associated with recovery and downtime.
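The diminishing return of each extra replica can be estimated with a short calculation. The sketch assumes that replicas fail independently and that the service is up whenever at least one replica is up; real failures are often correlated, so the results are an optimistic upper bound. The 99% single-replica availability is an illustrative figure.

```python
HOURS_PER_YEAR = 24 * 365


def combined_availability(single, replicas):
    """Availability of a group that is up when at least one replica is up."""
    return 1 - (1 - single) ** replicas


def expected_downtime_hours(availability):
    """Expected hours of downtime per year at the given availability."""
    return (1 - availability) * HOURS_PER_YEAR


for replicas in (1, 2, 3):
    availability = combined_availability(0.99, replicas)
    downtime = expected_downtime_hours(availability)
    print(f"{replicas} replica(s): {availability:.6f} available, {downtime:.2f} h/year down")
```

Under these assumptions, going from one replica to two cuts expected downtime from roughly 87.6 hours to under one hour per year, while a third replica saves less than an hour more. Comparing that saving with the cost of each additional replica is the core of the cost-benefit analysis.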

Strategies for Optimizing Costs

Several strategies can help optimize costs without compromising fault tolerance. These include:

- Leveraging Cloud-Native Services: Cloud providers offer a range of managed services with fault tolerance built in, such as distributed databases and message queues. Using these services can be more cost-effective than building and operating redundant infrastructure yourself. For example, Amazon DynamoDB replicates data across multiple Availability Zones within a Region automatically, so a workload that fits its key-value access model can avoid the effort of running and replicating a self-managed database.

- Employing Intelligent Scaling Strategies: Dynamic scaling adjusts resources based on demand. By proactively adjusting the number of instances or replicas based on usage patterns, organizations can avoid unnecessary costs during periods of low activity. Cloud providers offer tools that enable automatic scaling based on metrics such as CPU utilization or request volume.

- Choosing Appropriate Fault Tolerance Levels: Implementing fault tolerance should align with the specific risk tolerance and potential impact of service disruptions. A critical application might justify higher levels of redundancy, while a less critical application may not require such extensive measures. A meticulous risk assessment and cost-benefit analysis are essential.

- Optimizing Infrastructure Configuration: Careful configuration of cloud infrastructure can significantly impact costs. Optimizing storage options, choosing appropriate instance types, and implementing efficient networking configurations can reduce expenditure without compromising resilience. For instance, keeping backups on lower-cost storage tiers or running interruptible, non-critical tasks on spot instances can reduce costs, provided the critical path stays on capacity that cannot be reclaimed at short notice.
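The dynamic scaling strategy in the list above can be sketched as a proportional rule: scale the replica count by the ratio of observed load to target load, then clamp the result. This is an illustrative sketch rather than any provider's actual algorithm; the function name, the default bounds, and the choice of CPU utilization as the metric are assumptions. The floor of two replicas reflects the fault tolerance requirement that a single instance failure should not take the service down.

```python
import math


def desired_replicas(current: int, observed_cpu: float, target_cpu: float,
                     min_replicas: int = 2, max_replicas: int = 10) -> int:
    """Return a replica count that moves average CPU toward `target_cpu`.

    `min_replicas` keeps redundancy during quiet periods;
    `max_replicas` caps spend during spikes.
    """
    if current < 1:
        raise ValueError("current must be at least 1")
    if target_cpu <= 0:
        raise ValueError("target_cpu must be positive")
    if min_replicas > max_replicas:
        raise ValueError("min_replicas must not exceed max_replicas")
    # Proportional rule: more load per replica than targeted means more replicas.
    raw = math.ceil(current * observed_cpu / target_cpu)
    return max(min_replicas, min(max_replicas, raw))


if __name__ == "__main__":
    print(desired_replicas(current=4, observed_cpu=90, target_cpu=60))  # scale out
    print(desired_replicas(current=4, observed_cpu=10, target_cpu=60))  # scale in, floor applies
```

Setting the lower bound above one is the design choice that ties cost optimization back to resilience: the scaler is free to save money when traffic is low, but never by removing the last redundant instance.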

Examples of Balancing Fault Tolerance with Cost Efficiency

A retail company, for example, could run its e-commerce platform on a multi-region architecture, replicating critical data such as orders and inventory across regions so that the storefront stays available if one region fails. To keep costs down, it might run non-critical, interruptible work such as background processing or test workloads on spot instances, and reserve on-demand capacity for the customer-facing path.

Comparing Costs of Different Fault Tolerance Approaches

| Fault Tolerance Approach | Example Cost (USD per Month) | Advantages | Disadvantages |
|---|---|---|---|
| Single Region, No Redundancy | $500 | Low initial cost | High risk of downtime and data loss |
| Multi-Region Replication (Data) | $1000 | High availability, reduced downtime | Increased storage and network costs |
| Multi-Region Replication (Application) | $1500 | High availability, fault tolerance at application level | Higher infrastructure cost, complex deployment |
| Cloud-Native Services (e.g., DynamoDB) | $800 | Simplified management, cost-effective scalability | Potential vendor lock-in |

Note: These costs are examples and can vary significantly depending on specific usage patterns, instance types, and cloud provider.
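One way to compare these approaches is to add the expected cost of downtime to the infrastructure bill. The sketch below uses the example monthly costs from the table above; the expected downtime hours per month for each approach are invented for illustration and are not measurements, and the class and function names are hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Option:
    name: str
    monthly_infra_usd: float        # example figure from the table above
    expected_downtime_hours: float  # ASSUMPTION: illustrative value only


OPTIONS: List[Option] = [
    Option("Single Region, No Redundancy", 500, 4.0),
    Option("Multi-Region Replication (Data)", 1000, 1.0),
    Option("Multi-Region Replication (Application)", 1500, 0.25),
    Option("Cloud-Native Services", 800, 1.0),
]


def total_monthly_cost(option: Option, downtime_cost_per_hour: float) -> float:
    """Infrastructure cost plus the expected cost of downtime."""
    return option.monthly_infra_usd + option.expected_downtime_hours * downtime_cost_per_hour


def cheapest(options: List[Option], downtime_cost_per_hour: float) -> Option:
    """Pick the option with the lowest expected total cost."""
    return min(options, key=lambda o: total_monthly_cost(o, downtime_cost_per_hour))


if __name__ == "__main__":
    for hourly in (50, 200, 1000):
        best = cheapest(OPTIONS, hourly)
        print(f"downtime at ${hourly}/h -> {best.name} "
              f"(${total_monthly_cost(best, hourly):.2f}/month)")
```

Under these assumed numbers the preferred approach changes as the hourly cost of downtime rises, which is the point of the exercise: the cheapest infrastructure is only the cheapest system when downtime is inexpensive.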

Case Studies of Fault-Tolerant Cloud Systems

Real-world applications and services often leverage fault tolerance to ensure continuous operation even during unexpected disruptions. Examining these case studies provides valuable insights into the strategies and technologies employed to achieve high availability and resilience. Understanding the benefits and drawbacks of different approaches allows for informed decisions when designing cloud systems.

Amazon Web Services (AWS) Cloud Infrastructure

AWS applies fault tolerance throughout its infrastructure, combining redundant components, geographically distributed data centers, and monitoring tools. Its Regions are divided into multiple isolated Availability Zones, so a workload deployed across zones can keep running when one zone fails. For example, AWS’s global network of data centers provides multiple paths for data transmission and processing, mitigating single points of failure.

Google Cloud Platform (GCP) Fault Tolerance Mechanisms

GCP employs various fault tolerance strategies, including replicated data storage and automatic failover mechanisms. The use of distributed databases and replicated services across multiple zones minimizes downtime. Their approach includes proactive monitoring of system health and automated responses to detected issues, effectively minimizing the impact of potential failures.

Netflix Streaming Service

Netflix’s globally distributed streaming service demonstrates sophisticated fault tolerance. Their system leverages a complex network of servers and data centers to deliver content quickly and reliably. This involves load balancing, content replication, and dynamic routing to ensure continuous service, even during peak demand or regional outages. Their use of microservices architecture allows for independent scaling and deployment, enhancing resilience against failures.

Detailed Explanation of a Fault Tolerance Implementation (AWS S3)

Amazon S3 (Simple Storage Service) utilizes a multi-layered approach to achieve high availability and data durability. For its standard storage classes, S3 stores data redundantly across multiple Availability Zones within a region, meaning the data is held in physically separate data centers. If one data center fails, the data remains accessible from the other zones.

S3 also employs erasure coding to protect against data loss. This technique splits an object into fragments, computes additional parity fragments, and distributes all of them across multiple storage devices. If one or more drives fail, the object can be reconstructed from the remaining fragments without keeping a full extra copy for every failure it tolerates. Together, multi-zone redundancy and erasure coding underpin S3's design target of 99.999999999% (eleven nines) durability.
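To illustrate the idea behind erasure coding, here is a minimal sketch of the simplest possible scheme: `k` data shards plus one XOR parity shard, which can repair the loss of any single shard. This is not S3's actual encoding, which is not described in this guide; production systems use codes that tolerate multiple simultaneous losses. The function names are hypothetical.

```python
from functools import reduce
from typing import List, Optional


def xor_bytes(a: bytes, b: bytes) -> bytes:
    """Byte-wise XOR of two equal-length byte strings."""
    return bytes(x ^ y for x, y in zip(a, b))


def encode(data: bytes, k: int) -> List[bytes]:
    """Split `data` into k zero-padded shards and append one XOR parity shard."""
    if k < 2:
        raise ValueError("k must be at least 2")
    shard_len = -(-len(data) // k)  # ceiling division
    padded = data.ljust(shard_len * k, b"\0")
    shards = [padded[i * shard_len:(i + 1) * shard_len] for i in range(k)]
    return shards + [reduce(xor_bytes, shards)]


def reconstruct(shards: List[Optional[bytes]], original_len: int) -> bytes:
    """Rebuild the original data when at most one shard (marked None) is lost."""
    missing = [i for i, s in enumerate(shards) if s is None]
    if len(missing) > 1:
        raise ValueError("single parity can repair only one lost shard")
    repaired = list(shards)
    if missing:
        # XOR of all surviving shards equals the lost shard.
        survivors = [s for s in shards if s is not None]
        repaired[missing[0]] = reduce(xor_bytes, survivors)
    # Drop the parity shard and the zero padding.
    return b"".join(repaired[:-1])[:original_len]


if __name__ == "__main__":
    payload = b"fault tolerance in the cloud"
    stored = encode(payload, k=4)
    stored[2] = None  # simulate a failed drive
    assert reconstruct(stored, len(payload)) == payload
```

With four data shards and one parity shard, the storage overhead is 25% rather than the 100% a full second copy would cost, which is why erasure coding is attractive for durable storage at scale.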

Ending Remarks

Designing for fault tolerance in the cloud is a multifaceted process that demands careful consideration of many factors. This guide has covered the key principles and strategies for building resilient systems, from understanding deployment models to implementing fault-tolerant microservices and optimizing costs while maintaining reliability.

By applying these principles, organizations can mitigate risks and keep their cloud-based applications and services highly available.

FAQ Insights

What are some common causes of failures in cloud environments?

Common causes include hardware failures, network outages, software glitches, and security breaches. These can range from simple component failures to more complex system-level issues.

How can I choose the right fault tolerance strategy for my application?

The optimal strategy depends on factors like the criticality of the application, the expected volume of traffic, the budget constraints, and the desired level of redundancy. Consider the trade-offs between cost and availability when making your decision.

What role does data replication play in fault tolerance?

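Data replication creates redundant copies of data across different locations or systems, so the data stays available even if one component fails. The replication strategy determines the trade-off between consistency and latency: synchronous replication waits for replicas to acknowledge each write, which keeps copies consistent but adds write latency, while asynchronous replication acknowledges writes immediately and risks losing the most recent writes if the primary fails before they are copied.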

What are the cost implications of implementing fault tolerance?

Implementing fault tolerance often involves increased infrastructure costs due to redundancy and the need for monitoring and management tools. However, the cost of downtime can significantly outweigh these investments, especially for mission-critical applications.