Serverless architecture is revolutionizing software development, offering a paradigm shift from traditional infrastructure management. This approach allows developers to focus on writing code and building applications without the burden of server provisioning, maintenance, and scaling. By abstracting away the underlying infrastructure, serverless computing enables a more agile and efficient development process, leading to faster time-to-market and reduced operational costs.

This analysis will delve into the core advantages of serverless architecture, exploring its impact on cost optimization, scalability, operational overhead, and developer productivity. We will examine how serverless empowers developers to build resilient, event-driven applications that seamlessly integrate with other cloud services, ultimately transforming the way software is designed, deployed, and maintained. Through detailed explanations, examples, and visual aids, this exploration will provide a clear understanding of the benefits serverless offers.

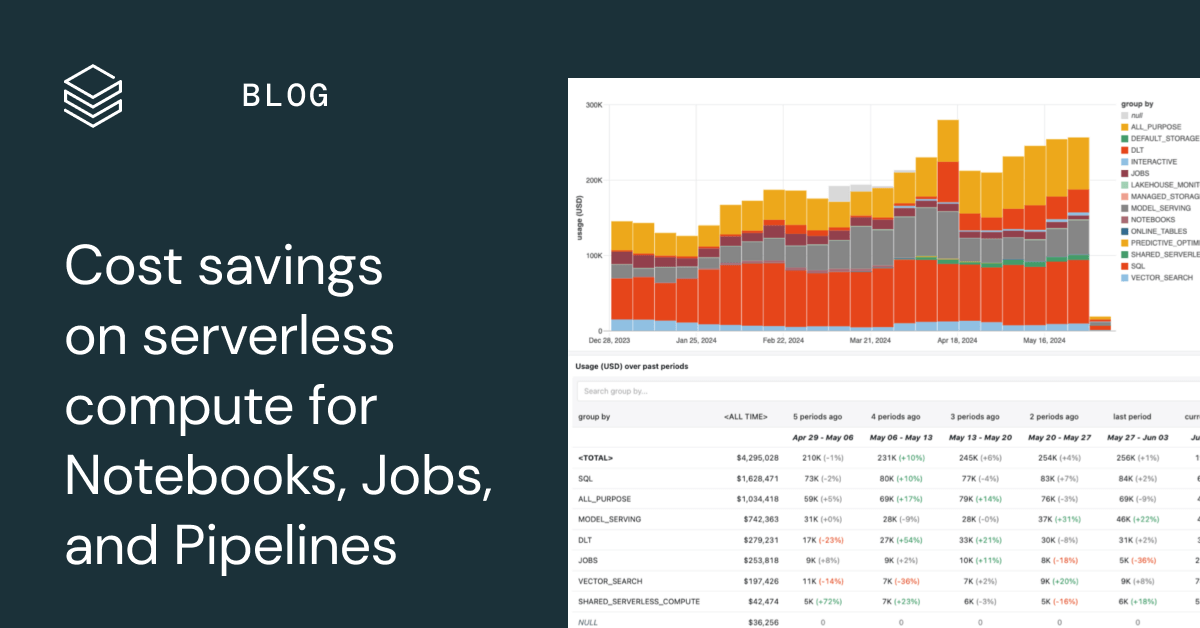

Cost Optimization

Serverless architecture offers significant advantages in cost optimization compared to traditional infrastructure models. By eliminating the need for upfront investments in servers and providing a pay-per-use pricing model, serverless allows developers to significantly reduce operational expenses and optimize resource allocation. This approach shifts the financial burden from fixed infrastructure costs to a model that aligns directly with application usage.

Reducing Infrastructure Costs

Serverless computing fundamentally changes how infrastructure costs are incurred. Traditional models require purchasing, maintaining, and provisioning servers, regardless of actual utilization. This often leads to over-provisioning to handle peak loads, resulting in idle resources and wasted capital. Serverless, however, eliminates the need for these upfront investments.

- Elimination of Server Management: With serverless, the cloud provider manages the underlying infrastructure, including servers, operating systems, and scaling. Developers are freed from these operational responsibilities, reducing the need for dedicated IT staff and associated costs.

- Automatic Scaling and Resource Allocation: Serverless platforms automatically scale resources based on demand. This ensures that applications only consume the resources they need, avoiding over-provisioning and its associated costs. When the application is idle, no resources are consumed, resulting in zero costs.

- Reduced Operational Overhead: Traditional infrastructure requires ongoing maintenance, including patching, security updates, and performance monitoring. Serverless platforms handle these tasks automatically, freeing developers from these time-consuming and costly activities.

Pricing Models in Serverless Computing

Serverless computing primarily employs a pay-per-use pricing model, which contrasts sharply with the fixed costs of traditional infrastructure. This model offers granular control over spending and allows for efficient resource allocation.

- Pay-per-Invocation: Developers are charged for the number of times a function is executed. This model is ideal for event-driven architectures where functions are triggered by specific events, such as file uploads or database updates.

- Pay-per-Execution Time: Costs are based on the duration of the function’s execution, measured in milliseconds or seconds. This model is beneficial for computationally intensive tasks, as it directly reflects the resources consumed by the application.

- Pay-per-Request: Some serverless services, such as API gateways, charge based on the number of requests received. This model is suitable for applications that handle a high volume of API calls.

- Free Tier and Usage Limits: Many serverless providers offer free tiers or generous usage limits, allowing developers to experiment and build applications without incurring significant costs.

Cost Savings Comparison

The following table illustrates a hypothetical comparison of costs between a traditional server-based application and a serverless implementation. This example demonstrates the potential for significant cost savings with serverless.

| Metric | Traditional | Serverless | Savings |

|---|---|---|---|

| Monthly Server Costs (Estimate) | $500 | $50 | $450 |

| Developer Time (Maintenance) | 20 hours | 5 hours | 15 hours |

| Monthly Database Costs (Estimate) | $200 | $150 | $50 |

| Total Monthly Costs (Estimate) | $700 | $200 | $500 |

This table provides a simplified view. Actual cost savings will vary depending on the specific application, usage patterns, and the chosen serverless services. However, it clearly demonstrates the potential for substantial cost reductions by shifting from a fixed-cost infrastructure model to a pay-per-use approach. For instance, if an application experiences periods of low traffic, a traditional server will still incur costs, while a serverless function will only be charged for actual invocations.

Scalability and Elasticity

Serverless architectures inherently excel in scalability and elasticity, offering developers unprecedented control over resource allocation and application performance. This inherent characteristic stems from the event-driven nature of serverless functions and the underlying infrastructure that supports them. The ability to automatically adjust resources based on demand is a core tenet of serverless, providing significant advantages over traditional server-based approaches.

Automatic Scaling Based on Demand

Serverless platforms automatically scale applications in response to incoming traffic or events. This dynamic scaling is achieved through a combination of mechanisms, including:

- Event Triggers: Serverless functions are typically triggered by events, such as HTTP requests, database updates, or scheduled tasks. When an event occurs, the platform automatically provisions the necessary resources to execute the function.

- Concurrent Execution: As demand increases, the serverless platform can launch multiple instances of a function concurrently. This allows the application to handle a large number of requests simultaneously.

- Resource Allocation: The platform dynamically allocates compute resources, such as CPU and memory, to each function instance based on the needs of the incoming requests. This ensures that functions have sufficient resources to execute efficiently.

- Monitoring and Optimization: Serverless platforms continuously monitor the performance of functions and automatically adjust resource allocation and scaling parameters to optimize performance and minimize costs.

The automatic scaling process eliminates the need for developers to manually provision and manage servers, which significantly reduces operational overhead and allows developers to focus on writing code. The platform takes care of all the infrastructure management tasks, such as capacity planning, server provisioning, and load balancing.

Benefits of Automatic Scaling for Handling Traffic Spikes

Automatic scaling in serverless architectures provides significant benefits for handling traffic spikes, ensuring application availability and responsiveness.

- Improved Availability: During traffic spikes, the serverless platform automatically scales up the application to handle the increased load, preventing the application from becoming overloaded and unavailable.

- Enhanced Performance: By scaling up resources, the application can maintain optimal performance, even during peak traffic periods. This ensures a positive user experience.

- Cost Efficiency: Serverless platforms automatically scale down resources when traffic decreases, which helps to minimize costs. Developers only pay for the resources they consume.

- Reduced Operational Overhead: Automatic scaling eliminates the need for manual intervention to scale the application, reducing the operational burden on development teams.

This ability to automatically adjust resources based on demand is particularly valuable for applications with unpredictable traffic patterns, such as e-commerce websites, social media platforms, and event-driven applications. For instance, during a flash sale, an e-commerce website built on a serverless architecture can automatically scale up to handle a surge in traffic, ensuring that customers can complete their purchases without experiencing delays or errors.

Conversely, during periods of low traffic, the application can scale down to conserve resources and reduce costs.

Diagram Illustrating the Scaling Process of a Serverless Function

The scaling process of a serverless function can be visualized through a diagram that illustrates the flow of requests and resource allocation.

Diagram Description:

The diagram depicts the scaling process of a serverless function in response to increasing incoming requests. The diagram consists of several key elements:

- Incoming Requests: Represented by arrows entering the system, these indicate the flow of user requests. Initially, the rate of incoming requests is low.

- Event Trigger: An event trigger, such as an HTTP request or a database update, initiates the execution of the serverless function.

- Serverless Function Instance: Initially, a single instance of the serverless function is running. This instance processes incoming requests.

- Scaling Logic: The serverless platform’s scaling logic monitors the function’s performance and automatically provisions additional function instances when the demand increases. This logic considers factors such as request rate, execution time, and resource utilization.

- Multiple Function Instances: As the incoming request rate increases, the scaling logic triggers the creation of multiple instances of the serverless function. These instances work concurrently to handle the load. Each instance has its own allocated resources (CPU, memory).

- Resource Allocation: The platform dynamically allocates resources to each function instance, ensuring that each instance has sufficient resources to execute efficiently.

- Horizontal Scaling: The diagram illustrates horizontal scaling, where the system adds more instances of the function to handle the increased load. This is in contrast to vertical scaling, where resources are added to a single server.

- Outgoing Responses: Arrows exiting the system represent the responses from the serverless function instances, returning the results to the users.

- Monitoring and Metrics: The diagram highlights the continuous monitoring of function performance through metrics like execution time, error rates, and resource utilization. These metrics inform the scaling decisions.

The diagram visually represents the elastic nature of serverless, where the system seamlessly adjusts the number of function instances and allocated resources to meet the changing demands. The diagram illustrates how the platform handles a surge in traffic by automatically scaling up the number of function instances to handle the increased load.

Reduced Operational Overhead

Serverless architecture significantly diminishes the operational burden traditionally faced by developers. This shift allows developers to focus on core application logic rather than infrastructure management. By abstracting away server administration, serverless platforms provide a more efficient development experience, leading to faster deployment cycles and improved productivity.

Tasks Eliminated with Serverless

Serverless architectures remove the necessity for developers to manage a range of operational tasks. This allows for greater focus on application development and innovation.

- Server Provisioning and Management: Developers no longer need to allocate, configure, or maintain servers. The serverless platform handles the underlying infrastructure, including operating system updates, patching, and capacity planning. This eliminates the need for tasks such as choosing instance types, managing server clusters, and scaling servers manually.

- Operating System Administration: Serverless providers take responsibility for the operating system, including security updates and maintenance. This removes the need for developers to patch, monitor, or troubleshoot OS-related issues.

- Capacity Planning and Scaling: The serverless platform automatically scales resources based on demand. Developers do not need to predict traffic patterns or manually adjust server capacity. This eliminates the risk of over-provisioning or under-provisioning resources.

- Infrastructure Monitoring and Maintenance: Serverless providers offer built-in monitoring and logging capabilities, reducing the need for developers to set up and maintain monitoring tools. This simplifies the process of tracking application performance and identifying potential issues.

- Deployment and Release Management: Serverless platforms often provide streamlined deployment processes, simplifying the process of deploying code updates. This reduces the time and effort required for deployments and enables faster release cycles.

Simplified Deployment and Updates

Serverless architectures provide a streamlined deployment process, simplifying updates and enabling faster release cycles. The focus shifts from infrastructure configuration to code deployment.

- Automated Deployment Pipelines: Serverless platforms typically integrate with CI/CD (Continuous Integration/Continuous Deployment) pipelines. This allows for automated builds, testing, and deployments, reducing manual effort and improving deployment frequency.

- Simplified Code Uploads: Developers can upload code directly to the serverless platform without needing to configure or manage servers. The platform handles the underlying infrastructure, making deployment straightforward. For example, AWS Lambda allows developers to upload code packages (e.g., ZIP files) containing their function code.

- Version Control and Rollbacks: Serverless platforms often provide versioning capabilities, allowing developers to easily roll back to previous versions of their code if needed. This reduces the risk associated with deployments and simplifies troubleshooting.

- Zero Downtime Deployments: Many serverless platforms support zero-downtime deployments, ensuring that applications remain available during updates. This is achieved by deploying new versions of code alongside existing versions and gradually shifting traffic to the new version.

Monitoring and Logging for Serverless Applications

Effective monitoring and logging are essential for serverless applications. Serverless platforms offer built-in tools and integrations to simplify these processes.

- Centralized Logging: Serverless platforms provide centralized logging, allowing developers to collect logs from all their functions and services in a single location. This simplifies troubleshooting and analysis. For example, AWS CloudWatch Logs aggregates logs from AWS Lambda functions, providing a centralized view of application activity.

- Real-time Monitoring: Serverless platforms offer real-time monitoring dashboards that display key metrics such as invocation count, duration, and error rates. This allows developers to quickly identify and address performance issues.

- Automated Alerting: Developers can configure alerts based on specific metrics or events, such as high error rates or long execution times. This allows for proactive identification of problems and reduces the impact of application issues.

- Distributed Tracing: Some serverless platforms offer distributed tracing capabilities, allowing developers to track requests as they flow through multiple functions and services. This helps to identify performance bottlenecks and troubleshoot complex issues. For example, AWS X-Ray can be used to trace requests through AWS Lambda functions and other AWS services.

- Integration with Third-party Tools: Serverless platforms often integrate with third-party monitoring and logging tools, such as Datadog, New Relic, and Splunk. This allows developers to leverage existing monitoring infrastructure and customize their monitoring solutions.

Faster Time to Market

Serverless architecture fundamentally reshapes the software development lifecycle, significantly reducing the time required to bring applications and features to market. This acceleration stems from a combination of factors, including streamlined development processes, reduced infrastructure management, and the ability to rapidly iterate on designs. The impact is substantial, allowing businesses to respond more quickly to market demands and gain a competitive edge.

Accelerated Development Cycles

Serverless platforms streamline the development process by abstracting away infrastructure management, enabling developers to focus on writing code. This shift results in faster development cycles, from initial concept to deployment.

- Simplified Deployment: Serverless platforms typically offer automated deployment processes, such as CI/CD pipelines, reducing the time and effort required to deploy code changes. For instance, a developer can deploy a new function with a single command, bypassing the need to configure and manage servers.

- Reduced Code Complexity: Serverless architectures encourage the creation of small, independent functions, which are easier to develop, test, and debug. This modularity reduces the overall complexity of the codebase, accelerating the development process.

- Faster Testing and Debugging: The isolated nature of serverless functions simplifies testing and debugging. Developers can test individual functions in isolation, speeding up the identification and resolution of issues. For example, testing a single API endpoint becomes significantly easier when it’s implemented as a serverless function.

- Increased Developer Productivity: By automating infrastructure management, serverless platforms free up developers to focus on writing business logic, leading to increased productivity. Developers spend less time on tasks like server provisioning and maintenance, allowing them to concentrate on feature development.

Rapid Prototyping Enabled by Serverless

Serverless architectures facilitate rapid prototyping by allowing developers to quickly build and deploy minimum viable products (MVPs). This capability enables faster experimentation and validation of ideas, reducing the risk associated with large-scale development efforts.

- Quick Iteration: Serverless functions are easy to update and deploy, which allows for rapid iteration on prototypes. Developers can quickly experiment with different features and functionalities, gather user feedback, and refine the prototype based on real-world usage.

- Cost-Effective Experimentation: Serverless platforms offer a pay-as-you-go pricing model, making it cost-effective to experiment with new ideas. Developers can deploy and test prototypes without incurring significant infrastructure costs, reducing the financial barrier to innovation.

- Simplified Scalability: Serverless platforms automatically scale resources based on demand, eliminating the need to manually provision and manage infrastructure. This allows developers to focus on the core functionality of the prototype without worrying about scalability issues.

- Example: Chatbot Prototyping: Consider the rapid prototyping of a chatbot. A developer can quickly build a serverless function to handle user input, integrate it with a messaging platform, and deploy it within hours. This allows for immediate testing and feedback gathering, enabling rapid iteration and refinement of the chatbot’s functionality.

Serverless Application Development Workflow

The following steps illustrate the typical workflow for developing a serverless application:

- Define Application Requirements: The initial step involves clearly defining the application’s purpose, features, and target audience. This includes outlining the functionalities, such as the API endpoints or data processing tasks, and the expected user interactions.

- Design the Architecture: This involves designing the application’s architecture, including selecting the appropriate serverless services (e.g., AWS Lambda, Azure Functions, Google Cloud Functions), defining the data storage mechanisms (e.g., databases, object storage), and outlining the communication patterns between different components.

- Develop Serverless Functions: This involves writing the code for individual serverless functions that perform specific tasks. Each function should be designed to be small, focused, and independent, adhering to the principles of modularity.

- Configure Triggers and Events: Define the triggers that will invoke the serverless functions. These triggers can include HTTP requests, database updates, scheduled events, or messages from other services. This step determines how and when the functions are executed.

- Implement API Gateway (if applicable): If the application exposes an API, configure an API gateway to handle routing, authentication, and authorization. This gateway acts as the entry point for external requests, directing them to the appropriate serverless functions.

- Deploy and Test: Deploy the serverless functions and associated resources to the cloud platform. Thoroughly test the application to ensure that it functions as expected, including unit tests for individual functions and integration tests to verify the interactions between different components.

- Monitor and Optimize: Implement monitoring tools to track the application’s performance, resource utilization, and error rates. Continuously optimize the application based on the monitoring data, focusing on performance, cost, and reliability.

Increased Developer Productivity

Serverless architecture significantly enhances developer productivity by shifting the focus from infrastructure management to core application development. This paradigm allows developers to concentrate on writing code, deploying features, and iterating rapidly, ultimately leading to faster development cycles and improved software quality. The underlying infrastructure, including server provisioning, scaling, and maintenance, is abstracted away, enabling developers to dedicate their time and expertise to building innovative solutions.

Focus on Code

Serverless platforms intrinsically allow developers to concentrate their efforts on the creation and refinement of application logic. The operational aspects of the application, such as server configuration, patching, and monitoring, are handled by the cloud provider. This fundamental shift allows developers to:

- Prioritize Feature Development: Developers can focus on building new features and functionalities rather than managing the underlying infrastructure. This leads to a faster pace of innovation and a more agile development process.

- Reduce Debugging Time: With the infrastructure managed by the provider, developers spend less time troubleshooting server-related issues and more time on identifying and fixing bugs within the application code itself.

- Accelerate Deployment Cycles: Serverless platforms often offer streamlined deployment processes, enabling developers to deploy code changes quickly and frequently. This rapid iteration allows for faster feedback loops and continuous improvement.

Elimination of Server Management

A core tenet of serverless architecture is the complete abstraction of server management responsibilities. Developers no longer need to provision, configure, or maintain servers, as the cloud provider handles these tasks automatically. This frees up valuable time and resources, eliminating the need for specialized server administration skills.

- No Server Provisioning: Developers do not have to estimate server capacity, choose instance types, or manually scale resources. The platform automatically scales the resources based on demand.

- Automated Scaling and Availability: Serverless platforms inherently provide automatic scaling, handling traffic spikes and ensuring high availability without manual intervention. This removes the need for developers to write and maintain scaling scripts.

- Reduced Operational Overhead: The operational burden associated with server management, including patching, security updates, and monitoring, is eliminated. The cloud provider takes responsibility for these tasks, reducing the operational overhead for development teams.

“Before serverless, I spent a significant portion of my time on server maintenance and troubleshooting. With serverless, I can now focus almost entirely on writing code and building features. This has dramatically increased our team’s productivity and allowed us to deliver new features much faster.”

A Senior Developer at a SaaS company.

Improved Application Resilience

Serverless architectures inherently promote enhanced application resilience due to their distributed nature and the operational characteristics of the underlying platforms. This design minimizes the impact of individual component failures and provides mechanisms for automated recovery, leading to more robust and available applications. The decoupling of application logic into independent, event-triggered functions contributes significantly to this resilience.

Fault Tolerance Features of Serverless Platforms

Serverless platforms are designed with fault tolerance as a core principle. These platforms employ several strategies to ensure applications remain operational even when failures occur.

- Automatic Scaling and Redundancy: Serverless platforms automatically scale the resources allocated to functions based on demand. This means that if one instance of a function fails, the platform can quickly spin up new instances to handle the workload. Furthermore, functions are typically deployed across multiple availability zones or regions, providing redundancy. If one zone or region experiences an outage, the platform can automatically route traffic to healthy instances in other locations.

- Managed Infrastructure: The underlying infrastructure, including servers, operating systems, and networking, is managed by the serverless provider. This reduces the burden on developers to manage these components, freeing them from tasks such as patching, security updates, and capacity planning. The provider is responsible for ensuring the infrastructure is highly available and resilient.

- Event-Driven Architecture: Serverless applications often leverage event-driven architectures. This means that functions are triggered by events, such as HTTP requests, database updates, or messages from a message queue. The platform manages the event triggers and ensures that functions are invoked reliably. If a function fails to process an event, the platform can retry the invocation or implement other error handling mechanisms, depending on the configuration.

- Idempotency Considerations: Functions can be designed to be idempotent, meaning they can be executed multiple times without changing the outcome beyond the first execution. This is particularly important in serverless environments where retries are common. Designing functions to be idempotent ensures that even if a function is invoked multiple times due to a failure, the application state remains consistent. For example, a function that processes an order might check if the order has already been processed before executing the processing logic again.

Serverless Automatic Failure Handling Examples

Serverless platforms incorporate several mechanisms to automatically handle failures, ensuring application continuity and minimizing downtime. Here are specific examples:

- Automatic Retry Mechanisms: When a function invocation fails, the serverless platform often automatically retries the invocation. The retry behavior can be configured, including the number of retries, the interval between retries, and the type of failure that triggers a retry. This mechanism is particularly useful for transient errors, such as temporary network issues or database connection problems.

- Circuit Breaker Pattern Implementation: Serverless platforms can be integrated with circuit breaker patterns. This pattern monitors the success and failure rates of function invocations. If the failure rate exceeds a threshold, the circuit breaker opens, preventing further invocations of the failing function. This prevents cascading failures and gives the underlying systems time to recover. After a period, the circuit breaker can transition to a “half-open” state, allowing a limited number of invocations to test if the function has recovered.

- Dead-Letter Queues: For event-driven applications, serverless platforms often support dead-letter queues (DLQs). If a function fails to process an event after multiple retries, the event is sent to a DLQ. This allows developers to inspect and analyze the failed events, troubleshoot the underlying issues, and potentially reprocess the events once the issues are resolved.

- Monitoring and Alerting: Serverless platforms provide monitoring and alerting capabilities. Developers can configure alerts to be triggered based on various metrics, such as function invocation errors, latency, and resource utilization. These alerts can notify developers of potential issues, allowing them to proactively address problems before they impact users. Monitoring tools can often be integrated with third-party services for advanced analysis.

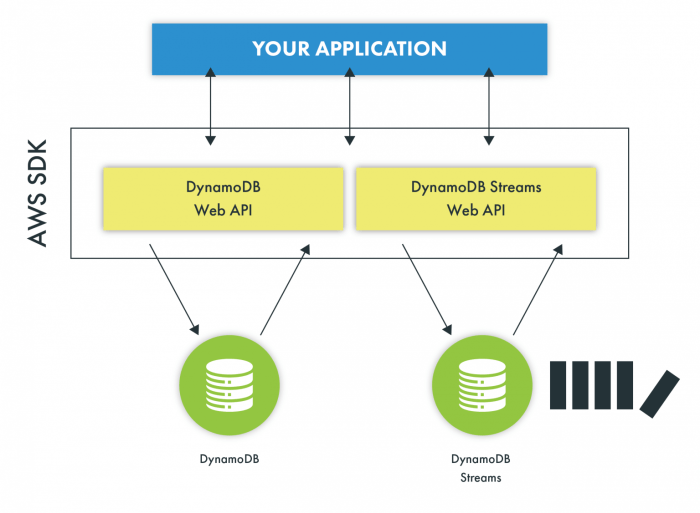

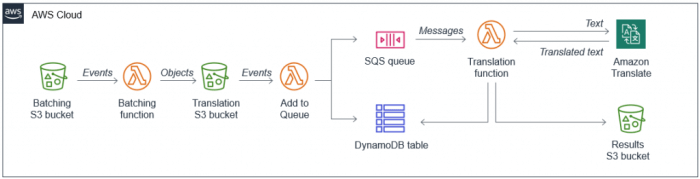

Event-Driven Architectures

Serverless architectures are inherently well-suited for event-driven application development. This paradigm shift leverages the ability of serverless functions to react to events in real-time, enabling more responsive, scalable, and resilient applications. By decoupling components and allowing them to communicate asynchronously, event-driven architectures promote a more flexible and maintainable system design.

Enabling Event-Driven Application Development

Serverless platforms provide the necessary infrastructure and tools to facilitate event-driven application development. These platforms typically offer managed services for event ingestion, processing, and routing, eliminating the need for developers to manage the underlying infrastructure.

- Event Sources: Serverless platforms support a wide range of event sources, including:

- Cloud storage services (e.g., Amazon S3, Google Cloud Storage) that trigger events when objects are created, modified, or deleted.

- Database services (e.g., Amazon DynamoDB, Google Cloud Datastore) that trigger events on data changes.

- Message queues (e.g., Amazon SQS, Google Cloud Pub/Sub) that enable asynchronous communication between components.

- API gateways (e.g., Amazon API Gateway, Google Cloud Endpoints) that trigger events on HTTP requests.

- Custom events emitted by applications.

- Event Processing: Serverless functions are the primary mechanism for processing events. When an event occurs, the platform automatically invokes the corresponding function. The function then performs the necessary logic, such as data transformation, data storage, or notification sending.

- Event Routing: Serverless platforms often provide event routing capabilities that allow developers to specify which functions should be triggered by which events. This routing mechanism can be based on event type, source, or content.

Advantages of Event-Driven Architectures

Event-driven architectures offer several advantages, particularly in the context of serverless deployments. These advantages stem from the inherent asynchronicity, decoupling, and scalability that event-driven systems provide.

- Increased Responsiveness: Applications can react to events in real-time, providing a more responsive user experience. For example, a user can receive a notification immediately after an order is placed.

- Improved Scalability: Event-driven architectures are inherently scalable because each event trigger can independently invoke a function. The platform automatically scales the function instances based on the event volume.

- Enhanced Resilience: Components are decoupled, meaning that the failure of one component does not necessarily impact other components. Events can be queued and retried if a function fails, improving system resilience.

- Greater Flexibility: Event-driven architectures are flexible, allowing developers to easily add, modify, or remove components without affecting the entire system.

- Simplified Development: Developers can focus on writing event-handling logic rather than managing complex infrastructure.

Common Event Triggers and Corresponding Actions in a Serverless Context

Serverless architectures utilize various event triggers to initiate actions. These triggers and actions are integral to building event-driven applications.

- File Upload to Cloud Storage:

- Trigger: A new file is uploaded to an object storage service, such as Amazon S3 or Google Cloud Storage.

- Action: A serverless function is triggered to process the uploaded file. This may involve resizing images, extracting metadata, or indexing the file for search. For instance, when a user uploads a photo to a social media platform, a function could automatically generate thumbnails in various sizes.

- Database Record Creation/Modification:

- Trigger: A new record is created or an existing record is modified in a database service, such as Amazon DynamoDB or Google Cloud Firestore.

- Action: A serverless function is triggered to update related data, send notifications, or perform other data-related tasks. An example is updating a user’s profile after a database record change.

- Message Received in a Message Queue:

- Trigger: A message is added to a message queue service, such as Amazon SQS or Google Cloud Pub/Sub.

- Action: A serverless function is triggered to process the message. This could involve sending emails, updating order statuses, or performing other background tasks. For example, a function might process an order placed via a message queue.

- HTTP Request to an API Gateway:

- Trigger: An HTTP request is received by an API gateway service, such as Amazon API Gateway or Google Cloud Endpoints.

- Action: A serverless function is triggered to handle the request. This could involve processing the request, retrieving data from a database, or invoking other services. For instance, a function might handle user authentication requests.

- Scheduled Events:

- Trigger: A scheduled event is triggered by a service like Amazon CloudWatch Events or Google Cloud Scheduler.

- Action: A serverless function is triggered at a specific time or interval. This can be used for tasks like generating reports, cleaning up data, or sending out periodic notifications. For example, a function could be scheduled to send daily summary emails.

Integration with Other Services

Serverless architectures excel at seamless integration with a wide array of cloud services, offering developers unparalleled flexibility and efficiency in building and deploying applications. This capability stems from the inherent design of serverless platforms, which are built to interact easily with other cloud-based components. The modular nature of serverless functions, coupled with event-driven triggers and pre-built connectors, simplifies the process of connecting various services, allowing developers to focus on application logic rather than infrastructure management.

Serverless Function Interaction with Cloud Services

Serverless functions interact with various cloud services, leveraging APIs and SDKs provided by cloud providers. These interactions enable developers to build complex applications by combining the functionality of different services.

- Databases: Serverless functions can connect to databases like Amazon DynamoDB, Amazon RDS, Google Cloud SQL, or Azure Cosmos DB. This interaction typically involves the following steps:

- Connection Establishment: The function establishes a connection to the database using database-specific SDKs or drivers. This may involve providing credentials, connection strings, and other configuration parameters.

- Data Retrieval: Functions can query the database to retrieve data. The function constructs SQL queries (for relational databases) or uses NoSQL database-specific query languages. The database returns the results.

- Data Modification: Functions can insert, update, or delete data in the database. These operations are performed using database-specific commands and APIs.

- Example: An e-commerce application might use a serverless function triggered by a new order event. The function then inserts the order details into a database (e.g., Amazon DynamoDB) for order tracking and processing.

- Storage Services: Serverless functions can interact with storage services like Amazon S3, Google Cloud Storage, or Azure Blob Storage.

- Object Upload/Download: Functions can upload files to storage buckets or download files from them. This is often used for handling user-uploaded content or serving static assets.

- Object Metadata Management: Functions can retrieve and modify object metadata (e.g., file size, creation date).

- Event-Driven Processing: Functions can be triggered by events related to storage objects, such as a new file upload.

- Example: A photo-sharing application could use a serverless function to resize images uploaded to an S3 bucket. The function is triggered by the upload event, resizes the image, and stores the resized version in another bucket.

- Messaging Services: Serverless functions integrate with messaging services like Amazon SQS, Google Cloud Pub/Sub, or Azure Service Bus.

- Message Consumption: Functions can consume messages from queues or topics.

- Message Production: Functions can publish messages to queues or topics.

- Asynchronous Processing: Messaging services enable asynchronous processing, allowing functions to handle tasks in the background without blocking the main application flow.

- Example: A serverless function can be triggered by a message in an SQS queue to process a user registration request. The function might send a confirmation email and update the user database.

- Other Services: Serverless functions can integrate with a vast array of other cloud services, including:

- APIs and Gateways: Services like Amazon API Gateway, Google Cloud API Gateway, or Azure API Management enable functions to expose APIs.

- Machine Learning Services: Services like Amazon SageMaker, Google Cloud AI Platform, or Azure Machine Learning can be used to integrate machine learning models into serverless applications.

- Identity and Access Management (IAM): Services like AWS IAM, Google Cloud IAM, or Azure Active Directory are used for authentication and authorization.

Serverless Integration Process: Flow Chart

The integration process between a serverless function and another cloud service can be visualized using a flow chart. The following describes the key steps.

* Start: The process begins with a specific trigger, such as an API request, a scheduled event, or a change in a cloud service.

Trigger Activation

The trigger activates the serverless function. This could be an HTTP request, a scheduled event, or an event notification from another cloud service.

Function Execution

The serverless function executes the pre-defined code.

Service Interaction

Within the function’s code, an API call is made to interact with another cloud service. This call utilizes the appropriate SDK or API client for the target service. For instance, an Amazon S3 client for interacting with S3.

Service Operation

The cloud service performs the requested operation based on the API call from the serverless function. This operation could involve reading from, writing to, or modifying data within the cloud service.

Response Handling

The serverless function receives a response from the cloud service. This response includes data from the service or indicates the success or failure of the operation.

Data Processing (Optional)

The function processes the response data as needed. This could involve data transformation, validation, or aggregation.

Output/Action

The function performs a subsequent action or returns the processed data. The output might be stored in another cloud service, sent to a user, or used to trigger another function.

End

The process ends.

The process can be represented as a sequential flow: `Start -> Trigger Activation -> Function Execution -> Service Interaction -> Service Operation -> Response Handling -> Data Processing (Optional) -> Output/Action -> End`. This flow can be repeated multiple times to achieve complex integrations. For example, an application that uploads images to S3, generates thumbnails using a serverless function, and stores the thumbnail information in a database, would involve the execution of multiple serverless functions and interactions with S3 and a database service.

This modular approach makes it easier to debug, maintain, and update the application’s functionality.

Focus on Business Logic

Serverless architecture fundamentally shifts the development paradigm by allowing developers to concentrate on the core value of their applications: the business logic. This shift is achieved through the abstraction of infrastructure management, enabling developers to focus their efforts on writing code that directly addresses user needs and business requirements. The focus moves away from server provisioning, scaling, and maintenance, and towards delivering features and functionality.

Abstraction of Infrastructure Concerns

The primary advantage of serverless architecture lies in its abstraction of infrastructure management. Developers no longer need to be concerned with the underlying servers, operating systems, or scaling mechanisms. This abstraction is achieved through the use of Function-as-a-Service (FaaS) platforms, which handle the execution of code in response to events, and other managed services that provide the necessary resources. This approach allows developers to focus solely on writing the code that implements the application’s functionality.The FaaS model allows developers to deploy individual functions, often written in a variety of programming languages, without needing to provision or manage any servers.

The platform automatically scales the resources required to execute these functions based on demand. This is a significant departure from traditional environments where developers had to estimate and provision server capacity upfront, leading to either under-utilization of resources or performance bottlenecks during peak loads.Consider a scenario where a developer is building an e-commerce platform. In a traditional environment, the developer would need to set up and configure web servers, database servers, caching layers, and load balancers.

In a serverless environment, the developer can focus on writing the code that handles user authentication, product catalog management, order processing, and payment integration. The FaaS platform takes care of the underlying infrastructure, such as scaling the compute resources needed to handle user requests. This means the developer can deploy code quickly, iterate on features rapidly, and respond to changing business needs with greater agility.

Comparison of Developer Focus: Traditional vs. Serverless

The following table provides a direct comparison of the areas of focus for developers in traditional and serverless environments, highlighting the significant shift in priorities:

| Area | Traditional | Serverless | Difference |

|---|---|---|---|

| Server Provisioning and Management | Significant time and effort spent on server setup, configuration, patching, and monitoring. | Minimal involvement; infrastructure is managed by the cloud provider. | Reduced operational overhead and increased developer productivity. |

| Scaling and Load Balancing | Requires manual configuration of scaling rules, load balancers, and capacity planning. | Automatic scaling based on demand, handled by the FaaS platform. | Simplified scaling and improved application responsiveness. |

| Security Management | Developers are responsible for securing the servers, operating systems, and applications. | Security is often managed by the cloud provider, with developers focusing on application-level security. | Reduced security burden and improved security posture. |

| Code Deployment and CI/CD | Requires complex deployment pipelines and manual intervention for updates. | Simplified deployment pipelines with automated deployments and rollbacks. | Faster time to market and improved release velocity. |

| Monitoring and Logging | Requires setting up and configuring monitoring tools and logging infrastructure. | Monitoring and logging are often provided by the cloud provider, with pre-built dashboards and alerts. | Simplified monitoring and improved visibility into application performance. |

| Business Logic Implementation | Developers share their time with infrastructure management, which could distract them from the main business objectives. | Developers can focus primarily on writing code that implements business functionality and delivers value to the users. | Increased focus on delivering features and improving user experience. |

Enhanced Security

Serverless architectures offer significant security advantages by shifting the responsibility for infrastructure management to the cloud provider. This delegation of responsibility, combined with inherent architectural features, can lead to more secure and resilient applications. By abstracting away the underlying server infrastructure, developers can focus on securing their application code and data, while the platform handles critical security tasks like patching, updates, and infrastructure security.

Security Advantages of Serverless Architectures

Serverless computing inherently provides several security benefits. The reduced attack surface is a primary advantage, as developers do not manage the underlying operating systems or server configurations. This minimizes the potential for misconfigurations and vulnerabilities related to server management. Furthermore, serverless platforms often provide built-in security features, such as identity and access management (IAM), encryption, and audit logging, which simplify security implementation.

The event-driven nature of many serverless applications also facilitates security monitoring and threat detection.

Security Best Practices in Serverless Development

While serverless platforms handle many security aspects, developers still play a crucial role in ensuring application security. Implementing best practices is essential for mitigating risks and protecting sensitive data.

- Secure Code Development: Adhering to secure coding principles is paramount. This includes input validation to prevent injection attacks (e.g., SQL injection, cross-site scripting), proper handling of sensitive data (e.g., using encryption at rest and in transit), and regular code reviews to identify and address vulnerabilities. For example, developers should utilize parameterized queries to prevent SQL injection vulnerabilities.

- IAM and Access Control: Implementing a robust IAM strategy is critical. Granting least privilege access, where functions only have the necessary permissions to perform their tasks, is a fundamental security principle. Regularly reviewing and updating IAM policies based on the principle of least privilege minimizes the impact of potential security breaches. For example, a function that processes image uploads should only have permissions to read from a specific bucket and write to another, and nothing else.

- Secrets Management: Securely managing secrets, such as API keys, database credentials, and other sensitive information, is essential. Serverless platforms often provide dedicated services or features for secrets management. Using these services, instead of hardcoding secrets directly into the code, helps to prevent accidental exposure. Consider using a service like AWS Secrets Manager or Azure Key Vault to securely store and manage sensitive credentials.

- Input Validation and Sanitization: All user inputs must be validated and sanitized to prevent injection attacks and other vulnerabilities. This includes validating the data type, format, and length of the input. Implement robust input validation techniques, such as regular expressions and whitelisting, to filter out malicious data.

- Monitoring and Logging: Comprehensive monitoring and logging are essential for detecting and responding to security incidents. Serverless platforms typically provide logging and monitoring capabilities, such as AWS CloudWatch and Azure Monitor. These tools allow developers to track function invocations, errors, and other relevant metrics. By analyzing these logs, developers can identify potential security threats and investigate security incidents. Implementing alerting mechanisms to notify developers of suspicious activities, such as unusually high error rates or unauthorized access attempts, is also crucial.

Handling Security Patching and Updates in Serverless Platforms

One of the most significant advantages of serverless architectures is that the cloud provider handles security patching and updates for the underlying infrastructure. This includes patching the operating system, runtime environments, and other software components. This automatic patching reduces the operational burden on developers and ensures that the infrastructure is up-to-date with the latest security fixes.

“The cloud provider is responsible for patching and updating the underlying infrastructure, reducing the operational burden on developers.”

The specific mechanisms for patching and updates vary depending on the cloud provider. However, the general principle is that the provider automatically applies security updates to the underlying infrastructure without requiring any action from the developer. This ensures that the platform is protected against known vulnerabilities and security threats. Furthermore, many serverless platforms provide features that enable developers to test their applications with updated runtimes and dependencies before deploying them to production.

This allows developers to identify and resolve any compatibility issues before the updates are applied to the live environment.

Conclusive Thoughts

In conclusion, serverless architecture presents a compelling proposition for modern software development. By eliminating infrastructure management complexities, it unlocks significant advantages in terms of cost, scalability, and developer productivity. The ability to build resilient, event-driven applications that readily integrate with other services positions serverless as a key enabler of innovation. Embracing serverless allows developers to concentrate on the core value of their work: crafting exceptional software solutions, thereby fostering a more agile and efficient development lifecycle.

FAQ Summary

What is the primary difference between serverless and traditional cloud computing?

The key distinction lies in infrastructure management. Traditional cloud computing requires developers to provision, manage, and scale servers, while serverless abstracts away these responsibilities, allowing developers to focus solely on code.

How does serverless handle scaling?

Serverless platforms automatically scale resources based on demand. When a function is triggered, the platform provisions the necessary resources to handle the workload, and then scales down when the workload decreases, offering automatic elasticity.

Is serverless suitable for all types of applications?

While serverless is well-suited for many applications, it may not be the optimal choice for all. Complex applications with high compute requirements or strict latency demands might benefit from traditional infrastructure or container-based solutions. Serverless excels for event-driven, stateless applications.

What are the security considerations with serverless?

Serverless platforms often provide built-in security features, such as automatic patching and updates. Developers must still implement security best practices, including secure coding, input validation, and access control, but the platform handles much of the underlying infrastructure security.

What are the potential vendor lock-in concerns?

Serverless architectures can introduce vendor lock-in because functions are often tied to specific cloud provider services. Careful consideration of service compatibility and the use of open standards can mitigate this risk, allowing for easier migration if necessary.